Full Table of Contents at end of document.

Chapter Contents

(back to top)

1.1 Purpose

1.2 Audience

1.3 Topics Covered

1.4 Topics Not Covered

1.5 Not Optimized for Smooth Reading

1.6 How D3D11.3 Fits into this Unified Spec

This document describes hardware requirements for Direct3D 11.3 (D3D11.3).

It is assumed that the reader is familiar with real-time graphics, modern Graphics Processing Unit (GPU) design issues and the general architecture of Microsoft Windows Operating Systems, as well their planned release roadmap.

The target audience for this spec are the implementers, testers and documenters of hardware or software components that would be considered part of a D3D11.3-compliant system. In addition, software developers who are vested in the details about medium-term GPU hardware direction will find interesting information.

Topics covered in this spec center on definition of the hardware architecture being targeted by the D3D11.1 Graphics Pipeline, in a form that attempts to be agnostic to any single vendor's hardware implementation. Included will be some references to how the Graphics Pipeline is controlled through a Device Driver Interface (DDI), and occasionally depictions of API usage as needed to illustrate points.

Occasionally, boxed text such as this appears in the spec to indicate justification for decisions, explain history about a feature, provide clarifications or general remarks about a topic being described, or to flag an unresolved issues. These shaded boxes DO NOT provide a complete listing of all such trivia, however. Note that on each revision of this spec, all changes made for that revision are summarized in a separate document typically distributed with the spec.

The exact relationship and interactions between topics covered in the Graphics Pipeline with other Operating System components is not covered.

GPU resource management, GPU process scheduling, and low-level Operating System driver/kernel architecture are not covered.

High-level GPU programming concepts (such as high level shading languages) are not covered.

Little to no theory or derivation of graphics concepts, techniques or history is provided. Equally rare for this spec is any attempt to characterize what sorts of things applications software developers might do using the functionality provided by D3D11.3. There are exceptions, but do not expect to gain much more than an understanding of the "facts" about D3D11.3 from this spec.

Beware, there is little flow to the content in this spec, although there are plenty of links from place to place.

This document is the product of starting with the full D3D11.2 functional spec and adding in relevant WindowsNext D3D11.3 features.

Each Chapter in this spec begins with a summary of the changes from D3D10 to D3D10.1 to D3D11 to D3D11.1 to D3D11.2 to D3D11.3 for that Chapter. A table of links to all of the Chapter delta summaries can be found here(25.2).

To find D3D11.3 changes specifically (which includes changes for optional new features and clarifications/corrections that affect all feature levels, look for "[D3D11.3]" in the chapter changelists (or simply search the doc for it).

Chapter Contents

(back to top)

2.1 Input Assembler (IA) Overview

2.2 Vertex Shader (VS) Overview

2.3 Hull Shader (HS) Overview

2.4 Tessellator (TS) Overview

2.5 Domain Shader (DS) Overview

2.6 Geometry Shader (GS) Overview

2.7 Stream Output (SO) Overview

2.8 Rasterizer Overview

2.9 Pixel Shader (PS) Overview

2.10 Output Merger (OM) Overview

2.11 Compute Shader (CS) Overview

Summary of Changes in this Chapter from D3D10 to D3D11.3

Back to all D3D10 to D3D11.3 changes.(25.2)

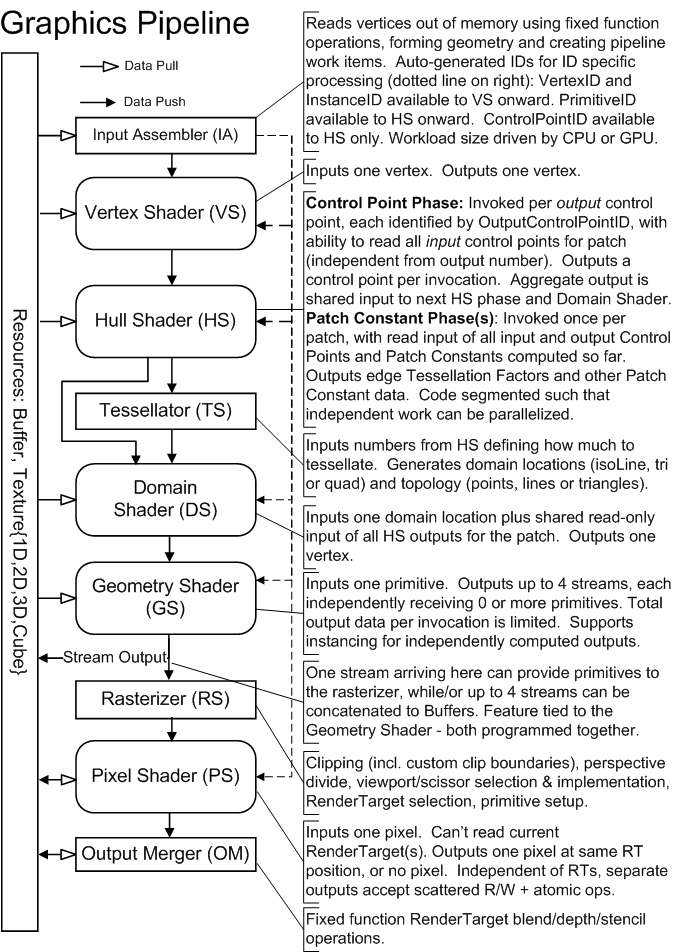

D3D11.1 hardware, like previous generations, can be designed with shared programmable cores. A farm of Shader cores exist on the GPU, able to be scheduled across the functional blocks comprising the D3D11.1 Pipeline, depicted below.

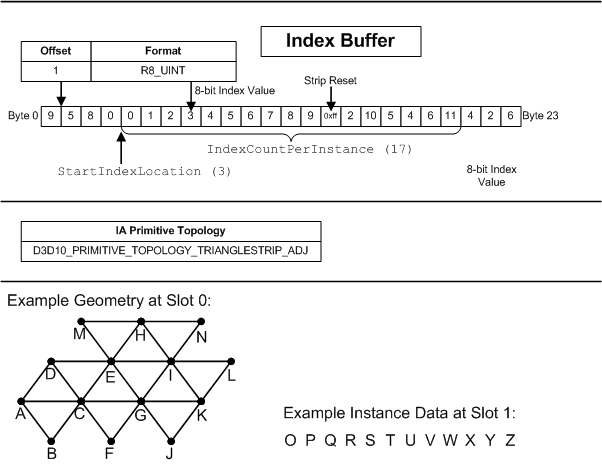

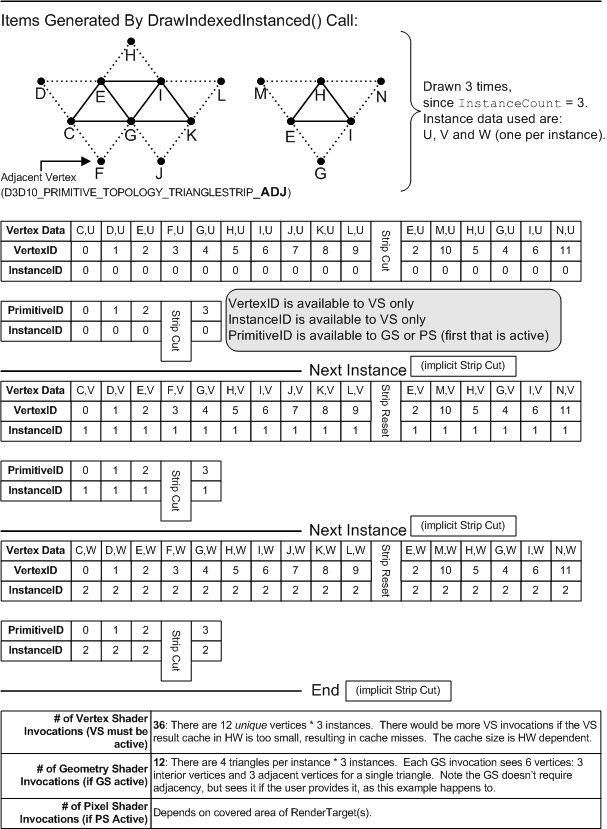

The Input Assembler (IA) introduces triangles, lines, points or Control Points (for Patches) into the graphics Pipeline, by pulling source geometry data out of 1D Buffers(5.3.4).

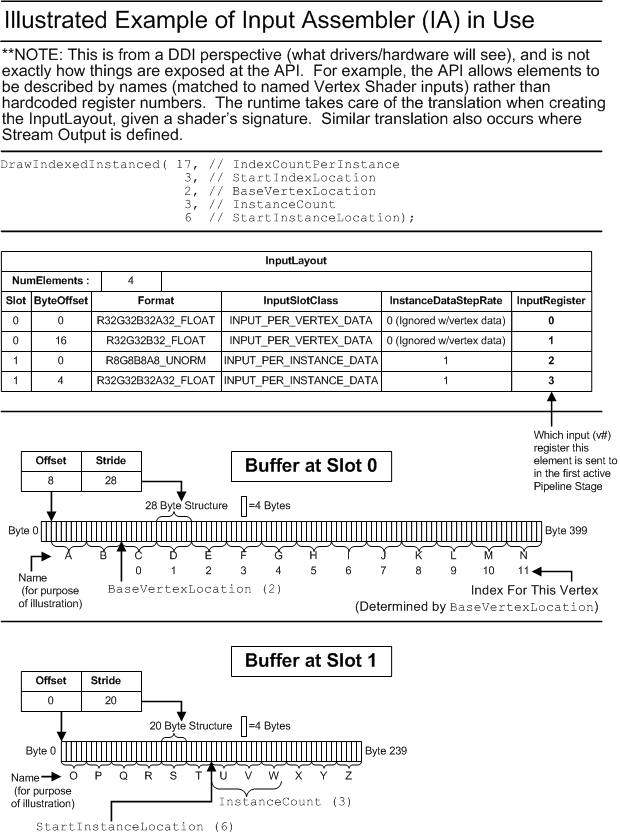

Vertex data can come from multiple Buffers, accessed in an "Array-of-Structures" fashion from each Buffer. The Buffers are each bound to an individual input slot and given a structure stride. The layout of data across all the Buffers is specified by an Input Declaration, in which each entry defines an "Element" with: an input slot, a structure offset, a data type, and a target register (for the first active Shader in the Pipeline).

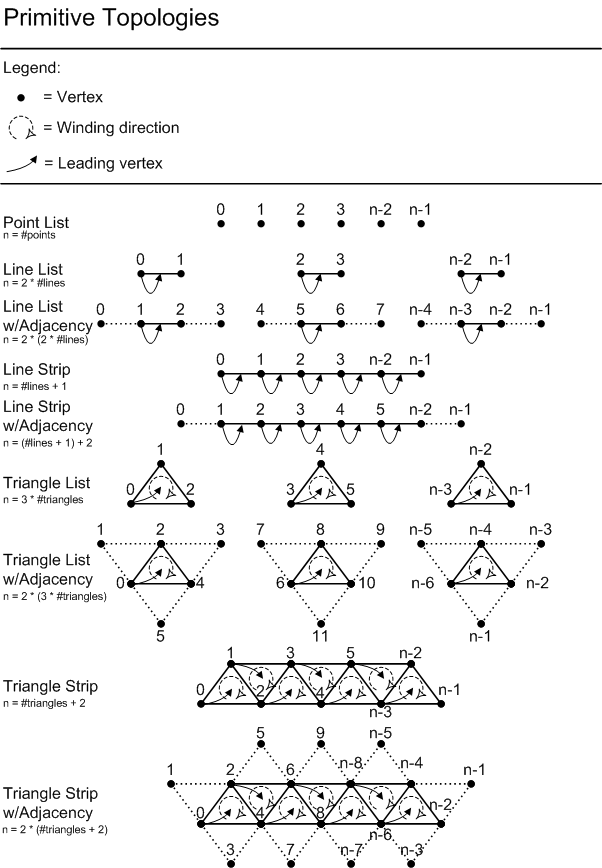

A given sequence of vertices is constructed out of data fetched from Buffers, in a traversal directed by a combination of fixed-function state and various Draw*() API/DDI calls. Various primitive topologies are available to make the sequence of vertex data represent a sequence of primitives. Example topologies are: point-list, line-list, triangle-list, triangle-strip, 8 control-point patch-list.

Vertex data can be produced in one of two ways. The first is "Non-Indexed" rendering, which is the sequential traversal of Buffer(s) containing vertex data, originating at a start offset at each Buffer binding. The second method for producing vertex data is "Indexed" rendering, which is sequential traversal of a single Buffer containing scalar integer indices, originating at a start offset into the Buffer. Each index indicates where to fetch data out of Buffer(s) containing vertex data. The index values are independent of the characteristics of the Buffers they are referring to; Buffers are described by a declaration as mentioned earlier. So the task accomplished by "Non-Indexed" and "Indexed" rendering, each in their own way, is producing addresses from which to fetch vertex data in memory, and subsequently assemble the results into vertices and primitives.

Instanced geometry rendering is enabled by allowing the sequential traversal, in either Non-indexed or Indexed rendering, to loop over a range within each Vertex Buffer (Non-Indexed case) or Index Buffer (Indexed case). Buffer-bindings can be identified "Instance Data" or "Vertex Data", indicating how to use the bound Buffer while performing instanced rendering. The address generated by "Non-Indexed" or "Indexed" rendering is used to fetch "Vertex Data", accounting also for looping when doing Instanced rendering. "Instance Data", on the other hand, is always sequentially traversed starting from a per-Buffer offset, at a frequency equal to one step per instance (e.g. one step forward after the number of vertices in an instance are traversed). The step rate for "Instance Data" can also be chosen to be a subharmonic of the instance frequency (i.e. one step forward every other instance, every third instance etc.).

Another use of the Input Assembler is that it can read Buffers that were written to from the Stream Output(2.7) stage. Such a scenario necessitates a particular type of Draw, DrawAuto(8.9). DrawAuto enables the Input Assembler to know how much data was dynamically written to a Stream Output Buffer without CPU involvement.

In addition to producing vertex data from Buffers, the IA can auto-generate scalar counter values such as: VertexID(8.16), PrimitiveID(8.17) and InstanceID(8.18), for input to shader stages in the graphics pipeline.

In "Indexed" rendering of strip topologies, such as triangle strips, a mechanism is provided for drawing multiple strips with a single Draw*() call (i.e. 'cut'ting strips).

Specific operational details of the IA are provided here(8).

The Vertex Shader stage processes vertices, performing operations such as transformations, skinning, and lighting. Vertex Shaders always operate on a single input vertex and produce a single output vertex. This stage must always be active.

Specific operational details of Vertex Shaders are provided here(9).

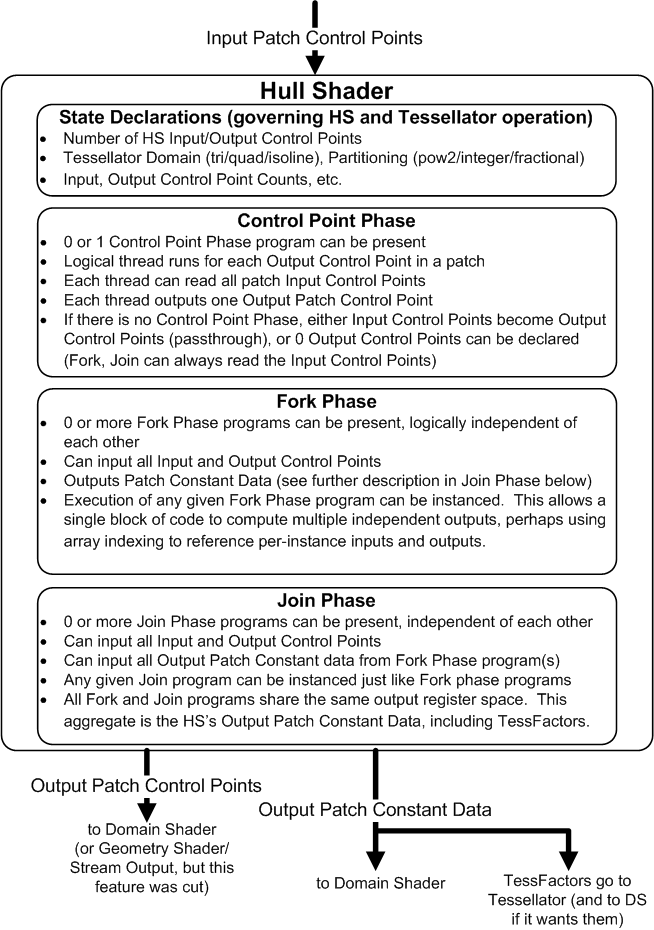

The Hull Shader operates once per Patch (can only be used with Patces from the IA). It can transform input Control Points that make up a Patch into Output Control Points, and it can perform other setup for the fixed-function Tessellator stage (outputting TessFactors, which are numbers that indicate how much to tessellate).

Specific operational details of the Hull Shader are provided here(10).

The Tessellator is a fixed function unit whose operation is defined by declarations in the Hull Shader. It operates once per Patch output by the Hull Shader. The Hull shader outputs TessFactors which are numbers that tell the Tessellator how much to tessellate (generate geometry and connectivity) over the domain of the Patch.

Specific operational details of the Tessellator provided here(11).

The Domain Shader is invoked once per vertex generated by the Tessellator. Each invocation is identified by its coordinate on a generic domain, and the role of the Domain Shader is to turn that coordinate into something tangible (such as a point in 3D space) for use downstream. Each Domain Shader invocation for a Patch also sees shared input of all the Hull Shader output (such as output Control Points).

Specific operational details of the Domain Shader are provided here(12).

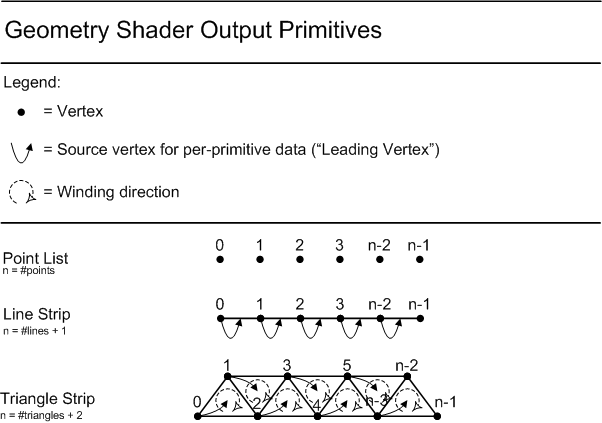

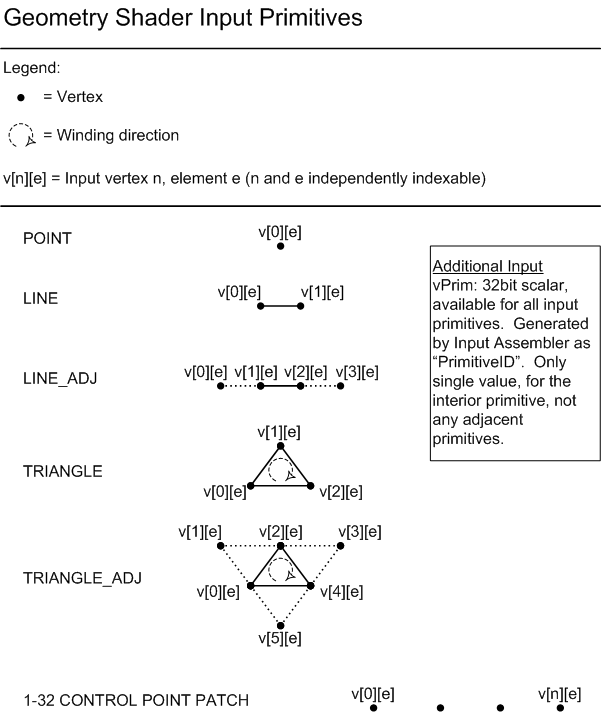

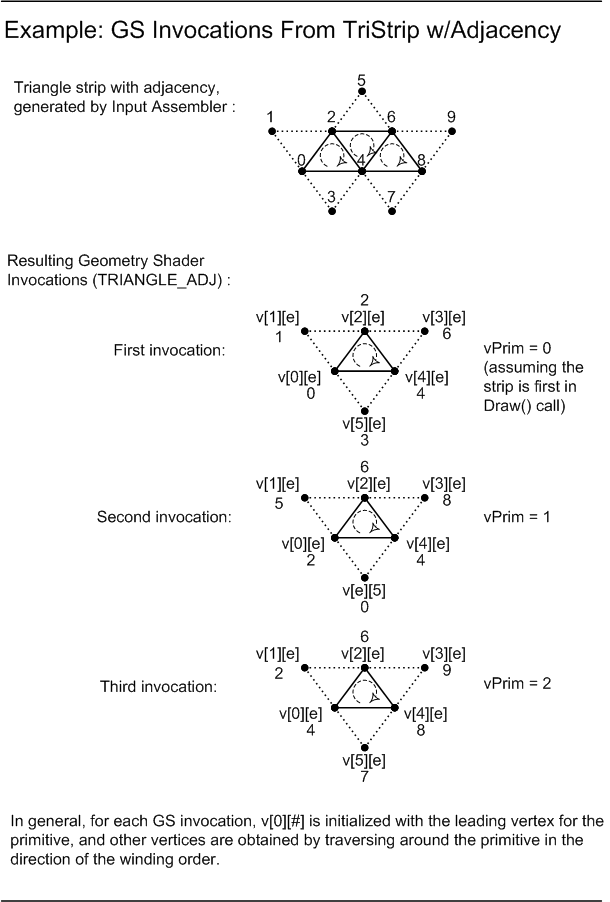

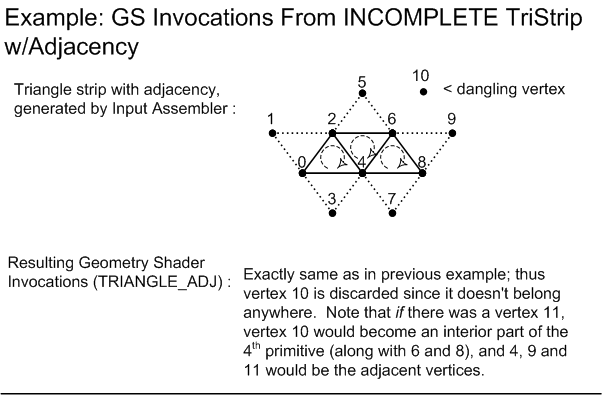

The Geometry Shader runs application-specified Shader code with vertices as input and the ability to generate vertices on output. The Geometry Shader's inputs are the vertices for a full primitive (two vertices for lines, three vertices for triangles, a single vertex for point, or all Control Points for a Patch if it reaches the GS with Tessellation disabled). Some types of primitives can also include the vertices of edge-adjacent primitive (an additional two vertices for a line, an additional three for a triangle).

Another input is a PrimitiveID auto-generated by the IA. This allows per-face data to be fetched or computed if desired.

The Geometry Shader stage is capable of outputting multiple vertices forming a single selected topology (GS output topologies available are: tristrip, linestrip, pointlist). The number of primitives emitted can vary freely within any invocation of the Geometry Shader, though the maximum number of vertices that could be emitted must be declared statically. Strip lengths emitted from a GS invocation can be arbitrary (there is a 'cut'(22.8.1) command).

Output may be fed to rasterizer and/or out to vertex Buffers in memory. Output fed to memory is expanded to individual point/line/triangle lists (the same way they would get passed to the rasterizer).

Algorithms that can be implemented in the Geometry Shader include:

Specific operational details of the Geometry Shader are provided here(13).

Vertices may be streamed out to memory just before arriving at the Rasterizer. This is like a "tap" in the Pipeline, which can be turned on even as data continues to flow down to the Rasterizer. Data sent out via Stream Output is concatenated to Buffer(s). These Buffers may on subsequent passes be recirculated as Pipeline inputs.

One constraint about Stream Output is that it is tied to the Geometry Shader, in that both must be created together (though either can be "NULL"/"off"). The particular memory Buffer(s) being Streamed out are not tied to this GS/SO pair though. Only the description of which parts of vertex data to feed to Stream Output are tied to the GS.

One use for Stream Output is for saving ordered Pipeline data that will be reused. For example a batch of vertices might be "skinned" by passing the vertices into the Pipeline as if they are independent points (just to visit all of them once), applying "skinning" operations on each vertex, and streaming out the results to memory. The saved out "skinned" vertices are now available for use in subsequent passes as input.

Since the amount of output written through Stream Output can be unpredictably dynamic, a special type of Draw command, DrawAuto(8.9), is necessary. DrawAuto enables the Input Assembler to know how much data was dynamically written to a Stream Output Buffer without CPU involvement. In addition, Queries are necessary to mitigate Stream Output overflow(20.4.10), as well as retrieve how much data was written(20.4.9) to the Stream Output Buffers.

Specific operational details of the Stream Output are provided here(14).

The rasterizer is responsible for clipping, primitive setup, and determining how to invoke Pixel Shaders. D3D11.3 does not view this as a "stage" in the Pipeline, but rather an interface between Pipeline stages which happens to perform a significant set of fixed function operations, many of which can be adjusted by software developers.

The rasterizer always assumes input positions are provided in clip-space, performs clipping, perspective divide and applies viewport scale/offset.

Specific operational details of the Rasterizer are provided here(15).

Input data available to the Pixel Shader includes vertex attributes that can be chosen, on a per-Element basis, to be interpolated with or without perspective correction, or be treated as constant per-primitive.

The Pixel Shader can also be chosen to be invoked either once per pixel or once per covered sample within the pixel.

Outputs are one or more 4-vectors of output data for the current pixel or sample, or no color (if pixel is discarded).

The Pixel Shader has some other inputs and outputs available as well, similar to the kind of inputs and outputs the Compute Shader can use, allowing, for instance, the ability to write to scattered locations.

Specific operational details of Pixel Shaders are provided here(16).

The final step in the logical Pipeline is visibility determination, through stencil or depth, and writing or blending of output(s) to RenderTarget(s), which may be one of many Resource Types(5).

These operations, as well as the binding of output resources (RenderTargets), are defined at the Output Merger.Specific operational details of the Output Merger are provided here(17).

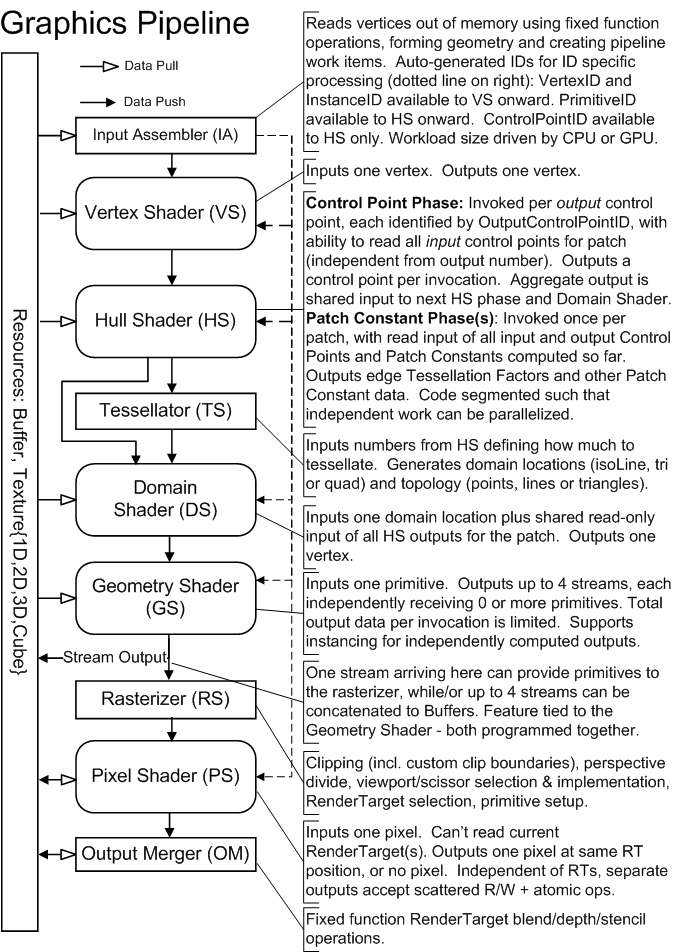

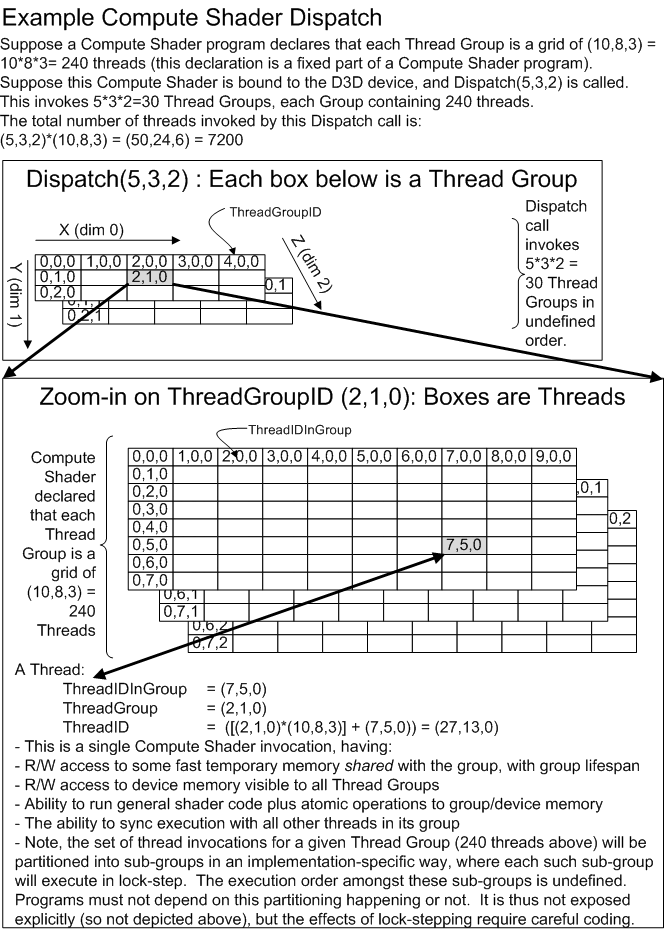

The Compute Shader allows the GPU to be viewed as a generic grid of data-parallel processors, without any graphics baggage from the graphics pipeline. The Compute Shader has explicit access to fast shared memory to facilitate communication between groups of shader invocations, and the ability to perform scattered reads and writes to memory. The availablility of atomic operations enables unique access to shared memory addresses. The Compute Shader is not part of the Graphics Pipeline (all the previously discussed shader stages). The Compute Shader exists on its own, albeit on the same device as all the other Shader Stages. To invoke this shader, Dispatch*() APIs are called instead of Draw*().

Specific operational details of Compute Shaders are provided here(18).

Chapter Contents

(back to top)

3.1 Floating Point Rules

3.2 Data Conversion

3.3 Coordinate Systems

3.4 Rasterization Rules

3.5 Multisampling

Summary of Changes in this Chapter from D3D10 to D3D11.3

Back to all D3D10 to D3D11.3 changes.(25.2)

Section Contents

(back to chapter)

3.1.1 Overview

3.1.2 Term: Unit-Last-Place (ULP)

3.1.3 32-bit Floating Point

D3D11 supports several different floating point representations for storage. However, all floating point computations in D3D11, whether in Shader programs written by application developers or in fixed function operations such as texture filtering or RenderTarget blending, are required to operate under a defined subset of the IEEE 754 32-bit single precision floating point behavior.

One ULP is the smallest representable delta from one value in a numeric representation to an adjacent value. The absolute magnitude of this delta varies with the magnitude of the number in the case of a floating point number. If, hypothetically, the result of an arithmetic operation were allowed to have a tolerance 1 ULP from the infinitely precise result, this would allow an implementation that always truncated its result (without rounding), resulting in an error of at most one unit in the last (least significant) place in the number representation. On the other hand, it would be much more desirable to require 0.5 ULP tolerance on arithmetic results, since that requires the result be the closest possible representation to the infinitely precise result, using round to nearest-even.

Here is a summary of expected 32-bit floating point behaviors for D3D11. Some of these points choose a single option in cases where IEEE-754 offers choices. This is followed by a listing of deviations or additions to IEEE-754 (some of which are significant). Refer to IEEE-754 for topics not mentioned.

Double-precision floating-point support is optional, however all double-precision floating point instructions listed in this spec (here (arithmetic)(22.14), here (conditional)(22.15), here (move)(22.16) and here (type conversion)(22.17) ) must be implemented if double support is enabled.

Double-precision floating-point usage is indicated at compile time by declaring shadel model 5_a. Support for Shader Model 5.0a will be reportable by drivers and discoverable by users via an API.

When supported, double-precision instructions match IEEE 754R behavior requirements (with the exception of double precision reciprocal(22.14.5) which is permitted 1.0 ULP tolerance and the exact result if representable).

An exception to the 4-vector register convention exists for double-precision floating-point instructions, which operate on pairs of doubles. Double-precision floating-point values are in IEEE 754R format. One double is stored in .xy with the least significant 32 bits in x, and the most significant 32 bits in y. Similarly the second double is stored in .zw with the least significant 32 bits in z, and the most significant 32 bits in w.

The permissible swizzles for double operations are .xyzw, .xyxy, .zwxy, .zwzw. The permissible write masks for double operations are .xy, .zw, and .xyzw.

Support for generation of denormalized values is required for double-precision data (no flush-to-zero behavior). Likewise, instructions do not read denormalized data as a signed zero - they honor the denorm value.

Several resource formats in D3D11 contain 16-bit representations of floating point numbers. This section describes the float16 representation.

Format:

A float16 value, v, made from the format above takes the following meaning:

32-bit floating point rules also hold for 16-bit floating point numbers, adjusted for the bit layout described above.

The exceptions are:

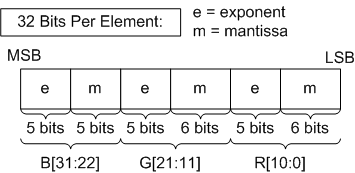

A single resource format in D3D11 contains 11-bit and 10-bit representations of floating point numbers. This section describes the float11 and float10 representations.

Format:

A float11/float10 value, v, made from the format above takes the following meaning:

32-bit floating point rules also hold for 11-bit and 10-bit floating point numbers, adjusted for the bit layout described above.

The exceptions are:

Section Contents

(back to chapter)

3.2.1 Overview

3.2.2 Floating Point Conversion

3.2.3 Integer Conversion

This section describes the rules for various data conversions in D3D11. Other relevant information regarding data conversion is in the Data Invertability(19.1.2) section.

Whenever a floating point conversion between different representations occurs, including to/from non-floating point representations, the following rules apply.

These are rules for converting from a higher range representation to a lower range representation:

These are rules for converting from a lower precision/range representation to a higher precision/range representation:

The following set of terms are subsequently used to characterize various integer format conversions.

| Term | Definition |

|---|---|

| SNORM | Signed normalized integer, meaning that for an n-bit 2's complement number, the maximum value means 1.0f (e.g. the 5-bit value 01111 maps to 1.0f), and the minimum value means -1.0f (e.g. the 5-bit value 10000 maps to -1.0f). In addition, the second-minimum number maps to -1.0f (e.g. the 5-bit value 10001 maps to -1.0f). There are thus two integer representations for -1.0f. There is a single representation for 0.0f, and a single representation for 1.0f. This results in a set of integer representations for evenly spaced floating point values in the range (-1.0f...0.0f), and also a complementary set of representations for numbers in the range (0.0f...1.0f) |

| UNORM | Unsigned normalized integer, meaning that for an n-bit number, all 0's means 0.0f, and all 1's means 1.0f. A sequence of evenly spaced floating point values from 0.0f to 1.0f are represented. e.g. a 2-bit UNORM represents 0.0f, 1/3, 2/3, and 1.0f. |

| SINT | Signed integer. 2's complement integer. e.g. an 3-bit SINT represents the integral values -4, -3, -2, -1, 0, 1, 2, 3. |

| UINT | Unsigned integer. e.g. a 3-bit UINT represents the integral values 0, 1, 2, 3, 4, 5, 6, 7 |

| FLOAT | A floating-point value in any of the representations defined by D3D11. |

| SRGB | Similar to UNORM, in that for an n-bit number, all 0's means 0.0f and all 1's means 1.0f. However unlike UNORM, with SRGB the sequence of unsigned integer encodings between all 0's to all 1's represent a nonlinear progression in the floating point interpretation of the numbers, between 0.0f to 1.0f. Roughly, if this nonlinear progression, SRGB, is displayed as a sequence of colors, it would appear as a linear ramp of luminosity levels to an "average" observer, under "average" viewing conditions, on an "average" display. For complete detail, refer to the SRGB color standard, IEC 61996-2-1, at IEC (International Electrotechnical Commission) |

Note that the terms above are also used as Format Name Modifiers(19.1.3.2), where they describe both how data is layed out in memory and what conversion to perform in the transport path (potentially including filtering) from memory to/from a Pipeline unit such as a Shader. See the Formats(19.1) section to see exactly how these names are used in the context of resource formats.

What follows are descriptions of conversions from various representations described above to other representations. Not all permutations are shown, but at least all the ones that show up in D3D11 somewhere are shown.

Unless otherwise specified for specific cases, all conversions to/from integer representations to float representations described below must be done exactly. Where float arithmetic is involved, FULL IEEE-754 precision is required (1/2 ULP(3.1.2) of the infinitely precise result), which is stricter than the general D3D11 Floating Point Rules(3.1).

Given an n-bit integer value representing the signed range [-1.0f to 1.0f], conversion to floating-point is as follows:

Given a floating-point number, conversion to an n-bit integer value representing the signed range [-1.0f to 1.0f] is as follows:

This conversion is permitted tolerance of 0.6f ULP(3.1.2) (on the integer side). This means that after converting from float to integer scale, any value within 0.6f ULP(3.1.2) of a representable target format value is permitted to map to that value. The additional Data Invertability(19.1.2) requirement ensures that the conversion is nondecreasing across the range and all output values are attainable.

Requiring exact (1/2 ULP) conversion precision is acknowledged to be too expensive.

This conversion is permitted tolerance of 0.6f ULP(3.1.2) (on the integer side). This means that after converting from float to integer scale, any value within 0.6f ULP(3.1.2) of a representable target format value is permitted to map to that value. The additional Data Invertability(19.1.2) requirement ensures that the conversion is nondecreasing across the range and all output values are attainable.

Requiring exact (1/2 ULP) conversion precision is acknowledged to be too expensive.

The following is the ideal SRGB to FLOAT conversion.

This conversion will be permitted a tolerance of 0.5f ULP(3.1.2) (on the SRGB side). The procedure for measuring this tolerance, given that it is relative to the SRGB side even though the result is a FLOAT, is to convert the result back into SRGB space using the ideal FLOAT -> SRGB conversion specified below, but WITHOUT the rounding to integer, and taking the floating point difference versus the original SRGB value to yield the error. There are a couple of exceptions to this tolerance, where exact conversion is required: 0.0f and 1.0f (the ends) must be exactly achievable.

The following is the ideal FLOAT -> SRGB conversion.

Assuming the target SRGB color component has n bits:

This conversion is permitted tolerance of 0.6f ULP(3.1.2) (on the integer side). This means that after converting from float to integer scale, any value within 0.6f ULP(3.1.2) of a representable target format value is permitted to map to that value. The additional Data Invertability(19.1.2) requirement ensures that the conversion is nondecreasing across the range and all output values are attainable.

Requiring exact (1/2 ULP) conversion precision is acknowledged to be too expensive.

To convert from SINT to an SINT with more bits, the MSB bit of the starting number is "sign-extended" to the additional bits available in the target format.

To convert from UINT to an SINT with more bits, the number is copied to the target format's LSBs and additional MSB's are padded with 0.

To convert from SINT to UINT with more bits: If negative, the value is clamped to 0. Otherwise the number is copied to the target format's LSBs and additional MSB's are padded with 0.

To convert from UINT to UINT with more bits the number is copied to the target format's LSBs and additional MSB's are padded with 0.

To convert from a SINT or UINT to SINT or UINT with fewer or equal bits (and/or change in signedness), the starting value is simply clamped to the range of the target format.

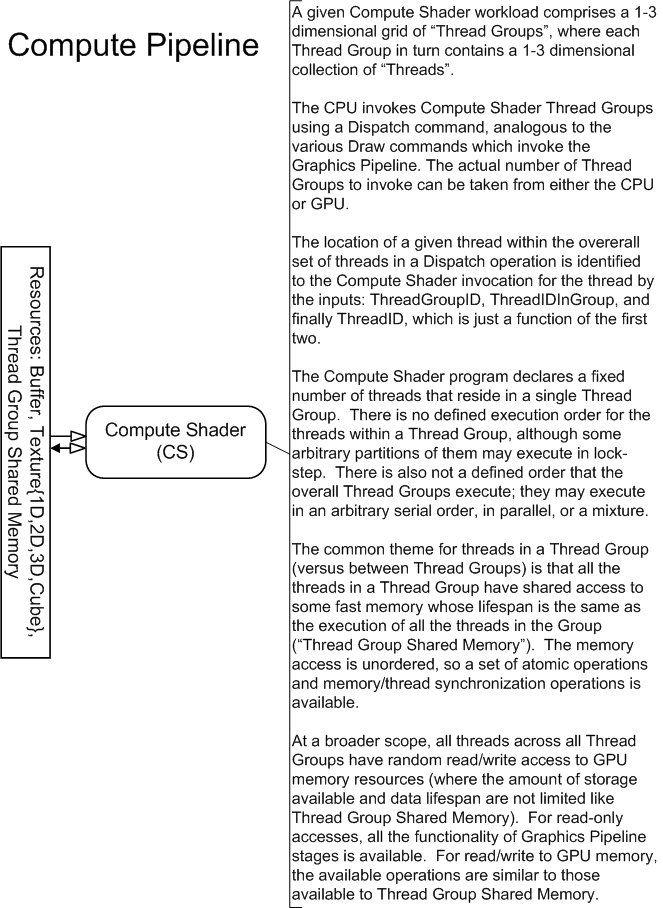

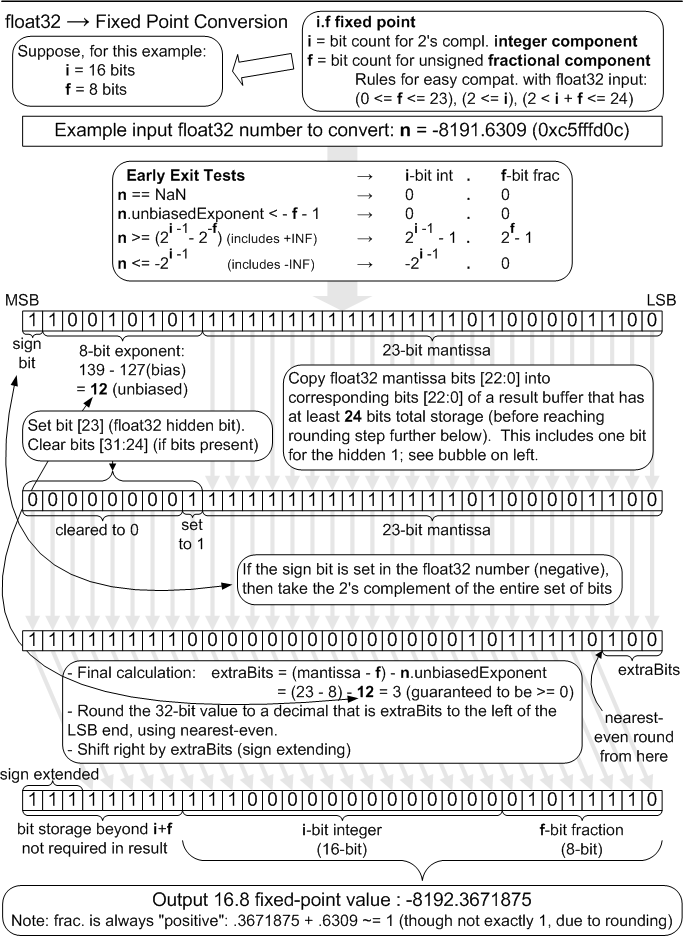

Fixed point integers are simply integers of some bit size that have an implicit decimal point at a fixed location. The ubiquitous "integer" data type is a special case of a fixed point integer with the decimal at the end of the number. Fixed point number representations are characterized as: i.f, where i is the number of integer bits and f is the number of fractional bits. e.g. 16.8 means 16 bits integer followed by 8 bits of fraction. The integer part is stored in 2's complement, at least as defined here (though it can be defined equally for unsigned integers as well). The fractional part is stored in unsigned form. The fractional part always represents the positive fraction between the two nearest integral values, starting from the most negative. Exact details of fixed point representation, and mechanics of conversion from floating point numbers are provided below.

Addition and subtraction operations on fixed point numbers are performed simply using standard integer arithmetic, without any consideration for where the implied decimal lies. Adding 1 to a 16.8 fixed point number just means adding 256, since the decimal is 8 places in from the least significant end of the number. Other operations such as multiplication, can be performed as well simply using integer arithmetic, provided the effect on the fixed decimal is accounted for. For example, multiplying two 16.8 integers using an integer multiply produces a 32.16 result.

Fixed point integer representations are used in a couple of places in D3D11:

The following is the general procedure for converting a floating point number n to a fixed point integer i.f, where i is the number of (signed) integer bits and f is the number of fractional bits:

Note: Sign of zero is preserved.

For D3D11 implementations are permitted 0.6f ULP(3.1.2) tolerance in the integer result vs. the infinitely precise value n*2^f after the last step above.

The diagram below depicts the ideal/reference float to fixed conversion (including round-to-nearest-even), yielding 1/2 ULP accuracy to an infinitely precise result, which is more accurate than required by the tolerance defined above. Future D3D versions will require exact conversion like this reference.

Specific choices of bit allocations for fixed point integers are listed in the places in the D3D11 spec where they are used.

Assume that the specific fixed point representation being converted to float does not contain more than a total of 24 bits of information, no more than 23 bits of which is in the fractional component. Suppose a given fixed point number, fxp, is in i.f form (i bits integer, f bits fraction). The conversion to float is akin to the following pseudocode:

float result = (float)(fxp >> f) + // extract integer

((float)(fxp & (2f - 1)) / (2f)); // extract fraction

Although the situation rarely, if ever arises, consider that a number that originates as fixed point, gets converted to float32, and then gets converted back to fixed point will remain identical to its original value. This holds provided that bit representation for the fixed point number does not contain more information than can be represented in a float32. This lossless conversion property does not hold when making the opposite round-trip, starting from float32, moving to fixed-point, and back; indeed lossy conversion is in fact the "point" of converting from float32 to fixed-point in the first place.

One final note on round-trip conversion. Observe that when the float32 number -2.75 is converted to fixed-point, it becomes -3 +0.25, that is, the integer part is negative but the fixed point part, considered by itself, is positive. When that is converted back to float32, it becomes -2.75 again, since floating point stores negative numbers in sign-magnitude form, instead of in two's complement form.

Section Contents

(back to chapter)

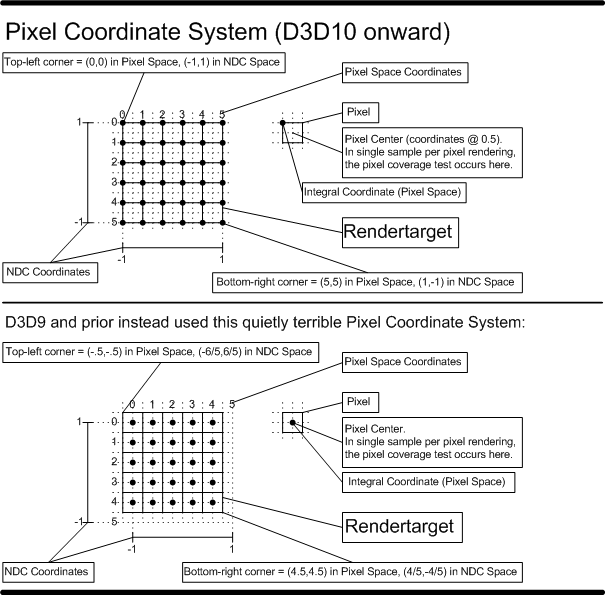

3.3.1 Pixel Coordinate System

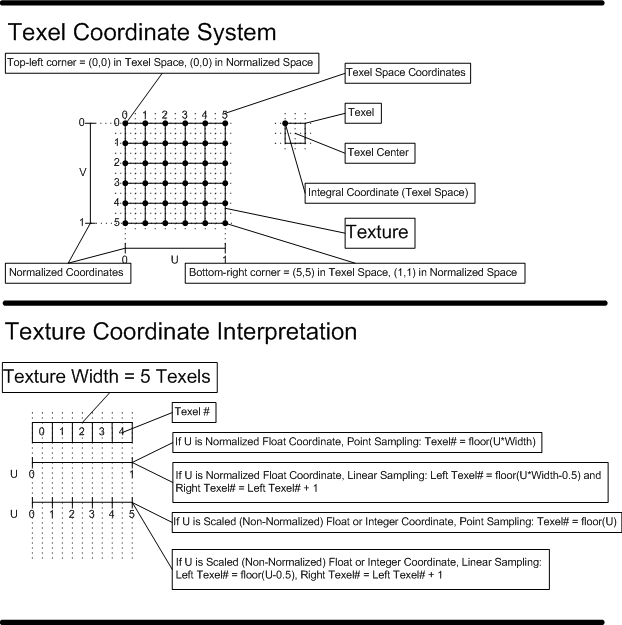

3.3.2 Texel Coordinate System

3.3.3 Texture Coordinate Interpretation

The Pixel Coordinate System defines the origin as the upper-left corner of the RenderTarget. Pixel centers are therefore offset by (0.5f,0.5f) from integer locations on the RenderTarget. This choice of origin makes rendering screen-aligned textures trivial, as the pixel coordinate system is aligned with the texel coordinate system.

D3D9 and prior had a terrible Pixel Coordinate System where the origin was the center of the top left pixel on the RenderTarget. In other words, the origin was (0.5,0.5) away from the upper left corner of the RenderTarget. There was the nice property that Pixel centers were at integer locations, but the fact this was misaligned with the texture coordinate system frequently burned unsuspecting developers. Further, with Multisample rendering, thre was a 1/2 pixel wide region of the RenderTarget along the top and left edge that the viewport could not cover. D3D11 allows applications that want to emulate this behavior to specify a fractional offset to the top left corner of the viewport (-0.5,-0.5).

The texel coordinate system has its origin at the top-left corner of the texture. See the "Texel Coordinate System" diagram below. This is consistent with the Pixel Coordinate System.

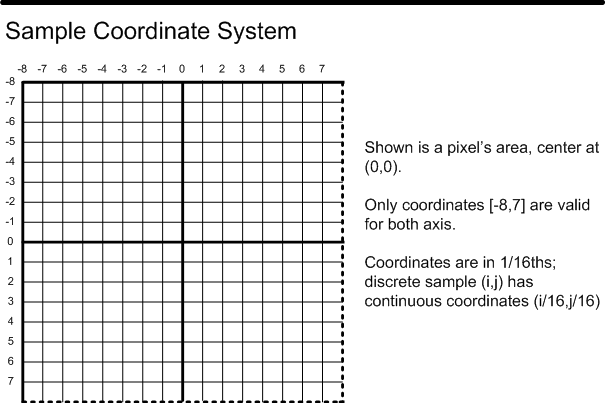

The memory load instructions like sample(22.4.15) or ld(22.4.6) have a couple of ways texture coordinates are interpreted (normalized float, or scaled integer respectively). The "Texture Coordinate Interpretation" diagram below describes how these interpretations get mapped to specific texel(s), for point and linear sampling. The diagram does not illustrate address wrapping, which occurs after the shown equations for computing texel locations. The addressing math shown in this diagram is only a general guideline, and exact definition of texel selection arithmetic is provided in the Texture Sampling(7.18) section, including the role of Fixed Point(3.2.4.1) snapping of precision in the addressing process.

Section Contents

(back to chapter)

3.4.1 Coordinate Snapping

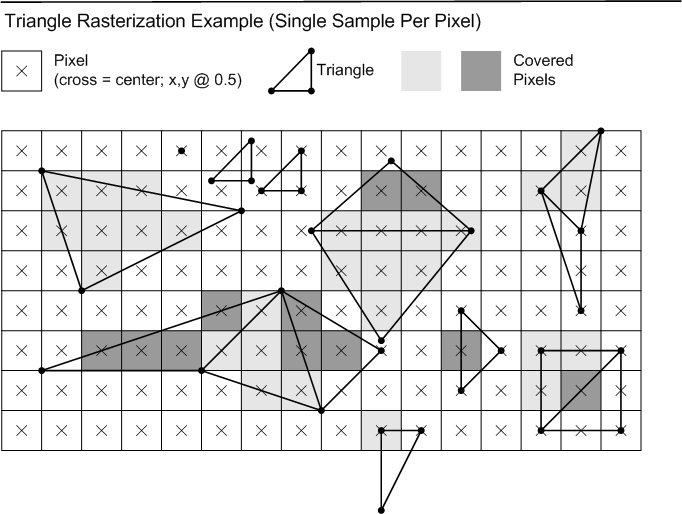

3.4.2 Triangle Rasterization Rules

Consider a set of vertices going through the Rasterizer, after having gone through clipping, perspective divide and viewport scale. Suppose that any further primitive expansion has been done (e.g. rectangular lines can be drawn by implementations as 2 triangles, described later). After the final primitives to be rasterized have been obtained, the x and y positions of the vertices are snapped to exactly n.8 fixed point integers. Any front/back culling is applied (if applicable) after vertices have been snapped. Interpolation of pixel attributes is set up based on the snapped vertex positions of primitives being rasterized.

Any pixel sample locations which fall inside the triangle are drawn. An example with a single sample per pixel (at the center) is shown below. If a sample location falls exactly on the edge of the triangle, the Top-Left Rule applies, to ensure that adjacent triangles do not overdraw. The Top-Left rule is described below.

Top edge: If an edge is exactly horizontal, and it is above the other edges of the triangle in pixel space, then it is a "top" edge.

Left edge: If an edge is not exactly horizontal, and it is on the left side of the triangle in pixel space, then it is a "left" edge. A triangle can have one or two left edges.

Top-Left Rule: If a sample location falls exactly on the edge of a triangle, the sample is inside the triangle if the edge is a "top" edge or a "left" edge. If two edges from the same triangle touch the pixel center, then if both edges are "top" or "left" then the sample is inside the triangle.

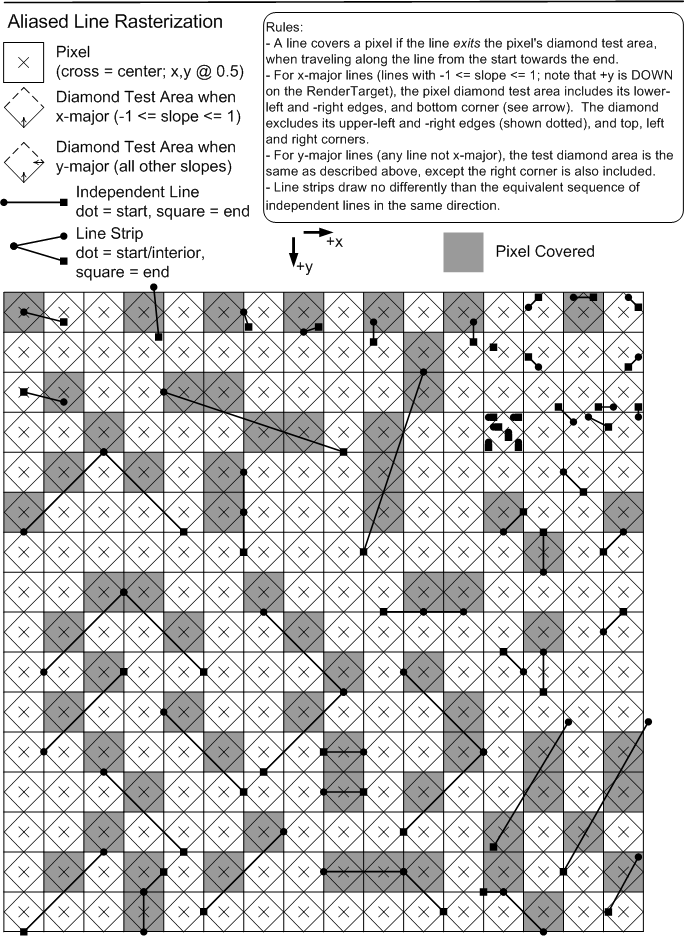

Rasterization rules for infinitely-thin lines, with no antialiasing, are described below.

One futher implication of these line rasterization rules is that lines that are geometrically clipped to the viewport extent may set one less pixel than lines that are rendered to a larger 2D extent with the pixels outside the viewport discarded. (This is due to the handling of the line endpoints.)

Since geometric clip to the viewport is neither required nor disallowed, aliased line rendering is allowed to differ in viewport-edge pixels due to geometric clipping.

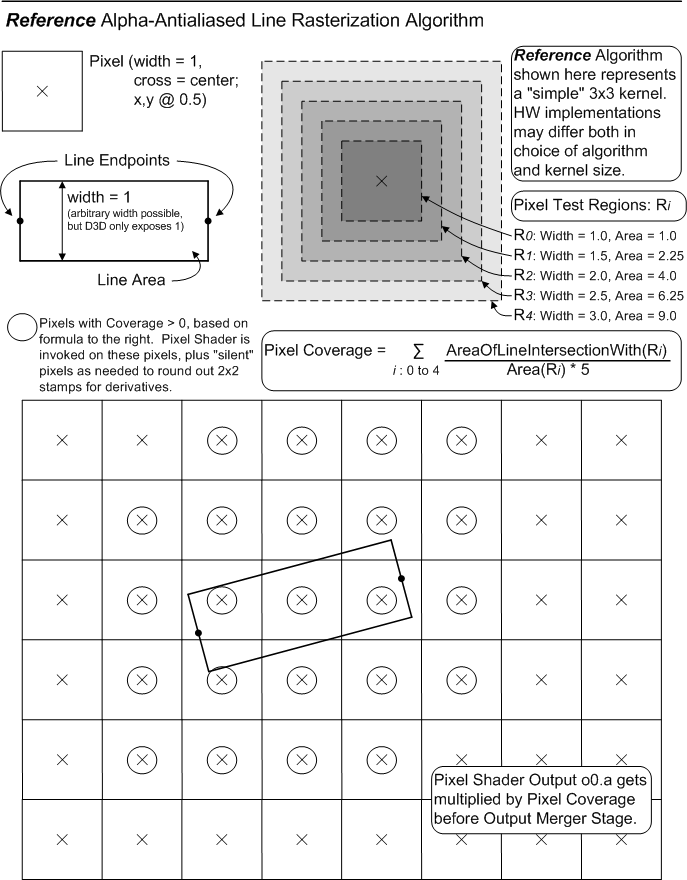

The alpha-based antialiased rasterization of a line (defined by two end vertices) is implemented as the visualization of a rectangle, with the line's two vertices centered on two opposite "ends" of the rectangle, and the other two edges separated by a width (in D3D11 width is only 1.0f). No accounting for connected line segments is done. The region of intersection of this rectangle with the RenderTarget is estimated by some algorithm, producing "Coverage" values [0.0f..1.0f] for each pixel in a region around the line. The Coverage values are multiplied into the Pixel Shader output o0.a value before the Output Merger Stage. Undefined results are produced if the PS does not output o0.a. D3D11 exposes no controls for this line mode.

It is deemed that there is no single "best" way to perform alpha-based antialiased line rendering. D3D11 adopts as a guideline the method shown in the diagram below. This method was derived empirically, exhibiting a number of visual properties deemed desirable. Hardware need not exactly match this algorithm; tests against this reference shall have "reasonable" tolerances, guided by some of the principles listed further below, permitting various hardware implementations and filter kernel sizes. None of this flexibility permitted in hardware implementation, however, can be communicated up through D3D11 to applications, beyond simply drawing lines and observing/measuring how they look.

The following is a listing of the "nice" properties that fall out of the above algorithm, which in general will be expected of hardware implementations (admittedly many of which are likely difficult to test):

Note that the wider the filter kernel an implementation uses, the blurrier the line, and thus the more sensitive the resulting perceived line intensity is to display gamma. The reference implmentation's kernel is quite large, at 3x3 pixel units about each pixel.

Quadrilateral lines take 2 endpoints and turn them into a simple rectangle with width 1.4f, drawn with triangles. The attributes at each end of the line are duplicated for the 2 vertices at each end of the rectangle.

This mode is not supported with center sample patterns (D3D11_CENTER_MULTISAMPLE_PATTERN) where there is more than one sample overlapping the center of the pixel, in which case results of drawing this style of line are undefined. See here(19.2.4.1).

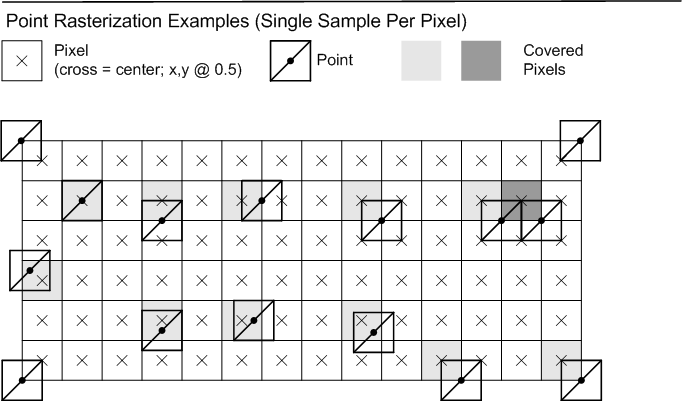

For the purpose of rasterization, a point is represented as a square of width 1 oriented to the RenderTarget. Actual implementation may vary, but output behavior should be identical to what is described here. The coordinate for a point indentifies where the center of the square is located. Pixel coverage for points follows Triangle Rasterization Rules, interpreted as though a point is composed of 2 triangles in a Z pattern, with attributes duplicated at the 4 vertices. Cull modes do not apply to points.

Section Contents

(back to chapter)

3.5.1 Overview

3.5.2 Warning about the MultisampleEnable State

3.5.3 Multisample Sample Locations And Reconstruction

3.5.4 Effects of Sample Count > 1

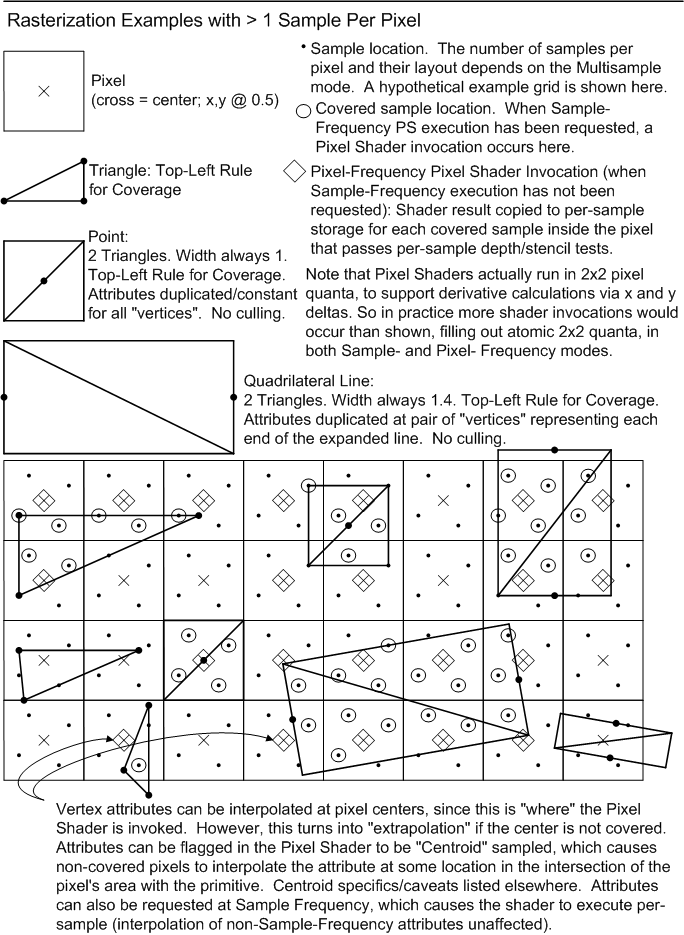

Multisample Antialiasing seeks to fight geometry aliasing, without necessarily dealing with surface aliasing (leaving that as a shading problem, e.g. texture filterng). This is accomplished by performing pixel coverage tests and depth/stencil tests at multiple sample locations per pixel, backed by storage for each sample, while only performing pixel shading calculations once for covered pixels (broadcasting Pixel Shader output across covered samples). It is also possible to request Pixel Shader invocations to occur at sample-frequency rather than at pixel-frequency.

The MultisampleEnable Rasterizer State remains as an awkward leftover from D3D9. It no longer does what the name implies; it no longer has any bearing on multisampling; it only controls line rendering behavior now. The state should have been renamed/refactored, but the opportunity was missed in D3D11. For a detailed discussion about what this state actually does now, see State Interaction With Point/Line/Triangle Rasterization Behavior(15.14).

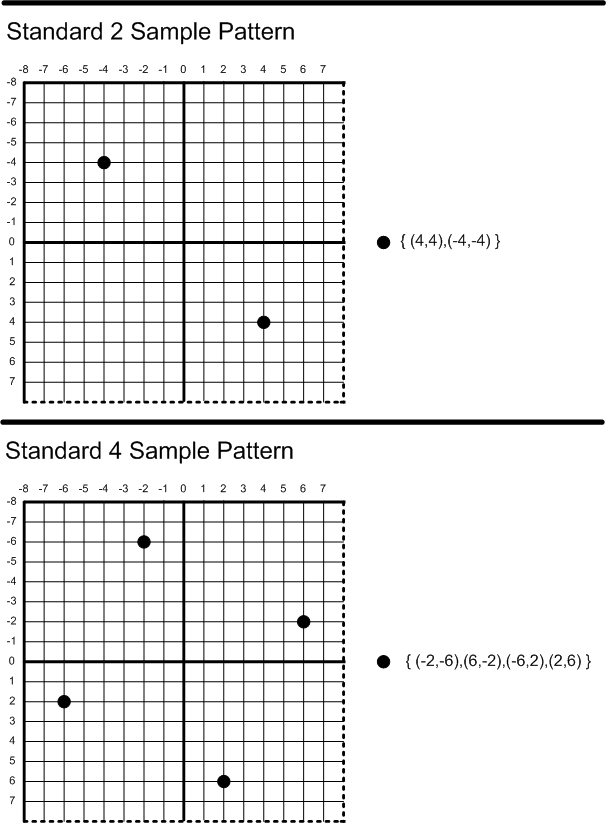

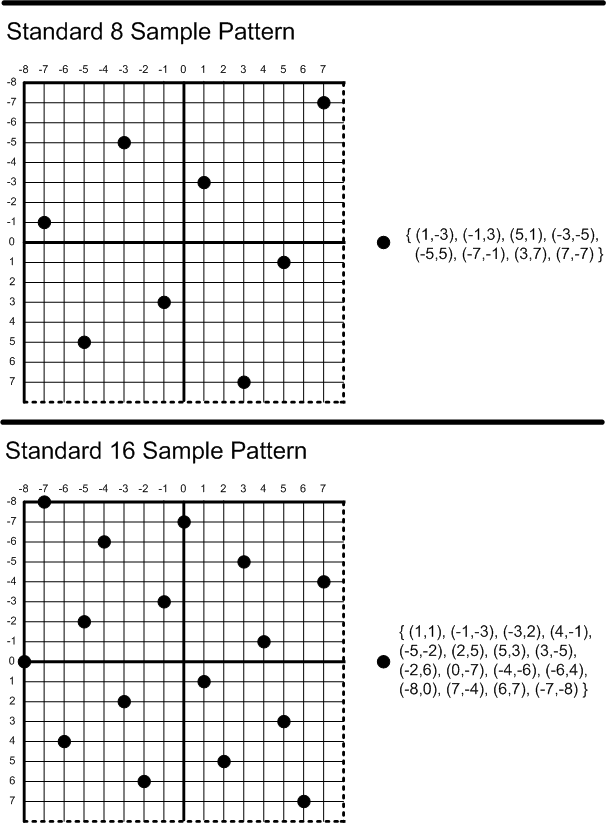

Specifics about sample locations and reconstruction functions for multisample antialiasing are dependent on the chosen Multisample mode, which is outside the scope of this section. See Multisample Format Support(19.2), and Specification of Sample Positions(19.2.4).

Rasterization behavior when sample count is greater than 1 is simply that primitive coverage tests are done for each sample location within a pixel. If one or more sample locations in a pixel are covered, the Pixel Shader is run once for the pixel in Pixel-Frequency mode, or in Sample-Frequency mode once for each covered sample that is also in the Rasterizer SampleMask. Pixel-frequency execution produces a single set of Pixel Shader output data that is replicated to all covered samples that pass their individual depth/stencil tests and blended to the RenderTarget per-sample. Sample-frequency execution produces a unique set of Pixel Shader output data per covered sample (and in SampleMask), each output getting blended 1:1 to the corresponding RenderTarget sample if its depth/stencil test passes.

Note that points(3.4.6) and quadrilateral lines(3.4.5) are functionally equivalent to drawing their area with triangles. So Sample-Frequency execution is easily defined for all of these primitives. For points, the samples covered by the point area (and in the RasterizerState's SampleMask) each get Pixel Shader invocations with attributes replicated from its single vertex (except one parameter is available that is varying - an ID identifying each sample from the total set of samples in the pixel). For quadrilateral lines, the two end vertices define how attributes interpolate along the length, staying constant across the perpendicular. Again, the samples covered by the area of the primitive (and in the SampleMask) each get a Pixel Shader invocations in Sample-Frequency execution mode, with unique input attributes per sample, including an ID identifying which sample it is.

Alpha-Antialiased Lines(3.4.4) and Aliased Lines(3.4.3) are algorithms that inherently do not deal with discrete sample locations within a pixel's area, and thus it is illegal/undefined to request Sample-Frequency execution for these primitives, unless the sample count is 1, which is identical to Pixel-Frequency execution.

Consider a Pixel Shader that operates only on pixel-frequency inputs (e.g. all attributes have one of the following interpolation modes(16.4): constant, linear, linear_centroid, linear_noperspective or linear_noperspective_centroid). Implementations need only execute the shader once per pixel and replicate the results to all samples in the pixel. Now suppose code is added to the shader that generates new outputs based on reading sample-frequency inputs. The existing pixel-frequency part of the shader behaves identically to before. Even though the shader will now execute at sample-frequency (so the new outputs can vary per-sample), each invocation produces the same result for the original outputs.

Though this example happens to separate out the different interpolation frequencies to highlight their invariance, of course it is perfectly valid in general for shader code to mix together inputs with any different interpolation modes.

When a sample-frequency interpolation mode(16.4) is not needed on an attribute, pixel-frequency interpolation-modes such as linear evaluate at the pixel center. However with sample count > 1 on the RenderTarget, attributes could be interpolated at the pixel center even though the center of the pixel may not be covered by the primitive, in which case interpolation becomes "extrapolation". This "extrapolation" can be undesirale in some cases, so short of going to sample-frequency interpolation, a compromise is the centroid interpolation mode.

Centroid behaves exactly as follows:

The term Conservative Rasterization has been used to describe basically a GPU rasterizer assist for shader computed antialiasing. This concept has not been actually implemented in GPUs, at least that are known, but the following short discussion of Conservative Rasterization somewhat motivates the alternative that is specified here - Target Independent Rasterization. Note that as of D3D11.3, hardware has evolved to support Conservative Rasterization(15.17).

Consider how multisampling works in D3D (or GPU rasterization in general). Each pixel has “sample” positions which cause Pixel Shaders to be invoked when primitives (e.g. triangles) cover the samples. For multisampling, a single Pixel Shader invocation occurs when at least one sample in a pixel is covered. Alternatively, D3D10.1+ also allows the shader to request that the Pixel Shader be invoked for each covered sample – this has historically been called “supersampling”.

The downside to these antialiasing approaches is they are based on a discrete number of samples. The more samples the better, but there are still holes in the pixel area between the sample points in which geometry rendered there does not contribute to the image.

Conservative Rasterization, instead, would ideally invoke the Pixel Shader if the area of a primitive (e.g. triangle) being rendered has any chance of intersecting with the pixel’s square area. It would then be up to shader code to compute whatever measure of pixel area intersection it desires. It may be acceptable for the rasterization to be “conservative” in that triangles/primitives are simply rasterized with a fattened screen space area that could include some pixels with no actual coverage – it doesn’t really matter since the shader will be computing the actual coverage.

The win is that the number of Pixel Shader invocations is reasonably bounded to the triangle extents (as opposed to rendering bounding rectangles), and the output can be “perfect” antialiasing if desired. This is particularly the case if also utilizing some other features in D3D11 that allow arbitrary length lists to be recorded per pixel.

However, the complexity of the shader code required to compute an analytic coverage solution with Conservative Rasterization might be too high for the benefit. An alternative scheme, Target Independent Rasterization is defined here, under the more mundane heading 'Forcing Rasterizer Sample Count' below. First though, some discussion about how Target Independent Rasterization can help in at least one scenario - path rendering in Direct2D.

A common usage scenario of Direct2D is to stroke and/or fill anti-aliased paths. The semantics of the Direct2D anti-aliasing scheme are different from MSAA. The key difference is when the resolve step occurs. With MSAA the resolve step typically happens once per frame. With Direct2D anti-aliasing the resolve step occurs after each path is rendered. To work around these semantic differences the Windows 7 version of Direct2D performs rasterization on the CPU. When a path is to be filled or stroked, an expensive CPU-based algorithm computes the percentage of each pixel that is covered by the path. The GPU is used to multiply the path color by the coverage and blend the results with the existing render target contents. This approach is heavily CPU-bound.

Target Independent Rasterization enables Direct2D to move the rasterization step from the CPU to the GPU while still preserving the Direct2D anti-aliasing semantics. Rendering of anti-aliased paths will be performed in 2 passes on the GPU. The first pass will write per-pixel coverage to an intermediate render target texture. Paths will be tessellated into non-overlapping triangles. The GPU will be programmed to use Target Independent Rasterization and additive blending during the first pass. The pixel shader used in the first pass will simply count the number of bits set in the coverage mask and output the result normalized to [0.0,1.0]. During the second pass the GPU will read from the intermediate texture and write to the application’s render target. This pass will multiply the path color by the coverage computed during the first pass.

In some cases, it will be faster for Direct2D to tessellate paths into potentially overlapping triangles. In these cases, the 1st pass will set the ForcedSampleCount to 16 and simply output the coverage mask to the intermediate (R16_UINT). The blender would be setup to do a bitwise OR, or XOR operation (depending on the scenario). The second pass would read this 16-bit value from the intermediate, count the number of bits set, and modulate the color being written to the render target.

There are 2 fallbacks that could be used to implement this algorithm on GPUs that do not support Target Independent Rasterization. The first fallback would render the scene N times, with alpha = 1/N and additive blending for the first step of the algorithm. This would produce the same results, but at the cost of resorting to multipass rendering to to mimic the effect of supersampling at the rasterizer. The second fallback would use MSAA to implement the first pass of the algorithm. Both fallbacks are bound by memory bandwidth (render target writes). Using Target Independent Rasterization would significantly reduce the memory bandwidth requirements of this algorithm.

Overriding the Rasterizer sample count means defining the multisample pattern at the Rasterizer independent of what RenderTargetViews(5.2) (or UnorderedAccessView(5.3.9)s) may be bound at the Output Merger (and their associated sample count / Quality Level).

The ForcedSampleCount state setting is located in the Rasterizer State(15.1) object.

UINT ForcedSampleCount; // Valid values for Target Independent Rasterization (TIR): 0, 1, 4, 8, 16

// Valid values for UAV(5.3.9) only render: 0, 1, 4, 8, 16

// 0 means don't force sample count.

Devices must support all the standard sample patterns up to and including 16 for the ForcedSampleCount. This is even if the device does not support that many samples in RenderTarget / DepthStencil resources.

Investigations show that the 16 sample standard D3D pattern performs favorably with Direct2D's original software based rasterization pattern, which had the significant disadvantage of using a regular grid layout, even though it was 64 samples.

With a forced sample count/pattern selected at the rasterizer (ForcedSampleCount > 0), pixels are candidates for shader invocation based on the selected sample pattern, independent of the RTV ("output") sample count. The burden is then on shader code to make sense of the possible mismatch between rasterizer and output storage sample count, given the defined semantics.

Here are the behaviors with ForcedSampleCount > 0.

The above functionality is required for Feature Level 11_1 hardware.

D3D10.0 - D3D11.0 hardware (and Feature Level 10_0 - 11_0) supports ForcedSampleCount set to 1 (and any sample count for RTV) along with the described limitations (e.g. no depth/stencil).

For 10_0, 10_1, and 11_0 hardware, when ForcedSampleCount is set to 1, line rendering cannot be configured to 2-triangle (quadrilateral) based mode (i.e. the MultisampleEnable state cannot be set to true). This limitation isn't present for 11_1 hardware. Note the naming of the 'MultisampleEnable' state is misleading since it no longer has anything to do with enabling multisampling; instead it is now one of the controls along with AntialiasedLineEnable for selecting line rendering mode.

This limited form of Target Indepdendent Rasterization, ForcedSampleCount = 1, closely matches a mode that was present in D3D10.0 but due to API changes became unavailable for D3D10.1 and D3D11 (and Feature Levels 10_1 and 11_0). In D3D10.0 this mode was the center sampled rendering even on an MSAA surface that was available when MultisampleEnable was set to false (and this could be toggled by toggling MultisampleEnable). In D3D10.1+, MultisampleEnable no longer affects multisampling (despite the name) and only controls line rendering behavior. It turns out some software, such as Direct2D, depended on this mode to be able to render correctly on MSAA surfaces. As of D3D11.1, D2D can use ForcedSampleCount = 1 to bring back this mode consistently on all D3D10+ hardare and Feature Levels. D3D10.0 also supported depth testing in this mode as well, but it is not worth exposing that given it D2D did not expose it, and the full D3D11.1 definition of the feature doesn't work with depth/stencil.

D3D11 allows rasterization with only UAVs bound, and no RTVs/DSVs. Even though UAVs can have any/different sizes, essentially, the viewport/scissor identify the pixel dimensions. Before this feature, when rendering with only UAVs bound, the rasterizer was limited to a single sample only.

UAV(5.3.9)-only rendering with multisampling at the rasterizer is possible by keying off the ForcedSampleCount state described earlier, with the sample patterns limited to 0, 1, 4, 8 and 16. (The UAVs themselves are not multisampled in terms of allocation.) A setting of 0 is equivalent to the setting 1 - single sample rasterization.

Shaders can request pixel-frequency invocation with UAV-only rendering, but requesting sample-frequency invocation is invalid (produces undefined shading results).

The SampleMask Rasterizer State does not affect rasterization behavior at all here.

On D3D11.0 hardware, ForcedSampleCount can be 0, 1, 4 and 8 with UAV only Rasterization. D3D11.1 hardware additionally supports 16.

Attempting to render with unsupported ForcedSampleCount produces undefined rendering results - though if a ForcedSampleCount is chosen that could never be valid for TIR or UAV-only rendering the runtime will fail the Rasterizer State object creation immediately.

Pixel Shaders always run in minimum 2x2 quanta to be able to support derivative calculations, regardless of the RenderTarget sample count. These Pixel Shader derivative calculations, used in texture filtering operations, but also available directly in shaders, are calculated by taking deltas of data in adjacent pixels. This requires data in each pixel has been sampled with unit spacing horizontally or vertically.

RenderTarget sample counts > 1 do not affect derivative calculation methods. If derivatives are requested on an attribute that has been Centroid sampled, the hardware calculation is not adjusted, and therefore incorrect derivatives will often result. What the Shader expects to be a derivative wrt a unit distance in the x or y direction in RenderTarget space will actually be the rate of change with respect to some other direction vector, which also probably isn't unit length.

The point here is that it is the application's responsibility to exhibit caution when requesting derivative from Centroid sampled attributes, ideally never requesting them at all. Centroid sampling can be useful for situations where it is critical that a primitive's interpolated attributes are not "extrapolated", but this comes with some tradeoffs: First, centroid sampled attributes may appear to jump around as a primitive edge moves over a pixel, rather than changing continuously. Secondly, derivative calculations on the attributes become unreliable or difficult to use correctly (which also hurts texture sampling operations that derive LOD from derivatives).

Under sample-frequency execution, a 2x2 quad of Pixel Shaders executes for each sample index where that sample is covered in at least one of the pixels participating in the 2x2 quad. This allows derivatives to be calculated in the usual way since any given sample is located one unit apart horizonally or vertically from the corresponding sample in the neighboring pixels.

It is left to the application's shader author to decide how to adjust for the fact that derivatives calculated from spacings of one unit may need to be scaled in some way to reflect higher frequency shader execution, depending on the sample pattern/count.

Further important discussion of Pixel Shader derivatives is under Interaction of Varying Flow Control With Screen Derivatives(16.8).

Chapter Contents

(back to top)

4.1 Minimal Pipeline Configurations

4.2 Fixed Order of Pipeline Results

4.3 Shader Programs

4.4 The Element

Summary of Changes in this Chapter from D3D10 to D3D11.3

Back to all D3D10 to D3D11.3 changes.(25.2)

The rendering Pipeline encapsulates all state related to the rendering of a primitive. This includes a sequence of pipeline stages as well as various state objects.

Section Contents

(back to chapter)

4.1.1 Overview

4.1.2 No Buffers at Input Assembler

4.1.3 IA + VS (+optionally GS) + No PS + Writes to Depth/Stencil Enabled

4.1.4 IA + VS (+optionally GS) + PS (incl. Rasterizer, Output Merger)

4.1.5 IA + VS + SO

4.1.6 No RenderTarget(s) and/or Depth/Stencil and/or Stream Output

4.1.7 IA + VS + HS + Tessellation + DS + ...

4.1.8 Compute alone

4.1.9 Minimal Shaders

Not all Pipeline Stages must be active. This section clarifies this concept by illustrating some minimal configurations that can produce useful results. The Graphics pipeline is accessed by Draw* calls from the API. The alternative pipeline, Compute, is accessed by issuing Dispatch* calls from the API.

For the Graphics pipepine, the Input Assembler is always active, as it produces pipeline work items. In addition, the Vertex Shader is always active. Relying on the presence of the Vertex Shader at all times simplifies data flow permutations very significantly, versus allowing the Input Assembler with its limited programming flexibility to feed any pipeline stage.

Note that even though the Vertex Shader must always be active in the Graphics pipeline, in scenarios where applications really don't want to have a Vertex Shader, and must simply implement it as a trivial or nearly trivial sequence of mov's from inputs to outputs, the short length and simplicity of such "passthrough" shaders should not be a problem for hardware implementations to practically hide the cost of, one way or another.

A minimal use of the Input Assembler is to not have any input Buffers bound (vertex or index data). The Input Assembler can generate counters such as VertexID(8.16), InstanceID(8.18) and PrimitiveID(8.17), which can identicy vertices/primitives generated in the pipeline by Draw*(), or DrawIndexed*() (if at least an Index Buffer is bound). Thus Shaders can minimally drive all their processing based on the IDs if desired, including fetching appropriate data from Buffers or Textures.

If the shader stage before the rasterizer outputs position, and Depth/Stencil writes are enabled, the rasterizer will simply perform the fixed-function depth/stencil tests and updates to the Depth/Stencil buffer, even if there is no Pixel Shader active. No Pixel Shader means no updates to RenderTargets other than Depth/Stencil.

The Input Assembler + Vertex Shader (required) can drive the Pixel Shader directly (GS does not have to be used, but can be). If an application seeks to write data to RenderTarget(s), not including Depth/Stencil which were explained earlier, the Pixel Shader must be active. This implicitly Output Merger as well, though as described further below, there's no requirement that RenderTargets need to be bound just because rasterization is occuring.

The Input Assembler (+required VS) can feed Stream Output directly with no other stages active. Note that as described in the Stream Output Stage(14) section, Stream Output is tied to the Geometry Shader, however a "NULL" Geometry Shader can be specified, allowing the outputs of the Vertex Shader to be sent to Stream Output with no other stages active.

Whether or not the Pixel Shader is active, it is always legal to NOT have any output targets bound (and/or have output masks defined so that no output targets are written). Likewise for Stream Output. This might be interesting for performance tests which don't include output memory bandwidth (and which might examine feedback statistics such as shader invocation counts, which is itself a form of pipeline output anyway).

The Input Assembler (+required VS) can feed Stream Output directly with no other stages active. Note that as described in the Stream Output Stage(14) section, Stream Output is tied to the Geometry Shader, however a "NULL" Geometry Shader can be specified, allowing the outputs of the Vertex Shader to be sent to Stream Output with no other stages active.

Take any of the configurations above, and HS + Tessellator + DS can be inserted after the VS. The presence of the DS is what implises the presence of the Tessellator before it.

When the Compute Shader runs, it runs by itself. The state for both the Graphics pipeline shaders and Compute Shader can be simultaneously bound. The selection of which pipeline to use is Draw* invokes Graphics and Dispatch* invokes Compute.

All vertex shaders must have a minimum of one input and one output, which can be as little as one scalar value. Note that System Generated Values such as VertexID(8.16) and InstanceID(8.18) count as input.

The rendering Pipeline is designed to allow hardware to execute tasks at various stages in parallel. However observable rendering results must match results produced by serial processing of tasks. Whenever a task in the Pipeline could be performed either serially or in parallel, the results produced by the Pipeline must match serial operation. That is, the order that tasks enter the Pipeline is the order that tasks are observed to be propagated all the way through to completion. If a task moving through the Pipeline generates additional sub-tasks, those sub-tasks are completed as part of completing the spawning task, before any subsequent tasks are completed. Note that this does not prevent hardware from executing tasks out of order or in parallel if desirable, just as long as results are buffered appropriately such that externally visible results reflect serial execution.

One exception to this fixed ordering is with Tessellation. With the fixed function Tessellation stage, implementations are free to generate points and topology in any order as long as that order is consistent given the same input on the same device. Vertices can even be generated multiple times in the course of tessellating a patch, as long as the Tessellator output topology is not point (in which case only the unique points in the patch must be generated). This tessellator exception is discussed here(11.7.9).

Another exception to the fixed ordering of pipeline results is any access to an Unordered Transaction View of a Resource (for example via the Compute Shader or Pixel Shader). These types of Views explicitly allow unordered results, leaving the burden to applications to make careful choices of atomic instructions to access Unordered Transaction Views if deterministic and implementation invariant output is desired.

A Shader object encapsulates a Shader program for any type of Shader unit. All shaders have a common binary format and basically have the following typical layout. A helpful reference for this is the source code accompanying the Reference Rasterizer, which includes facilities for parsing the shader binary.

The Tessellation related shaders have a significantly different structure, particularly the Hull Shader, which appears as multiple phases of shaders concatenated together (not depicted here).

version

input declarations

output declarations

resource declarations

code

version

describes the Shader type: Vertex Shader(vs),

Hull Shader (hs), Domain Shader (ds),

Geometry Shader (gs), Pixel Shader (ps),

Compute Shader (cs).

Example: vs_5_0, ps_5_0

input declarations

declare which input registers are read

Example:

dcl_input v[0]

dcl_input v[1].xy

dcl_input v[2]

output declarations

declare which output registers are written

Example:

dcl_output o[0].xyz

dcl_output o[1]

dcl_output o[2].xw

resource declarations

Example:

dcl_resource t0, Buffer, UNORM

dcl_resource t2, Texture2DArray, FLOAT

code

This Shader section contains executable instructions.

Section Contents

(back to chapter)

4.4.1 Overview

4.4.2 Elements in the Pipeline

4.4.3 Passing Elements Through Pipeline Interfaces

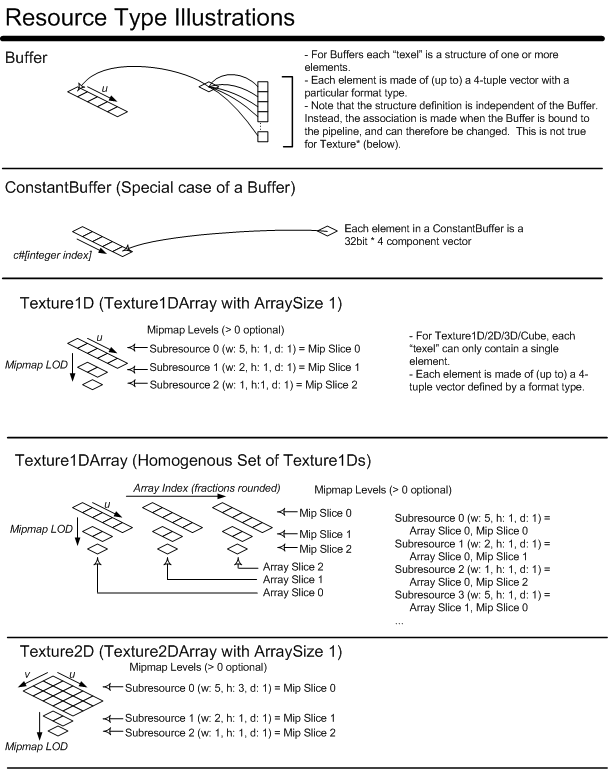

From the perspective of individual D3D11.3 Pipeline stages accessing and interpreting memory, all memory layouts (e.g. Buffer, Texture1D/2D/3D/Cube) are viewed as being composed of "Elements". An individual Element represents a vector of anywhere from 1 to 4 values. An Element could be an R8G8B8A8 packing of data, a single 8-bit integer value, 4 float32 values, etc. In particular, an Element is any one of the DXGI_FORMAT_* formats(19.1), e.g. DXGI_FORMAT_R8G8B8A8 (DXGI stands for "DirectX Graphics Infrastructure", a software component outside the scope of this specification which happens to own the list of DirectX formats going forward). Filtering may be involved in the process of fetching an Element from a texture, and this simply involves looking at multiple values for a given Element in memory and blending them in some fashion to produce an Element that is returned to the Shader.

Buffers in memory can be made up of structures of Elements (as opposed to being a collection of a single Element). For example a Buffer could represent an array of vertices, each vertex containing several elements, such as: position, normal and texture coordinates. See the Resources(5) section for full detail.

The concept of "Elements" does not only apply to resources. Elements also characterize data passing from one Pipeline stage to the next. For example the outputs of a Vertex Shader (Elements making up a vertex) are typically read into a subsequent Pipeline stage as input data, for instance into a Geometry Shader. In this scenario, the Vertex Shader writes values to output registers, each of which represents an individual Element. The subsequent Shader (Geometry Shader in this example) would see a set of input registers each initialized with an Element out of the set of input data.

There are various types of data interfaces in the hardware Pipeline through which Elements pass. This section describes the interfaces in generic terms, and characterizes how Elements of data pass through them. Specific descriptions for each of the actual interfaces in the Pipeline are provided throughout the spec, in a manner consistent with the principles outlined here. The overall theme here is that data mappings through all interfaces are always direct, without any linkage resolving required.

The first type of interface is Memory-to-Stage, where an Element from a Resource (Texture/Buffer) is being fetched into the some part of the Pipeline, possibly the "top" of the Pipeline (Input Assembler(8)), or the "side", meaning a fetch driven from within a Shader Stage. At the point of binding of memory Resources to these interfaces, a number is given to each Element that is bound, representing which input (v#) or texture (t#) "register" at the particular interface refers to the Element. Note that there is no linkage resolving done on behalf of the application; the Shader assumes which "registers" will refer to particular Elements in memory, and so when memory is bound to the interface, it must be bound (or declared, in cases where multiple Elements come from the same Resource in memory) at the "register" expected by the Shader.

For Memory-to-Stage interfaces, Elements always provide to the Shader 4 components of data, with defaults provided for Elements in memory containing fewer than 4 components (though this can be masked to be any subset of the 4 components in the Shader if desired).

For interfaces on the "side", where memory Resources are bound to Shader Stages so they can be fetched from via Shader code, the set of binding points (t# registers in the Shader) cannot be dynamically indexed within the Shader program without using flow control.

On the other hand, the interface at the "top" of the Pipeline (the input v# registers of the first active Shader Stage) can be dynamically indexed as an array from Shader code. The Elements in v# registers being indexed must have a declaration(22.3.30) specifying each range that is to be indexed, where each range specifies a contiguous set of Elements/v# registers, ranges do not overlap, and the components declared for each Element in a given range are identical across the range.

The second type of interface is Stage-to-Stage, where one Pipeline Stage outputs a set of 4 component Elements (written to output o# registers) to the subsequent active Pipeline Stage, which receives Elements in its input v# registers. The mapping of output registers in one Stage to input registers in the next Stage is always direct; so a value written to o3 always goes to v3 in the subsequent Stage. Any subset of the 4 components of any Element can be declared rather than the whole thing.

If more Elements or components within Elements are output than are expected/declared for input by the subsequent Stage, the extra data gets discarded / becomes undefined. If fewer Elements or components within Elements are output than are expected/declared for input by the subsequent Stage, the missing data is undefined.

Similar to the Memory-to-Stage interface at the "top" of the Pipeline, which feeds the input v# registers of the first active Pipeline Stage, at a Stage-to-Stage interface, writes to output Elements (o#) and at the subsequent Stage, reads from input elements (v#) can each be dynamically indexed as arrays from code at the respective Shaders. The Elements in o# registers being indexed must have a declaration(22.3.30) for each range, specifying a contiguous set of Elements/o# registers, without overlapping, and with the same component masks declared for each Element in a given range. The same applies to input v# registers at the subsequent stage (the array declarations for the input v# registers in the Shader are independent/orthogonal to the array declarations for o# in the previous Shader).

There is a detail which is mostly orthogonal to the the Stage-to-Stage interface discussion above: the frequency of operation at subsequent Stages varies, in addition to different amounts of data different Stages can input. For example the Geometry Shader(13) inputs all the vertices for a primitive. The Pixel Shader(16) can choose to have its inputs inperpolated from vertices, or take the data from one. The point of the above discussion is only to describe the mechanism for Element transport through the interfaces independently of these varying frequencies of operation between Stages.

The final type of interface is Stage-to-Memory, where a Pipeline Stage outputs a set of 4 component Elements (written to output o# registers) on a path out to memory. These interfaces (e.g. to RenderTargets or Stream Output) are somewhat the converse of the Memory-to-Stage Interface. Each memory Resource representing one or more Elements of output identifies each Element by a number #, corresponding directly to an output o# register. There is no linkage resolving done on behalf of the application; the application must associate target memory for Element output directly with each o# register that will provide it. Details on specifying these associations are unique for the different Stage-to-Memory interfaces (RenderTargets, Stream Output).

If a Stage-to-Memory interface outputs more Elements or components within Elements than there are destination memory bindings to accommodate, the extra data is discarded. If a Stage-to-Memory interface outputs fewer Elements or components within Elements than there are destination memory bindings expecting to be written, undefined data will be output (i.e. no defaults). At RenderTarget output, there are various means to mask what data gets output, most interesting of which is depth testing, but that is outside the scope of this discussion.

At the RenderTarget output interface (which is Pixel Shader(16) output), dynamic indexing of the o# registers is not supported. For the other Stage-to-Memory interface, Stream Output, indexing of outputs is permissible. Stream Output shares the output o# registers used for Stage-to-Stage output in the Geometry Shader(13) Stage, where indexing is permitted as defined for the Stage-to-Stage interface.

There are various hardware generated values which can each be made available when for input to certain Shader Stages by declaring them for input to a component of an input register. A listing of each System Generated Value in D3D11.3 can be found in the System Generated Value Reference(23), but in addition, here are links to descriptions of some (not all) of the System Generated Values: VertexID(8.16), InstanceID(8.18), PrimitiveID(8.17), IsFrontFace(15.12).

In the Hull Shader(10), Domain Shader(12) and Geometry Shader(13), PrimitiveID is a special case that has its own input register, but for all other cases of inputting hardware generated values into Shaders, (including the PrimitiveID into the Pixel Shader(16)), the Shader must declare a scalar component of one of its input v# registers as one of the System Generated Values to receive each input value. If that v# register also has some components provided by a the previous Stage or Input Assembler(8), the hardware generated value can only be placed in one of the components after the rest of the data. For example if the Input Assembler provides v0.xz, then VertexID might be declared for v0.w (since w is after z), but not v0.y. There cannot be overlap between the target for generated values and the target for values arriving from an upstream Stage or the Input Assembler.

Hardware generated values that are input into the generic v# registers can only be input into the first active Pipeline Stage in a given Pipeline configuration that understands the particular value; from that point on it is the responsibility of the Shader to manually pass the values down if desired through output o# registers. If multiple Stages in the pipeline request a hardware generated value, only the first stage receives it, and at the subsequent stages, the declaration is ignored (though a prudent Shader programmer would pass down the value manually to correspond with the naming).

Since VertexID(8.16), InstanceID(8.18) are both meaningful at a vertex level, and IDs generated by hardware can only be fed into the the first stage that understands them, these ID values can only be fed into the Vertex Shader. PrimitiveID(8.17) generated by hardware can only be fed into the Hull Shader, Domain Shader, as well as whichever of the follwing is the first remaining active stage: Geometry Shader or Pixel Shader.

It is not legal to declare a range of input registers as indexable(22.3.30) if any of the registers in the range contains a System Generated Value.

From the API point of view, System Generated Values and System Interpreted Values (below) may be exposed to developers as just once concept: "System Values" "SV_*".

In many cases, hardware must be informed of the meaning of some of the application-provided or computed data moving through the D3D11.3 Pipeline, so the hardware may perform a fixed function operation using the data. The most obvious example is "position", which is interpreted by the Rasterizer (just before the Pixel Shader). Data flowing through the D3D11.3 Pipeline must be identified as a System Interpreted Value at the output interface between Stages where the hardware is expected to make use of the data. For the case where the Input Assembler(8) is the only Stage present in a Pipeline configuration before the place where the hardware is expected to interpret some data, the Input Assembler(8) has a mechanism for identifying System Interpreted Values to the relevant (components of) Elements it declares.

A listing of each System Interpreted Value in D3D11.3 can be found in the System Interpreted Values Reference(24). Each System Interpreted Value has typically one place in the Pipeline where it is meaningful to the hardware. Also, there may be constraints on how many components in an Element need to be present (such as .xyzw for "position" going to the Rasterizer).

If data produced by the Input Assembler or by the output o# registers of any Stage is identified as a System Interpreted Value at a point in the pipeline where the hardware has no use for interpreting the data, the label is silently ignored (and the data simply flows to the next active Stage uninterpreted). For example if the Input Assembler labels the xyzw components of one of the Elements it is producing as "position", but the first active Pipeline Stage is the Vertex Shader, the hardware ignores the label, since there is nothing for hardware to do with a "position" going into the Vertex Shader.

Just because data is tagged as a System Interpreted Value, telling hardware what to do with it, does not mean the hardware necessarily "consumes" the data. Any data flowing through the Pipeline (System Interpreted Value or not) can typically be input into the next Pipeline Stage's Shader regardless of whether the hardware did something with the data in between. In other words, output data identified as a System Interpreted Value is available to the subsequent Shader Stage if it chooses to input the data, no differently from non-System Interpreted Values. If there are exceptions, they would be described in the System Interpreted Value Reference(24). One catch is that if a given Pipeline Stage, or the Input Assembler, identifies a System Interpreted Value (e.g. "clipDistance"), and the next Shader Stage declares it wants to input that value, it must not only declare as input the appropriate register # and component(s), but also identify the input as the same System Interpreted Value (e.g. "clipDistance"). Mismatching declarations results in undefined behavior. e.g. Identifying an output o3.x as "clipDistance", but not naming a declared input at the next stage v3.x as "clipDistance" is bad. Of course, in this example it would be legal for the subsequent Shader to not declare v3.x for input at all.

It is not legal to declare a range of input or output registers as indexable(22.3.30) if any of the registers in the range contains a System Interpreted Value, with the exception of System Interpeted Values for the Tessellator, which have their own indexing rules - see the Hull Shader(10) specification.

Note that there is no mechanism in the hardware to identify things that the hardware does not care about, such as "texture coordinate" or "color". At a high level in the software stack, full naming of all data may or may not be present to assist in authoring and/or discoverability, but these issues are outside the scope of anything that hardware or drivers need to know about.

Note that while it may seem redundant to label System Interpreted Values at both the place producing the values as well as the next stage inputting it (in the case where the next stage actually wants to input it), this helps hardware/drivers isolate the compilation step for Shader programs at different Stages from any dependency on each other, in the event the driver needs to rename registers to fit hardware optimally, in a way that is transparent to the application.

From the API point of view, System Generated Values and System Interpreted Values (above) may be exposed to developers as just once concept, "System Values" "SV_*".

In many cases in D3D11.3, an offset for an Element is required, a stride for a structure (e.g. vertex) is required, or an initial offset for a Buffer is required. All of these types of values have the following alignment restrictions:

Example byte alignments for some of the formats(19.1) which can be used in structures (e.g. vertex buffers) or as elements in index buffers:

However, these alignment rules do not apply to Buffer offsets when creating Views on Buffers. These Buffer offsets have more stringent requirements, detailed in the View section(5.2).

There is also some similar discussion, focused on memory accesses common to UAVs(5.3.9), SRVs and Thread Group Shared Memory in the Memory Addressing and Alignment Issues(7.13) section.

None of these rules are validated (except in debug mode) and violations will result in undefined behavior.

Chapter Contents

(back to top)

5.1 Memory Structure

5.2 Resource Views

5.3 Resource Types and Pipeline Bindings

5.4 Resource Creation

5.5 Resource Dimensions

5.6 Resource Manipulation

5.7 Resource Discard

5.8 Per-Resource Mipmap Clamping

5.9 Tiled Resources