Method Overview

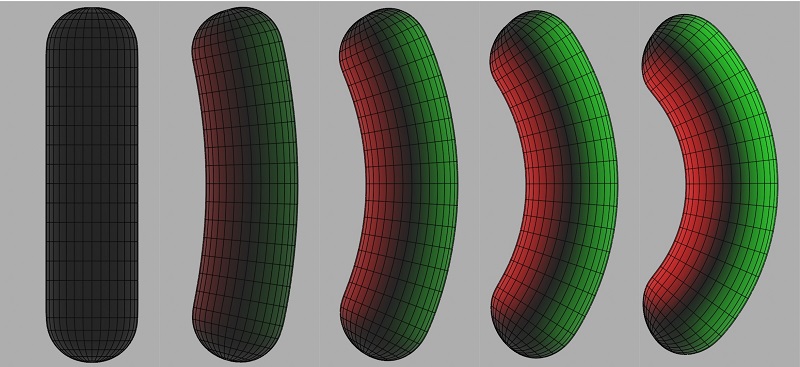

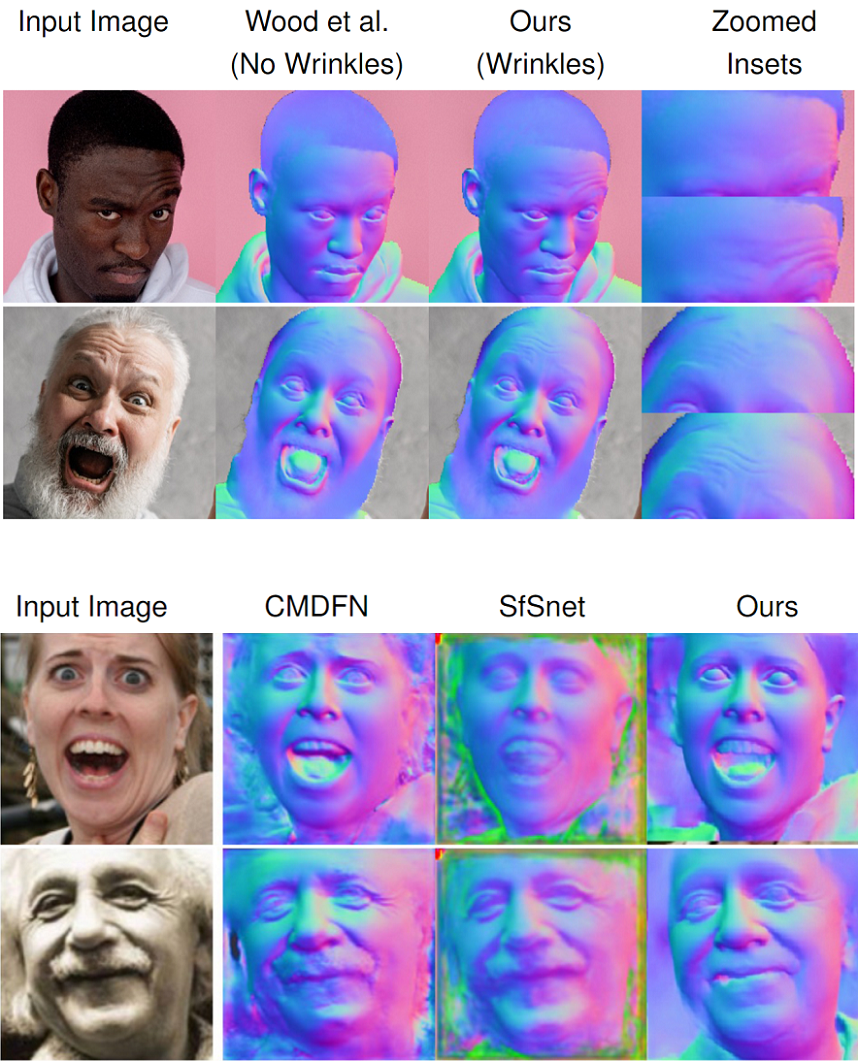

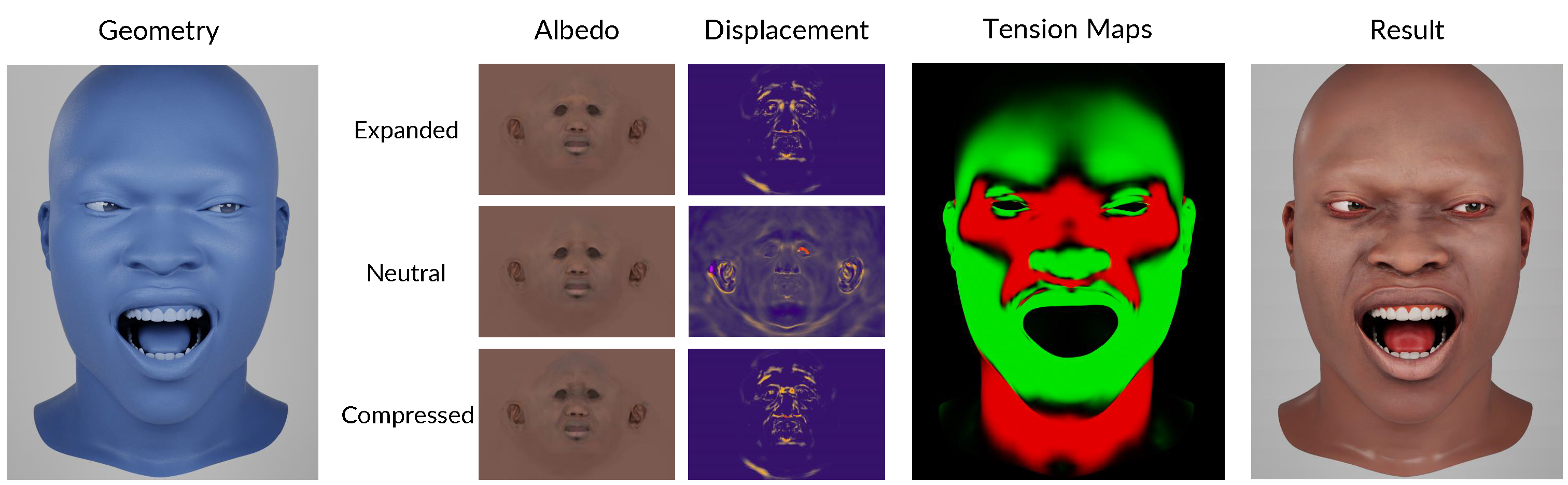

We build upon the synthetic face generation framework of Wood et al., which generates albedo and displacement textures using only the neutral-expression scan for an identity (middle row). In contrast, we automatically compute expanded and compressed texture maps (known collectively as wrinkle maps) that aggregate wrinkling effects in the face and neck regions across the available posed-expression scans for that identity. At synthesis time, for a given set of arbitrary expression parameters, we compute the local tension at every vertex of the corresponding face mesh (expansion is shown in green, compression in red). These per-vertex tension values serve as weights for dynamically blending between the neutral, expanded, and compressed texture maps to synthesize the wrinkling effect at each vertex. Because the blend is driven by mesh tension rather than by a fixed set of expressions, our method can generate wrinkles even for expressions beyond those represented in the source scans.
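The tension-driven blend described above can be illustrated with a minimal sketch. The overview does not specify how tension is measured or how it is mapped to weights, so everything here is an assumption made for illustration: tension is taken to be the mean relative change in length of the edges incident to a vertex, the weights are a clamped linear function of that value with a hypothetical `gain` parameter, and blending is done on one per-vertex texture sample rather than in UV space. The function names `vertex_tension`, `blend_weights`, and `blend_texel` are likewise hypothetical.

```python
import math


def vertex_tension(neutral, posed, edges):
    """Mean relative edge-length change per vertex (assumed tension measure).

    neutral, posed: lists of (x, y, z) vertex positions with equal length.
    edges: list of (i, j) vertex index pairs.
    Positive values indicate expansion, negative values compression.
    """
    total = [0.0] * len(neutral)
    count = [0] * len(neutral)
    for i, j in edges:
        rest = math.dist(neutral[i], neutral[j])
        cur = math.dist(posed[i], posed[j])
        rel = (cur - rest) / rest  # assumes no zero-length edges at rest
        for v in (i, j):
            total[v] += rel
            count[v] += 1
    # Vertices with no incident edges get zero tension.
    return [t / c if c else 0.0 for t, c in zip(total, count)]


def blend_weights(tension, gain=1.0):
    """Map a signed tension value to (neutral, expanded, compressed) weights.

    At most one of the expanded/compressed weights is non-zero, and the
    three weights always sum to 1.
    """
    w_exp = min(max(gain * tension, 0.0), 1.0)
    w_cmp = min(max(-gain * tension, 0.0), 1.0)
    return 1.0 - w_exp - w_cmp, w_exp, w_cmp


def blend_texel(tension, neutral_tx, expanded_tx, compressed_tx, gain=1.0):
    """Blend one texture sample (e.g. an RGB albedo or a displacement value)."""
    w_neu, w_exp, w_cmp = blend_weights(tension, gain)
    return tuple(
        w_neu * n + w_exp * e + w_cmp * c
        for n, e, c in zip(neutral_tx, expanded_tx, compressed_tx)
    )


# Toy example: three vertices on a line; the middle vertex moves to the right,
# stretching the first edge and shortening the second.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
posed = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (2.0, 0.0, 0.0)]
edges = [(0, 1), (1, 2)]
tension = vertex_tension(neutral, posed, edges)
```

In this toy case the first vertex sees only the stretched edge, the last sees only the shortened edge, and the middle vertex averages the two, so the three vertices would draw on the expanded, neutral, and compressed maps respectively. Because the weights depend only on the deformed geometry, the same blend applies to any expression the mesh can represent.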