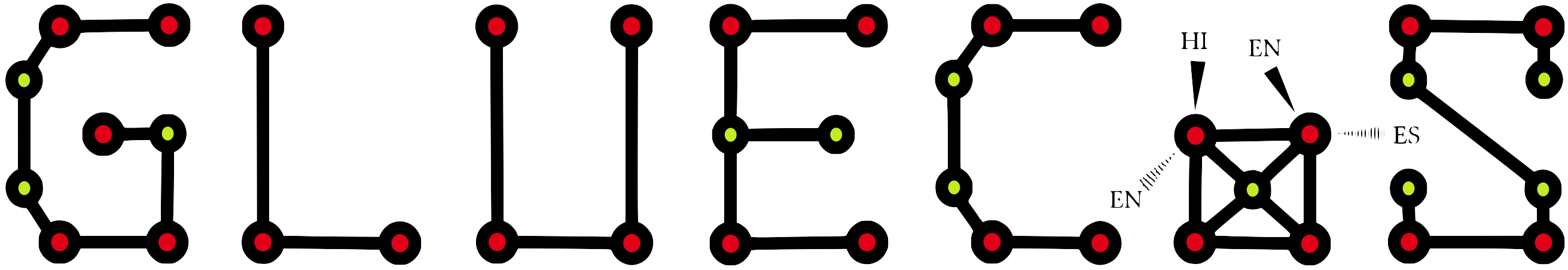

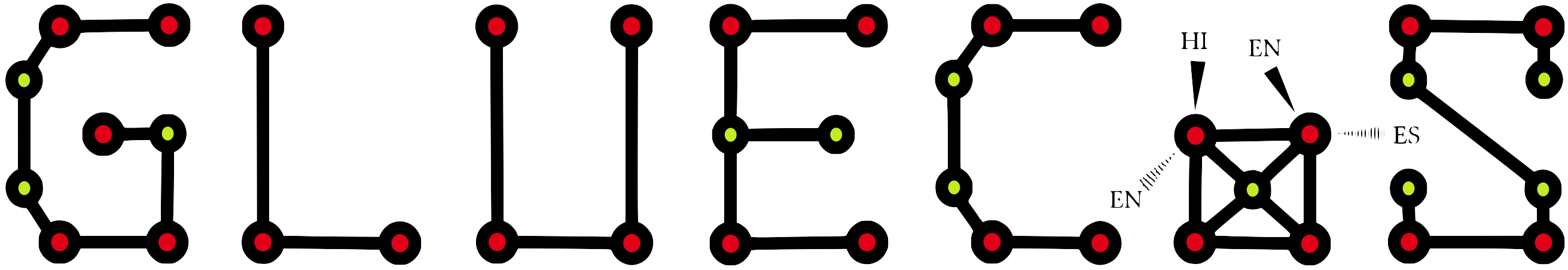

General Language Understanding and Evaluation for Code-Switching

GLUECoS is an evaluation benchmark for code-switched NLP. The current version of the benchmark has eleven datasets spanning six tasks and two language pairs (English-Hindi and English-Spanish). The tasks included in the benchmark are:

Scripts for data preprocessing and evaluation are available on our GitHub page. They can be used to download all the datasets, process them, and evaluate models on the benchmark.

To be included in the leaderboard, please follow the submission instructions in the README at the above link.

| Rank | Team | Model | Average |
|---|---|---|---|
| 1 (July 18, 2020) | Microsoft Research | mBERT | 72.80 |

| Rank | Team | Model | EN-ES | EN-HI |
|---|---|---|---|---|
| 1 (July 18, 2020) | Microsoft Research | mBERT | 96.44 | 95.18 |

| Rank | Team | Model | EN-ES | EN-HI FG | EN-HI UD |
|---|---|---|---|---|---|
| 1 (July 18, 2020) | Microsoft Research | mBERT | 93.98 | 64.14 | 87.68 |

| Rank | Team | Model | EN-ES | EN-HI |
|---|---|---|---|---|
| 1 (July 18, 2020) | Microsoft Research | mBERT | 60.66 | 76.95 |

| Rank | Team | Model | EN-ES | EN-HI |
|---|---|---|---|---|
| 1 (July 18, 2020) | Microsoft Research | mBERT | 63.02 | 57.51 |

| Rank | Team | Model | EN-HI |
|---|---|---|---|
| 1 (July 18, 2020) | Microsoft Research | mBERT | 62.23 |

| Rank | Team | Model | EN-HI |
|---|---|---|---|
| 1 (July 18, 2020) | Microsoft Research | mBERT | 57.74 |

Simran Khanuja, Microsoft Research India (work done during internship at MSRI)

Anirudh Srinivasan, Microsoft Research India

Sandipan Dandapat, Microsoft IDC

Sunayana Sitaram, Microsoft Research India

Monojit Choudhury, Microsoft Research India

Tanuja Ganu, Microsoft Research India

Kalika Bali, Microsoft Research India

Contact us: If you have any questions, or have worked with code-mixed datasets that could be part of this benchmark, email us at sunayana.sitaram@microsoft.com