Gestures

Gestures are input events based on human hands. There are two types of devices that raise gesture input events in MRTK:

- Windows Mixed Reality devices such as HoloLens. This describes pinching motions ("Air Tap") and tap-and-hold gestures.

  For more information on HoloLens gestures see the Windows Mixed Reality Gestures documentation.

  WindowsMixedRealityDeviceManager wraps the Unity XR.WSA.Input.GestureRecognizer to consume Unity's gesture events from HoloLens devices.

- Touch screen devices.

  UnityTouchController wraps the Unity Touch class that supports physical touch screens.

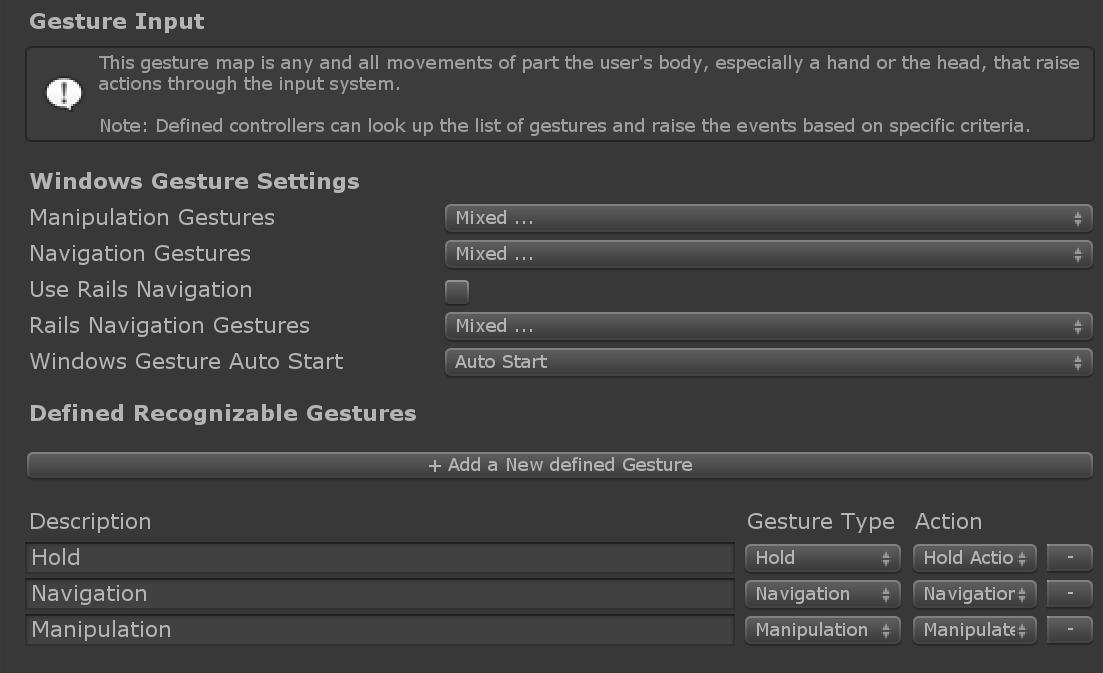

Both of these input sources use the Gesture Settings profile to translate Unity's Gesture and Touch events, respectively, into MRTK's Input Actions. This profile can be found under the Input System Settings profile.

Gesture events

Gesture events are received by implementing one of the gesture handler interfaces: IMixedRealityGestureHandler or IMixedRealityGestureHandler<TYPE> (see table of event handlers).

See Example Scene for an example implementation of a gesture event handler.
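As a minimal sketch of the non-generic interface, the component below logs Hold gesture events. The class name `GestureLogger` and the `holdAction` field are hypothetical; the field is assumed to be set in the Inspector to whichever input action the Gesture Settings profile maps to Hold. It also assumes the GameObject is able to receive input events (for example, it has a collider and is in focus).

```csharp
using Microsoft.MixedReality.Toolkit.Input;
using UnityEngine;

// Hypothetical example component, not part of MRTK.
public class GestureLogger : MonoBehaviour, IMixedRealityGestureHandler
{
    // Assumption: assigned in the Inspector to the action that the
    // Gesture Settings profile maps to the Hold gesture.
    [SerializeField]
    private MixedRealityInputAction holdAction = MixedRealityInputAction.None;

    public void OnGestureStarted(InputEventData eventData)
    {
        if (eventData.MixedRealityInputAction == holdAction)
        {
            Debug.Log("Hold: started");
        }
    }

    public void OnGestureUpdated(InputEventData eventData)
    {
        // Not needed for this sketch.
    }

    public void OnGestureCompleted(InputEventData eventData)
    {
        if (eventData.MixedRealityInputAction == holdAction)
        {
            Debug.Log("Hold: completed");
        }
    }

    public void OnGestureCanceled(InputEventData eventData)
    {
        if (eventData.MixedRealityInputAction == holdAction)
        {
            Debug.Log("Hold: canceled");
        }
    }
}
```

Comparing against an action assigned in the Inspector keeps the handler independent of the names chosen in a particular profile.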

When implementing the generic version, the OnGestureCompleted and OnGestureUpdated events can receive typed data of the following types:

- Vector2 - 2D position gesture. Produced by touch screens to inform of their deltaPosition.
- Vector3 - 3D position gesture. Produced by HoloLens to inform of:
  - cumulativeDelta of a manipulation event
  - normalizedOffset of a navigation event
- Quaternion - 3D rotation gesture. Available to custom input sources but not currently produced by any of the existing ones.
- MixedRealityPose - Combined 3D position/rotation gesture. Available to custom input sources but not currently produced by any of the existing ones.
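The typed variant can be sketched as follows for Vector3 data. The class name `TypedGestureLogger` is hypothetical, and the sketch only logs what it receives; whether a given value is a cumulativeDelta or a normalizedOffset depends on which gesture the action is mapped to in the Gesture Settings profile, so the action description is logged alongside it.

```csharp
using Microsoft.MixedReality.Toolkit.Input;
using UnityEngine;

// Hypothetical example component, not part of MRTK.
public class TypedGestureLogger : MonoBehaviour, IMixedRealityGestureHandler<Vector3>
{
    // Untyped callbacks inherited from IMixedRealityGestureHandler.
    public void OnGestureStarted(InputEventData eventData)
    {
        Debug.Log($"Started: {eventData.MixedRealityInputAction.Description}");
    }

    public void OnGestureUpdated(InputEventData eventData) { }

    public void OnGestureCompleted(InputEventData eventData) { }

    public void OnGestureCanceled(InputEventData eventData)
    {
        Debug.Log($"Canceled: {eventData.MixedRealityInputAction.Description}");
    }

    // Typed callbacks: InputData carries the Vector3 payload of the gesture.
    public void OnGestureUpdated(InputEventData<Vector3> eventData)
    {
        Debug.Log($"Updated: {eventData.MixedRealityInputAction.Description} = {eventData.InputData}");
    }

    public void OnGestureCompleted(InputEventData<Vector3> eventData)
    {
        Debug.Log($"Completed: {eventData.MixedRealityInputAction.Description} = {eventData.InputData}");
    }
}
```

A handler for touch screen input would look the same with Vector2 in place of Vector3.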

Order of events

There are two principal chains of events, depending on user input:

"Hold":

- Hold tap:
  - start Manipulation
- Hold tap beyond HoldStartDuration:
  - start Hold
- Release tap:
  - complete Hold
  - complete Manipulation

"Move":

- Hold tap:
  - start Manipulation
- Hold tap beyond HoldStartDuration:
  - start Hold
- Move hand beyond NavigationStartThreshold:
  - cancel Hold
  - start Navigation
- Release tap:
  - complete Manipulation
  - complete Navigation

Example scene

The HandInteractionGestureEventsExample (Assets/MRTK/Examples/Demos/HandTracking/Scenes) scene shows how to use the pointer Result to spawn an object at the hit location.

The GestureTester (Assets/MRTK/Examples/Demos/HandTracking/Script) script is an example implementation to visualize gesture events via GameObjects. The handler functions change the color of indicator objects and display the last recorded event in text objects in the scene.