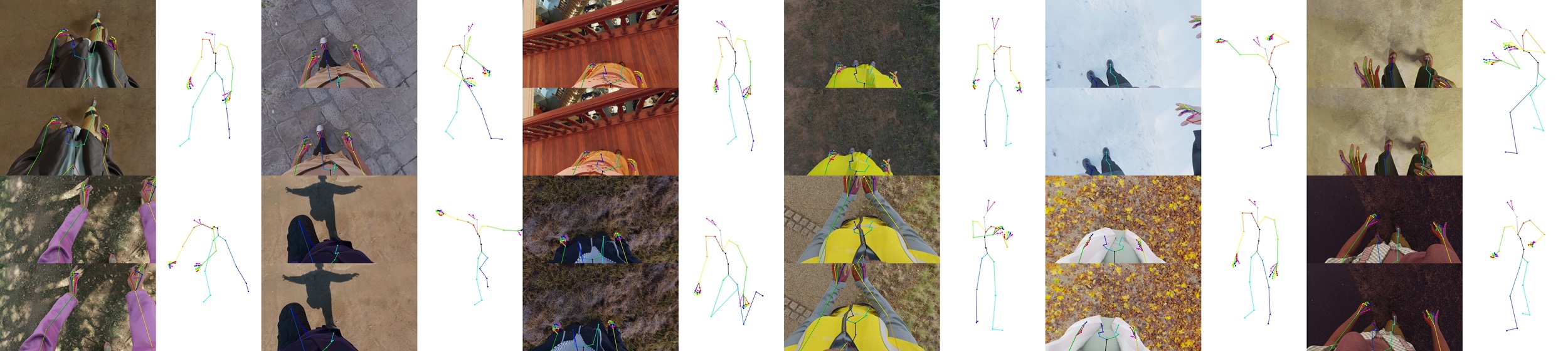

SynthEgo Dataset

To construct the SynthEgo dataset we render 60K stereo pairs at 1280×720 pixel resolution, building on the pipeline of Hewitt et al. This dataset is comprised of 6000 unique identities, each performing 10 different poses in 10 different lighting environments. Each identity is made up of a randomly sampled body shape, skin textures sampled from a library of 25 and randomly recolored, and clothing assets sampled from a library of 202. Lighting environments are sampled from a library of 489 HDRIs, to ensure correct disparity of the environment between the stereo pair, we project the HDRI background onto the ground plane. Poses are sampled from a library of over 2 million unique poses and randomly mirrored; sampling is weighted by the mean absolute joint angle and common poses like T-pose are significantly down-weighted to increase diversity.

| Mo2Cap2 | xR-EgoPose | UnrealEgo | SynthEgo | |

|---|---|---|---|---|

| Unique Identities | 700 | 46 | 17 | 6000 |

| Environments | Unspecified | Unspecified | 14 | 489 |

| Body Model | SMPL | Unspecified | UnrealEngine | SMPL-H |

| Lens Type | Fisheye | Fisheye | Fisheye | Rectilinear |

| Mono/Stereo | Mono | Mono | Stereo | Stereo |

| Body Shape GT | ✓ | |||

| Joint Location GT | ✓ | ✓ | ✓ | ✓ |

| Joint Rotation GT | ✓ | |||

| Realism | Low | Medium | High | High |

We position the camera on the front of the forehead looking down at the body. The camera uses a pinhole model approximating the ZED mini stereo. We add uniform noise within ±1 cm to the location and ±10° around all axes of rotation of the camera to simulate misplacement and movement of the HMD on the head. The resulting images are typically quite challenging for pose estimation, as many parts of the body are often not seen by the camera.