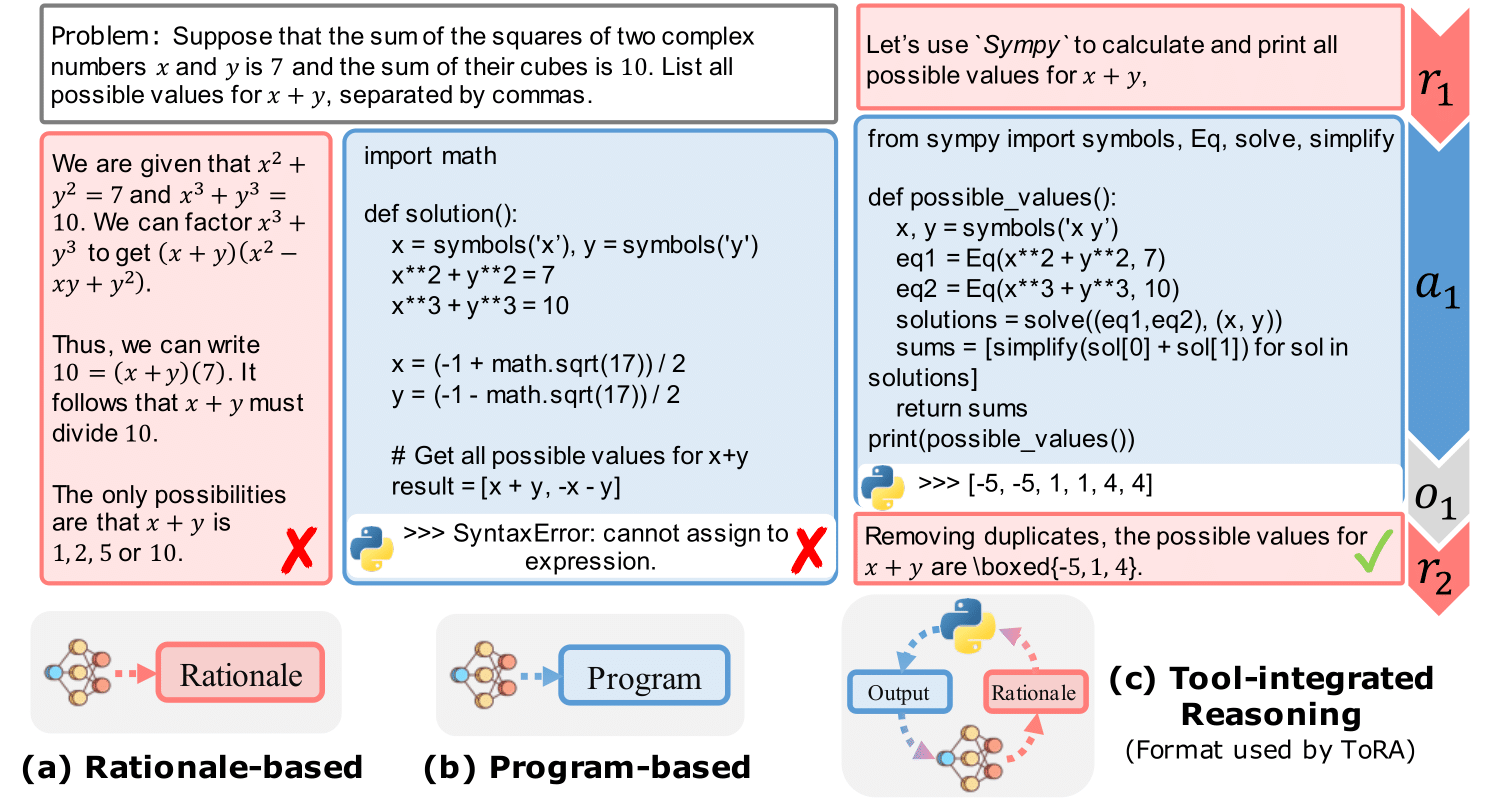

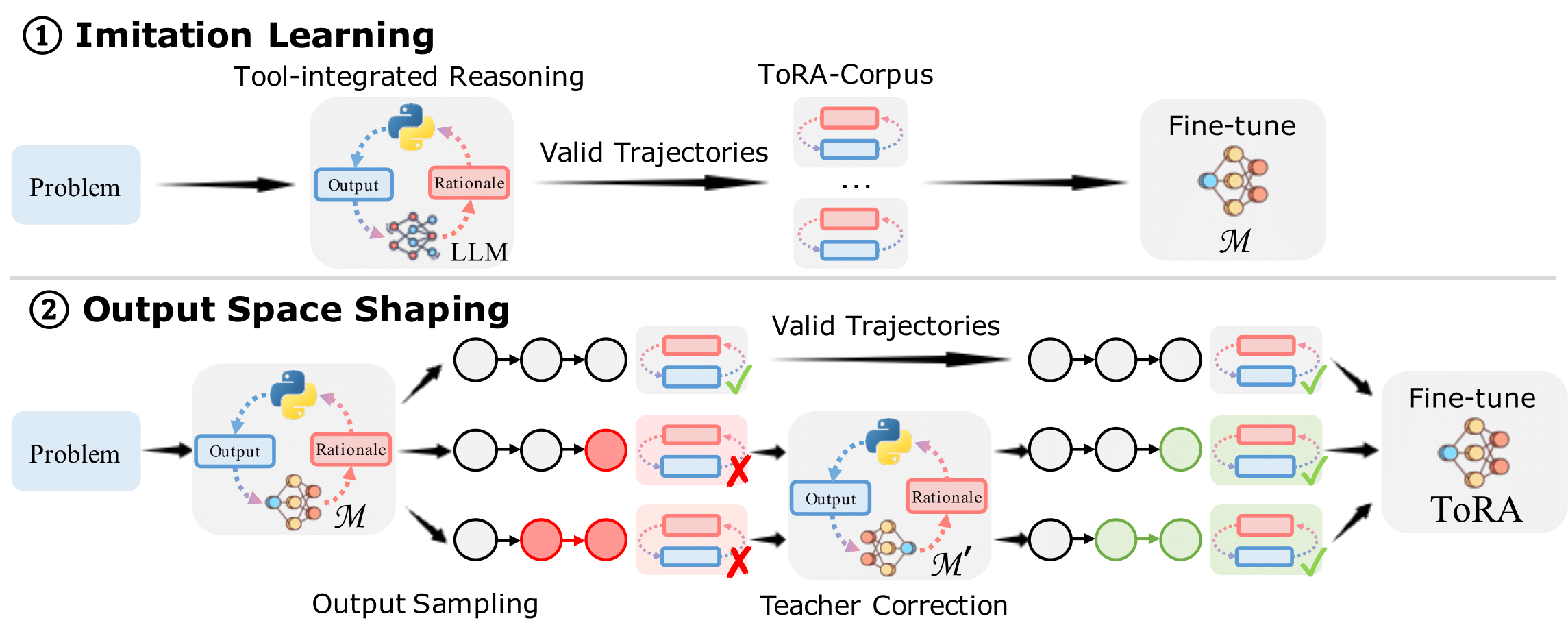

ToRA is a series of Tool-integrated Reasoning Agents designed to solve challenging mathematical reasoning problems by interacting with tools, e.g., computation libraries and symbolic solvers. The ToRA series seamlessly integrates natural language reasoning with the use of external tools, thereby combining the analytical prowess of language with the computational efficiency of external tools.
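As a rough illustration of the interleaving described above, the sketch below alternates a natural-language rationale with a program whose output is fed back into the trajectory. It is not ToRA's actual implementation: the names `run_program` and `interleave`, the step labels, and the hard-coded model output are all hypothetical, and plain Python `exec` stands in for the external tools.

```python
import contextlib
import io


def run_program(source: str) -> str:
    """Execute a Python snippet and return whatever it printed."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        # Fresh namespace per program; this sketch does no sandboxing.
        exec(source, {})
    return buffer.getvalue().strip()


def interleave(steps):
    """Copy the steps, appending an ("output", ...) step after each program."""
    trajectory = []
    for kind, text in steps:
        trajectory.append((kind, text))
        if kind == "program":
            trajectory.append(("output", run_program(text)))
    return trajectory


# Hypothetical model output: a rationale followed by a program to run.
steps = [
    ("rationale", "The sum of the first n positive integers is n(n+1)/2; "
                  "compute it for n = 100."),
    ("program", "n = 100\nprint(n * (n + 1) // 2)"),
]

trajectory = interleave(steps)
for kind, text in trajectory:
    print(f"[{kind}] {text}")
```

In a real agent the steps would come from a language model, and the tool output would be appended to the prompt so that the model can continue reasoning from it.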

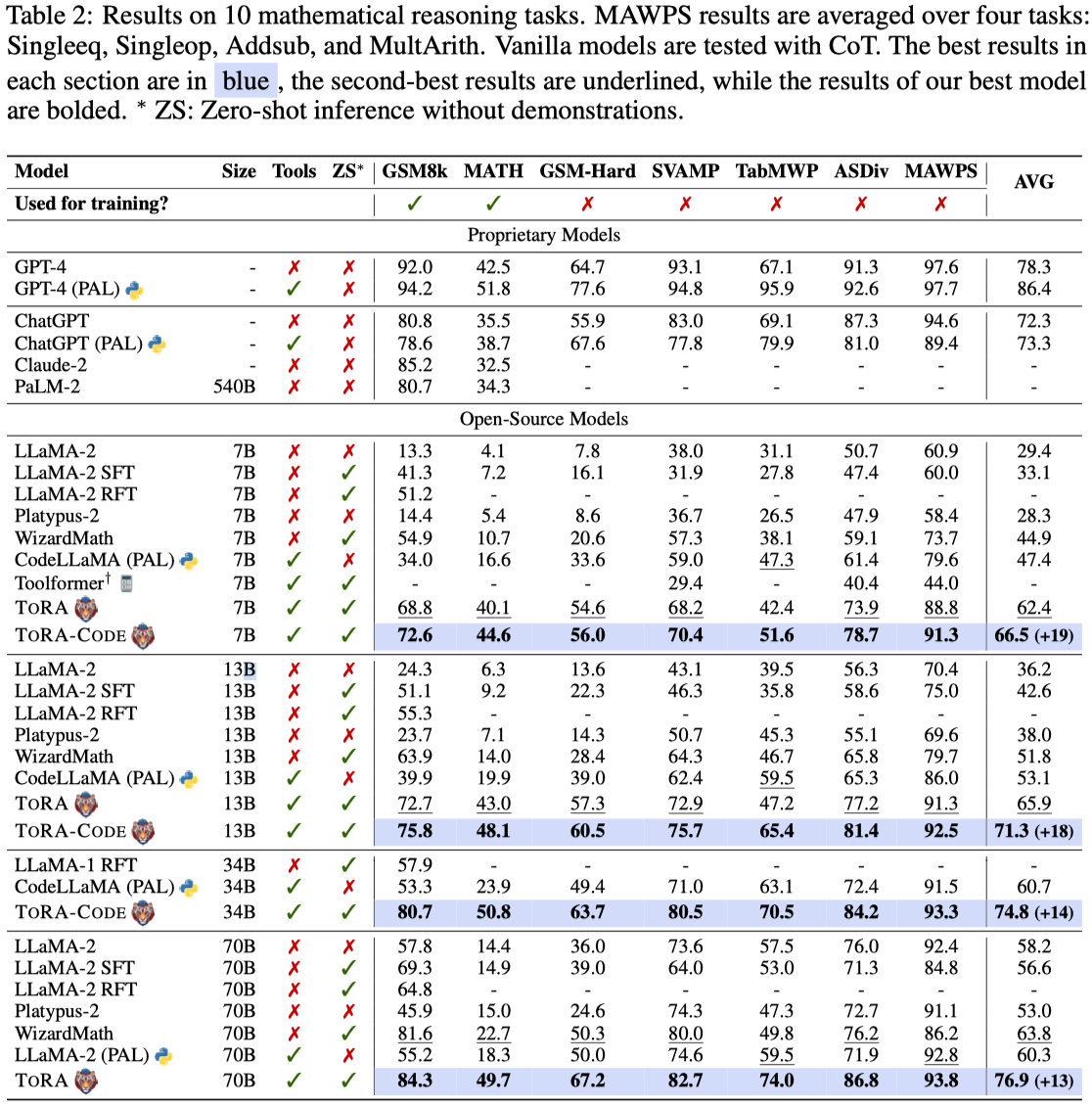

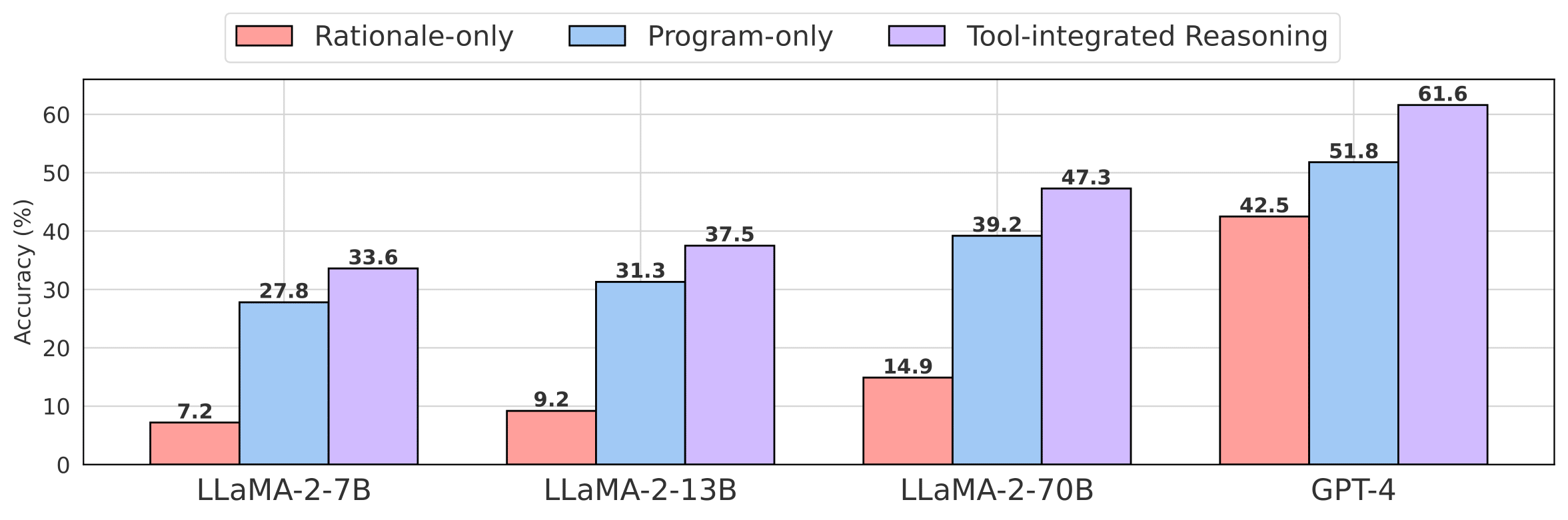

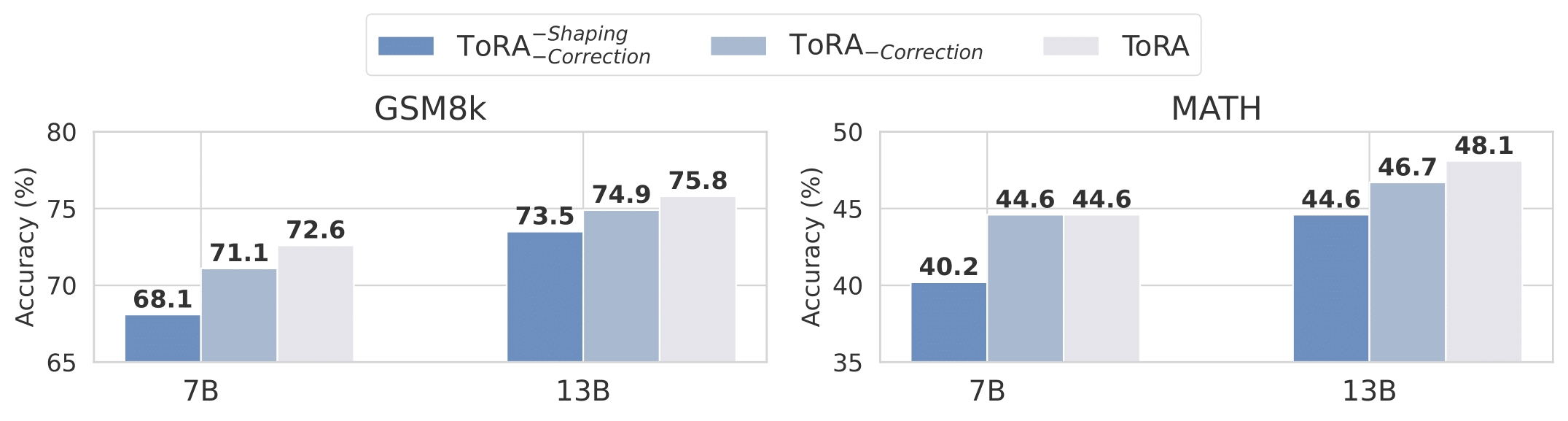

ToRA models significantly outperform open-source models on 10 mathematical reasoning datasets across all scales, with 13%-19% absolute improvements on average. Notably, ToRA-7B reaches 44.6% on the competition-level dataset MATH, surpassing the best open-source model, WizardMath-70B, by 22% absolute. ToRA-Code-34B is also the first open-source model to exceed 50% accuracy on MATH; it significantly outperforms GPT-4’s CoT result and is competitive with GPT-4 solving problems with programs.

ToRA:
