TL;DR

- AutoGen has received tremendous interest and recognition.

- AutoGen has many exciting new features and ongoing research.

Five months have passed since the initial spinoff of AutoGen from FLAML. What have we learned since then? What are the milestones achieved? What's next?

Background

AutoGen was motivated by two big questions:

- What are future AI applications like?

- How do we empower every developer to build them?

Last year, I worked with my colleagues and collaborators from Penn State University and University of Washington, on a new multi-agent framework, to enable the next generation of applications powered by large language models. We have been building AutoGen, as a programming framework for agentic AI, just like PyTorch for deep learning. We developed AutoGen in an open source project FLAML: a fast library for AutoML and tuning. After a few studies like EcoOptiGen and MathChat, in August, we published a technical report about the multi-agent framework. In October, we moved AutoGen from FLAML to a standalone repo on GitHub, and published an updated technical report.

Feedback

Since then, we've got new feedback every day, everywhere. Users have shown really high recognition of the new levels of capability enabled by AutoGen. For example, there are many comments like the following on X (Twitter) or YouTube.

Autogen gave me the same a-ha moment that I haven't felt since trying out GPT-3 for the first time.

I have never been this surprised since ChatGPT.

Many users have deep understanding of the value in different dimensions, such as the modularity, flexibility and simplicity.

The same reason autogen is significant is the same reason OOP is a good idea. Autogen packages up all that complexity into an agent I can create in one line, or modify with another.

Over time, more and more users share their experiences in using or contributing to autogen.

In our Data Science department Autogen is helping us develop a production ready multi-agents framework.

Sam Khalil, VP Data Insights & FounData, Novo Nordisk

When I built an interactive learning tool for students, I looked for a tool that could streamline the logistics but also give enough flexibility so I could use customized tools. AutoGen has both. It simplified the work. Thanks to Chi and his team for sharing such a wonderful tool with the community.

Yongsheng Lian, Professor at the University of Louisville, Mechanical Engineering

Exciting news: the latest AutoGen release now features my contribution… This experience has been a wonderful blend of learning and contributing, demonstrating the dynamic and collaborative spirit of the tech community.

Davor Runje, Cofounder @ airt / President of the board @ CISEx

With the support of a grant through the Data Intensive Studies Center at Tufts University, our group is hoping to solve some of the challenges students face when transitioning from undergraduate to graduate-level courses, particularly in Tufts' Doctor of Physical Therapy program in the School of Medicine. We're experimenting with Autogen to create tailored assessments, individualized study guides, and focused tutoring. This approach has led to significantly better results than those we achieved using standard chatbots. With the help of Chi and his group at Microsoft, our current experiments include using multiple agents in sequential chat, teachable agents, and round-robin style debate formats. These methods have proven more effective in generating assessments and feedback compared to other large language models (LLMs) we've explored. I've also used OpenAI Assistant agents through Autogen in my Primary Care class to facilitate student engagement in patient interviews through digital simulations. The agent retrieved information from a real patient featured in a published case study, allowing students to practice their interview skills with realistic information.

Benjamin D Stern, MS, DPT, Assistant Professor, Doctor of Physical Therapy Program, Tufts University School of Medicine

Autogen has been a game changer for how we analyze companies and products! Through collaborative discourse between AI Agents we are able to shave days off our research and analysis process.

Justin Trugman, Cofounder & Head of Technology at BetterFutureLabs

These are just a small fraction of examples. We have seen big enterprise customers’ interest from pretty much every vertical industry: Accounting, Airlines, Biotech, Consulting, Consumer Packaged Goods, Electronics, Entertainment, Finance, Fintech, Government, Healthcare, Manufacturer, Metals, Pharmacy, Research, Retailer, Social Media, Software, Supply Chain, Technology, Telecom…

AutoGen is used or contributed by companies, organizations, universities from A to Z, in all over the world. We have seen hundreds of example applications. Some organization uses AutoGen as the backbone to build their agent platform. Others use AutoGen for diverse scenarios, including research and investment to novel and creative applications of multiple agents.

Milestones

AutoGen has a large and active community of developers, researchers and AI practitioners.

- 22K+ stars on GitHub, 3K+ forks

- 14K+ members on Discord

- 100K+ downloads per months

- 3M+ views on Youtube (400+ community-generated videos)

- 100+ citations on Google Scholar

I am so amazed by their creativity and passion. I also appreciate the recognition and awards AutoGen has received, such as:

- Selected by TheSequence: My Five Favorite AI Papers of 2023

- Top trending repo on GitHub in Oct'23

- Selected into Open100: Top 100 Open Source achievements only 35 days after spinoff

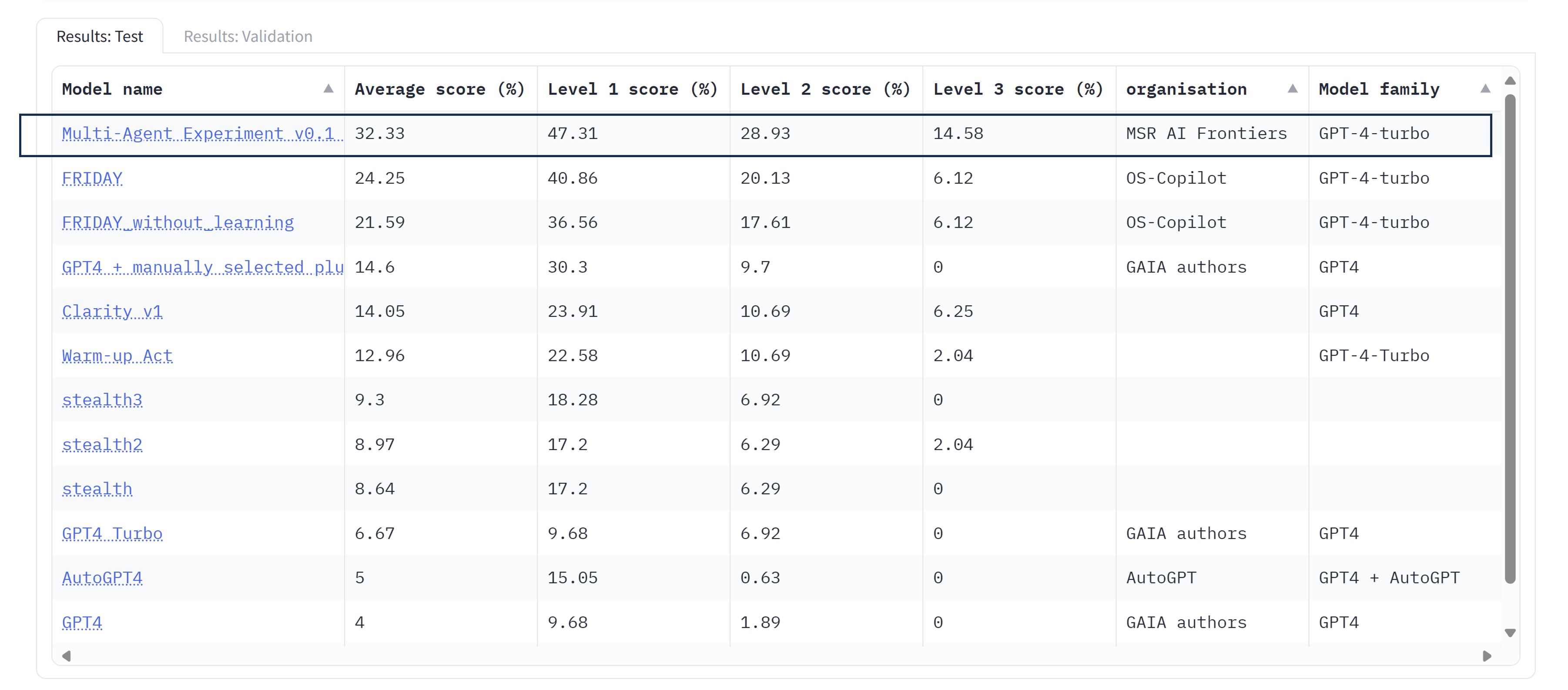

On March 1, the initial AutoGen multi-agent experiment on the challenging GAIA benchmark turned out to achieve the No. 1 accuracy with a big leap, in all the three levels.

That shows the big potential of using AutoGen in solving complex tasks. And it's just the beginning of the community's effort to answering a few hard open questions.

Open Questions

In the AutoGen technical report, we laid out a number of challenging research questions:

- How to design optimal multi-agent workflows?

- How to create highly capable agents?

- How to enable scale, safety and human agency?

The community has been working hard to address them in several dimensions:

- Evaluation. Convenient and insightful evaluation is the foundation of making solid progress.

- Interface. An intuitive, expressive and standardized interface is the prerequisite of fast experimentation and optimization.

- Optimization. Both the multi-agent interaction design (e.g., decomposition) and the individual agent capability need to be optimized to satisfy specific application needs.

- Integration. Integration with new technologies is an effective way to enhance agent capability.

- Learning/Teaching. Agentic learning and teaching are intuitive approaches for agents to optimize their performance, enable human agency and enhance safety.

New Features & Ongoing Research

Evaluation

We are working on agent-based evaluation tools and benchmarking tools. For example:

- AgentEval. Our research finds that LLM agents built with AutoGen can be used to automatically identify evaluation criteria and assess the performance from task descriptions and execution logs. It is demonstrated as a notebook example. Feedback and help are welcome for building it into the library.

- AutoGenBench. AutoGenBench is a commandline tool for downloading, configuring, running an agentic benchmark, and reporting results. It is designed to allow repetition, isolation and instrumentation, leveraging the new runtime logging feature.

These tools have been used for improving the AutoGen library as well as applications. For example, the new state-of-the-art performance achieved by a multi-agent solution to the GAIA benchmark has benefited from these evaluation tools.

Interface

We are making rapid progress in further improving the interface to make it even easier to build agent applications. For example:

- AutoBuild. AutoBuild is an ongoing area of research to automatically create or select a group of agents for a given task and objective. If successful, it will greatly reduce the effort from users or developers when using the multi-agent technology. It also paves the way for agentic decomposition to handle complex tasks. It is available as an experimental feature and demonstrated in two modes: free-form creation and selection from a library.

- AutoGen Studio. AutoGen Studio is a no-code UI for fast experimentation with the multi-agent conversations. It lowers the barrier of entrance to the AutoGen technology. Models, agents, and workflows can all be configured without writing code. And chatting with multiple agents in a playground is immediately available after the configuration. Although only a subset of

pyautogenfeatures are available in this sample app, it demonstrates a promising experience. It has generated tremendous excitement in the community. - Conversation Programming+. The AutoGen paper introduced a key concept of Conversation Programming, which can be used to program diverse conversation patterns such as 1-1 chat, group chat, hierarchical chat, nested chat etc. While we offered dynamic group chat as an example of high-level orchestration, it made other patterns relatively less discoverable. Therefore, we have added more convenient conversation programming features which enables easier definition of other types of complex workflow, such as finite state machine based group chat, sequential chats, and nested chats. Many users have found them useful in implementing specific patterns, which have been always possible but more obvious with the added features. I will write another blog post for a deep dive.

Learning/Optimization/Teaching

The features in this category allow agents to remember teachings from users or other agents long term, or improve over iterations. For example:

- AgentOptimizer. This research finds an approach of training LLM agents without modifying the model. As a case study, this technique optimizes a set of Python functions for agents to use in solving a set of training tasks. It is planned to be available as an experimental feature.

- EcoAssistant. This research finds a multi-agent teaching approach when using agents with different capacities powered by different LLMs. For example, a GPT-4 agent can teach a GPT-3.5 agent by demonstration. With this approach, one only needs 1/3 or 1/2 of GPT-4's cost, while getting 10-20% higher success rate than GPT-4 on coding-based QA. No finetuning is needed. All you need is a GPT-4 endpoint and a GPT-3.5-turbo endpoint. Help is appreciated to offer this technique as a feature in the AutoGen library.

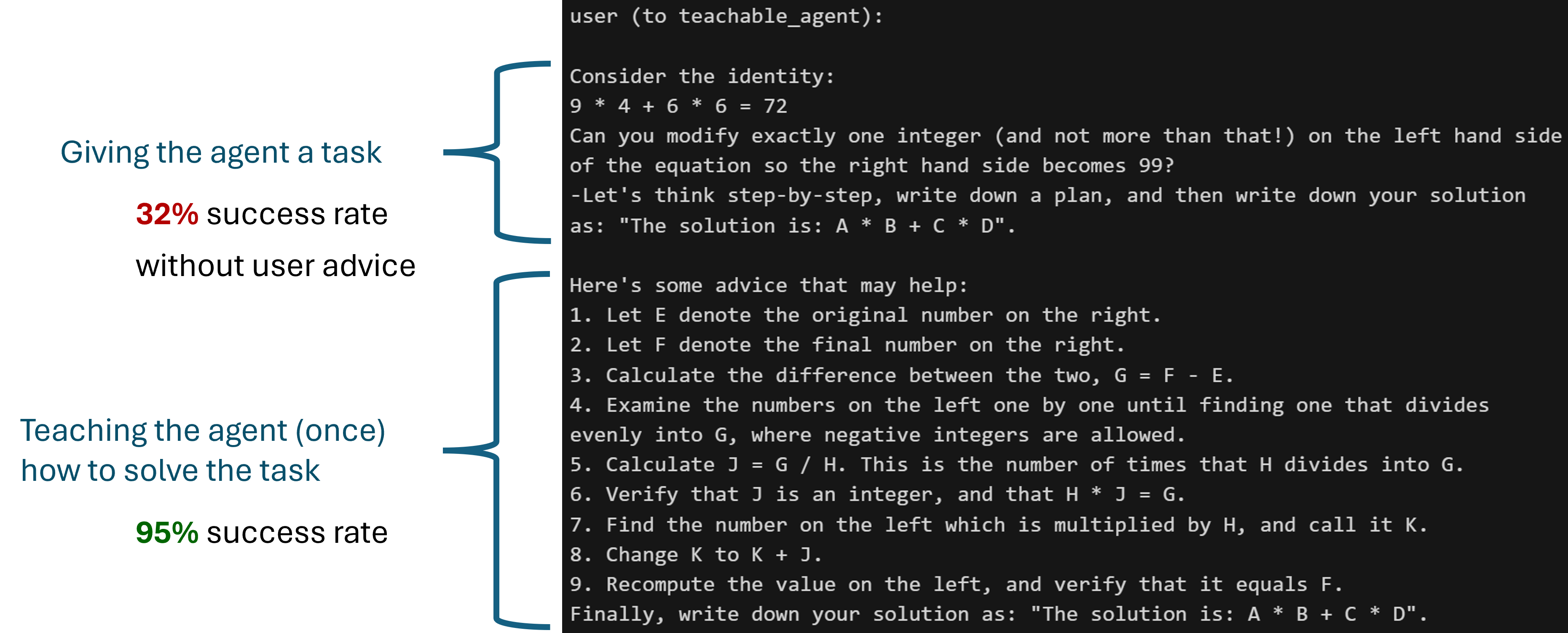

- Teachability. Every LLM agent in AutoGen can be made teachable, i.e., remember facts, preferences, skills etc. from interacting with other agents. For example, a user behind a user proxy agent can teach an assistant agent instructions in solving a difficult math problem. After teaching once, the problem solving rate for the assistant agent can have a dramatic improvement (e.g., 37% -> 95% for gpt-4-0613).

This feature works for GPTAssistantAgent (using OpenAI's assistant API) and group chat as well. One interesting use case of teachability + FSM group chat: teaching resilience.

This feature works for GPTAssistantAgent (using OpenAI's assistant API) and group chat as well. One interesting use case of teachability + FSM group chat: teaching resilience.

Integration

The extensible design of AutoGen makes it easy to integrate with new technologies. For example:

- Custom models and clients can be used as backends of an agent, such as Huggingface models and inference APIs.

- OpenAI assistant can be used as the backend of an agent (GPTAssistantAgent). It will be nice to reimplement it as a custom client to increase the compatibility with ConversableAgent.

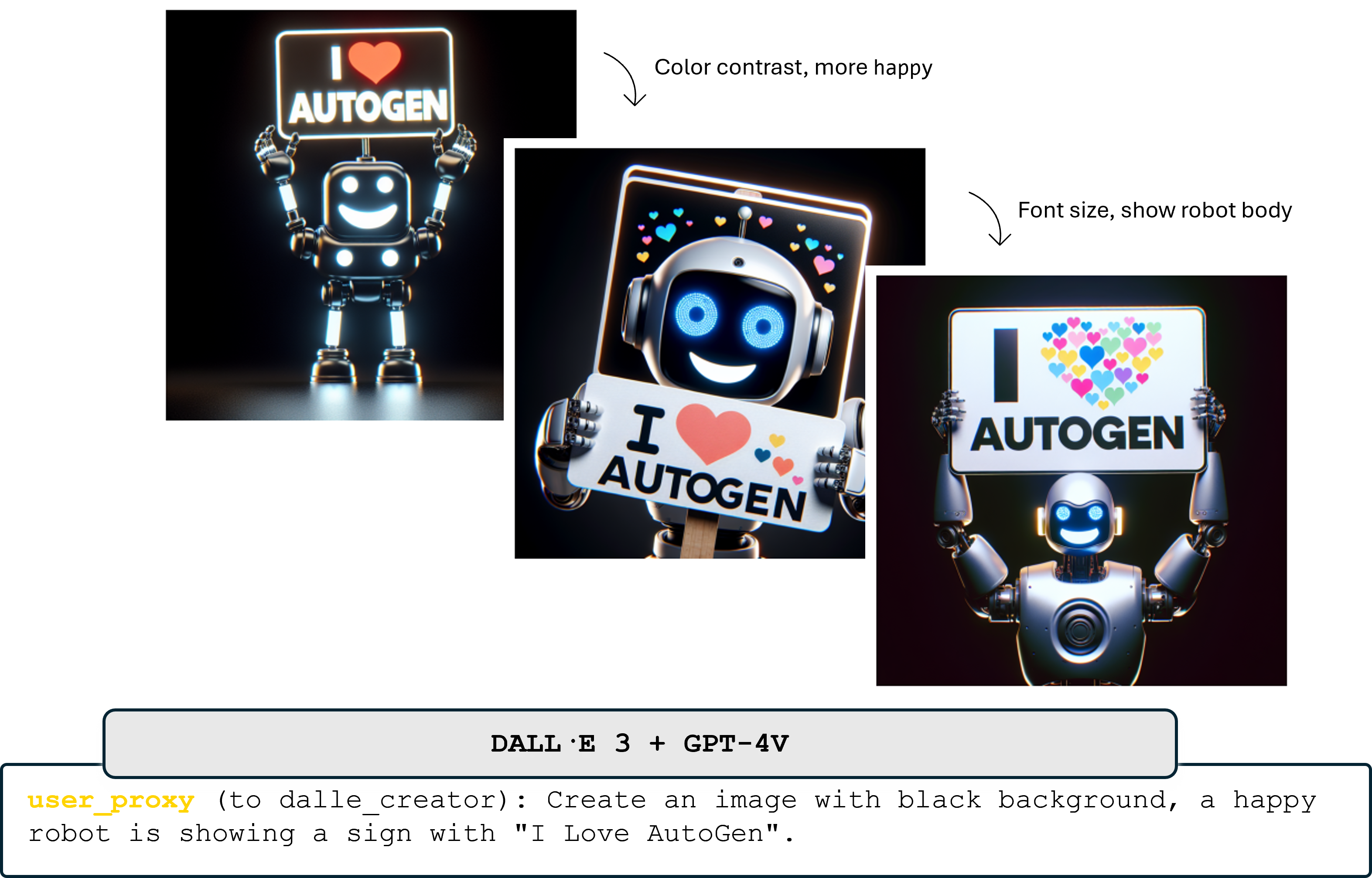

- Multimodality. LMM models like GPT-4V can be used to provide vision to an agent, and accomplish interesting multimodal tasks by conversing with other agents, including advanced image analysis, figure generation, and automatic iterative improvement in image generation.

The above only covers a subset of new features and roadmap. There are many other interesting new features, integration examples or sample apps:

- new features like stateful code execution, tool decorators, long context handling, web agents.

- integration examples like using guidance to generate structured response.

- sample apps like AutoAnny.

Call for Help

I appreciate the huge support from more than 14K members in the Discord community. Despite all the exciting progress, there are tons of open problems, issues and feature requests awaiting to be solved. We need more help to tackle the challenging problems and accelerate the development. You're all welcome to join our community and define the future of AI agents together.

Do you find this update helpful? Would you like to join force? Please join our Discord server for discussion.