Exercise 3: Advanced Pod Scheduling

Task 1 - Pod Affinity and Anti-Affinity

Create the affinity example manifest:

PowerShell:

```powershell
$affinityYaml = @"
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - web-app
            topologyKey: "kubernetes.io/hostname"
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - mongodb
              topologyKey: "kubernetes.io/hostname"
      containers:
      - name: web-app
        image: k8sonazureworkshoppublic.azurecr.io/nginx:latest
"@
```

Bash:

```bash
cat << EOF > web-app-affinity.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - web-app
            topologyKey: "kubernetes.io/hostname"
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - mongodb
              topologyKey: "kubernetes.io/hostname"
      containers:
      - name: web-app
        image: k8sonazureworkshoppublic.azurecr.io/nginx:latest
EOF
```

Output the file to review it:

PowerShell:

```powershell
$affinityYaml
```

Bash:

```bash
cat web-app-affinity.yaml
```

This manifest includes:

- `podAntiAffinity`: a required rule that ensures pods of the same app (`app: web-app`) don't run on the same node.
- `podAffinity`: a preferred rule that favours running on the same node as pods labelled `app: mongodb`.
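Because the `podAffinity` rule is *preferred*, it only adds to a node's score and never removes a node from consideration. The sketch below is a simplified Python model of that scoring, assuming `topologyKey: kubernetes.io/hostname` (so "near" means "on the same node") and only the `In` operator. The node names are hypothetical, and the real kube-scheduler also normalises this score and combines it with scores from other plugins.

```python
# Simplified model of *preferred* pod affinity scoring (not kube-scheduler code).
# Assumption: topologyKey is kubernetes.io/hostname, so "near" means "same node".


def matches(selector, labels):
    """True if every matchExpressions entry (operator In only) matches the labels."""
    return all(labels.get(expr["key"]) in expr["values"] for expr in selector)


def preferred_affinity_score(node, pods_by_node, terms):
    """Sum the weights of preferred terms satisfied by at least one pod on the node."""
    score = 0
    for term in terms:
        if any(matches(term["selector"], pod) for pod in pods_by_node.get(node, [])):
            score += term["weight"]
    return score


# The web-app manifest's single preferred term: weight 100, app In [mongodb].
terms = [{"weight": 100, "selector": [{"key": "app", "values": ["mongodb"]}]}]

# Hypothetical cluster state: only node-a runs a pod labelled app=mongodb.
pods_by_node = {"node-a": [{"app": "mongodb"}], "node-b": []}

for node in ["node-a", "node-b"]:
    print(node, preferred_affinity_score(node, pods_by_node, terms))
# node-a 100
# node-b 0
```

If no pod labelled `app: mongodb` exists, every node scores 0 for this term, so the term does not influence where the `web-app` pods land.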

Deploy the web application with affinity rules:

PowerShell:

```powershell
$affinityYaml | kubectl apply -f -
```

Bash:

```bash
kubectl apply -f web-app-affinity.yaml
```

Verify the deployment:

```
kubectl get pods -l app=web-app -o wide
```

Examine where pods were scheduled:

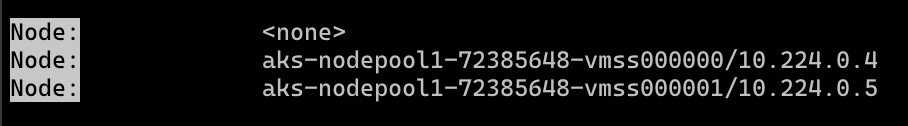

PowerShell:

```powershell
kubectl describe pods -l app=web-app | Select-String Node:
```

Bash:

```bash
kubectl describe pods -l app=web-app | grep Node:
```

Note which nodes the pods were scheduled to and how the anti-affinity rules were applied. One pod cannot be scheduled and remains in the `Pending` state: the cluster has only two nodes, and the anti-affinity rule requires each `web-app` pod to run on a different node.
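The Pending replica follows directly from the required rule: the scheduler filters out any node already running a `web-app` pod, and with two nodes only two replicas can pass that filter. A minimal Python sketch of that filter step, using hypothetical node names `node-a` and `node-b` (a simplified model, not kube-scheduler's implementation):

```python
# Simplified model of required pod anti-affinity with topologyKey
# kubernetes.io/hostname: a node is eligible only if it runs no pod with the
# same app label. Not the real kube-scheduler algorithm.


def schedule_with_anti_affinity(nodes, replicas, app):
    """Place replicas one at a time; return (placement per node, pending count)."""
    placement = {node: [] for node in nodes}
    pending = 0
    for _ in range(replicas):
        # Filter step: drop nodes that already run a pod of this app.
        eligible = [n for n in nodes if app not in placement[n]]
        if not eligible:
            pending += 1  # no node satisfies the rule, so the pod stays Pending
            continue
        placement[eligible[0]].append(app)
    return placement, pending


placement, pending = schedule_with_anti_affinity(["node-a", "node-b"], 3, "web-app")
print(placement)  # {'node-a': ['web-app'], 'node-b': ['web-app']}
print("pending:", pending)  # pending: 1
```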

Task 2 - Create a deployment with node affinity

Get a list of node names:

```
kubectl get nodes
```

Label one of your nodes (replace `NODE_NAME` with an actual node name):

```
kubectl label nodes NODE_NAME workload=frontend
```

Create the deployment with node affinity:

PowerShell:

```powershell
$frontendDeploy = @"
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: workload
                operator: In
                values:
                - frontend
      containers:
      - name: nginx
        image: k8sonazureworkshoppublic.azurecr.io/nginx:latest
        resources:
          requests:
            memory: "64Mi"
            cpu: "100m"
          limits:
            memory: "128Mi"
            cpu: "200m"
"@
$frontendDeploy | kubectl apply -f -
```

Bash:

```bash
cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: workload
                operator: In
                values:
                - frontend
      containers:
      - name: nginx
        image: k8sonazureworkshoppublic.azurecr.io/nginx:latest
        resources:
          requests:
            memory: "64Mi"
            cpu: "100m"
          limits:
            memory: "128Mi"
            cpu: "200m"
EOF
```

Verify the deployment is running on the labelled node:

```
kubectl get pods -l app=frontend -o wide
```

All pods should be running on the node you labelled.
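Required node affinity works as a filter: `nodeSelectorTerms` are ORed together and the `matchExpressions` inside one term are ANDed, so a node qualifies if it satisfies every expression of at least one term. The Python sketch below models only the `In` and `Exists` operators and uses hypothetical node names; it is a simplified illustration rather than the scheduler's own code.

```python
# Simplified model of requiredDuringSchedulingIgnoredDuringExecution node
# affinity: nodeSelectorTerms are ORed, the matchExpressions inside one term
# are ANDed. Only the In and Exists operators are modelled in this sketch.


def expression_matches(expr, node_labels):
    key, op = expr["key"], expr["operator"]
    if op == "In":
        return node_labels.get(key) in expr["values"]
    if op == "Exists":
        return key in node_labels
    raise ValueError(f"operator {op!r} not modelled in this sketch")


def node_matches(terms, node_labels):
    """True if at least one term has all of its expressions satisfied."""
    return any(
        all(expression_matches(e, node_labels) for e in term["matchExpressions"])
        for term in terms
    )


# The frontend manifest's single term: workload In [frontend].
terms = [{"matchExpressions": [
    {"key": "workload", "operator": "In", "values": ["frontend"]}]}]

# Hypothetical nodes: only node-a carries the label added with kubectl label.
nodes = {"node-a": {"workload": "frontend"}, "node-b": {}}
print([n for n, labels in nodes.items() if node_matches(terms, labels)])  # ['node-a']
```

If no node carried `workload=frontend`, no node would pass the filter and every replica would stay `Pending`.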

Task 3 - Taints and Tolerations

Get a list of node names:

```
kubectl get nodes
```

Apply a taint to a node (replace `NODE_NAME` with an actual node name):

```
kubectl taint nodes NODE_NAME dedicated=analytics:NoSchedule
```

Deploy the application with tolerations:

PowerShell:

```powershell
$tolerationsYaml = @"
apiVersion: apps/v1
kind: Deployment
metadata:
  name: analytics-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: analytics
  template:
    metadata:
      labels:
        app: analytics
    spec:
      tolerations:
      - key: "dedicated"
        operator: "Equal"
        value: "analytics"
        effect: "NoSchedule"
      containers:
      - name: analytics-app
        image: k8sonazureworkshoppublic.azurecr.io/busybox
        command: ["sh", "-c", "while true; do echo analytics processing; sleep 10; done"]
        resources:
          requests:
            memory: "64Mi"
            cpu: "100m"
          limits:
            memory: "128Mi"
            cpu: "200m"
"@
$tolerationsYaml | kubectl apply -f -
```

Bash:

```bash
cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: analytics-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: analytics
  template:
    metadata:
      labels:
        app: analytics
    spec:
      tolerations:
      - key: "dedicated"
        operator: "Equal"
        value: "analytics"
        effect: "NoSchedule"
      containers:
      - name: analytics-app
        image: k8sonazureworkshoppublic.azurecr.io/busybox
        command: ["sh", "-c", "while true; do echo analytics processing; sleep 10; done"]
        resources:
          requests:
            memory: "64Mi"
            cpu: "100m"
          limits:
            memory: "128Mi"
            cpu: "200m"
EOF
```

Verify pod placement:

```
kubectl get pods -l app=analytics -o wide
```

The pods are typically spread across both nodes, including the tainted node, because they have the required toleration. A toleration permits scheduling onto the tainted node; it does not force it.

Test the effect of the taint by creating a pod without tolerations:

PowerShell:

```powershell
$noTolerationPod = @"
apiVersion: v1
kind: Pod
metadata:
  name: no-toleration-pod
spec:
  containers:
  - name: nginx
    image: k8sonazureworkshoppublic.azurecr.io/nginx
"@
$noTolerationPod | kubectl apply -f -
```

Bash:

```bash
cat << EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: no-toleration-pod
spec:
  containers:
  - name: nginx
    image: k8sonazureworkshoppublic.azurecr.io/nginx
EOF
```

Check where this pod was scheduled:

```
kubectl get pod no-toleration-pod -o wide
```

The pod should not be scheduled onto the tainted node, because it has no toleration for the `dedicated=analytics:NoSchedule` taint.
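Both placement results in this task come from the same check: for each `NoSchedule` taint on a node, the pod needs at least one matching toleration. A simplified Python model of that check, using the `dedicated=analytics:NoSchedule` taint from this task (only the `NoSchedule` effect is modelled here; `NoExecute` and `PreferNoSchedule` are left out):

```python
# Simplified model of NoSchedule taint filtering (not kube-scheduler code).
# A toleration matches a taint when the effects match (an empty effect matches
# every effect), the keys match (an empty key with Exists matches every key)
# and, for operator Equal (the default), the values are equal.


def tolerates(tol, taint):
    """True if a single toleration matches a single taint."""
    if tol.get("effect") and tol["effect"] != taint["effect"]:
        return False
    operator = tol.get("operator", "Equal")
    if not tol.get("key"):
        return operator == "Exists"
    if tol["key"] != taint["key"]:
        return False
    return operator == "Exists" or tol.get("value") == taint.get("value")


def can_schedule(tolerations, node_taints):
    """A pod fits only if every NoSchedule taint on the node is tolerated."""
    return all(
        any(tolerates(t, taint) for t in tolerations)
        for taint in node_taints
        if taint["effect"] == "NoSchedule"
    )


taint = {"key": "dedicated", "value": "analytics", "effect": "NoSchedule"}
analytics_tol = [{"key": "dedicated", "operator": "Equal",
                  "value": "analytics", "effect": "NoSchedule"}]

print(can_schedule(analytics_tol, [taint]))  # True  (analytics-app pods)
print(can_schedule([], [taint]))             # False (no-toleration-pod)
```

Because the operator is `Equal`, changing the toleration's `value` to anything other than `analytics` would also make `can_schedule` return `False`.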

Remove the taint from the node:

```
kubectl taint nodes NODE_NAME dedicated=analytics:NoSchedule-
```

The trailing `-` removes the taint rather than adding it.

Task 4 - Pod Topology Spread Constraints

Create a deployment with topology spread constraints:

PowerShell:

```powershell
$spreadDemo = @"
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spread-demo
spec:
  replicas: 6
  selector:
    matchLabels:
      app: spread-demo
  template:
    metadata:
      labels:
        app: spread-demo
    spec:
      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: kubernetes.io/hostname
        whenUnsatisfiable: DoNotSchedule
        labelSelector:
          matchLabels:
            app: spread-demo
      containers:
      - name: nginx
        image: k8sonazureworkshoppublic.azurecr.io/nginx
"@
$spreadDemo | kubectl apply -f -
```

Bash:

```bash
cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spread-demo
spec:
  replicas: 6
  selector:
    matchLabels:
      app: spread-demo
  template:
    metadata:
      labels:
        app: spread-demo
    spec:
      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: kubernetes.io/hostname
        whenUnsatisfiable: DoNotSchedule
        labelSelector:
          matchLabels:
            app: spread-demo
      containers:
      - name: nginx
        image: k8sonazureworkshoppublic.azurecr.io/nginx
EOF
```

Verify the pod distribution:

```
kubectl get pods -l app=spread-demo -o wide
```

The pods should be spread evenly across your nodes because of the topology spread constraint. With two nodes and `maxSkew: 1`, expect three pods on each node.
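With `whenUnsatisfiable: DoNotSchedule`, a node is eligible only if placing the pod there keeps the skew (that node's matching-pod count plus one, minus the lowest count across nodes) at or below `maxSkew`. A simplified Python model of that filter, with hypothetical node names. Among eligible nodes it simply picks the one with the fewest pods, whereas the real scheduler scores them.

```python
# Simplified model of a topologySpreadConstraint with
# whenUnsatisfiable: DoNotSchedule and topologyKey kubernetes.io/hostname
# (one topology domain per node). Not the real kube-scheduler algorithm.


def spread(nodes, replicas, max_skew=1):
    """Place replicas one at a time and return the pod count per node."""
    counts = {n: 0 for n in nodes}
    for _ in range(replicas):
        lowest = min(counts.values())
        # Filter: skew = (count on this node + 1) - lowest count must be <= max_skew.
        # The node holding the lowest count always passes when max_skew >= 1,
        # so eligible is never empty in this model.
        eligible = [n for n in nodes if counts[n] + 1 - lowest <= max_skew]
        # Stand-in for scoring: choose the eligible node with the fewest pods.
        target = min(eligible, key=lambda n: counts[n])
        counts[target] += 1
    return counts


print(spread(["node-a", "node-b"], 6))  # {'node-a': 3, 'node-b': 3}
```

With three nodes, the same call places two pods on each node.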

Task 5 - Cleanup

Clean up the resources created in this exercise:

```
kubectl delete deployment web-app frontend spread-demo analytics-app
kubectl delete pod no-toleration-pod
```