Exercise 6: Scaling

In this exercise, you’ll implement and test scaling mechanisms in your AKS cluster, including the Horizontal Pod Autoscaler (HPA) and the Cluster Autoscaler, and learn how to optimize resource usage and performance.

Task 1: Implementing Horizontal Pod Autoscaler (HPA)

The Horizontal Pod Autoscaler automatically scales the number of pods in a deployment or stateful set based on observed CPU utilization or other metrics.
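The scaling decision can be approximated with the replica formula given in the Kubernetes HPA documentation: desired replicas = ceil(current replicas × current metric / target metric). The sketch below is only an illustration in shell, not the controller's implementation. The function name `desired_replicas` is hypothetical, and the sketch ignores the controller's tolerance band, stabilization window, and min/max clamping.

```shell
# desired_replicas CURRENT_REPLICAS CURRENT_UTILIZATION TARGET_UTILIZATION
# Hypothetical helper: integer ceiling of current * utilization / target.
desired_replicas() {
  current="$1"
  utilization="$2"
  target="$3"
  echo $(( (current * utilization + target - 1) / target ))
}

# Example: 1 replica averaging 250% of its CPU request, with a 50% target.
desired_replicas 1 250 50   # prints 5
```

CPU utilization is measured relative to each pod's CPU request, so a 50% target on a 200m request corresponds to an average of roughly 100m of CPU per pod.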

Deploy a PHP Apache server with the apache image that includes a CPU-intensive endpoint:

    kubectl create deployment php-apache --image=k8s.gcr.io/hpa-example
    kubectl set resources deployment php-apache --requests=cpu=200m --limits=cpu=500m
    kubectl expose deployment php-apache --port=80

Create a Horizontal Pod Autoscaler:

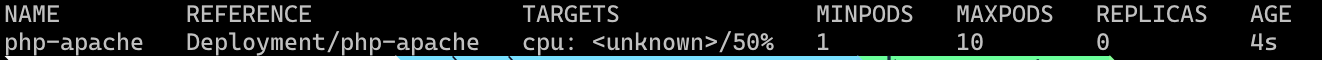

kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10Check the status of the HPA:

kubectl get hpakubectl get hpaYou should see something like this:

This indicates that the HPA is monitoring the

php-apachedeployment and will scale it based on CPU usage.Generate load on the service:

    kubectl run load-generator --image=busybox --rm -it -- /bin/sh -c "while true; do wget -q -O- http://php-apache; done"

Open another terminal and watch the HPA scale the pods:

    kubectl get hpa php-apache --watch

You should see the CPU load increase and the number of replicas increase accordingly.

To stop the load, go back to the terminal running the load generator and press Ctrl+C.

Watch the HPA scale back down over the next few minutes:

    kubectl get hpa php-apache --watch
    kubectl get pods
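The `kubectl autoscale` command used in this task can also be written declaratively. The sketch below assumes the cluster serves the `autoscaling/v2` API (generally available since Kubernetes 1.23). The function name `hpa_manifest` is hypothetical; it only prints the manifest so that it can be reviewed before it is applied.

```shell
# Hypothetical helper: print an HPA manifest equivalent to
#   kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10
hpa_manifest() {
  cat <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
EOF
}

# Print the manifest for review. To apply it instead:
#   hpa_manifest | kubectl apply -f -
hpa_manifest
```

Keeping the HPA as a manifest makes it easier to version alongside the deployment than a one-off imperative command.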

Task 2: Implementing Cluster Autoscaler with a Dedicated Node Pool

Our current system node pool is set to manual scaling, so we’ll create a dedicated node pool with autoscaling enabled.

Create a new node pool with autoscaling enabled:

PowerShell:

    $NODEPOOL_NAME = "scalepool"
    az aks nodepool add `
      --resource-group $RESOURCE_GROUP `
      --cluster-name $AKS_NAME `
      --name $NODEPOOL_NAME `
      --node-count 1 `
      --enable-cluster-autoscaler `
      --min-count 1 `
      --max-count 3 `
      --node-vm-size $VM_SKU `
      --labels purpose=autoscale

Bash:

    NODEPOOL_NAME="scalepool"
    az aks nodepool add \
      --resource-group $RESOURCE_GROUP \
      --cluster-name $AKS_NAME \
      --name $NODEPOOL_NAME \
      --node-count 1 \
      --enable-cluster-autoscaler \
      --min-count 1 \
      --max-count 3 \
      --node-vm-size $VM_SKU \
      --labels purpose=autoscale

Wait for the node pool to be ready:

PowerShell:

    az aks nodepool show `
      --resource-group $RESOURCE_GROUP `
      --cluster-name $AKS_NAME `
      --name $NODEPOOL_NAME `
      --query provisioningState -o tsv

Bash:

    az aks nodepool show \
      --resource-group $RESOURCE_GROUP \
      --cluster-name $AKS_NAME \
      --name $NODEPOOL_NAME \
      --query provisioningState -o tsv

Wait until the output shows “Succeeded” before proceeding.
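Rather than re-running the status command by hand, the check can be wrapped in a small polling loop. This is a minimal sketch for Bash. The helper name `wait_for_value` and the interval and attempt counts in the usage comment are assumptions for illustration, not part of the Azure CLI.

```shell
# wait_for_value EXPECTED INTERVAL_SECONDS MAX_ATTEMPTS COMMAND [ARGS...]
# Hypothetical helper: re-run COMMAND until its output equals EXPECTED,
# or give up after MAX_ATTEMPTS tries.
wait_for_value() {
  expected="$1"
  interval="$2"
  max_attempts="$3"
  shift 3
  attempt=0
  current=""
  while [ "$attempt" -lt "$max_attempts" ]; do
    # Errors from the command are ignored and treated as "not ready yet".
    current="$("$@" 2>/dev/null)" || true
    if [ "$current" = "$expected" ]; then
      echo "$current"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$interval"
  done
  echo "timed out; last value: $current" >&2
  return 1
}

# Usage with the status command from this step (poll every 15 s, up to 40 times):
#   wait_for_value Succeeded 15 40 az aks nodepool show \
#     --resource-group "$RESOURCE_GROUP" --cluster-name "$AKS_NAME" \
#     --name "$NODEPOOL_NAME" --query provisioningState -o tsv
```

The function returns a non-zero exit code on timeout, so it can gate later steps in a script.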

Validate that the new node pool shows up, with a single node currently deployed:

    kubectl get nodes

Deploy a resource-intensive application that will target our new node pool:

PowerShell:

    $resourceConsumerYaml = @"
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: resource-consumer
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: resource-consumer
      template:
        metadata:
          labels:
            app: resource-consumer
        spec:
          nodeSelector:
            purpose: autoscale
          containers:
          - name: resource-consumer
            image: k8sonazureworkshoppublic.azurecr.io/k8s.gcr.io/pause:3.1
            resources:
              requests:
                cpu: 500m
                memory: 512Mi
              limits:
                cpu: 1000m
                memory: 1Gi
    "@
    $resourceConsumerYaml | kubectl apply -f -

Bash:

    cat << EOF | kubectl apply -f -
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: resource-consumer
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: resource-consumer
      template:
        metadata:
          labels:
            app: resource-consumer
        spec:
          nodeSelector:
            purpose: autoscale
          containers:
          - name: resource-consumer
            image: k8sonazureworkshoppublic.azurecr.io/k8s.gcr.io/pause:3.1
            resources:
              requests:
                cpu: 500m
                memory: 512Mi
              limits:
                cpu: 1000m
                memory: 1Gi
    EOF

Verify that the pods are running on our new node pool:

    kubectl get pods -l app=resource-consumer -o wide

You should see the pods scheduled on nodes from the autoscale node pool.

Scale the deployment to trigger node autoscaling:

    kubectl scale deployment resource-consumer --replicas=10

Monitor the nodes to see the Cluster Autoscaler add new nodes:

    kubectl get nodes -l purpose=autoscale --watch

It may take 3-5 minutes for new nodes to be added. You should eventually see additional nodes with the label purpose=autoscale.

You can also view the status of the node pool in the Azure portal under your AKS cluster’s “Node pools” section. It should show the target node count increasing as the pool scales up.
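To see why ten replicas force a scale-up, compare the pods' CPU requests with what a single node can offer. The sketch below is rough arithmetic only. It assumes a hypothetical 1900m of allocatable CPU per node (the real figure depends on $VM_SKU and can be read with `kubectl describe node`), and it ignores memory and the system pods that also reserve CPU. `nodes_needed` is a made-up helper name.

```shell
# nodes_needed REPLICAS CPU_REQUEST_MILLICORES ALLOCATABLE_MILLICORES_PER_NODE
# Hypothetical helper: estimate how many nodes the CPU requests alone require.
nodes_needed() {
  replicas="$1"
  request_m="$2"
  allocatable_m="$3"
  # Whole pods that fit on one node, by CPU request only.
  per_node=$(( allocatable_m / request_m ))
  if [ "$per_node" -lt 1 ]; then
    echo "a single pod does not fit on a node" >&2
    return 1
  fi
  # Integer ceiling of replicas / per_node.
  echo $(( (replicas + per_node - 1) / per_node ))
}

# 10 replicas, 500m request each, assumed 1900m allocatable per node.
nodes_needed 10 500 1900   # prints 4
```

Under these assumed numbers, ten replicas would need four nodes, which is more than the pool's `--max-count` of 3. In that case some pods would stay in the Pending state even after the pool has fully scaled up.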

Check the status of your pods and which nodes they’re running on:

    kubectl get pods -l app=resource-consumer -o wide

Scale down the deployment to observe the Cluster Autoscaler removing nodes:

    kubectl scale deployment resource-consumer --replicas=1

The nodes that are no longer needed should be removed automatically by the Cluster Autoscaler. This can take 10-15 minutes due to the default node deletion delay. You can monitor the process:

    kubectl get nodes -l purpose=autoscale
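While waiting for scale-down, it can be handy to reduce the node listing to a single number. A small sketch, assuming the default column layout of `kubectl get nodes --no-headers` (NAME, STATUS, ROLES, AGE, VERSION); `count_ready` is a hypothetical helper name.

```shell
# Hypothetical helper: count the lines on stdin whose STATUS column is exactly "Ready".
# Nodes being drained (for example "Ready,SchedulingDisabled") are not counted.
count_ready() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage against the autoscale pool:
#   kubectl get nodes -l purpose=autoscale --no-headers | count_ready
```

Once scale-down has finished, the count should fall back toward the pool's minimum of one node.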

Task 3: Clean Up

Delete the resource consumer deployment:

    kubectl delete deployment resource-consumer

Delete the autoscale node pool:

PowerShell:

    az aks nodepool delete `
      --resource-group $RESOURCE_GROUP `
      --cluster-name $AKS_NAME `
      --name $NODEPOOL_NAME

Bash:

    az aks nodepool delete \
      --resource-group $RESOURCE_GROUP \
      --cluster-name $AKS_NAME \
      --name $NODEPOOL_NAME
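The clean-up steps in this task do not touch the php-apache resources created in Task 1 (the HPA, the service, and the deployment). If they are no longer needed, they could be removed along the following lines. This is an optional sketch: the function name `cleanup_hpa_demo` and the `DRY_RUN` variable are hypothetical conveniences, not part of the exercise.

```shell
# Hypothetical helper: delete the Task 1 resources, or just print the
# commands when DRY_RUN is set to a non-empty value.
cleanup_hpa_demo() {
  for kind in hpa service deployment; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "kubectl delete $kind php-apache --ignore-not-found"
    else
      kubectl delete "$kind" php-apache --ignore-not-found
    fi
  done
}

# Preview the commands first, then run them:
#   DRY_RUN=1 cleanup_hpa_demo
#   cleanup_hpa_demo
```

The load-generator pod was started with `--rm`, so it is removed when its terminal session ends and needs no separate clean-up.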