CNI Networking Models

Azure Kubernetes Service (AKS) supports several Container Network Interface (CNI) plugins that define how pod IPs are assigned and how traffic is routed. This section focuses on the three actively supported and recommended options.

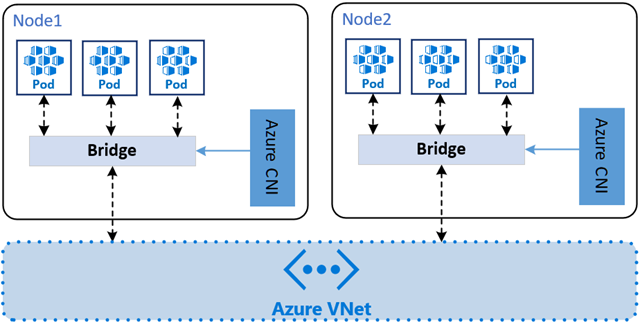

Azure CNI Pod Subnet

This model assigns pod IPs directly from a delegated subnet within the same Azure Virtual Network (VNet) as the nodes. It provides flat networking, meaning pods are directly routable from other Azure resources. This differs from the legacy Node Subnet model, where pods and nodes shared the same subnet. By moving pods to a dedicated subnet, you can avoid IP address conflicts and better manage IP allocation.

Pod IPs can be assigned either dynamically from the subnet's range, or statically by allocating fixed blocks of IPs to each node. Static allocation is useful for scenarios where you need predictable IPs for services or external integrations.
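The static option can be illustrated with a small sketch that carves a pod subnet into fixed per-node blocks using Python's standard ipaddress module. This is not how AKS implements allocation: the /28 block size (16 addresses) and the helper name assign_static_blocks are assumptions chosen for illustration.

```python
import ipaddress
import math


def assign_static_blocks(pod_subnet: str, node_count: int, max_pods: int,
                         block_prefix: int = 28) -> dict[str, list[ipaddress.IPv4Network]]:
    """Hypothetical helper: give each node enough whole blocks to cover max_pods.

    Assumes an IPv4 pod subnet and a /28 (16-address) block size.
    """
    subnet = ipaddress.ip_network(pod_subnet)
    block_size = 2 ** (32 - block_prefix)             # /28 -> 16 addresses
    blocks_per_node = math.ceil(max_pods / block_size)
    blocks = subnet.subnets(new_prefix=block_prefix)   # yields blocks in address order

    assignment = {}
    for node in range(node_count):
        try:
            assignment[f"node-{node}"] = [next(blocks) for _ in range(blocks_per_node)]
        except StopIteration:
            raise ValueError(f"{subnet} has no free blocks left for node-{node}") from None
    return assignment


if __name__ == "__main__":
    for node, node_blocks in assign_static_blocks("10.10.0.0/24", 2, 30).items():
        print(node, [str(b) for b in node_blocks])
    # node-0 ['10.10.0.0/28', '10.10.0.16/28']
    # node-1 ['10.10.0.32/28', '10.10.0.48/28']
```

Because blocks are handed out in address order, each node's range is known in advance, which is what makes static allocation predictable. In the dynamic mode, pods instead draw addresses from the shared subnet range as they start.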

When using Azure CNI Pod Subnet, careful IP planning is essential. Because every pod receives an IP address from the delegated pod subnet, the number of IPs required depends on your expected pod density per node and the total number of nodes in your cluster. Allocate a subnet that can accommodate future growth, as resizing subnets later can be complex and disruptive. Consider a /23 or larger subnet for production workloads, and avoid overlapping with other subnets in your VNet to prevent routing conflicts. Azure also enforces limits on the number of IPs per NIC and per subnet, so review the Subnet Sizing guidance when designing your cluster.
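The sizing arithmetic can be sketched in Python. The sketch assumes each node consumes its full max-pods allowance from the pod subnet (the reservation model described in the tip below) and subtracts the five addresses Azure reserves in every subnet; the function names are hypothetical.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # Azure reserves 5 addresses in every subnet


def usable_ips(cidr: str) -> int:
    """Addresses left for pods after Azure's per-subnet reservation."""
    return ipaddress.ip_network(cidr).num_addresses - AZURE_RESERVED_PER_SUBNET


def required_pod_ips(node_count: int, max_pods: int) -> int:
    """Assumes every node consumes max_pods addresses from the pod subnet."""
    return node_count * max_pods


def smallest_prefix(required: int) -> int:
    """Longest IPv4 prefix (smallest subnet) that still fits `required` pod IPs."""
    for prefix in range(29, 7, -1):  # /29 is the smallest subnet Azure allows
        if 2 ** (32 - prefix) - AZURE_RESERVED_PER_SUBNET >= required:
            return prefix
    raise ValueError("requirement exceeds a /8")


if __name__ == "__main__":
    need = required_pod_ips(node_count=4, max_pods=110)
    print(need, usable_ips("10.20.0.0/23"), f"/{smallest_prefix(need)}")
    # 440 507 /23
```

With four nodes at 110 pods each, a /23 (507 usable addresses) only just fits, which is why sizing for growth matters.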

During cluster upgrades or node image updates, AKS may temporarily scale out your node pool to maintain availability while nodes are drained and replaced. Each of these additional nodes requires its own set of pre-allocated pod IPs. If your subnet is tightly sized, this temporary increase can exhaust its IPs, causing upgrade failures or scheduling issues. To mitigate this, always plan for a buffer of extra IPs in your subnet to accommodate upgrade-related scaling. Azure recommends reserving at least 20–30% headroom in your subnet for such operations.
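A rough pre-upgrade check might compare both steady-state headroom and peak usage against the subnet. This is an illustrative sketch, not an AKS tool: surge_nodes stands in for however many extra nodes the upgrade adds, the 20% default threshold is the lower end of the range above, and each node is assumed to consume max_pods addresses.

```python
def peak_upgrade_ips(node_count: int, max_pods: int, surge_nodes: int) -> int:
    """Pod IPs needed while surge nodes coexist with the original nodes."""
    return (node_count + surge_nodes) * max_pods


def headroom_fraction(usable_ips: int, steady_state_ips: int) -> float:
    """Share of the subnet left free during normal operation."""
    return (usable_ips - steady_state_ips) / usable_ips


def upgrade_safe(usable_ips: int, node_count: int, max_pods: int,
                 surge_nodes: int, min_headroom: float = 0.20) -> bool:
    """True if the subnet keeps enough headroom and can absorb the surge."""
    steady = node_count * max_pods
    return (
        headroom_fraction(usable_ips, steady) >= min_headroom
        and peak_upgrade_ips(node_count, max_pods, surge_nodes) <= usable_ips
    )


if __name__ == "__main__":
    usable = 1019  # a /22 minus Azure's 5 reserved addresses
    print(upgrade_safe(usable, node_count=6, max_pods=110, surge_nodes=1))  # True
    print(upgrade_safe(usable, node_count=8, max_pods=110, surge_nodes=1))  # False
```

In this example, eight 110-pod nodes fail the check because they leave under 14% of the /22 free even before any surge node is added.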

Tip

IP address exhaustion can happen even if you’re not running the maximum number of pods per node. For instance, with Azure CNI Pod Subnet networking, the default setting allows up to 110 pods per node. As a result, the CNI reserves 110 IP addresses for each node, regardless of how many pods are actually running. If you’re only deploying, say, 20 pods per node, consider lowering the max pods setting to avoid unnecessary IP address consumption.
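The effect of this tip can be quantified with a short sketch. It follows the tip's model, in which each node reserves its full max-pods allowance, and subtracts the five addresses Azure reserves per subnet; the helper names are hypothetical.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # Azure reserves 5 addresses in every subnet


def max_nodes(cidr: str, max_pods: int) -> int:
    """Nodes a pod subnet can hold if each reserves max_pods addresses."""
    usable = ipaddress.ip_network(cidr).num_addresses - AZURE_RESERVED_PER_SUBNET
    return usable // max_pods


def idle_reserved_ips(node_count: int, max_pods: int, running_pods: int) -> int:
    """Addresses reserved but unused by running pods, across all nodes."""
    return node_count * (max_pods - running_pods)


if __name__ == "__main__":
    for max_pods in (110, 30):
        print(max_pods, max_nodes("10.30.0.0/23", max_pods),
              idle_reserved_ips(4, max_pods, running_pods=20))
    # 110 4 360
    # 30 16 40
```

On a /23, lowering max pods from 110 to 30 raises capacity from 4 to 16 nodes while still leaving room for 20 pods per node.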

Key Features:

- Pod IPs are part of the VNet address space.

- No SNAT required for traffic within the VNet; pods communicate using their own IPs.

- Pods are directly reachable from resources in the VNet and connected networks, such as peered VNets or on-premises networks.

- Supports both static and dynamic IP allocation.

Use Cases:

- Clusters that require direct pod-to-resource communication.

- Environments with sufficient VNet IP space.

Limitations:

- Requires careful IP planning to avoid exhaustion.

- IP planning is also needed to avoid overlapping with other VNets or on-premises networks.
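Overlap checks like these are easy to automate before a cluster is created. The sketch below uses Python's ipaddress module to flag every overlapping pair in a planning inventory; the range names and CIDRs are made-up examples.

```python
import ipaddress
from itertools import combinations


def find_overlaps(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named ranges whose address space overlaps."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(nets.items(), 2)
        if net_a.overlaps(net_b)
    ]


if __name__ == "__main__":
    # Hypothetical planning inventory.
    plan = {
        "node-subnet": "10.0.0.0/24",
        "pod-subnet": "10.0.2.0/23",
        "peered-vnet": "10.1.0.0/16",
        "on-premises": "10.0.3.0/24",
    }
    print(find_overlaps(plan))  # [('pod-subnet', 'on-premises')]
```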

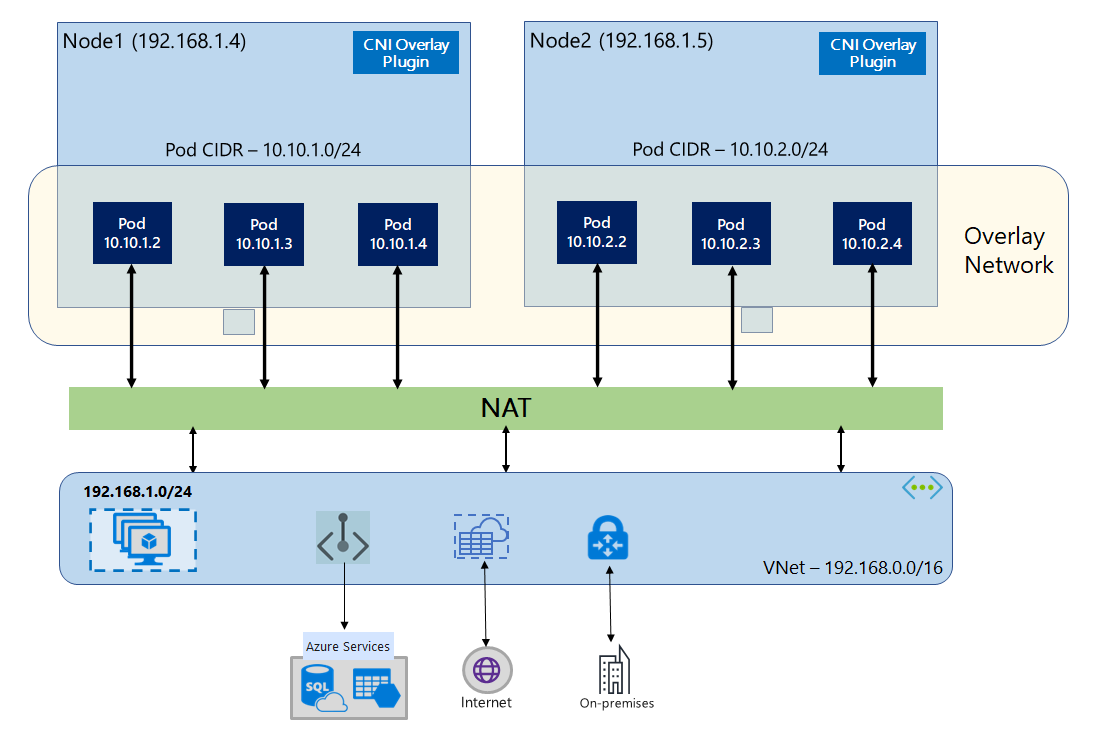

Azure CNI Overlay

With Azure CNI Overlay, the cluster nodes are deployed into a VNet subnet, but pods are assigned IP addresses from a private CIDR that is logically separate from the VNet hosting the nodes. Pod and node traffic within the cluster uses an overlay network, and Network Address Translation (NAT) uses the node’s IP address to reach resources outside the cluster. This design saves a significant number of VNet IP addresses and lets you scale your cluster to large sizes. An additional advantage is that you can reuse the same private CIDR across different AKS clusters, which extends the IP space available for containerized applications in AKS.
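The address math behind overlay can be sketched as follows. As a simplifying assumption, the sketch gives each node a /24 carved from the pod CIDR, and it checks that the pod CIDR stays clear of the cluster's VNet ranges; both helper names are hypothetical.

```python
import ipaddress

NODE_BLOCK_PREFIX = 24  # assumption: each node receives a /24 from the pod CIDR


def overlay_node_capacity(pod_cidr: str, node_block_prefix: int = NODE_BLOCK_PREFIX) -> int:
    """How many per-node blocks fit in the overlay pod CIDR."""
    pod_net = ipaddress.ip_network(pod_cidr)
    if pod_net.prefixlen > node_block_prefix:
        raise ValueError("pod CIDR is smaller than a single node block")
    return 2 ** (node_block_prefix - pod_net.prefixlen)


def validate_overlay(pod_cidr: str, vnet_cidrs: list[str]) -> None:
    """Raise if the overlay pod CIDR collides with any VNet range of this cluster."""
    pod_net = ipaddress.ip_network(pod_cidr)
    for cidr in vnet_cidrs:
        if pod_net.overlaps(ipaddress.ip_network(cidr)):
            raise ValueError(f"pod CIDR {pod_cidr} overlaps VNet range {cidr}")


if __name__ == "__main__":
    print(overlay_node_capacity("10.244.0.0/16"))        # 256
    validate_overlay("10.244.0.0/16", ["10.0.0.0/16"])   # no overlap, no error
```

Because the check only compares the pod CIDR with one cluster's own VNet ranges, the same 10.244.0.0/16 range can pass for several clusters, mirroring the CIDR reuse described above.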

Key Features:

- Pod IPs come from a separate overlay range that is not routable to your main VNet and can be very large.

- No user-defined routes (UDRs) or route table management required.

- Scales to thousands of nodes and over 1 million pods.

- Supports dual-stack IPv4/IPv6 (with some limitations).

Use Cases:

- Large-scale clusters.

- IP-constrained environments.

- Multi-cluster setups with overlapping CIDRs.

Limitations:

- SNAT is required for outbound traffic.

- Pod IPs are not directly accessible from outside the cluster.

- Does not support virtual nodes.

Azure CNI Powered by Cilium

Azure CNI Powered by Cilium is a modern networking solution that combines the benefits of Azure CNI with the advanced features of Cilium, a popular open-source networking and security project. This model uses eBPF (extended Berkeley Packet Filter) technology to provide high-performance networking, security policies, and observability. Azure CNI Powered by Cilium offers the following benefits:

- Functionality equivalent to existing Azure CNI and Azure CNI Overlay plugins

- Improved Service routing

- More efficient network policy enforcement

- Better observability of cluster traffic

- Support for larger clusters (more nodes, pods, and services)

Azure CNI Powered by Cilium can be deployed in two configurations for IP address management:

- Assign IP addresses from an overlay network (similar to Azure CNI Overlay mode)

- Assign IP addresses from a virtual network (similar to existing Azure CNI Pod Subnet mode with Dynamic Pod IP Allocation)

Key Features:

- Replaces iptables with eBPF for efficient traffic routing.

- Built-in network policy enforcement (no need for Calico or Azure NPM).

- Advanced observability via Hubble and integration with Azure Monitor.

- Supports both overlay and flat IP allocation modes.

- Enables features like FQDN filtering and Layer 7 policies (these require ACNS).

Use Cases:

- Clusters requiring advanced security and observability.

- High-performance workloads.

- Multi-cluster networking via Cilium Cluster Mesh (requires ACNS).

Considerations:

- Linux-only.

- Some limitations with host networking and ipBlock policies.

- AKS manages the Cilium configuration, which cannot be modified.

Cilium with Advanced Container Networking Services (ACNS)

Some features of Cilium, such as FQDN filtering and Layer 7 policies, require Advanced Container Networking Services (ACNS), an additional paid service.

| Supported Feature | Without ACNS | With ACNS |
|---|---|---|
| Cilium Endpoint Slices | ✅ | ✅ |
| K8s Network Policies | ✅ | ✅ |
| Cilium L3/L4 Network Policies | ✅ | ✅ |
| FQDN Filtering | ❌ | ✅ |
| L7 Network Policies (HTTP/gRPC/Kafka) | ❌ | ✅ |
| Container Network Observability (Metrics and Flow logs) | ❌ | ✅ |
| Cilium Clustermesh | ❌ | ✅ |
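When planning a cluster, the feature table can be encoded as a lookup to see which required features would pull in ACNS. This is a hypothetical planning helper that only transcribes the rows of the table; it has no knowledge of features the table does not list.

```python
# Transcribed from the feature table: True means the feature needs ACNS.
REQUIRES_ACNS = {
    "Cilium Endpoint Slices": False,
    "K8s Network Policies": False,
    "Cilium L3/L4 Network Policies": False,
    "FQDN Filtering": True,
    "L7 Network Policies (HTTP/gRPC/Kafka)": True,
    "Container Network Observability (Metrics and Flow logs)": True,
    "Cilium Clustermesh": True,
}


def features_needing_acns(wanted: list[str]) -> list[str]:
    """Return the requested features that are only available with ACNS."""
    unknown = [f for f in wanted if f not in REQUIRES_ACNS]
    if unknown:
        raise ValueError(f"not in the table: {unknown}")
    return sorted(f for f in wanted if REQUIRES_ACNS[f])


if __name__ == "__main__":
    print(features_needing_acns(["K8s Network Policies", "FQDN Filtering"]))
    # ['FQDN Filtering']
```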

Legacy Models

The following models are no longer recommended for new clusters, but may still be in use in existing deployments. They are provided here for reference only.

- Kubenet

- Azure CNI with Node Subnet

Warning

Kubenet networking has been deprecated and will be removed in March 2028. You should avoid using it for new clusters and consider migrating existing clusters to a supported CNI model.