Node Autoprovisioner

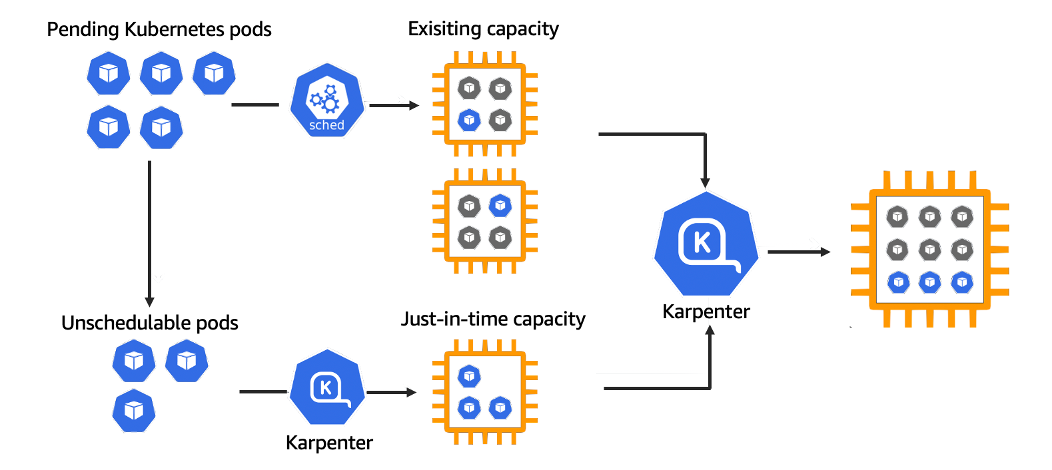

Node Autoprovisioning (NAP) in Azure Kubernetes Service (AKS) is a dynamic node management feature, currently in preview, that automatically provisions and configures new node pools based on the resource requirements of pending pods. NAP is built on the open-source [Karpenter](https://karpenter.sh/) project and the AKS provider for Karpenter, enabling AKS clusters to respond to changing workload demands with minimal manual intervention.

How Node Autoprovisioner Works

Unlike traditional node pool management, where administrators must predefine VM sizes and node pool configurations, NAP analyzes the resource requests of unscheduled pods and determines the optimal VM SKU and configuration to satisfy those requirements. It then creates new node pools on demand, using Azure VM SKUs that best fit the workload’s needs for CPU, memory, GPU, architecture, and other capabilities.

NAP continuously monitors the cluster for pending pods and uses a set of selectors and requirements to match workloads to the most suitable VM types. It supports both spot and on-demand instances, multiple architectures (AMD64, ARM64), and can be customized to prefer certain VM families or sizes. Node pools are created, scaled, and consolidated automatically, optimizing for cost and efficiency.
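The matching step described above can be illustrated with a toy model. This is a minimal sketch, not Karpenter's actual scheduling algorithm: it assumes all pending pods are packed onto a single node, ignores system-reserved resources, and uses made-up relative costs. The names `Sku`, `PodRequest`, `CANDIDATE_SKUS`, and `pick_sku` are hypothetical and exist only for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Sku:
    name: str
    vcpus: int
    memory_gib: int
    arch: str
    relative_cost: float  # illustrative cost units, NOT real Azure prices


@dataclass(frozen=True)
class PodRequest:
    cpu: float          # requested vCPUs
    memory_gib: float   # requested memory in GiB
    arch: str = "amd64"


# Hypothetical candidate list; a real provisioner discovers SKUs per region.
CANDIDATE_SKUS = [
    Sku("Standard_D2s_v5", 2, 8, "amd64", 1.0),
    Sku("Standard_D4s_v5", 4, 16, "amd64", 2.0),
    Sku("Standard_D8s_v5", 8, 32, "amd64", 4.0),
    Sku("Standard_D4ps_v5", 4, 16, "arm64", 1.6),
]


def pick_sku(pending: List[PodRequest], skus: List[Sku]) -> Optional[Sku]:
    """Return the cheapest SKU that fits all pending pods on one node, or None."""
    if not pending:
        return None
    archs = {pod.arch for pod in pending}
    if len(archs) != 1:
        raise ValueError("this sketch expects pods that share one architecture")
    arch = archs.pop()
    total_cpu = sum(pod.cpu for pod in pending)
    total_mem = sum(pod.memory_gib for pod in pending)
    # Keep only SKUs with the right architecture and enough CPU and memory.
    fitting = [
        sku for sku in skus
        if sku.arch == arch and sku.vcpus >= total_cpu and sku.memory_gib >= total_mem
    ]
    return min(fitting, key=lambda sku: sku.relative_cost, default=None)


if __name__ == "__main__":
    pods = [PodRequest(cpu=1.5, memory_gib=4), PodRequest(cpu=1.5, memory_gib=6)]
    chosen = pick_sku(pods, CANDIDATE_SKUS)
    print(chosen.name if chosen else "no fitting SKU")
```

In this model, two pods requesting 3 vCPUs and 10 GiB in total do not fit on the 2-vCPU SKU, so the 4-vCPU SKU is chosen as the cheapest one that fits.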

Node Pool Configuration: Limits and Weights

Node Autoprovisioner allows fine-grained control over how node pools are created and managed. Administrators can set constraints and preferences to ensure workloads are scheduled onto the most appropriate nodes, while managing cost and resource usage.

| Configuration Option | Description |
|---|---|
| VM Family/Type | Restrict node pools to specific VM families (e.g., D, E, F) or types (e.g., GPU, ARM64). |
| CPU/Memory Limits | Set minimum and maximum CPU/memory for node pools to control resource allocation and quotas. |
| GPU Requirements | Specify if workloads require GPU-enabled nodes. |
| Availability Zones | Limit node pools to specific Azure availability zones for resilience. |
| Node Pool Weights | Assign weights to pools to influence scheduling preference when multiple pools match. |
| Spot/On-Demand | Choose between spot and on-demand VM instances for cost optimization. |
| Consolidation Policies | Control when underutilized or empty nodes are removed to optimize costs. |

Limits

- CPU/Memory: Prevents over-provisioning and helps manage Azure subscription quotas. For example, you can cap a pool at 32 vCPUs or restrict its memory to 128 GiB.

- VM Family/Type: Ensures workloads only run on compatible hardware (e.g., restrict to GPU nodes for ML workloads).

- Availability Zones: Improves fault tolerance by spreading node pools across zones.
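The CPU/memory limit described in the list above comes down to simple arithmetic. The sketch below is a simplified model that uses the 32 vCPU and 128 GiB figures from the example as defaults. `PoolUsage` and `fits_within_limits` are hypothetical names, and the exact point at which the real provisioner enforces limits may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PoolUsage:
    cpu: int          # vCPUs already provisioned in the pool
    memory_gib: int   # memory already provisioned in the pool, in GiB


def fits_within_limits(
    current: PoolUsage,
    node_cpu: int,
    node_memory_gib: int,
    max_cpu: int = 32,           # example limit taken from the text above
    max_memory_gib: int = 128,   # example limit taken from the text above
) -> bool:
    """Return True if adding one more node keeps the pool at or under both limits."""
    return (
        current.cpu + node_cpu <= max_cpu
        and current.memory_gib + node_memory_gib <= max_memory_gib
    )


if __name__ == "__main__":
    usage = PoolUsage(cpu=24, memory_gib=96)
    print(fits_within_limits(usage, node_cpu=8, node_memory_gib=32))
    print(fits_within_limits(usage, node_cpu=16, node_memory_gib=64))
```

With 24 vCPUs and 96 GiB already provisioned, an 8 vCPU / 32 GiB node lands exactly on the limit and is allowed, while a 16 vCPU / 64 GiB node would exceed it.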

Weights

When multiple pools satisfy a pod’s requirements, weights determine which pool is preferred: a pool with a higher weight is tried before pools with lower weights. This is useful for cost management (e.g., preferring spot pools) or hardware affinity. For example, assigning a higher weight to a spot pool means spot capacity is tried first; if no spot nodes are available, pods fall back to an on-demand pool.
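The spot-first, on-demand-fallback pattern can be sketched as a sort by weight followed by a first-match search. This is a toy model under stated assumptions: `Pool`, `has_capacity`, and `choose_pool` are hypothetical names, and capacity availability is reduced to a single boolean rather than a real Azure capacity check.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Pool:
    name: str
    weight: int
    capacity_type: str   # "spot" or "on-demand"
    has_capacity: bool   # stand-in for a real capacity/quota check


def choose_pool(pools: List[Pool]) -> Optional[Pool]:
    """Try pools from highest to lowest weight and return the first with capacity."""
    for pool in sorted(pools, key=lambda p: p.weight, reverse=True):
        if pool.has_capacity:
            return pool
    return None


if __name__ == "__main__":
    pools = [
        Pool("on-demand-general", weight=10, capacity_type="on-demand", has_capacity=True),
        Pool("spot-general", weight=100, capacity_type="spot", has_capacity=False),
    ]
    chosen = choose_pool(pools)
    print(chosen.name if chosen else "no pool available")
```

Here the spot pool has the higher weight but no capacity, so the on-demand pool is selected as the fallback.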

Node Pool Configuration and Control

NAP uses Kubernetes custom resources (NodePool, AKSNodeClass) to define node pool requirements, limits, and disruption policies. Administrators can specify constraints such as VM family, CPU/memory limits, GPU requirements, and availability zones. Node pool weights can be set to influence scheduling preference when multiple pools are available.

Node pool consolidation policies (e.g., WhenUnderutilized, WhenEmpty) control when nodes are removed or replaced to optimize resource usage. Limits on CPU and memory can be set to prevent over-provisioning and manage Azure quota usage. Node image updates and Kubernetes upgrades are handled automatically, ensuring node pools remain up to date with the cluster control plane.
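To make the shape of these resources more concrete, the sketch below builds a NodePool manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The field layout follows the upstream Karpenter NodePool schema as an assumption: the `apiVersion`, the `nodeClassRef` fields, and the label keys may differ in the Karpenter version your cluster runs, so check the installed CRD before using it. The function name `build_node_pool`, the pool name, and all values are hypothetical.

```python
import json
from typing import Any, Dict


def build_node_pool(
    name: str,
    weight: int,
    capacity_type: str,
    cpu_limit: str,
    memory_limit: str,
) -> Dict[str, Any]:
    """Build a NodePool manifest (assumed upstream Karpenter schema) as a dict."""
    return {
        "apiVersion": "karpenter.sh/v1",  # assumption: older previews may use v1beta1
        "kind": "NodePool",
        "metadata": {"name": name},
        "spec": {
            "weight": weight,  # higher weight is preferred over lower weight
            "limits": {"cpu": cpu_limit, "memory": memory_limit},
            "disruption": {
                "consolidationPolicy": "WhenEmpty",
                "consolidateAfter": "30s",
            },
            "template": {
                "spec": {
                    # Assumption: a default AKSNodeClass named "default" exists.
                    "nodeClassRef": {
                        "group": "karpenter.azure.com",
                        "kind": "AKSNodeClass",
                        "name": "default",
                    },
                    "requirements": [
                        {"key": "kubernetes.io/arch", "operator": "In", "values": ["amd64"]},
                        {"key": "karpenter.sh/capacity-type", "operator": "In", "values": [capacity_type]},
                        {"key": "karpenter.azure.com/sku-family", "operator": "In", "values": ["D"]},
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = build_node_pool("spot-general", 100, "spot", "32", "128Gi")
    print(json.dumps(manifest, indent=2))
```

Building the manifest in code rather than by hand makes it straightforward to generate a matching on-demand pool with a lower weight from the same function.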

Limitations and Considerations

NAP is currently in preview and has several limitations:

- Only Linux node pools are supported; Windows node pools are not available.

- Custom kubelet configuration, IPv6 clusters, disk encryption sets, and some advanced networking features are not supported.

- NAP cannot be enabled on clusters where the cluster autoscaler is active.

- Network policy engine support is limited: Cilium is recommended, and Calico is not supported.

- Node pool configuration is subject to Azure quota and VM SKU availability in the region.

- Node pool disruption and consolidation may cause pod rescheduling; disruption policies can be tuned to minimize impact.