KEDA

KEDA (Kubernetes Event-driven Autoscaling) is an open-source project that enables Kubernetes workloads to scale based on external event sources, such as message queues, databases, HTTP requests, and cloud services. Unlike traditional autoscaling, which relies on resource metrics like CPU and memory, KEDA scales on the amount of work actually waiting to be processed, for example the number of messages pending in a queue.

How KEDA Works

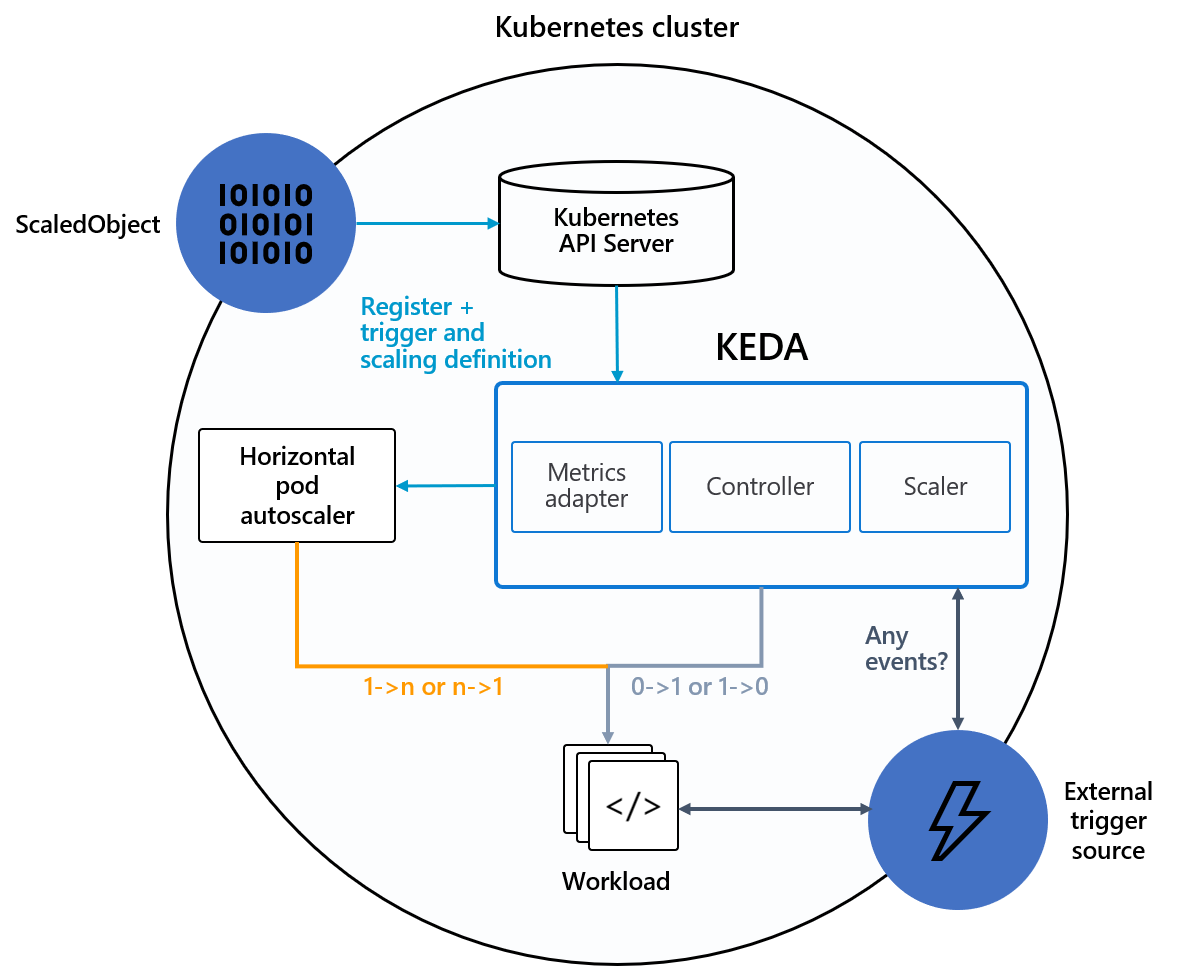

KEDA introduces the concept of scalers, which poll external systems for a metric (e.g., queue length, database row count, or a custom metric). When the metric crosses a configured target, KEDA adjusts the replica count of the target Deployment or the number of Jobs, including scaling to zero when there is no work, so workloads scale up or down in response to actual demand.
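The threshold logic can be made concrete with a small calculation. The sketch below is a simplification, not KEDA's source code: it assumes the formula the Kubernetes HPA documents for average-value metric targets (replicas = ceil(metric / target per replica)) and adds a fall-back to the configured minimum when the source is idle. The function name and parameters are hypothetical.

```python
import math


def desired_replicas(metric_value: float, target_per_replica: float,
                     min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Estimate the replica count for an event-driven workload (simplified)."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if metric_value <= 0:
        # Idle source: fall back to the configured minimum (possibly zero).
        return min_replicas
    # One replica per `target_per_replica` units of pending work, rounded up.
    wanted = math.ceil(metric_value / target_per_replica)
    # Clamp to the configured bounds; an active source needs at least one replica.
    return max(max(min_replicas, 1), min(wanted, max_replicas))


if __name__ == "__main__":
    # 42 queued messages at 5 messages per replica: ceil(8.4) = 9 replicas.
    print(desired_replicas(42, 5, min_replicas=0, max_replicas=10))
```

In a real cluster the calculation is performed by the HPA using metrics that KEDA exposes, and it is further shaped by stabilization windows and cooldown periods that this sketch ignores.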

KEDA operates as a Kubernetes operator, running in the cluster and managing the lifecycle of ScaledObjects and ScaledJobs. It works alongside the Horizontal Pod Autoscaler (HPA), automatically creating and updating HPA resources based on event-driven triggers.

Integration with AKS

KEDA can be enabled in Azure Kubernetes Service (AKS) through the managed KEDA add-on (referred to here as the extension). The extension simplifies deployment and lifecycle management, ensuring KEDA is installed, configured, and kept up to date as part of the cluster’s managed services. The extension provides:

- Automated installation and upgrades of KEDA components

- Integration with Azure Monitor and other Azure services

- Support for scaling based on Azure event sources (e.g., Service Bus, Event Hubs, Storage Queues)

- Centralized management through Azure CLI, portal, or ARM templates

Enabling KEDA through the extension requires no manual installation steps. Scalers and event sources are then configured through Kubernetes manifests.
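As an illustration of the enablement step, the sketch below assembles the Azure CLI call for an existing cluster. It assumes the `az aks update` command with the `--enable-keda` flag; the resource group and cluster names are placeholders, and the helper function is hypothetical.

```python
import shlex


def enable_keda_command(resource_group: str, cluster_name: str) -> list[str]:
    """Build the Azure CLI invocation that enables KEDA on an existing AKS cluster."""
    return [
        "az", "aks", "update",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--enable-keda",
    ]


if __name__ == "__main__":
    # Placeholder names; replace with a real resource group and cluster.
    cmd = enable_keda_command("my-resource-group", "my-aks-cluster")
    print(shlex.join(cmd))
    # To execute instead of printing: subprocess.run(cmd, check=True)
```

Building the argument list separately from running it keeps the command easy to review or log before it touches a cluster.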

KEDA Configuration Objects

KEDA uses several Kubernetes custom resources to define how workloads should scale in response to external events:

- ScaledObject: The primary resource for event-driven scaling of Deployments, StatefulSets, or custom resources that expose the scale subresource. It specifies the target workload, the event source (via a scaler), and scaling parameters such as min/max replicas and cooldown periods.

- ScaledJob: Used for running Kubernetes Jobs based on event sources. Instead of changing a replica count, a ScaledJob defines how Jobs are created and managed in response to events, supporting batch and parallel processing scenarios.

- TriggerAuthentication: Stores authentication details for accessing external event sources securely (e.g., connection strings, secrets, managed identities). This resource is referenced by ScaledObjects or ScaledJobs when a scaler requires credentials.

Each ScaledObject or ScaledJob references a scaler type (such as Azure Service Bus, Kafka, HTTP, or custom metrics) and defines the conditions under which scaling should occur. These objects are declarative and managed via standard Kubernetes manifests, allowing integration with GitOps and CI/CD workflows.
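To show how these resources fit together, here is a minimal sketch that builds a TriggerAuthentication and a ScaledObject for an Azure Service Bus queue and prints them as a JSON list that `kubectl apply` can consume. The field names follow the `keda.sh/v1alpha1` API as commonly documented; every resource name, the queue name, and the numeric values are hypothetical and should be checked against the KEDA version in use.

```python
import json

# All names below (order-processor, orders, servicebus-secret, ...) are hypothetical.
trigger_auth = {
    "apiVersion": "keda.sh/v1alpha1",
    "kind": "TriggerAuthentication",
    "metadata": {"name": "servicebus-auth", "namespace": "default"},
    "spec": {
        "secretTargetRef": [
            # Map the scaler's `connection` parameter to a key in a Kubernetes Secret.
            {"parameter": "connection",
             "name": "servicebus-secret",
             "key": "connection-string"}
        ]
    },
}

scaled_object = {
    "apiVersion": "keda.sh/v1alpha1",
    "kind": "ScaledObject",
    "metadata": {"name": "order-processor-scaler", "namespace": "default"},
    "spec": {
        "scaleTargetRef": {"name": "order-processor"},  # Deployment to scale
        "minReplicaCount": 0,     # allow scale to zero when the queue is empty
        "maxReplicaCount": 10,
        "pollingInterval": 30,    # seconds between checks of the event source
        "cooldownPeriod": 300,    # seconds to wait before scaling back to zero
        "triggers": [
            {
                "type": "azure-servicebus",
                "metadata": {"queueName": "orders", "messageCount": "5"},
                "authenticationRef": {"name": trigger_auth["metadata"]["name"]},
            }
        ],
    },
}


def render(*manifests: dict) -> str:
    """Wrap manifests in a v1 List so they can be applied in one call."""
    return json.dumps(
        {"apiVersion": "v1", "kind": "List", "items": list(manifests)}, indent=2
    )


if __name__ == "__main__":
    print(render(trigger_auth, scaled_object))
```

Assuming the script is saved as `manifests.py` (a hypothetical file name), the output can be piped to `kubectl apply -f -`. The same structure written directly as YAML is the more common form in GitOps repositories.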

For each ScaledObject, KEDA automatically creates and manages a corresponding Horizontal Pod Autoscaler (HPA) resource, so event-driven scaling runs through the same mechanism as traditional metric-based autoscaling.
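The relationship between a ScaledObject and its HPA can be outlined as a simple mapping. This is a sketch under assumptions drawn from KEDA's documented defaults (an HPA named `keda-hpa-<ScaledObject name>`, a Deployment target unless stated otherwise, and an HPA minimum of at least one replica because scaling to zero is handled by KEDA itself). The function name is hypothetical and the per-trigger external metrics are deliberately omitted.

```python
def hpa_outline(scaled_object: dict) -> dict:
    """Outline the HPA that KEDA would manage for a ScaledObject (simplified)."""
    name = scaled_object["metadata"]["name"]
    spec = scaled_object["spec"]
    target = spec["scaleTargetRef"]
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"keda-hpa-{name}"},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": target.get("apiVersion", "apps/v1"),
                "kind": target.get("kind", "Deployment"),
                "name": target["name"],
            },
            # The HPA covers 1..N replicas; the 0 <-> 1 transition is left to KEDA.
            "minReplicas": max(spec.get("minReplicaCount", 0), 1),
            "maxReplicas": spec.get("maxReplicaCount", 100),
            # Real HPAs also carry one external metric per trigger (omitted here).
        },
    }


if __name__ == "__main__":
    # Hypothetical ScaledObject reduced to the fields this outline reads.
    example = {
        "metadata": {"name": "order-processor-scaler"},
        "spec": {
            "scaleTargetRef": {"name": "order-processor"},
            "minReplicaCount": 0,
            "maxReplicaCount": 10,
        },
    }
    hpa = hpa_outline(example)
    print(hpa["metadata"]["name"], hpa["spec"]["minReplicas"], hpa["spec"]["maxReplicas"])
```

Because KEDA owns this HPA, changes should be made on the ScaledObject rather than on the generated HPA, which would otherwise be overwritten on the next reconciliation.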

Use Cases and Benefits

KEDA is ideal for scenarios where workloads must respond to fluctuating demand driven by external events, such as:

- Processing messages from queues or topics

- Scaling jobs based on database changes

- Autoscaling APIs based on request rate

- Integrating with cloud-native event sources

Benefits include:

- Fine-grained, event-driven scaling

- Reduced resource consumption during idle periods

- Seamless integration with existing Kubernetes autoscaling

- Support for a wide range of event sources