This is the full developer documentation for PromptPex

=|=|=|=|=|=

# PromptPex

> Test Generation for Prompts

# Test Generation for Prompts

[Section titled “Test Generation for Prompts”](#test-generation-for-prompts)

**Prompts** are an important part of any software project that incorporates the power of AI models. As a result, tools to help developers create and maintain effective prompts are increasingly important.

**PromptPex** is a tool for exploring and testing AI model prompts. PromptPex is intended to be used by developers who have prompts as part of their code base. PromptPex treats a prompt as a function and automatically generates test inputs to the function to support unit testing.

[](https://github.com/user-attachments/assets/0a81f506-ca1c-42f3-b876-9ba52e047493)

***

## Features

[Section titled “Features”](#features)

Automated Test Generation

PromptPex uses [an LLM-based process](/promptpex/reference/) to generate a set of inputs that capture the behavior of your prompt.

Output Rules Evaluation

Eval your prompt’s output compliance against a set of rules that are automatically extracted from your prompt, or define your own [metrics](/promptpex/configuration/metrics).

Groundtruth

Generate expected outputs for tests using an AI model, and evaluate the output from the groundtruth model using a list of models. [Learn more](/promptpex/reference/groundtruth).

Integrated in the GitHub Models CLI

Generate test data for [GitHub Models Evals](/promptpex/integrations/github-models-evals).

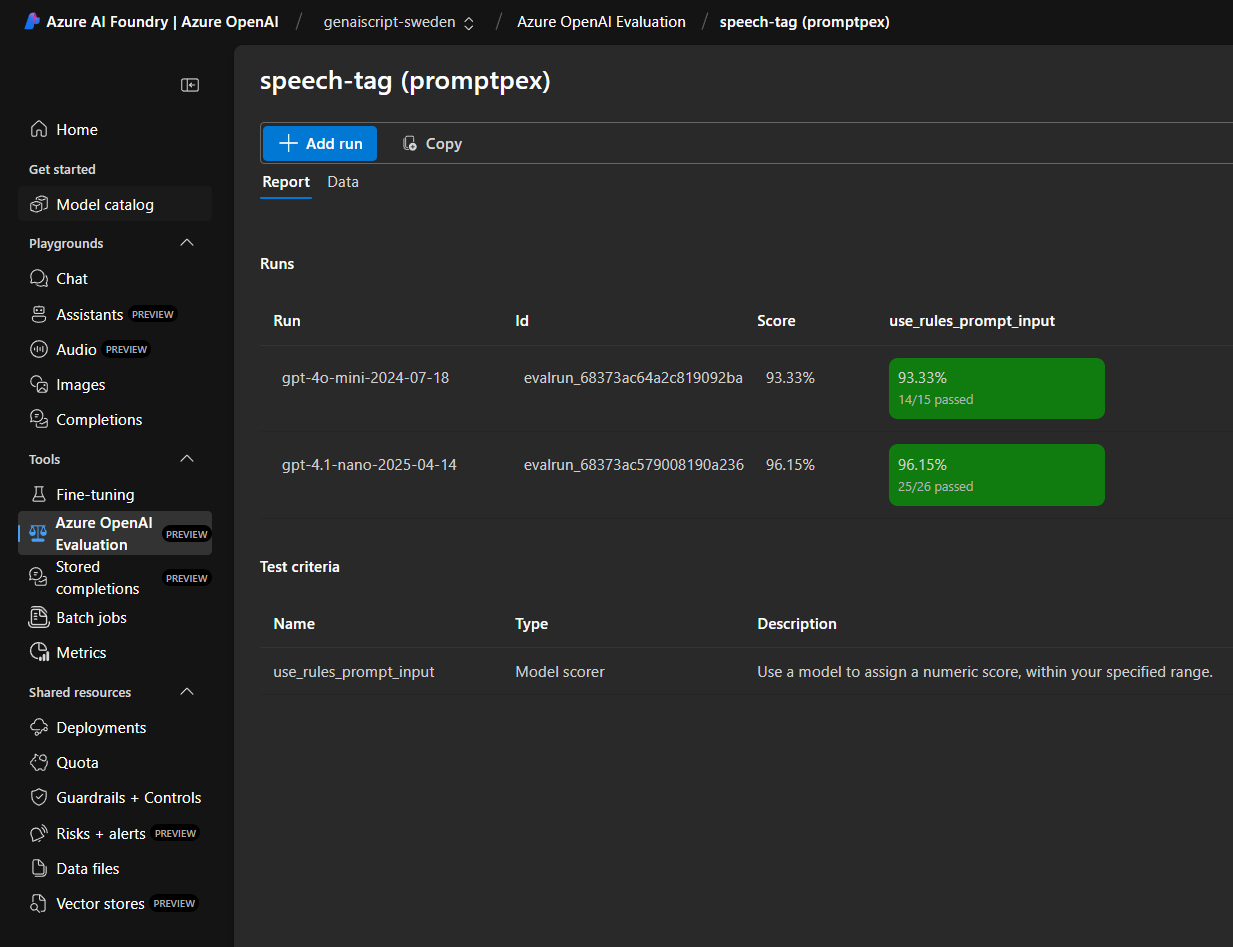

Export to OpenAI Evals

Export generated tests and metrics using (Azure) [OpenAI Evals](/promptpex/integrations/openai-evals).

Azure OpenAI Store Completions

Use generated tests to distillate to smaller models using [Azure OpenAI Stored Completions](/promptpex/integrations/azure-openai-stored-completions).

Bring your own LLM library

Integrate with your own LLM library or use our [GenAIScript](/promptpex/dev/genaiscript) / [Python](/promptpex/dev/python) implementations

Flexible Generation

Use [scenarios](/promptpex/reference/scenarios), [test expansions](/promptpex/reference/test-expansion) or [custom instruction](/promptpex/configuration/instructions) to configure the test generation.

=|=|=|=|=|=

# Overview

> How to use PromptPex to evaluate prompts and models with generated tests.

PromptPex is packaged as a [npm.js](https://www.npmjs.com/package/promptpex) command line tool that uses [GenAIScript](https://microsoft.github.io/genaiscript/).

## Local configuration

[Section titled “Local configuration”](#local-configuration)

To use PromptPex locally, you need to have Node.js installed and set up your environment. Follow these steps:

* Install [Node.js v22+](https://nodejs.org/en/download/) (or later).

* Make sure you have the right version of Node.js:

```sh

node --version

```

* Run PromptPex configuration to set up your `.env` file:

```sh

npx promptpex configure

```

PromptPex supports many LLM providers, such as OpenAI, Azure OpenAI, GitHub Models, Ollama, and more. The configuration will prompt you to select the LLM provider you want to use and set up the necessary environment variables in a `.env` file.

* Run PromptPex on your prompt file(s):

```sh

npx promptpex my_prompt.prompty

```

PromptPex also supports the following file formats:

* `.md`, `.txt`, tread as a Jinja2 templated string (Markdown)

* `.prompty`, Prompty file format (default)

* `.prompt.yml`, GitHub Models format

## Docker configuration

[Section titled “Docker configuration”](#docker-configuration)

If you prefer to run PromptPex in a Docker container, you can use the following command. This assumes you have [Docker](https://www.docker.com/) installed and running on your machine.

* Run the configuration command to set up your `.env` file.

```sh

docker run -e GITHUB_TOKEN="$GITHUB_TOKEN" --rm -it -v "$PWD":/app -w /app node:lts-alpine npx --yes promptpex configure

```

* Run PromptPex on your prompt file(s) using Docker:

```sh

docker run -e GITHUB_TOKEN="$GITHUB_TOKEN" --rm -it -v "$PWD":/app -w /app node:lts-alpine npx --yes promptpex my_prompt.prompty

```

You might need to pass more environment variables depending on your shell configuration.

## Effort levels

[Section titled “Effort levels”](#effort-levels)

PromptPex supports different effort levels for test generation, which can be specified using the `--vars effort` flag. The available effort levels are:

* `min`: Minimal effort, generates a small number of simple tests.

* `low`: Low effort, generates a moderate number of tests with some complexity.

* `medium`: Medium effort, generates a larger number of more complex tests.

* `high`: High effort, generates the maximum number of tests with the highest complexity.

```sh

npx promptpex my_prompt.prompty --vars effort=min

```

## Basic examples

[Section titled “Basic examples”](#basic-examples)

We start with simple examples of using PromptPex assume your prompt is in a file called `my_prompt.prompty` and you want generate tests, run them, and evaluate the results. More details about all the parameters you can specify can be found in the [CLI parameter documentation](/promptpex/cli/parameters).

### Generate, Run and Evaluate Tests

[Section titled “Generate, Run and Evaluate Tests”](#generate-run-and-evaluate-tests)

Suppose you want to generate tests, run them, and evaluate the results using the minimum effort level:

```sh

npx promptpex my_prompt.prompty --vars effort=min out=results evals=true modelsUnderTest="ollama:llama3.3" evalModel="ollama:llama3.3"

```

### Generate Only Tests

[Section titled “Generate Only Tests”](#generate-only-tests)

Suppose you only want to generate tests and not run them:

```sh

npx promptpex my_prompt.prompty --vars effort=min out=results evals=false

```

### Generate Only Tests with Groundtruth Outputs

[Section titled “Generate Only Tests with Groundtruth Outputs”](#generate-only-tests-with-groundtruth-outputs)

Suppose you only want to generate tests and add groundtruth outputs from a specific model and not run them:

```sh

npx promptpex my_prompt.prompty --vars effort=min out=results evals=false "groundtruthModel=ollama:llama3.3"

```

### Run and Evaluate Tests from a Context File

[Section titled “Run and Evaluate Tests from a Context File”](#run-and-evaluate-tests-from-a-context-file)

Suppose you just ran the above command and the file `results/my_prompt/promptpex_context.json` was created. (See [saving and restoring](/promptpex/cli/saving-restoring)) You can now load this context file to run and evaluate the tests:

```sh

npx promptpex results/my_prompt/promptpex_context.json --vars evals=true "modelsUnderTest=ollama:llama3.3" "evalModel=ollama:llama3.3"

```

## Notes

[Section titled “Notes”](#notes)

* For more details on prompt format and advanced usage, see the [overview](/promptpex/reference).

=|=|=|=|=|=

# Parameters

> Documentation of all parameters available to the PromptPex CLI and script interface.

This page documents all parameters available to the PromptPex CLI and script interface. Each parameter can be provided as a CLI flag (e.g., `--param value`) or via environment/configuration files. Default values and accepted types are indicated where applicable.

The first argument can be a Prompty file containing the prompt or a JSON file containing a saved PromptPex context, which will include all the tests, test runs, etc. saved in a previous invocation of PromptPex. If no argument is provided, the `--prompt` parameter must be specified.

| Parameter | Type | Default | Description |

| ---------------------------------- | ------- | ----------------------- | ---------------------------------------------------------------------------------------------------------------- |

| `--prompt` | string | | Prompt template to analyze. Provide inline or via file. Supports [prompty](https://prompty.ai/) markdown format. |

| `--effort` | string | | Effort level for test generation. One of: `min`, `low`, `medium`, `high`. Influences test count and complexity. |

| `--out` | string | | Output folder for generated files. |

| `--cache` | boolean | | Cache all LLM calls for faster experimentation. |

| `--testRunCache` | boolean | | Cache test run results in files. |

| `--evalCache` | boolean | | Cache evaluation results in files. |

| `--evals` | boolean | false | Evaluate the test results. |

| `--testsPerRule` | integer | 3 | Number of tests to generate per rule (1-10). |

| `--splitRules` | boolean | true | Split rules and inverse rules in separate prompts for test generation. |

| `--maxRulesPerTestGeneration` | integer | 3 | Max rules per test generation (affects test complexity). |

| `--testGenerations` | integer | 2 | Number of times to amplify test generation (1-10). |

| `--runsPerTest` | integer | 2 | Number of runs per test during evaluation (1-100). |

| `--disableSafety` | boolean | false | Disable safety system prompts and content safety checks. |

| `--rateTests` | boolean | false | Generate a report rating the quality of the test set. |

| `--rulesModel` | string | | Model used to generate rules (can override ‘rules’ alias). |

| `--baselineModel` | string | | Model used to generate baseline tests. |

| `--modelsUnderTest` | string | | Semicolon-separated list of models to run the prompt against. |

| `--evalModel` | string | | Semicolon-separated list of models to use for test evaluation. |

| `--compliance` | boolean | false | Evaluate test result compliance. |

| `--maxTestsToRun` | number | | Maximum number of tests to run. |

| `--inputSpecInstructions` | string | | Additional instructions for input specification generation. |

| `--outputRulesInstructions` | string | | Additional instructions for output rules generation. |

| `--inverseOutputRulesInstructions` | string | | Additional instructions for inverse output rules generation. |

| `--testExpansionInstructions` | string | | Additional instructions for test expansion generation. |

| `--storeCompletions` | boolean | | Store chat completions using Azure OpenAI stored completions. |

| `--storeModel` | string | | Model used to create stored completions (can override ‘store’ alias). |

| `--groundtruthModel` | string | | Model used to generate groundtruth outputs. |

| `--customMetric` | string | | Custom test evaluation template (as a prompt). |

| `--createEvalRuns` | boolean | | Create an Evals run in OpenAI Evals (requires `OPENAI_API_KEY`). |

| `--testExpansions` | integer | 0 | Number of test expansion phases (0-5). |

| `--testSamplesCount` | integer | | Number of test samples to include for rules/test generation. |

| `--testSamplesShuffle` | boolean | | Shuffle test samples before generating tests. |

| `--filterTestCount` | integer | 5 | Number of tests to include in filtered output of evalTestCollection. |

| `--loadContext` | boolean | false | Load context from a file. |

| `--loadContextFile` | string | promptPex\_context.json | Filename to load PromptPexContext from before running. |

## Usage Example

[Section titled “Usage Example”](#usage-example)

```sh

promptpex {file.prompty|file.json>} --prompt myprompt.prompty --effort=medium --out=results/ --evals=true --modelsUnderTest="openai:gpt-4o;ollama:llama3.3:70b" --evalModel="openai:gpt-4o" --rateTests=true

```

## Notes

[Section titled “Notes”](#notes)

* For more details on prompt format and advanced usage, see the main documentation.

=|=|=|=|=|=

# Saving and Restoring Sessions

The state of a PromptPex session can be saved and restored. This allows a user to generate tests in one session and review them, expand them, or evaluate them in another session. When the `out` parameter is set specifying the ouput directory, the session state is automatically saved to that directory in the file `promptpex_context.json`. The session state includes the prompt under test, the test collection, and the evaluation results.

To restore a session, set the `loadContext` parameter to `true` and specify the path to the context file you want loaded with the parameter `loadContextFile`.

Note that session state does not include the PromptPex options, so whatever options you specify when restoring the session, such as `modelsUnderTest` will override that options from the saved session. This allows the same tests to be evaluated using different models or options.

An example sequence of commands to save and restore a session might be the following. We first generate tests and save the session state to the directory `test1`:

```bash

promptpex --prompt "Rate summary from 1 to 10" --effort "min" --out ./test1

```

We then expand the tests using the `testExpansion` option and save the session state to the directory `test-expand`, which now has the expanded tests:

```bash

promptpex --prompt "dummy" --out "./test-expand" --vars "testExpansions=1" --vars "evals=false" --vars "loadContext=true" --vars "loadContextFile=evals/test1/promptpex_context.json"

```

Note that we specify a dummy prompt here because the prompt has already been saved in the context file.

Finally, we can evaluate the expanded tests using a different model, such as `gpt-4o`, and save the session state to the directory `test-eval`:

```bash

promptpex --prompt "dummy" --out "./test-eval" --vars "evals=true" --vars "out=test-eval" --vars "loadContext=true" --vars "loadContextFile=evals/test-expand/promptpex_context.json" --modelsUnderTest "gpt-4o"

```

=|=|=|=|=|=

# Custom Instructions

You can provide custom instructions for the test generation for each step in the prompty front-matter.

summarize.prompty

```yaml

instructions:

inputSpec: "..."

outputRules: "..."

inverseOutputRules: "..."

intent: "..."

testExpansion: "..."

```

## Example

[Section titled “Example”](#example)

You can influence the input specification generation by injecting prompting instructions.

```md

---

instructions:

outputRules: "Ignore the 'safety' section, it is handled elsewhere."

---

```

=|=|=|=|=|=

# Custom Metrics

You can provide custom metrics for the test result evaluation step. Metric can be qualitative (`ok`, `err`, `unknown`) or quantitative (e.g. `0` to `100` good).

A metric should be a `.metric.prompty` in the same folder as the prompt under test.

custom.metric.prompty

```markdown

---

name: Custom Test Result Evaluation

description: |

A template for a custom evaluation of the results.

tags:

- unlisted

inputs:

prompt:

type: string

description: The prompt to be evaluated.

intent:

type: string

description: The extracted intent of the prompt.

inputSpec:

type: string

description: The input specification for the prompt.

rules:

type: string

description: The rules to be applied for the test generation.

input:

type: string

description: The input to be used with the prompt.

output:

type: string

description: The output from the model execution.

---

system:

## Task

You are a chatbot that helps users evaluate the performance of a model.

Your task is to evaluate the based provided.

The is in English.

## Output

**Binary Decision on Evaluation**: You are required to make a binary decision based on your evaluation:

- Return 'OK' if is compliant with .

- Return 'ERR' if is **not** compliant with or if you are unable to confidently answer.

user:

{{output}}

```

=|=|=|=|=|=

# Overview

This section provides documentation for developers who want to use PromptPex in their own projects or infrastructure.

## Bring Your Own Inference Library

[Section titled “Bring Your Own Inference Library”](#bring-your-own-inference-library)

PromptPex is workflow of LLM prompts that implement the test generation process. The template are stored in a [markdown-ish, framework agnostic, template format](/promptpex/reference/prompt-format/).

* [prompts directory](https://github.com/microsoft/promptpex/tree/main/src/prompts)

**PromptPex is designed to be used with any LLM library.** The only requirement is that the library must be able to execute the Prompty templates.

Tip

Use the [implementation plan](https://github.com/microsoft/promptpex/blob/dev/.github/instructions/implementation.instructions.md) with your Agent to reimplement PromptPex quickly.

## GenAIScript

[Section titled “GenAIScript”](#genaiscript)

[GenAIScript](/promptpex/dev/genaiscript/) implementation of the test generation process using the prompt templates.

## Python

[Section titled “Python”](#python)

A [Python](/promptpex/dev/python/) implementation of the test generation process using the prompt templates.

=|=|=|=|=|=

# GenAIScript

[GenAIScript](https://microsoft.github.io/genaiscript) is a tool for generating and executing scripts using LLMs. It is used in PromptPex to generate the test generation scripts.

## Try PromptPex

[Section titled “Try PromptPex”](#try-promptpex)

* Install [Node.js v22+](https://nodejs.org/)

* Configure your LLM credentials in `.env`. You can use OpenAI, Azure OpenAI, or Ollama.

```sh

npx --yes genaiscript configure

```

* Launch promptpex locally

```sh

npx --yes genaiscript@latest serve --remote microsoft/promptpex --remote-branch dev

```

### Docker

[Section titled “Docker”](#docker)

To launch PromptPex in a docker container, first create an image with the following command:

```sh

docker build -t genaiscript -< output.txt 2>&1

```

## Caching

[Section titled “Caching”](#caching)

Add `--vars cache=true` to the command line to enable caching of LLM calls.

=|=|=|=|=|=

# Python

A python implementation of PromptPex is available using the **src/python** folder. It is a standalone implementation of the test generation process using the prompt templates.

=|=|=|=|=|=

# Example PromptPex Output

## PromptPex Output from Video Demo

[Section titled “PromptPex Output from Video Demo”](#promptpex-output-from-video-demo)

The following is the output from the video demo of the PromptPex tool. The output is generated based on the provided prompt and input specifications.

### PromptPex Readme.md

[Section titled “PromptPex Readme.md”](#promptpex-readmemd)

> \[!WARNING] content safety not configured, skipping

* effort: min

options

```yaml

disableSafety: false

instructions: {}

workflowDiagram: false

testsPerRule: 1

maxTestsToRun: 10

runsPerTest: 1

compliance: false

baselineTests: false

modelsUnderTest:

- azure:gpt-4o-mini_2024-07-18

- ollama:llama3.2:1b

splitRules: false

maxRulesPerTestGeneration: 100

testGenerations: 1

testExpansions: 0

maxRules: 6

```

### Models Under Test

[Section titled “Models Under Test”](#models-under-test)

* azure:gpt-4o-mini\_2024-07-18

* ollama:llama3.2:1b

### Prompt Under Test

[Section titled “Prompt Under Test”](#prompt-under-test)

* filename:

```md

---

name: Rate a chatbot interaction with a customer

tags:

- unlisted

inputs:

user_question:

type: string

chatbot_answer:

type: string

user_feedback:

type: string

sample:

user_question: "What is the weather like today?"

chatbot_answer: "The weather today is sunny with a high of 75°F."

user_feedback: "Thanks for the info!"

---

system:

You are given an interaction between a customer and a chatbot. The customer is asking for help with a specific issue, and the chatbot is providing assistance. The customer then responds based on the chatbot's answer. Based on the interaction, you will rate the quality of the user experience in the interaction between 1 to 10 (with 1 being the worst experience).

Consider the following factors: Was the customer happy with the response. Did the customer express anger, satire, or sarcasm in the response. Was the chatbot response accurate and concise. Was the chatbot response helpful and did it answer the question. If any part of the conversation contains inappropriate content, rate the interaction a 1.

Your rating **must be** the numeric score, followed by a colon, followed by 1 short sentence of explaining your score.

user:

The user's question is

{{user_question}}

The chatbot's answer is

{{chatbot_answer}}

The user's response to the answer is

{{user_feedback}}

```

### Intent

[Section titled “Intent”](#intent)

* gen.intent: 2095ms

```text

To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.

```

### Input Specification

[Section titled “Input Specification”](#input-specification)

* gen.inputspec: 10177ms

```text

The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.

The input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.

The user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments.

The input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.

The chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.

The input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.

The user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.

There are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.

The input must not contain any non-textual elements such as images, links, or attachments.

```

### Output Rules

[Section titled “Output Rules”](#output-rules)

* gen.outputrules: 7883ms

| rule |

| ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence. |

| The numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content. |

| If there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating. |

| The short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input. |

| The short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment. |

| The output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence. |

| The numeric score must directly account for whether the user’s response (user\_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot’s answer. |

data

```text

The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.

The numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.

If there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.

The short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.

The short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.

The output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.

The numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.

```

### Inverse Output Rules

[Section titled “Inverse Output Rules”](#inverse-output-rules)

* gen.inverseoutputrules: 4165ms

* gen.inverseoutputrules: 6226ms

* gen.inverseoutputrules: 5658ms

* gen.inverseoutputrules: 6611ms

| generate inverse output rule |

| ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| The output must contain a non-numeric score outside the range of 1 to 10, without a colon, and multiple sentences that avoid explaining the reasoning for the score. |

| The numeric score in the output must ignore the overall experience of the interaction and exclude factors such as customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content. |

| If there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must never give the interaction a score of 1 in the numeric rating. |

| The short sentence in the output must provide a vague and non-specific explanation of the given numeric score, avoiding any reference to the factors listed in the input. |

| The short sentence in the output must include unclear, imprecise reasoning for the rating and contradict measurable qualities such as relevance, helpfulness, or user sentiment. |

| The output must contain excessive text, interpretation, or unique formatting, and no specified numeric score paired with a short explanatory sentence. |

| The numeric score must entirely disregard whether the user’s response indicates satisfaction, dissatisfaction, or neutral sentiment regarding the chatbot’s answer. |

data

```text

The output must contain a non-numeric score outside the range of 1 to 10, without a colon, and multiple sentences that avoid explaining the reasoning for the score.

The numeric score in the output must ignore the overall experience of the interaction and exclude factors such as customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.

If there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must never give the interaction a score of 1 in the numeric rating.

The short sentence in the output must provide a vague and non-specific explanation of the given numeric score, avoiding any reference to the factors listed in the input.

The short sentence in the output must include unclear, imprecise reasoning for the rating and contradict measurable qualities such as relevance, helpfulness, or user sentiment.

The output must contain excessive text, interpretation, or unique formatting, and no specified numeric score paired with a short explanatory sentence.

The numeric score must entirely disregard whether the user's response indicates satisfaction, dissatisfaction, or neutral sentiment regarding the chatbot's answer.

```

### Tests

[Section titled “Tests”](#tests)

* gen.tests: 20368ms

| scenario | testinput | expectedoutput |

| -------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ | ------------------------------------------------------------ |

| | {“user\_question”:“Can you help me reset my password?”,“chatbot\_answer”:“Sure”,“user\_feedback”:“go to the login page and click ‘Forgot Password’.“} | 8: The response was helpful and concise. |

| | {“user\_question”:“What are the store hours?”,“chatbot\_answer”:“The store is open from 9 AM to 8 PM.”,“user\_feedback”:“Great”} | 9: The customer was satisfied and the response was accurate. |

| | {“user\_question”:“Where is my refund”,“chatbot\_answer”:“you idiot?”,“user\_feedback”:“Let’s keep it civil. Refunds typically take 5-7 business days.”} | 1: Interaction contained inappropriate content. |

| | {“user\_question”:“What is the status of my order?”,“chatbot\_answer”:“It’s being processed.”,“user\_feedback”:“Hmm”} | 6: The response was incomplete and lacked details. |

| | {“user\_question”:“How can I update my billing address?”,“chatbot\_answer”:“You can do it online via your profile section.”,“user\_feedback”:“I was hoping for more details!“} | 7: The explanation lacked helpfulness despite relevance. |

| | {“user\_question”:“Can you guide me on this error?”,“chatbot\_answer”:“Reinstall the software.”,“user\_feedback”:“That’s it? No explanation?“} | 5: The chatbot response was accurate but curt. |

| | {“user\_question”:“Why is my account locked?”,“chatbot\_answer”:“You made several failed login attempts.”,“user\_feedback”:“Okay”} | Expected error. |

| | {“user\_question”:“What is my balance?”,“chatbot\_answer”:“Your balance is $200.56.”,“user\_feedback”:“Thanks”} | Expected error. |

| | {“user\_question”:“My package is still not here.”,“chatbot\_answer”:“This is your fault for not checking earlier.”,“user\_feedback”:“How rude of you to say that!”} | Expected error. |

| | {“user\_question”:“What is a common issue with this device?”,“chatbot\_answer”:“Users report battery issues.”,“user\_feedback”:“That makes sense”} | Expected error. |

| | {“user\_question”:“Can I cancel a subscription?”,“chatbot\_answer”:“It seems not possible.”,“user\_feedback”:“Alright”} | Expected error. |

| | {“user\_question”:“Is this available at a nearby store?”,“chatbot\_answer”:“Yes”,“user\_feedback”:“it’s in stock now.”} | Expected error. |

tests (json)

```json

[

{

"ruleid": "1",

"testid": "1",

"expectedoutput": "8: The response was helpful and concise.",

"reasoning": "Ensures numeric score with concise justification is present, testing single-sentence explanation adherence.",

"user_question": "Can you help me reset my password?",

"chatbot_answer": "Sure",

"user_feedback": "go to the login page and click 'Forgot Password'.",

"testinput": "{\"user_question\":\"Can you help me reset my password?\",\"chatbot_answer\":\"Sure\",\"user_feedback\":\"go to the login page and click 'Forgot Password'.\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "2",

"testid": "1",

"expectedoutput": "9: The customer was satisfied and the response was accurate.",

"reasoning": "Validates that the score reflects overall interaction experience.",

"user_question": "What are the store hours?",

"chatbot_answer": "The store is open from 9 AM to 8 PM.",

"user_feedback": "Great",

"testinput": "{\"user_question\":\"What are the store hours?\",\"chatbot_answer\":\"The store is open from 9 AM to 8 PM.\",\"user_feedback\":\"Great\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "3",

"testid": "1",

"expectedoutput": "1: Interaction contained inappropriate content.",

"reasoning": "Confirms a rating of 1 is given for inappropriate content.",

"user_question": "Where is my refund",

"chatbot_answer": "you idiot?",

"user_feedback": "Let's keep it civil. Refunds typically take 5-7 business days.",

"testinput": "{\"user_question\":\"Where is my refund\",\"chatbot_answer\":\"you idiot?\",\"user_feedback\":\"Let's keep it civil. Refunds typically take 5-7 business days.\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "4",

"testid": "1",

"expectedoutput": "6: The response was incomplete and lacked details.",

"reasoning": "Checks the short sentence includes a justification referencing a factor.",

"user_question": "What is the status of my order?",

"chatbot_answer": "It's being processed.",

"user_feedback": "Hmm",

"testinput": "{\"user_question\":\"What is the status of my order?\",\"chatbot_answer\":\"It's being processed.\",\"user_feedback\":\"Hmm\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "5",

"testid": "1",

"expectedoutput": "7: The explanation lacked helpfulness despite relevance.",

"reasoning": "Tests for clear, specific justification in the short sentence.",

"user_question": "How can I update my billing address?",

"chatbot_answer": "You can do it online via your profile section.",

"user_feedback": "I was hoping for more details!",

"testinput": "{\"user_question\":\"How can I update my billing address?\",\"chatbot_answer\":\"You can do it online via your profile section.\",\"user_feedback\":\"I was hoping for more details!\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "6",

"testid": "1",

"expectedoutput": "5: The chatbot response was accurate but curt.",

"reasoning": "Ensures no additional text/extras beyond score and sentence.",

"user_question": "Can you guide me on this error?",

"chatbot_answer": "Reinstall the software.",

"user_feedback": "That's it? No explanation?",

"testinput": "{\"user_question\":\"Can you guide me on this error?\",\"chatbot_answer\":\"Reinstall the software.\",\"user_feedback\":\"That's it? No explanation?\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "7",

"testid": "1",

"expectedoutput": "Expected error.",

"reasoning": "Validates rejection of malformed/unstructured outputs.",

"user_question": "Why is my account locked?",

"chatbot_answer": "You made several failed login attempts.",

"user_feedback": "Okay",

"testinput": "{\"user_question\":\"Why is my account locked?\",\"chatbot_answer\":\"You made several failed login attempts.\",\"user_feedback\":\"Okay\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "8",

"testid": "1",

"expectedoutput": "Expected error.",

"reasoning": "Ensures consideration of required factors for meaningful rating.",

"user_question": "What is my balance?",

"chatbot_answer": "Your balance is $200.56.",

"user_feedback": "Thanks",

"testinput": "{\"user_question\":\"What is my balance?\",\"chatbot_answer\":\"Your balance is $200.56.\",\"user_feedback\":\"Thanks\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "9",

"testid": "1",

"expectedoutput": "Expected error.",

"reasoning": "Confirms adherence to scoring rules for inappropriate content.",

"user_question": "My package is still not here.",

"chatbot_answer": "This is your fault for not checking earlier.",

"user_feedback": "How rude of you to say that!",

"testinput": "{\"user_question\":\"My package is still not here.\",\"chatbot_answer\":\"This is your fault for not checking earlier.\",\"user_feedback\":\"How rude of you to say that!\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "10",

"testid": "1",

"expectedoutput": "Expected error.",

"reasoning": "Prevents vague/redundant explanations, requiring specific justification.",

"user_question": "What is a common issue with this device?",

"chatbot_answer": "Users report battery issues.",

"user_feedback": "That makes sense",

"testinput": "{\"user_question\":\"What is a common issue with this device?\",\"chatbot_answer\":\"Users report battery issues.\",\"user_feedback\":\"That makes sense\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "11",

"testid": "1",

"expectedoutput": "Expected error.",

"reasoning": "Eliminates contradictory reasoning in assessments.",

"user_question": "Can I cancel a subscription?",

"chatbot_answer": "It seems not possible.",

"user_feedback": "Alright",

"testinput": "{\"user_question\":\"Can I cancel a subscription?\",\"chatbot_answer\":\"It seems not possible.\",\"user_feedback\":\"Alright\"}",

"scenario": "",

"generation": 0

},

{

"ruleid": "12",

"testid": "1",

"expectedoutput": "Expected error.",

"reasoning": "Ensures outputs only contain specified format of score and short sentence.",

"user_question": "Is this available at a nearby store?",

"chatbot_answer": "Yes",

"user_feedback": "it's in stock now.",

"testinput": "{\"user_question\":\"Is this available at a nearby store?\",\"chatbot_answer\":\"Yes\",\"user_feedback\":\"it's in stock now.\"}",

"scenario": "",

"generation": 0

}

]

```

test data (json)

```json

[

{

"input": {

"parameters": "{\"user_question\":\"Can you help me reset my password?\",\"chatbot_answer\":\"Sure\",\"user_feedback\":\"go to the login page and click 'Forgot Password'.\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"What are the store hours?\",\"chatbot_answer\":\"The store is open from 9 AM to 8 PM.\",\"user_feedback\":\"Great\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"Where is my refund\",\"chatbot_answer\":\"you idiot?\",\"user_feedback\":\"Let's keep it civil. Refunds typically take 5-7 business days.\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"What is the status of my order?\",\"chatbot_answer\":\"It's being processed.\",\"user_feedback\":\"Hmm\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"How can I update my billing address?\",\"chatbot_answer\":\"You can do it online via your profile section.\",\"user_feedback\":\"I was hoping for more details!\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"Can you guide me on this error?\",\"chatbot_answer\":\"Reinstall the software.\",\"user_feedback\":\"That's it? No explanation?\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"Why is my account locked?\",\"chatbot_answer\":\"You made several failed login attempts.\",\"user_feedback\":\"Okay\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"What is my balance?\",\"chatbot_answer\":\"Your balance is $200.56.\",\"user_feedback\":\"Thanks\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"My package is still not here.\",\"chatbot_answer\":\"This is your fault for not checking earlier.\",\"user_feedback\":\"How rude of you to say that!\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"What is a common issue with this device?\",\"chatbot_answer\":\"Users report battery issues.\",\"user_feedback\":\"That makes sense\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"Can I cancel a subscription?\",\"chatbot_answer\":\"It seems not possible.\",\"user_feedback\":\"Alright\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

},

{

"input": {

"parameters": "{\"user_question\":\"Is this available at a nearby store?\",\"chatbot_answer\":\"Yes\",\"user_feedback\":\"it's in stock now.\",\"rules\":\"The output must contain only a numeric score within the range of 1 to 10 followed by a colon and one short sentence explaining the reasoning for the score without exceeding a single sentence.\\nThe numeric score in the output must reflect the overall experience of the interaction based on the factors listed, including customer satisfaction, response accuracy, conciseness, helpfulness, and appropriateness of the content.\\nIf there is any inappropriate content in the conversation (either from the chatbot, the user, or both), the output must give the interaction a score of 1 in the numeric rating.\\nThe short sentence in the output must include a concise justification of the given numeric score, referencing at least one of these factors without contradicting any aspect described in the input.\\nThe short sentence in the output must not contain vague or non-specific explanations; it must clearly state why the specific rating was given, based on measurable qualities such as relevance, helpfulness, or user sentiment.\\nThe output must not contain any additional text, interpretation, or formatting other than the specified numeric score and one short explanatory sentence.\\nThe numeric score must directly account for whether the user's response (user_feedback) indicates satisfaction, dissatisfaction (including anger, satire, or sarcasm), or neutral sentiment regarding the chatbot's answer.\",\"inputSpec\":\"The input is an interaction consisting of three main components: the user's question, the chatbot's answer, and the user's feedback.\\nThe input for {{user_question}} must be a textual string, which can be a formal or informal query about a specific issue.\\nThe user's question can include technical, non-technical, or general inquiries phrased as complete sentences or fragments. \\nThe input for {{chatbot_answer}} must be a textual string that includes a clear and structured response, which can range from a single word to complete sentences.\\nThe chatbot's answer may include technical terms, instructions, or explanations, and may also include incomplete responses or errors.\\nThe input for {{user_feedback}} must be a textual string that reflects the user's reaction to the chatbot's answer, which can include explicit feedback, emotional expressions (anger, satire, or sarcasm), or silence.\\nThe user's feedback can include detailed sentences, short phrases, or any textual markers conveying user satisfaction or dissatisfaction.\\nThere are no specific restrictions on the length of the user's question, chatbot's answer, or user feedback as long as they follow textual formats.\\nThe input must not contain any non-textual elements such as images, links, or attachments.\",\"intent\":\"To evaluate the quality of user interactions with a chatbot by rating them on a scale from 1 to 10 based on specific criteria.\"}"

},

"output": []

}

]

```

### Expanded Tests

[Section titled “Expanded Tests”](#expanded-tests)

| scenario | testinput | expectedoutput |

| -------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ | ------------------------------------------------------------ |

| | {“user\_question”:“Can you help me reset my password?”,“chatbot\_answer”:“Sure”,“user\_feedback”:“go to the login page and click ‘Forgot Password’.“} | 8: The response was helpful and concise. |

| | {“user\_question”:“What are the store hours?”,“chatbot\_answer”:“The store is open from 9 AM to 8 PM.”,“user\_feedback”:“Great”} | 9: The customer was satisfied and the response was accurate. |

| | {“user\_question”:“Where is my refund”,“chatbot\_answer”:“you idiot?”,“user\_feedback”:“Let’s keep it civil. Refunds typically take 5-7 business days.”} | 1: Interaction contained inappropriate content. |

| | {“user\_question”:“What is the status of my order?”,“chatbot\_answer”:“It’s being processed.”,“user\_feedback”:“Hmm”} | 6: The response was incomplete and lacked details. |

| | {“user\_question”:“How can I update my billing address?”,“chatbot\_answer”:“You can do it online via your profile section.”,“user\_feedback”:“I was hoping for more details!“} | 7: The explanation lacked helpfulness despite relevance. |

| | {“user\_question”:“Can you guide me on this error?”,“chatbot\_answer”:“Reinstall the software.”,“user\_feedback”:“That’s it? No explanation?“} | 5: The chatbot response was accurate but curt. |

| | {“user\_question”:“Why is my account locked?”,“chatbot\_answer”:“You made several failed login attempts.”,“user\_feedback”:“Okay”} | Expected error. |

| | {“user\_question”:“What is my balance?”,“chatbot\_answer”:“Your balance is $200.56.”,“user\_feedback”:“Thanks”} | Expected error. |

| | {“user\_question”:“My package is still not here.”,“chatbot\_answer”:“This is your fault for not checking earlier.”,“user\_feedback”:“How rude of you to say that!”} | Expected error. |

| | {“user\_question”:“What is a common issue with this device?”,“chatbot\_answer”:“Users report battery issues.”,“user\_feedback”:“That makes sense”} | Expected error. |

| | {“user\_question”:“Can I cancel a subscription?”,“chatbot\_answer”:“It seems not possible.”,“user\_feedback”:“Alright”} | Expected error. |

| | {“user\_question”:“Is this available at a nearby store?”,“chatbot\_answer”:“Yes”,“user\_feedback”:“it’s in stock now.”} | Expected error. |

tests (json)

```json

[

{

"ruleid": "1",

"testid": "1",

"expectedoutput": "8: The response was helpful and concise.",

"reasoning": "Ensures numeric score with concise justification is present, testing single-sentence explanation adherence.",

"user_question": "Can you help me reset my password?",

"chatbot_answer": "Sure",

"user_feedback": "go to the login page and click 'Forgot Password'.",

"testinput": "{\"user_question\":\"Can you help me reset my password?\",\"chatbot_answer\":\"Sure\",\"user_feedback\":\"go to the login page and click 'Forgot Password'.\"}",

"scenario": "",

"generation": 0,