🏠 Overview#

Version: 0.1.6

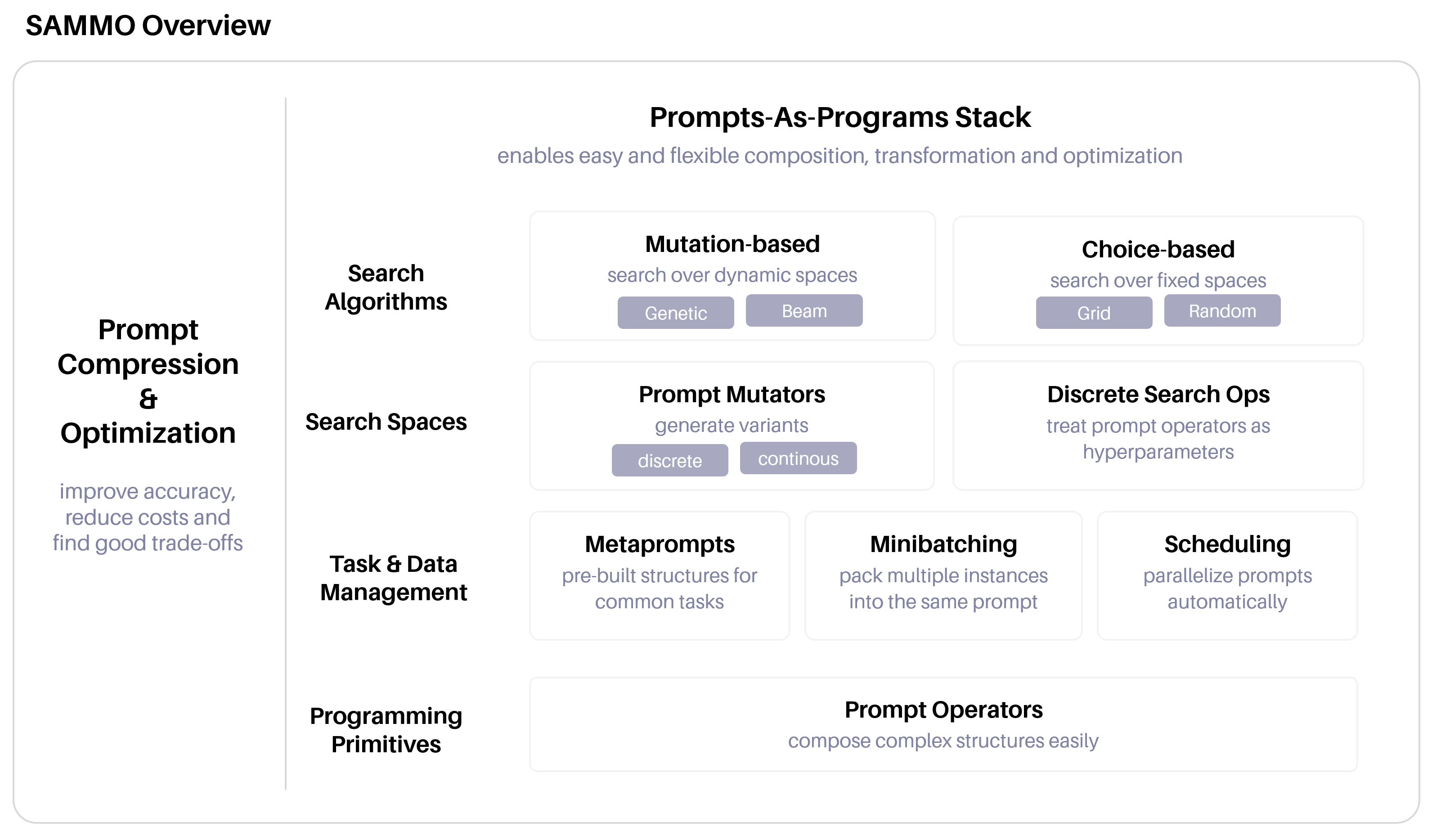

A flexible, easy-to-use library for running and optimizing prompts for Large Language Models (LLMs).

Install library only#

pip install sammo

Install and run tutorials#

Prerequisites

Python 3.11+

The following commands will install sammo and jupyter and launch jupyter notebook. It’s recommended that you create and activate a virtualenv prior to installing packages.

pip install sammo jupyter

# clone sammo to a local directory

git clone https://github.com/microsoft/sammo.git

cd sammo

# launch jupyter notebook and open tutorials directory

jupyter notebook --notebook-dir docs/tutorials

Use Cases#

SAMMO is designed to support

Efficient data labeling: Supports minibatching by packing and parsing multiple datapoints into a single prompt.

Prompt prototyping and engineering: Re-usable components and prompt structures to quickly build and test new prompts.

Instruction optimization: Optimize instructions to do better on a given task.

Prompt compression: Compress prompts while maintaining performance.

Large-scale prompt execution: parallelization and rate-limiting out-of-the-box so you can run many queries in parallel and at scale without overwhelming the LLM API.

It is less useful if you want to build

Example#

This is extending the chat dialog example from Guidance by running queries in parallel.

runner = OpenAIChat(model_id="gpt-3.5-turbo", api_config=API_CONFIG)

expert_names = GenerateText(

Template(

"I want a response to the following question:"

"{{input}}\n"

"Name 3 world-class experts (past or present) who would be great at answering this? Don't answer the question yet."

),

system_prompt="You are a helpful and terse assistant.",

randomness=0,

max_tokens=300,

)

joint_answer = GenerateText(

"Great, now please answer the question as if these experts had collaborated in writing a joint anonymous answer.",

history=expert_names,

randomness=0,

max_tokens=500,

)

questions = [

"How can I be more productive?",

"What will AI look like in 10 years?",

"How do we end world hunger?",

]

print(Output(joint_answer).run(runner, questions))