Unity integration overview

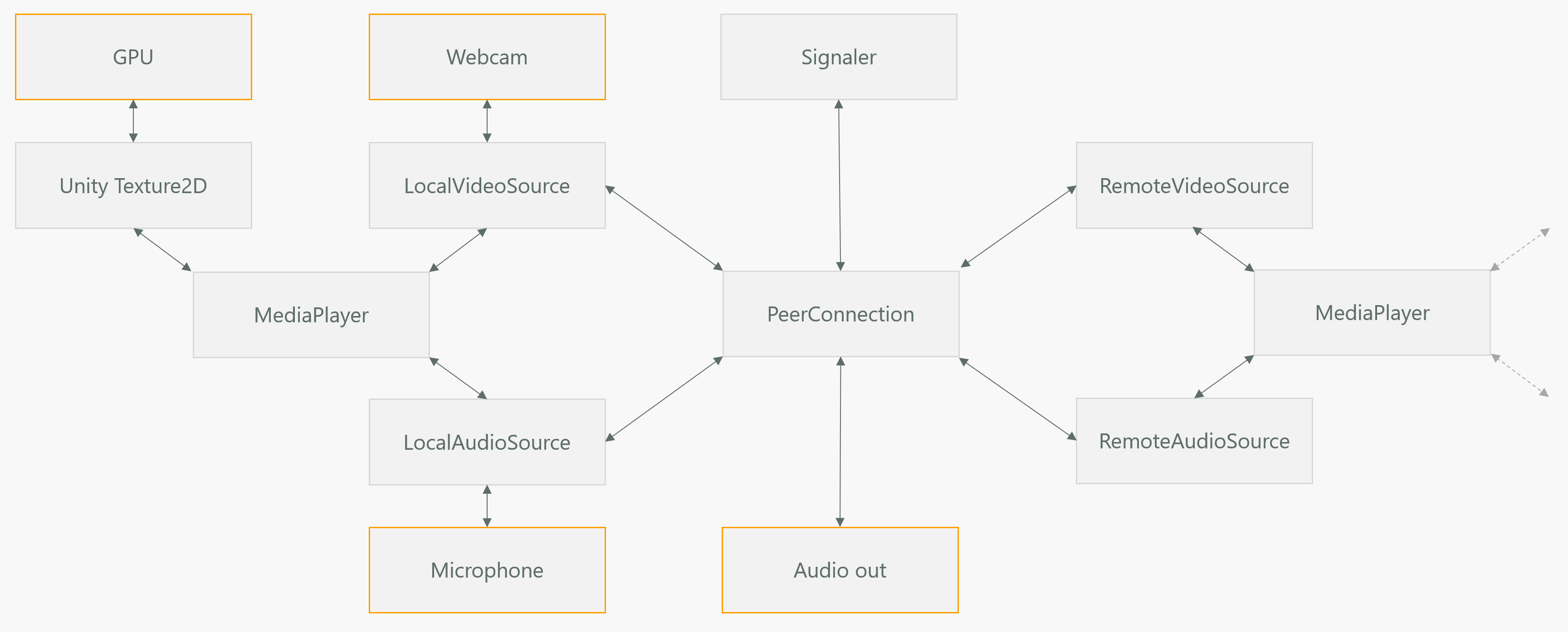

The Unity integration offers a simple way to add real-time communication to an existing Unity application. MixedReality-WebRTC provides a collection of Unity components (MonoBehaviour-derived classes) which encapsulate objects from the underlying C# library. These components allow in-editor configuration, and can establish a connection to a remote peer both in standalone and in Play mode.

- The `PeerConnection` component is the entry point for configuring and establishing a peer-to-peer connection.
- The peer connection component makes use of a `Signaler` to handle the dispatching of SDP messages until the direct peer-to-peer connection can be established.
- Audio and video tracks from a local audio (microphone) and video (webcam) capture device are handled by the `LocalAudioSource` and `LocalVideoSource` components, respectively.
- For remote tracks, similarly, the `RemoteAudioSource` and `RemoteVideoSource` components respectively handle configuring a remote audio and video track streamed from the remote peer.
- Rendering of both local and remote media tracks is handled by the `MediaPlayer` component, which connects to a video source and renders it using a custom shader into a Unity Texture2D object, which is later applied on a mesh to be rendered in the scene.

Warning

Currently the remote audio stream is sent directly to the local audio output device, without any interaction with the `MediaPlayer` component, while the local audio stream is never rendered (heard) locally. This is because audio callbacks are not yet implemented on the wrapped PeerConnection object from the underlying C# library.