
Measure LLAMA speed

```python
import pandas
import matplotlib.pyplot as plt
import itertools
import torch
from onnxrt_backend_dev.ext_test_case import unit_test_going
from onnxrt_backend_dev.bench_run import run_benchmark, get_machine, BenchmarkError

repeat = 5
script_name = "onnxrt_backend_dev.llama.dort_bench"

# Hardware description; skipped when the script runs as a unit test.
machine = {} if unit_test_going() else get_machine()

if machine.get("capability", (0, 0)) >= (7, 0):
    # Recent GPU: benchmark every backend on every available device.
    configs = []
    for backend, device, num_hidden_layers in itertools.product(
        ["inductor", "ort"],
        ["cpu", "cuda"] if torch.cuda.is_available() else ["cpu"],
        [1, 2],
    ):
        configs.append(
            dict(
                backend=backend,
                device=device,
                num_hidden_layers=num_hidden_layers,
                repeat=repeat,
            )
        )
else:
    # Otherwise, only benchmark onnxruntime on CPU.
    configs = [
        dict(backend="ort", device="cpu", num_hidden_layers=1, repeat=repeat),
        dict(backend="ort", device="cpu", num_hidden_layers=2, repeat=repeat),
    ]

try:
    data = run_benchmark(script_name, configs, verbose=1)
    data_collected = True
except BenchmarkError as e:
    print(e)
    data_collected = False

if data_collected:
    df = pandas.DataFrame(data)
    df = df.drop(["ERROR", "OUTPUT"], axis=1)
    filename = "plot_llama_bench.csv"
    df.to_csv(filename, index=False)
    # Reload from CSV so that pandas infers the column types.
    df = pandas.read_csv(filename)
    print(df)
```

```
  0%|          | 0/2 [00:00<?, ?it/s]
 50%|█████     | 1/2 [00:16<00:16, 16.10s/it]
100%|██████████| 2/2 [00:36<00:00, 18.50s/it]
100%|██████████| 2/2 [00:36<00:00, 18.14s/it]
```

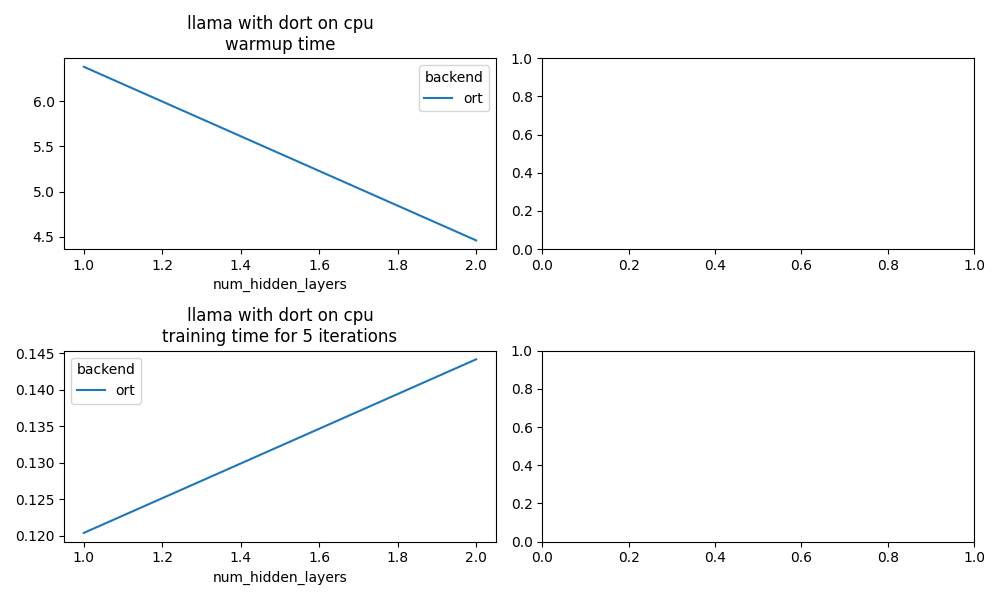

| | time | warmup_time | backend | device | num_hidden_layers | repeat | machine | processor | version | cpu | executable | has_cuda | capability | device_name | CMD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.120378 | 6.381680 | ort | cpu | 1 | 5 | x86_64 | x86_64 | 3.10.12 (main, Jun 11 2023, 05:26:28) [GCC 11.... | 8 | /usr/bin/python | True | (6, 1) | NVIDIA GeForce GTX 1060 | [/usr/bin/python -m onnxrt_backend_dev.llama.d... |
| 1 | 0.144195 | 4.460361 | ort | cpu | 2 | 5 | x86_64 | x86_64 | 3.10.12 (main, Jun 11 2023, 05:26:28) [GCC 11.... | 8 | /usr/bin/python | True | (6, 1) | NVIDIA GeForce GTX 1060 | [/usr/bin/python -m onnxrt_backend_dev.llama.d... |
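The configuration logic of the first cell can be isolated in a small pure-Python function and checked without running any benchmark. `build_configs` is a hypothetical helper, not part of `onnxrt_backend_dev`; it takes the GPU capability and CUDA availability as plain arguments instead of calling `get_machine()` and `torch.cuda.is_available()`.

```python
import itertools


def build_configs(capability, cuda_available, repeat=5):
    """Hypothetical helper mirroring the branching of the first cell.

    capability: (major, minor) CUDA compute capability, (0, 0) if unknown.
    cuda_available: stands in for torch.cuda.is_available().
    """
    if capability >= (7, 0):
        devices = ["cpu", "cuda"] if cuda_available else ["cpu"]
        # Cartesian product: every backend on every device for every depth.
        return [
            dict(backend=b, device=d, num_hidden_layers=n, repeat=repeat)
            for b, d, n in itertools.product(["inductor", "ort"], devices, [1, 2])
        ]
    # Capability below (7, 0): only onnxruntime on CPU, one config per depth.
    return [
        dict(backend="ort", device="cpu", num_hidden_layers=n, repeat=repeat)
        for n in [1, 2]
    ]


print(len(build_configs((6, 1), True)))  # 2
print(len(build_configs((8, 0), True)))  # 8
```

With the GeForce GTX 1060 reported above, the capability (6, 1) compares below (7, 0) as a tuple, which is why only the two `ort`/`cpu` rows appear. The (7, 0) threshold presumably reflects the minimum compute capability needed by Triton, which `inductor` relies on for GPU kernels; the example itself does not state the reason.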

A simpler view, keeping only the columns needed for the plots.

```python
if data_collected:
    try:
        dfs = df[["backend", "num_hidden_layers", "time", "device", "warmup_time"]]
    except KeyError as e:
        raise RuntimeError(f"Missing columns in {df.columns}\n{df.head().T}") from e
    print(dfs)
```

| | backend | num_hidden_layers | time | device | warmup_time |
|---|---|---|---|---|---|
| 0 | ort | 1 | 0.120378 | cpu | 6.381680 |
| 1 | ort | 2 | 0.144195 | cpu | 4.460361 |
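The plotting cell reshapes this long table with `pivot`: one row per `num_hidden_layers`, one column per `backend`. The sketch below rebuilds `dfs` by hand from the two rows printed above (no benchmark is run) and shows why each pivot is guarded with `len(piv) > 0`: filtering on a device that has no measurements produces an empty table.

```python
import pandas

# The two rows printed above, typed in by hand.
dfs = pandas.DataFrame(
    dict(
        backend=["ort", "ort"],
        num_hidden_layers=[1, 2],
        time=[0.120378, 0.144195],
        device=["cpu", "cpu"],
        warmup_time=[6.381680, 4.460361],
    )
)

# Rows: model depth; columns: backend; cells: warmup time.
piv_cpu = dfs[dfs.device == "cpu"].pivot(
    index="num_hidden_layers", columns="backend", values="warmup_time"
)
print(piv_cpu)

# No CUDA rows were collected on this machine, so this pivot is empty
# and the plotting cell skips the corresponding panel.
piv_cuda = dfs[dfs.device == "cuda"].pivot(
    index="num_hidden_layers", columns="backend", values="warmup_time"
)
print(len(piv_cuda))  # 0
```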

Plot the warmup time and the training time of each backend, on CPU and on CUDA.

```python
if data_collected:
    fig, ax = plt.subplots(2, 2, figsize=(10, 6))

    piv = dfs[dfs.device == "cpu"].pivot(
        index="num_hidden_layers", columns="backend", values="warmup_time"
    )
    if len(piv) > 0:
        piv.plot(title="llama with dort on cpu\nwarmup time", ax=ax[0, 0])

    piv = dfs[dfs.device == "cuda"].pivot(
        index="num_hidden_layers", columns="backend", values="warmup_time"
    )
    if len(piv) > 0:
        piv.plot(title="llama with dort on cuda\nwarmup time", ax=ax[0, 1])

    piv = dfs[dfs.device == "cpu"].pivot(
        index="num_hidden_layers", columns="backend", values="time"
    )
    if len(piv) > 0:
        piv.plot(
            title=f"llama with dort on cpu\ntraining time for {repeat} iterations",
            ax=ax[1, 0],
        )

    piv = dfs[dfs.device == "cuda"].pivot(
        index="num_hidden_layers", columns="backend", values="time"
    )
    if len(piv) > 0:
        piv.plot(
            title=f"llama with dort on cuda\ntraining time for {repeat} iterations",
            ax=ax[1, 1],
        )

    fig.tight_layout()
    fig.savefig("plot_llama_bench.png")
```
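The four nearly identical pivot-and-plot blocks could also be written as one loop over metric and device. `plot_grid` is a hypothetical refactoring, not code from the original example; it selects matplotlib's headless `Agg` backend so it runs without a display, and it returns the positions of the panels it actually drew. The sample `dfs` reuses the two rows printed earlier.

```python
import matplotlib

matplotlib.use("Agg")  # headless backend: no display needed
import matplotlib.pyplot as plt
import pandas


def plot_grid(dfs, repeat):
    """Hypothetical helper: same 2x2 layout and titles as the cell above."""
    fig, ax = plt.subplots(2, 2, figsize=(10, 6))
    metrics = [
        ("warmup_time", "warmup time"),
        ("time", f"training time for {repeat} iterations"),
    ]
    devices = ["cpu", "cuda"]
    drawn = []
    for row, (column, label) in enumerate(metrics):
        for col, device in enumerate(devices):
            piv = dfs[dfs.device == device].pivot(
                index="num_hidden_layers", columns="backend", values=column
            )
            if len(piv) > 0:  # leave the panel empty when no data exists
                piv.plot(title=f"llama with dort on {device}\n{label}", ax=ax[row, col])
                drawn.append((row, col))
    fig.tight_layout()
    return fig, drawn


dfs = pandas.DataFrame(
    dict(
        backend=["ort", "ort"],
        num_hidden_layers=[1, 2],
        time=[0.120378, 0.144195],
        device=["cpu", "cpu"],
        warmup_time=[6.381680, 4.460361],
    )
)
fig, drawn = plot_grid(dfs, repeat=5)
print(drawn)  # [(0, 0), (1, 0)]: only the CPU panels have data
```

The loop keeps the panel positions and titles of the explicit version; which of the two reads better is a matter of taste.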

Total running time of the script: (0 minutes 39.855 seconds)