Azure OpenAI Evaluations

PromptPex supports exporting the generated tests to Azure OpenAI Evaluations. PromptPex generates an eval and launches an eval run for each Model Under Test (MUT) in the test generation.

Configuration

PromptPex uses the Azure OpenAI credentials configured either in environment variables or through the Azure CLI / Azure Developer CLI. See GenAIScript Azure OpenAI Configuration.

The Azure OpenAI models that can be used as Model Under Test are the deployments available in your Azure OpenAI service.

Azure AI Foundry Portal

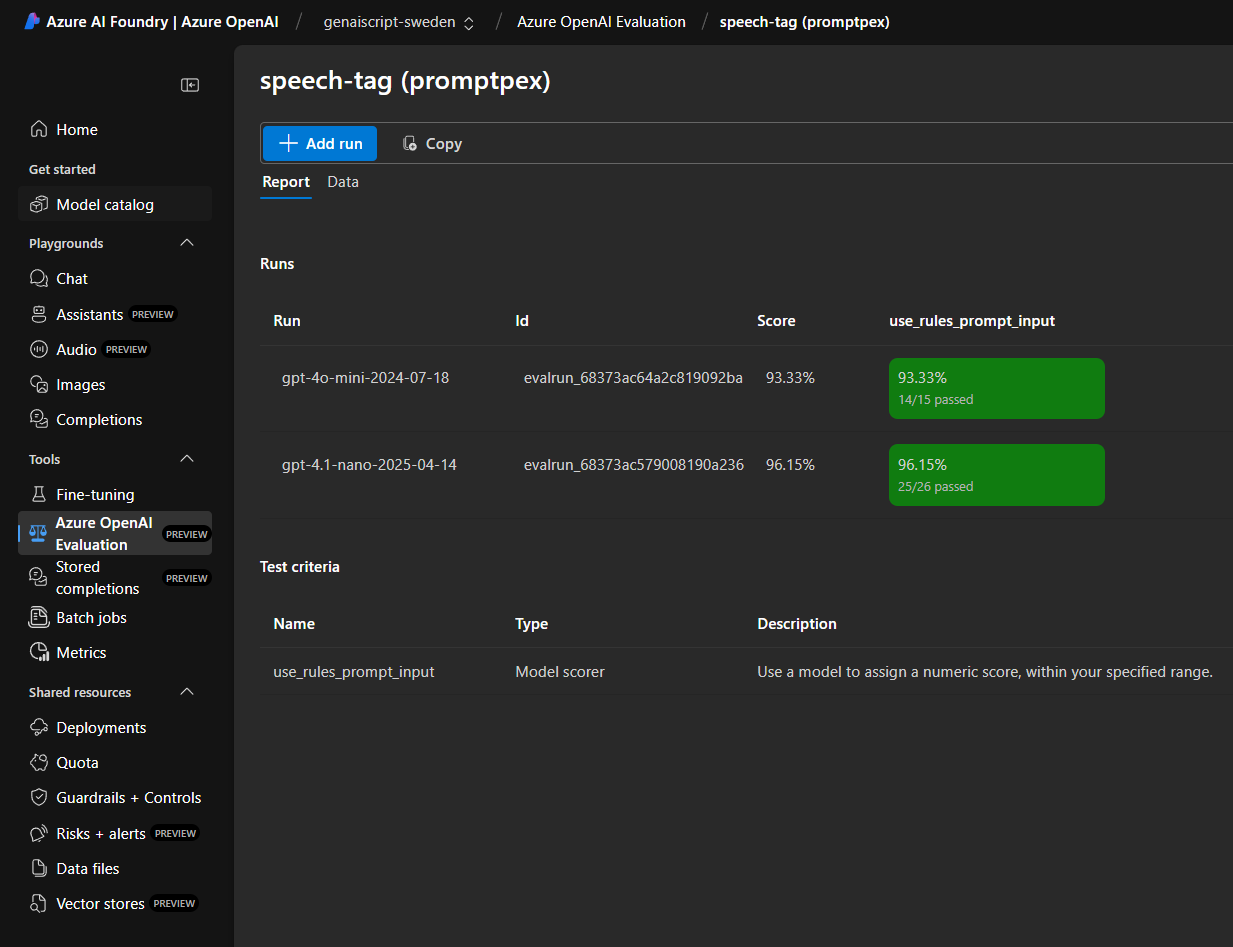

- Open Azure AI Foundry and select your Azure OpenAI resource.

- Navigate to the Azure OpenAI Evaluation section.

- You should see the evaluations created by PromptPex listed there.

Common errors

- Make sure that the Models Under Test are deployment names in your Azure OpenAI service. They should look something like azure:gpt-4.1-mini, azure:gpt-4.1-nano, or azure:gpt-4o-mini.

- Make sure the createEvalRuns parameter is set to true in the web interface or on the command line.
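
The Model Under Test naming check can be scripted before launching a run. The following is a minimal sketch, not part of PromptPex: the helper names `parse_models_under_test` and `find_invalid_models` are hypothetical, and the pattern only assumes the `azure:<deployment-name>` shape shown in the examples above. It does not verify that the deployment actually exists in your Azure OpenAI service.

```python
import re

# Assumed shape of a Model Under Test identifier: "azure:" + deployment name.
# The allowed characters are a guess for illustration, not an Azure rule.
MUT_PATTERN = re.compile(r"^azure:[A-Za-z0-9][A-Za-z0-9._-]*$")


def parse_models_under_test(value: str) -> list[str]:
    """Split a comma-separated list and trim whitespace around each entry."""
    return [item.strip() for item in value.split(",") if item.strip()]


def find_invalid_models(models: list[str]) -> list[str]:
    """Return the entries that do not look like azure:<deployment-name>."""
    return [m for m in models if not MUT_PATTERN.match(m)]


if __name__ == "__main__":
    models = parse_models_under_test(
        "azure:gpt-4.1-mini, azure:gpt-4.1-nano, gpt-4o-mini"
    )
    # The last entry lacks the "azure:" prefix, so it is reported.
    print(find_invalid_models(models))  # prints ['gpt-4o-mini']
```

An empty result only means the identifiers are well-formed; a misspelled deployment name would still pass this check and fail at evaluation time.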