How this library works with the Responsible AI Toolbox

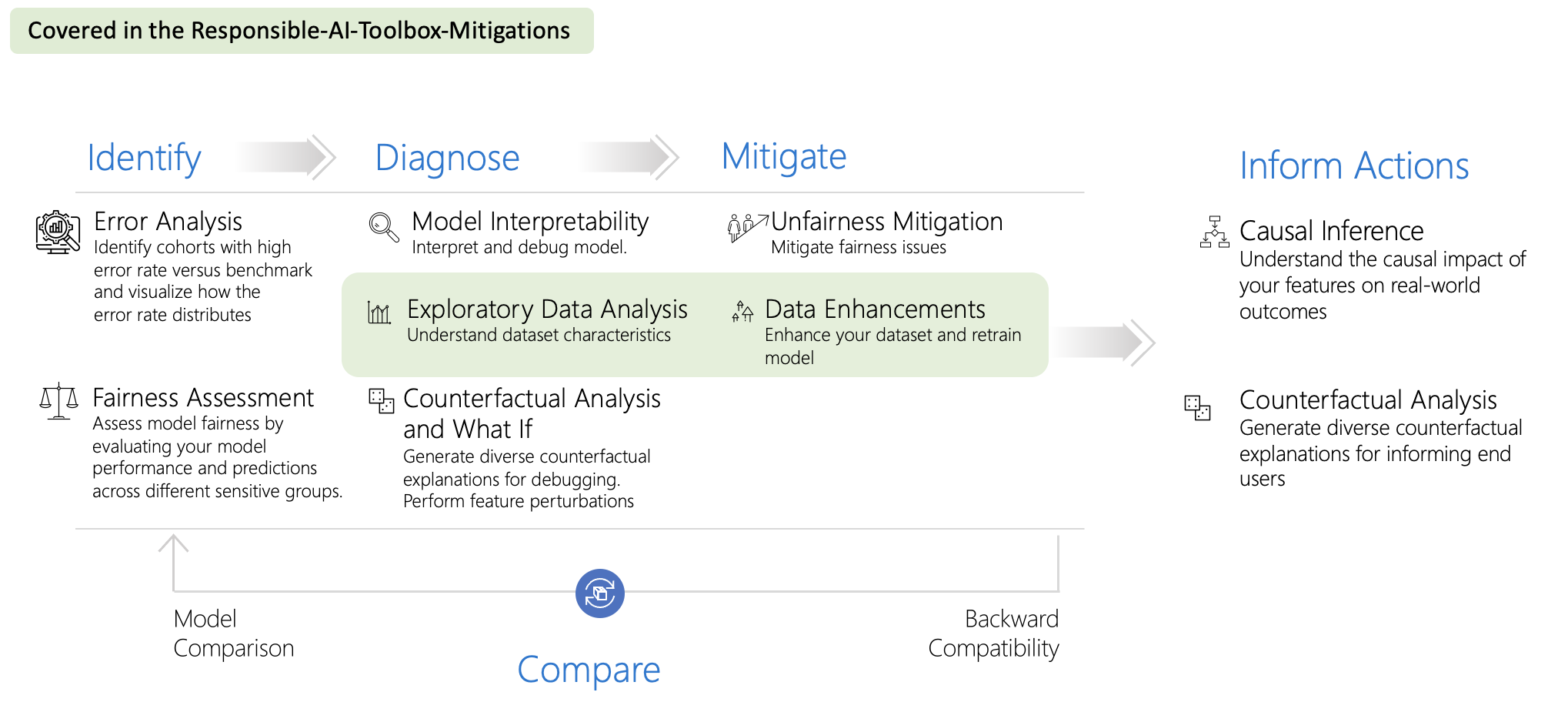

The Responsible AI Mitigations Library is part of the Responsible AI Toolbox, a larger effort for integrating and building development tools for responsible AI. One of the central contributions of the Toolbox is the dashboard, which bringing together several mature Responsible AI tools in the areas of machine learning interpretability, unfairness assessment and mitigation, error analysis, causal inference, and counterfactual analysis for a holistic assessment and debugging of models and making informed business decisions.

A practitioner using the Responsible AI Mitigations Library may rely on the Responsible AI Dashboard ** to identify and diagnose failure modes.** Take a look at this technical blog on how to leverage the dashboard for pre-mitigation steps.

At a high level, components in the dashboard such as Error Analysis and Model Overview help with the identification stage by discovering cohorts of data for which the model underperforms. Other components like the Data Explorer, Interpretability, and Counterfactual Analysis assist with understanding underlying reasons for why the model is underperforming. These components go back to the data (Data Explorer) or to the model (Interpretability) to highlight data statistics and feature importance. As the practitioner investigates the data or the model, they may create hypotheses about how to map the diagnoses to mitigation steps and then implement them through the Responsible AI Mitigations library.

From a mitigation perspective, Fairlearn is a closely relevant library in particular for mitigating fairness-related concerns. The set of mitigations in Fairlearn approach the problem of mitigating model underperformance for given cohorts by framing it as a cost-sensitive classification problem, where samples that satisfy a particular constraint (similar to the cohort definition) are weighed differently in the optimization process. These mitigations are complementary to the ones provided here and can be used in combination together.

In addition, we also encourage practitioners to rigorously validate new post-mitigation models and compare them with previous versions to make sure that the mitigation step indeed improved the model in the way the practitioner expected and that the mitigation step did not lead to new mistakes. To assist with these steps, BackwardCompatibilityML is a package for an extended support on model comparison and backward compatible training.

We also encourage practitioners to rigorously validate new post-mitigation models and compare them with previous versions to make sure that the mitigation step indeed improved the model in the way the practitioner expected and that the mitigation step did not lead to new mistakes. Responsible AI Tracker is a tool in the Responsible AI Toolbox that can be installed as a JupyterLab extension and helps developers track, manage, and compare Responsible AI mitigations and experiments along with the necessary code and model artifacts. Disaggregated comparisons are central to the comparative views offered in Responsible AI Tracker and enable practitioners to track performance improvements or decline across cohorts. For example, if the error mitigation process was focused in improving performance on a cohort of interest, Responsible AI Tracker can validate that the process indeed improves performance for that cohort, while also keeping track at all other cohorts of interests. In addition, BackwardCompatibilityML a package for an extended support on backward compatible training, which aims at improving model accuracy without introducing new errors.

Integration between raimitigations and raiwidgets

The raimitigations and Responsible AI Toolbox (raiwidgets) libraries allow users to apply mitigations or analyze the behavior of certain cohorts. These libraries

can be used together in order to analyze and mitigate certain aspects of a given cohort. We demonstrate this integration between these two libraries in the following

notebook, where we show how to create a set of cohorts in one library and continue the work in the other library by importing these cohorts.