🏠 Overview

Version: 0.3.3

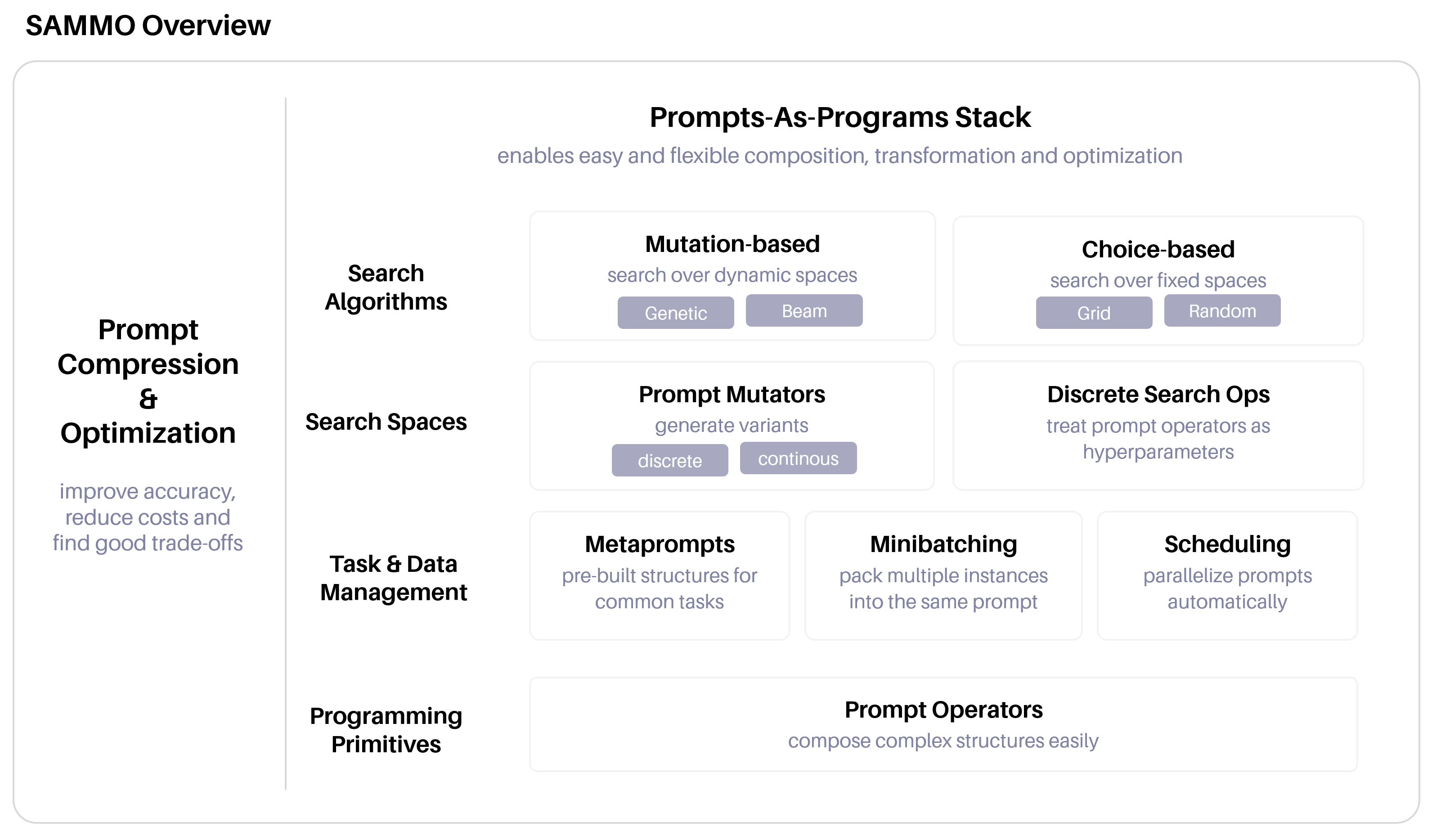

A flexible, easy-to-use library for running and optimizing prompts for Large Language Models (LLMs).

Install library only

pip install sammo

Install and run tutorials

Prerequisites

Python 3.9+

The following commands install sammo and Jupyter, then launch Jupyter Notebook in the tutorials directory. We recommend creating and activating a virtual environment before installing packages.

pip install sammo jupyter

# clone sammo to a local directory
git clone https://github.com/microsoft/sammo.git
cd sammo

# launch jupyter notebook and open tutorials directory
jupyter notebook --notebook-dir docs/tutorials
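If the notebook fails to start or `import sammo` raises an error, a quick environment check can narrow down the cause. The snippet below is a minimal sketch that uses only the Python standard library; `check_environment` is a hypothetical helper written for this page, not part of sammo.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 9)  # prerequisite stated above


def check_environment(packages=("sammo",)):
    """Return a dict mapping each check name to True or False."""
    results = {"python": sys.version_info >= MIN_PYTHON}
    for name in packages:
        # find_spec returns None when a top-level package cannot be found
        results[name] = importlib.util.find_spec(name) is not None
    return results


if __name__ == "__main__":
    for check, ok in check_environment().items():
        print(f"{check}: {'ok' if ok else 'missing'}")
```

Run it with the same interpreter that the virtual environment uses; a `missing` entry usually means the package was installed into a different environment.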

Example

This example shows how easy it is to optimize a prompt with SAMMO. The full example is in the user guide.

# API_CONFIG, accuracy, and d_train are not defined in this excerpt;
# see the full example in the user guide.
runner = OpenAIChat(model_id="gpt-3.5-turbo", api_config=API_CONFIG)

PROMPT_IN_MARKDOWN = """
# Instructions <!-- #instr -->
Convert the following user queries into a SQL query.

# Table
Users:
- user_id (INTEGER, PRIMARY KEY)
- name (TEXT)
- age (INTEGER)
- city (TEXT)

# Complete this
Input: {{{input}}}
Output:
"""

# Parse the Markdown prompt into a SAMMO program.
spp = MarkdownParser(PROMPT_IN_MARKDOWN).get_sammo_program()

# The starting program, followed by mutators that rewrite the section tagged #instr.
mutation_operators = BagOfMutators(
    Output(GenerateText(spp)),
    Paraphrase("#instr"),
    Rewrite("#instr", "Make this more verbose.\n\n {{{{text}}}}"),
)

# Search over mutated prompts, scoring candidates with accuracy on d_train.
prompt_optimizer = BeamSearch(runner, mutation_operators, accuracy)
prompt_optimizer.fit(d_train)
prompt_optimizer.show_report()
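To make the roles of the mutation operators and the beam search more concrete, here is a library-free sketch of the general idea: keep the best few candidate prompts, apply every mutator to each of them, rescore, and repeat. This is not SAMMO's implementation. The function `beam_search`, the two toy mutators, and `toy_score` are hypothetical stand-ins; a real run would score each candidate by calling an LLM on training data.

```python
from typing import Callable, List, Tuple

Mutator = Callable[[str], str]


def beam_search(
    initial: str,
    mutators: List[Mutator],
    score: Callable[[str], float],
    beam_width: int = 2,
    depth: int = 3,
) -> Tuple[str, float]:
    """Return the best-scoring candidate found after `depth` rounds of mutation."""
    beam = [(score(initial), initial)]
    for _ in range(depth):
        # Expand: keep current candidates and add every mutation of each one.
        candidates = {text for _, text in beam}
        for _, text in beam:
            candidates.update(mutate(text) for mutate in mutators)
        # Prune: keep only the `beam_width` highest-scoring candidates.
        scored = sorted(((score(c), c) for c in candidates), reverse=True)
        beam = scored[:beam_width]
    best_score, best_text = beam[0]
    return best_text, best_score


def add_schema_hint(prompt: str) -> str:
    hint = " Use only the Users table."
    return prompt if hint in prompt else prompt + hint


def add_format_hint(prompt: str) -> str:
    hint = " Return SQL only."
    return prompt if hint in prompt else prompt + hint


def toy_score(prompt: str) -> float:
    # Stand-in for accuracy on training data: counts desirable phrases.
    return float(sum(kw in prompt for kw in ("Users table", "SQL only")))


best_text, best_score = beam_search(
    "Convert the query to SQL.", [add_schema_hint, add_format_hint], toy_score
)
print(best_score, best_text)
```

The beam width trades cost for coverage: a wider beam evaluates more candidates per round, which in a real setting means more LLM calls.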

Use Cases

SAMMO is designed to support:

Efficient data labeling: Supports minibatching by packing multiple data points into a single prompt and parsing the results.

Prompt prototyping and engineering: Re-usable components and prompt structures to quickly build and test new prompts.

Instruction optimization: Optimize instructions to do better on a given task.

Prompt compression: Compress prompts while maintaining performance.

Large-scale prompt execution: Parallelization and rate limiting out of the box, so you can run many queries in parallel and at scale without overwhelming the LLM API.
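As an illustration of the minibatching idea in the first use case, the sketch below packs several inputs into one numbered prompt and maps the numbered answers back to their inputs. It is a standalone example under assumed conventions, not SAMMO's API: `pack_minibatch`, `parse_minibatch`, and the `Input i:` / `Output i:` line format are all hypothetical.

```python
import re
from typing import List, Optional

# Matches lines such as "Output 2: negative".
_OUTPUT_LINE = re.compile(r"^Output\s+(\d+):\s*(.*)$", re.MULTILINE)


def pack_minibatch(instructions: str, inputs: List[str]) -> str:
    """Build one prompt that asks for labels for several inputs at once."""
    numbered = "\n".join(
        f"Input {i}: {text}" for i, text in enumerate(inputs, start=1)
    )
    return (
        f"{instructions}\n\n{numbered}\n\n"
        "Answer with one line per input, formatted as 'Output <number>: <label>'."
    )


def parse_minibatch(response: str, n_inputs: int) -> List[Optional[str]]:
    """Map each 'Output i: ...' line back to input i; missing ones stay None."""
    labels: List[Optional[str]] = [None] * n_inputs
    for match in _OUTPUT_LINE.finditer(response):
        index = int(match.group(1)) - 1
        if 0 <= index < n_inputs:
            labels[index] = match.group(2).strip()
    return labels


prompt = pack_minibatch("Label the sentiment.", ["great", "awful", "fine"])
# A made-up model response in which the answer for input 2 is missing.
labels = parse_minibatch("Output 1: positive\nOutput 3: neutral", n_inputs=3)
print(labels)
```

Returning `None` for missing answers, rather than shifting the remaining labels, keeps each label aligned with its input so that only the failed items need to be retried.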

It is less useful if you want to build