DirectX-Specs

D3D12 Work Graphs

v1.012 2/4/2026

Contents

- Introduction to work graphs

- Definitions

- System design

- Overview

- Nodes

- Node arrays

- Shader at a node

- Resource bindings

- Feeding nodes

- Node types

- Shader invocation at a node

- Node ID

- Node input

- Node output

- Shaders can use input record lifetime for scoped scratch storage

- Sharing input records across nodes

- Wave semantics

- Quad and derivative operation semantics

- NonUniformResourceIndex semantics

- Producer - consumer dataflow through UAVs

- Work Graph

- Node limits

- Backing memory

- Binding a work graph

- Initiating work from a command list

- No deadlocks / system overload

- Joins - synchronizing within the graph

- Scheduling considerations for the driver

- Discovering device support for work graphs

- Interactions with other D3D12 features

- Graphics nodes

- API

- Device methods

- CheckFeatureSupport Structures

- CheckFeatureSupport

- CreateStateObject

- CreateStateObject Structures

- D3D12_STATE_OBJECT_DESC

- D3D12_STATE_SUBOBJECT

- D3D12_STATE_OBJECT_TYPE

- D3D12_STATE_SUBOBJECT_TYPE

- D3D12_STATE_OBJECT_FLAGS

- D3D12_STATE_OBJECT_CONFIG

- D3D12_WORK_GRAPH_DESC

- D3D12_WORK_GRAPH_FLAGS

- D3D12_NODE

- D3D12_NODE_TYPE

- D3D12_SHADER_NODE

- D3D12_NODE_OVERRIDES_TYPE

- D3D12_BROADCASTING_LAUNCH_OVERRIDES

- D3D12_COALESCING_LAUNCH_OVERRIDES

- D3D12_THREAD_LAUNCH_OVERRIDES

- D3D12_COMMON_COMPUTE_NODE_OVERRIDES

- D3D12_NODE_ID

- D3D12_NODE_OUTPUT_OVERRIDES

- D3D12_PROGRAM_NODE

- D3D12_PROGRAM_NODE_OVERRIDES_TYPE

- D3D12_MESH_LAUNCH_OVERRIDES

- D3D12_MAX_NODE_INPUT_RECORDS_PER_GRAPH_ENTRY_RECORD

- D3D12_DRAW_LAUNCH_OVERRIDES

- D3D12_DRAW_INDEXED_LAUNCH_OVERRIDES

- D3D12_COMMON_PROGRAM_NODE_OVERRIDES

- CreateStateObject Structures

- CreateVertexBufferView

- CreateDescriptorHeap

- AddToStateObject

- ID3D12StateObjectProperties1 methods

- ID3D12WorkGraphProperties methods

- ID3D12WorkGraphProperties1 methods

- Command list methods

- Device methods

- HLSL

- Shader target

- Shader function attributes

- Node Shader Parameters

- Record struct

- Objects

- Record access

- Node input declaration

- Methods operating on node input

- Node output declaration

- Node output array indexing

- Methods operating on node output

- Node and record object semantics in shaders

- Examples of input and output use in shaders

- General intrinsics

- DXIL

- DXIL Shader function attributes

- Node and record handles

- Node input and output metadata table

- Lowering Get-NodeOutputRecords

- Lowering input/output loads and stores

- Lowering IncrementOutputCount

- Lowering OutputComplete

- Lowering input record Count method

- Lowering FinishedCrossGroupSharing

- NodeRecordType metadata structure

- Lowering Node output IsValid method

- Lowering GetRemainingRecursionLevels

- Lowering Barrier

- Node and record object semantics in DXIL

- Example of creating node input and output handles

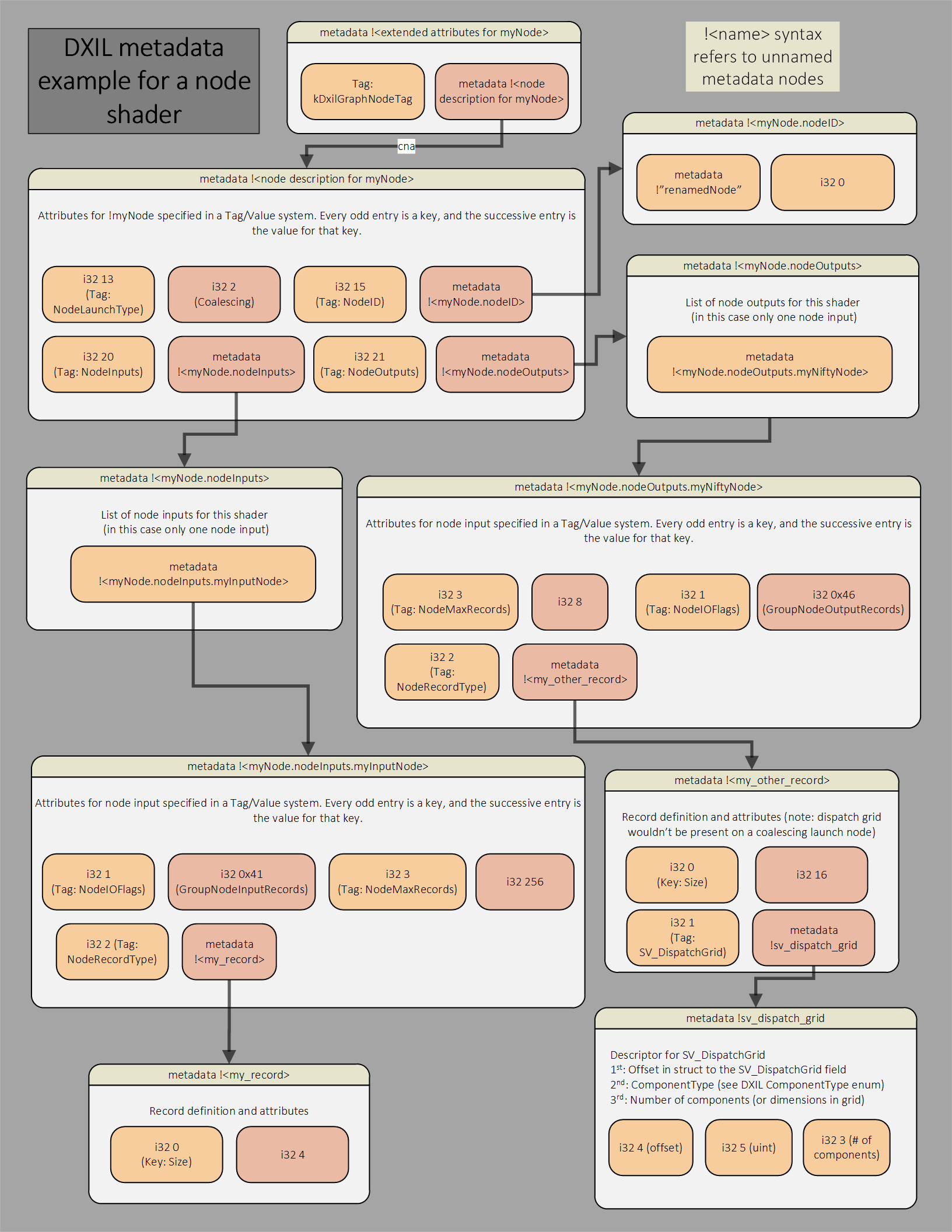

- Example DXIL metadata diagram

- DDI

- DDI for reporting work graph support

- DDI function tables

- DDI function table related structures and enums

- D3D12DDI_PROGRAM_IDENTIFIER_0108

- D3D12DDI_WORK_GRAPH_MEMORY_REQUIREMENTS_0108

- D3D12DDI_SET_PROGRAM_DESC_0108

- D3D12DDI_PROGRAM_TYPE_0108

- D3D12DDI_SET_WORK_GRAPH_DESC_0108

- D3D12DDI_SET_WORK_GRAPH_FLAGS_0108

- D3D12DDI_DISPATCH_GRAPH_DESC_0108

- D3D12DDI_DISPATCH_MODE_0108

- D3D12DDI_NODE_CPU_INPUT_0108

- D3D12DDI_NODE_GPU_INPUT_0108

- D3D12DDI_MULTI_NODE_CPU_INPUT_0108

- D3D12DDI_MULTI_NODE_GPU_INPUT_0108

- DDI state object creation related structures and enums

- D3D12DDI_STATE_SUBOBJECT_TYPE

- D3D12DDI_WORK_GRAPH_DESC_0108

- D3D12DDI_WORK_GRAPH_FLAGS_0108

- D3D12DDI_NODE_LIST_ENTRY_0108

- D3D12DDI_NODE_0108

- D3D12DDI_NODE_TYPE_0108

- D3D12DDI_SHADER_NODE_0108

- D3D12DDI_NODE_PROPERTIES_TYPE_0108

- D3D12DDI_BROADCASTING_LAUNCH_NODE_PROPERTIES_0108

- D3D12DDI_COALESCING_LAUNCH_NODE_PROPERTIES_0108

- D3D12DDI_THREAD_LAUNCH_NODE_PROPERTIES_0108

- D3D12DDI_NODE_OUTPUT_0108

- D3D12DDI_NODE_IO_FLAGS_0108

- D3D12DDI_NODE_IO_KIND_0108

- D3D12DDI_RECORD_DISPATCH_GRID_0108

- D3D12DDI_NODE_ID_0108

- D3D12DDI_PROGRAM_NODE_0110

- D3D12DDI_MESH_LAUNCH_PROPERTIES_0110

- D3D12DDI_MAX_NODE_INPUT_RECORDS_PER_GRAPH_ENTRY_RECORD_0110

- D3D12DDI_DRAW_LAUNCH_PROPERTIES_0108

- D3D12DDI_DRAW_INDEXED_LAUNCH_PROPERTIES_0108

- Generic programs

- Supported shader targets

- Resource binding

- CreateStateObject structures for generic programs

- D3D12_DXIL_LIBRARY_DESC

- D3D12_EXPORT_DESC

- D3D12_GENERIC_PROGRAM_DESC

- Subobjects that can be listed in a generic program

- Defaults for subobjects missing from a generic program

- Missing STREAM_OUTPUT

- Missing BLEND

- Missing SAMPLE_MASK

- Missing RASTERIZER

- Missing INPUT_LAYOUT

- Missing INDEX_BUFFER_STRIP_CUT_VALUE

- Missing PRIMITIVE_TOPOLOGY

- Missing RENDER_TARGET_FORMATS

- Missing DEPTH_STENCIL_FORMAT

- Missing SAMPLE_DESC

- Missing VIEW_INSTANCING

- Missing FLAGS

- Missing DEPTH_STENCIL or DEPTH_STENCIL1 or DEPTH_STENCIL2

- Change log

Introduction to work graphs

Work graphs are a system for GPU based work creation in D3D12.

Basis

In many GPU workoads, an initial calculation on the GPU determines what subsequent work the GPU needs to do. This can be accomplished with a round trip back to the CPU to issue the new work. But it is typically better for the GPU to be able to feed itself directly. ExecuteIndirect in D3D12 is a form of this, where the app uses the GPU to record a very constrained command buffer that needs to be serially processed on the GPU to issue new work.

Consider a new option. Suppose shader threads running on the GPU (producers) can request other work to run (consumers). Consumers can be producers as well. The system can schedule the requested work as soon as the GPU has capacity to run it. The app can also let the system manage memory for the data flowing between tasks.

This is work graphs. A graph of nodes where shader code at each node can request invocations of other nodes, without waiting for them to launch. Work graphs capture the user’s algorithmic intent and overall structure, without burdening the developer to know too much about the specific hardware it will run on. The asynchronous nature maximizes the freedom for the system to decide how best to execute the work.

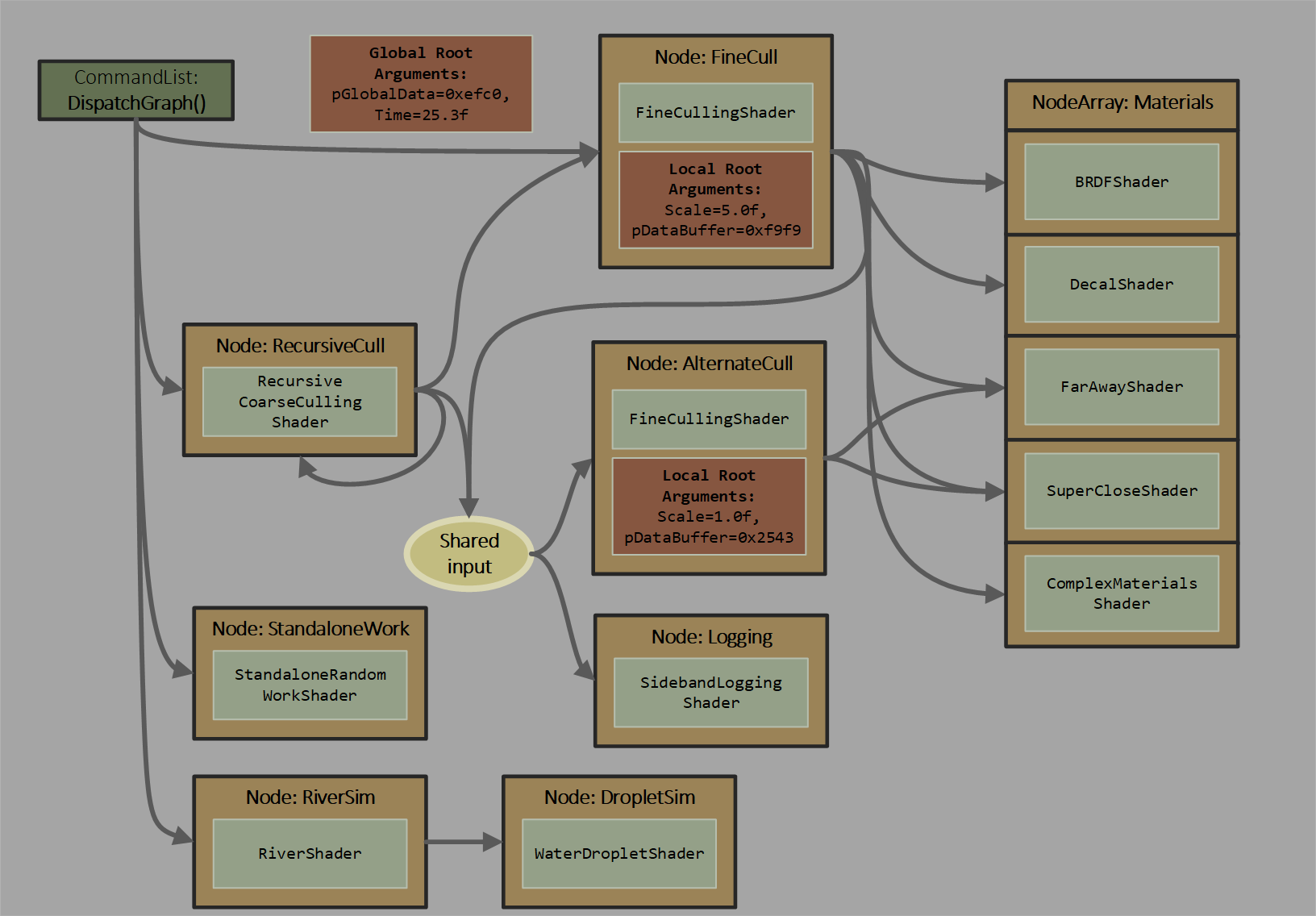

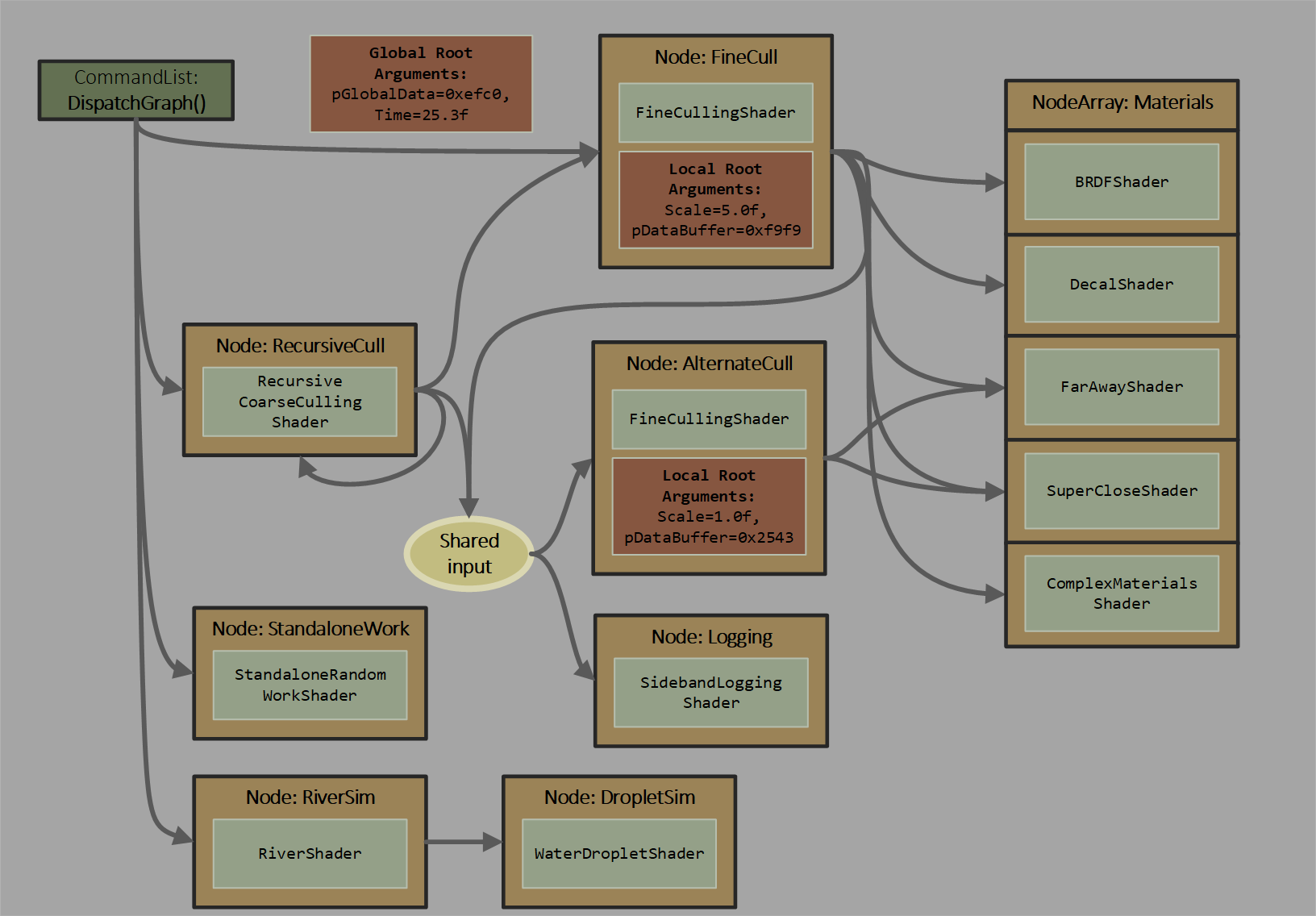

Here is a graph contrived to illustrate several capabilities:

Characteristics

- When initiating work with

DispatchGraph(), the app can pass arguments to graph entrypoints from either app CPU memory copied into a command list’s recording, or app GPU memory read at command list execution. These options are convenient even in a single node graph. - There are a few options for how a node translates incoming work requests into a set of shader invocations, ranging from a single thread per work item to variable sized grids of thread groups per work item.

- The graph is acyclic, with one exception: a node can output to itself. There is a depth limit of 32 including recursion.

- For implementation efficiency there are limits on the amount of data that node invocations can pass directly to other nodes; for bulk data transfer apps need to use UAV accesses.

- Scheduling of work requests can be done by the system with whatever underlying hardware tools are at its disposal.

- Less capable architectures might revert to a single processor on the GPU scheduling work requests. More advanced architectures might use distributed scheduling techniques and account for the specific topology of processing resources on the GPU to efficiently manage many work requests being generated in parallel.

- This is in contrast to the

ExecuteIndirectmodel which forces a serial processing step for the GPU - walking through a GPU generated command list to determine a sequence of commands to issue.

- Data from a producer might be more likely to flow to a consumer directly, staying within caches

- This could reduce reliance on off-chip memory bandwidth for performance scaling

- Because data can flow between small tasks in a fine-grained way, the programming model doesn’t force the application to drain the GPU of work between data processing steps.

- Since the system handles buffering of data passed between producers and consumers (if the app wants), the programming model can be simpler than

ExecuteIndirect.

Analysis

Despite the potential advantages, the free scheduling model may not always the best target for an app’s workload. Characteristics of the task, such as how it interacts with memory/caches, or the sophistication of hardware over time, may dictate whether some existing approach is better. Like continuing to use ExecuteIndirect. Or building producer consumer systems out of relatively long running compute shader threads that cross communicate - clever and fragile. Or using the paradigms in the DirectX Raytracing model, involving shaders splitting up and continuing later. Work graphs are another tool in the toolbox.

Given that the model is about producers requesting for consumers to run, currently there isn’t an explicit notion of waiting before launching a node. For instance, waiting for all work at multiple producer nodes to finish before a consumer launches. This can technically be accomplished by breaking up a graph, or with clever shader logic. Synchronization wasn’t a focus for the initial design, hence these workarounds, discussed more in Joins - synchronizing within the graph. Native synchronization support may be defined in the future and would be better than the workarounds in lots of ways. Many other compute frameworks explicitly use a graph to define bulk dependencies, in contrast to the feed-forward model here.

Scenarios summary

- Reducing number of passes out to memory and GPU idling in multi-pass compute algorithms

- Avoiding the pattern in ExecuteIndirect of serial processing through worst case sized buffers

- Classification and binning via node arrays

- Eventually:

- Feeding graphics from compute

- Bulk work synchronization

Existing support

Here is a summary of existing ways the GPU can generate work for itself; a reminder of the breadth of work scheduling abilities GPUs already have at their disposal.

- Rasterizer

- Variable number of pixels with various strict ordering requirements.

- Tessellation and Geometry Shaders

- Mix of programmable expansion and fixed function.

- Mesh Shaders

- Alternative pipeline for programmable geometry expansion and processing

- Seeks to avoid bottlnecks in the above pipelines

- ExecuteIndirect

- App generates a command buffer on the GPU and then executes it

- Many limitations on PC like not being able to change shaders

- App needs to do worst case buffering between phases

- Messy implementations in drivers

- Could try to add flexibility here, but would be doubling down on the mess

- Callable Shaders (from DXR)

- Form of dynamic call from a shader thread that returns to caller

- Typically implemented by ending the shader invocation at the callsite and starting a new one to resume

- Could be extended outside DXR (even into this spec), but out of scope

- Work graphs expose a variation on launching threads of work when return to caller isn’t needed

Work graphs exists alongside these, but can potentially also merge in some of their abilities. There will be situations where more than one of these options can accomplish a task. Unless the work to be done can be molded to very closely match the structure of one of the solutions, it is difficult to say which option is best. The answer may depend on hardware details or content characteristics.

Definitions

Thread

Individual shader invocation on the GPU. Dynamic function calls made by a thread are considered part of the thread if they are invoked with semantics that require execution to return to the caller and resume in order.

Thread Group

Set of threads (with 3 dimensional ID per thread). Up to 1024 threads can be in a thread group, based on existing compute shader rules.

All threads in a thread group can access some thread group shared memory, whose scope is the lifetime of the thread group.

A compute Dispatch() can issue a 3D grid of thread groups, so each thread group has a 3D GroupID. Each dimension is limited to 65535.

It may be worth introducing a variant where the grid size is just a 1D UINT for simplicity. And/or it is a UINT with Y in the upper 16 bits and X in the lower 16 bits.

Threads in a thread group have no execution ordering guarantee with respect to each other. Same for the set of thread groups in a compute Dispatch().

Wave

Hardware specific grouping of threads/lanes executing together, in lockstep on some architectures but potentially diverging on other architectures.

Hardware chooses the mapping of threads from thread groups into waves. Some tricky shader instructions have been exposed that enable apps to opportunistically exploit whatever wave coherence that any given hardware may provide.

Program

The notion of a “program” encapsulates what used to be described as “pipeline state” or “pipeline state objects” in D3D12. Things that were pipeline state before can be equivalently defined as “program”s now. Like a VS+PS pipeline (along with other relevant state like rasterizer state). Or a standalone compute shader. The list of program types can be seen here.

Raytracing introduced state objects as a way of defining a collection of code and other configuration data. This container format now supports having multiple “programs” defined in a single object, such as multiple permutations of VS+PS graphics pipelines defined in one state object. The the exception is raytracing pipelines, which must be standalone, not mixed with other programs in one state object, for simplicity. Each individual program is something that can be bound as a unit on the GPU for execution.

Existing ways of defining pipelines are still valid, to maintain code compatibility.

The term “program” is used rather than “pipeline” to step away from the connotation in a “pipeline” that there is a sequence of operations. “Program” intends to convey a grouping of shaders and/or fixed function operations that are launched as logical grouping on the GPU. In many cases this grouping is a sequence, like a pipeline. But it can be a graph too, as in this spec.

System design

Overview

The app can define a set of nodes. In the most basic form at each node there is a fixed shader. In more advanced incarnations a node could have other contents, such as holding multiple shaders and state, as in a graphics program. Command lists can send records to nodes, initiating work: groups of shader invocations. Nodes can also send records to other nodes, generating more work.

Multiple nodes can use the same shader, specialized in some way. Multiple nodes can share input, meaning multiple different shaders can be launched for a given input. Multiple nodes can write to the same destination node.

Independent of node input/outputs, shaders can directly access memory via SRVs, UAVs etc.

Repeating the contrived graph from the intro as a visual aid:

Shaders can run when (a) input is available, and (b) if not a leaf node, sufficient output storage is reserved by the system. This is for the system to worry about for the most part - the system will find a way to schedule all generated work. That said, the app has some influence on how much backing storage the system can use in order to make progress.

Cycles in the flow of execution are not allowed, except that a node can target itself. In other words, a node cannot output to an ancestor node. Work is guaranteed to complete, barring app-error.

When a producing shader outputs to a consumer, the execution of the consumer may happen later, independent of the progress of the producing shader. Deadlock may occur if a producing shader’s completion is dependent on the execution of a consumer shader. There is no return from the consumer to the producer.

Nodes can be set up with different semantics in terms of how shaders are invoked relative to inputs.

Nodes

Node are building blocks out of which a system of dynamic execution flow can be constructed.

Nodes have a type defining its semantics.

Nodes that hold a compute shader support three ways to launch. One type of node can launch a batch (grid) of compute shader thread groups that all share a given input. Another type of node can launch thread groups at a time, each group consuming a set of available inputs at once, such that the set of inputs is visible to the group. Yet another type just launches individual threads at a time, each processing one input item.

More advanced implementations can support leaf nodes that can be a graphics program.

Concrete descriptions of these node types appear later.

Node arrays

Nodes can be arranged in arrays. This allows nodes the option of dynamically indexing into a node array to select which node(s) to output to. Binning scenarios can be implemented this way.

A proposal not supported yet is allowing the AddToStateObject() API that currently exists for DXR to be extended to support cheaply adding leaf nodes to an existing array in a work graph after the work graph has been created. This might help with streaming materials for instance, and could be of use in combination with proposed support for graphics nodes.

Shader at a node

The shader (or entire program if applicable) that runs at a node is a fixed choice defined with the node.

Resource bindings

Root constants, root descriptors and descriptor tables (pointing into the descriptor heap) can be sourced from root arguments bound on the command list, declared via global root signature common to all shaders. This is similar to other other types of programs.

The app can also choose to assign a block of memory to a node that acts as fixed storage for local root arguments. So the shader could get its set of resource bindings from a combination of the global root arguments and some local ones at the node. Multiple nodes could then be created out of the same shader, differentiated in behavior by the local root arguments. An alternative to doing this is the app has to pass these arguments into the shader via the input records, but it bloats input records unnecessarily if the data doesn’t need to be varying.

As a reminder, shaders support indexing directly into the full descriptor heap for any resource access, independent of root signatures. In the context of nodes it means indices that resource references can be passed along as input records.

Feeding nodes

The system manages input data arriving at a node to generate a set of shader launches.

The semantics of how nodes inputs are handled may be configurable over time. This might imply various types of queueing happening in the implementation - how this is done is opaque / owned by the driver. There is flexibility for how backing storage for node inputs is handled, discussed later

The sequence that records get consumed / dequed at a node is unordered / unpredictable. This provides maximum flexibility for implementations.

Independent of this unordered dequeueing, the order that records are enqueued is also unordered. Input order is unpredictable simply because input can arrive from multiple GPU sources/threads running concurrently.

Other ordering schemes could be entertained in the future if they could work well. Here are a couple of example possibilities, without implying these are useful or at all feasible, just food for thought:

- dequeue least recently queued

- complete all launched child work before continuing to the next item

There is a constrained case where some ordering is guaranteed, described in graphics nodes with ordered rasterization.

Input records at a node are of a fixed size - the size of the input struct declared in the node’s shader.

The backing physical memory footprint a system needs to execute a graph (to handle the possibility spilling out of caches for instance) is owned by the application - see backing memory. To simplify implementations, there are node output limits, and node input limits.

Input record size can be 0 - discussed under record struct. This simply means the node input is purely of a logical capacity. An app might want to manage data storage between nodes manually (e.g. in UAVs).

Node types

Broadcasting launch nodes : one input seen by many thread groups Thread launch nodes : one input per thread Coalescing launch nodes : variable inputs seen by each thread group

Proposed future graphics node types:

Mesh nodes: invoke a mesh shading program like DispatchMesh()

The following have been cut in favor of starting experimentation with mesh nodes only:

Draw nodes: invoke a graphics program like DrawInstanced()

DrawIndexed nodes: invoke a graphics program like DrawIndexedInstanced()

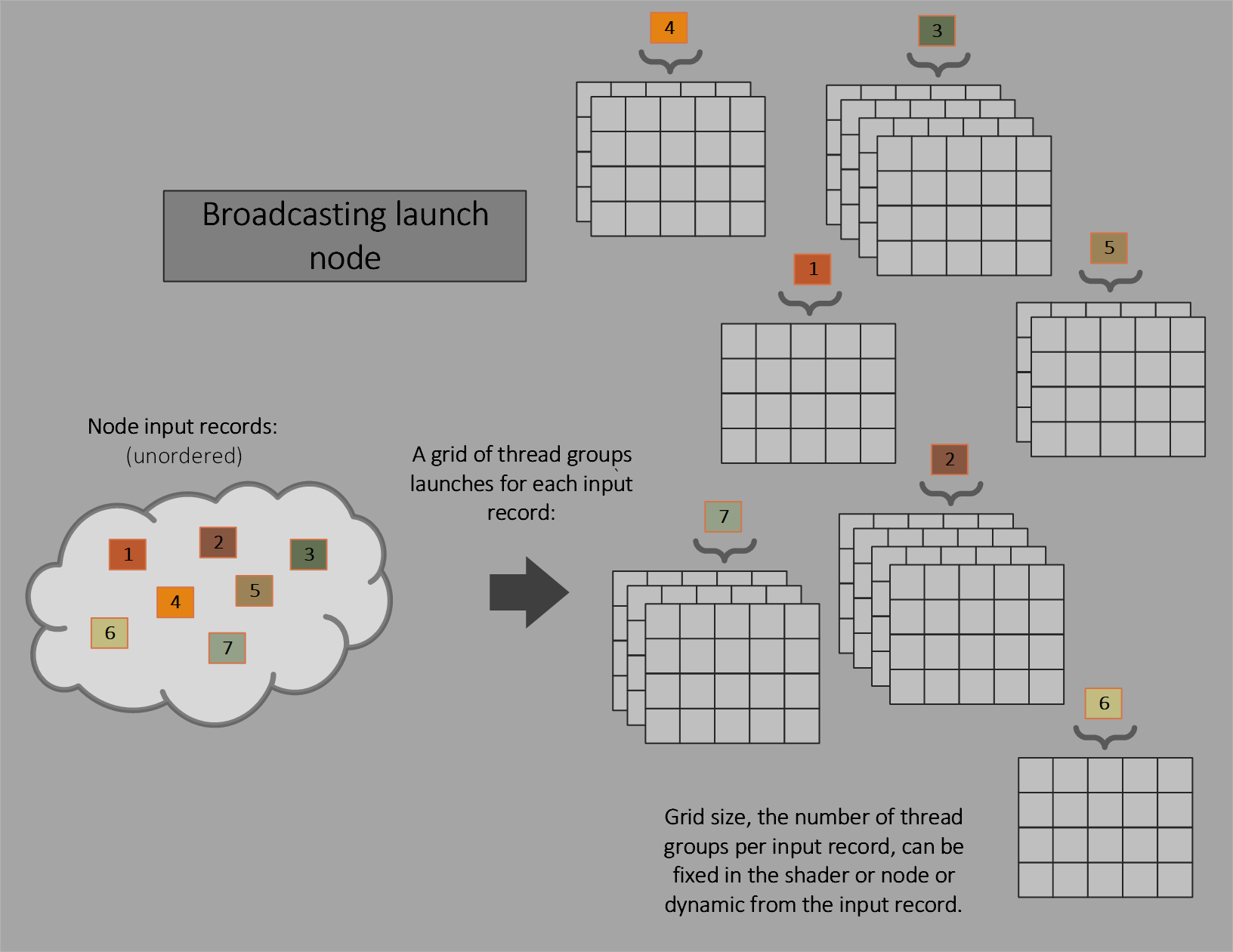

Broadcasting launch nodes

The equivalent of compute Dispatch() is generated when input is present - one grid of thread groups launched per input. Work is invoked a thread group at a time as the system reserves output space (if needed) for each thread group.

The dispatch grid size can either be part of the input record or be fixed for the node.

The thread group is fixed in the shader.

All thread groups that are launched share the same set of input parameters. The exception is the usual system ID values which identify individual threads within the group/grid.

For wave packing see Thread visibility in wave operations.

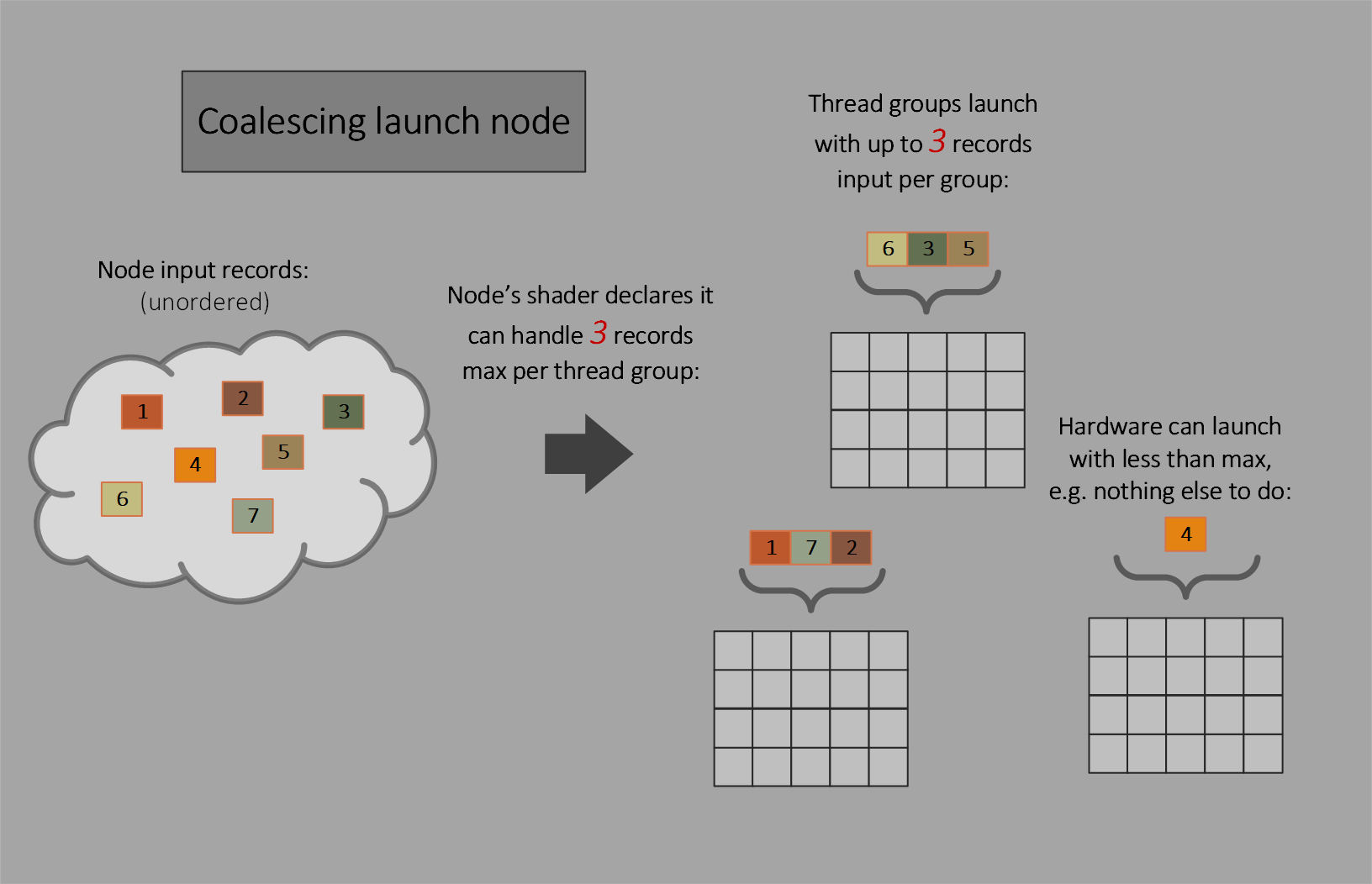

Coalescing launch nodes

Here, multiple inputs are collectively visible to each thread group that is launched.

Coalescing launch nodes can be useful if the shader can use shader memory or cooperation between threads in the group to process multiple inputs together (even though the number of inputs available may vary). If such cooperation is not required (beyond opportunistically using wave ops across threads in a wave), thread launch nodes, described later, may be more appropriate to use. Coalescing launch nodes can also be useful in scenarios where thread launch nodes would have made more sense but the tight output data limits on that node type are too restrictive.

The thread group size is fixed in the shader.

Dispatch grid size doesn’t apply, as instead the system simply launches individual thread groups at a time.

Since there is no dispatch grid size, that means there is no notion of amplification in this type of work launch.

The shader declares as part of its input the maximum number of input records that a thread group can input. If the shader declares 512 as the input record maximum, the system is allowed to invoke a thread group when it has anywhere from 1 to 512 input records available.

Of course the system will attempt to fill each thread group with the maximum declared input records, but it is allowed to invoke with fewer records as it sees fit. An example might be that no other node is ready to launch new work and the GPU has free processing resources, so it might decide that it is better to do something than nothing. There may simply be an odd amount of final work left to drain when ancestors in the graph of nodes are finished.

Launched thread groups can see the full set of input available as an array. The shader can discover how many inputs there are and is responsible for distributing work items across threads in the thread group. If threads don’t have any work to do, they can simply exit immediately.

Any time a shader declares it expects some number greater than 1 as the maximum number of input records that it can handle in a thread group, it must call the input record’s Count() method to discover how many records its thread group actually got.

The number of records sent to any given thread group launch is implementation-defined, and not necessarily repeatable on a given implementation. This is true independent of the method that produces the input – i.e. whether it comes from another node or from DispatchGraph. And it is true regardless size of input records, including 0 size records in particular.

For wave packing see Thread visibility in wave operations.

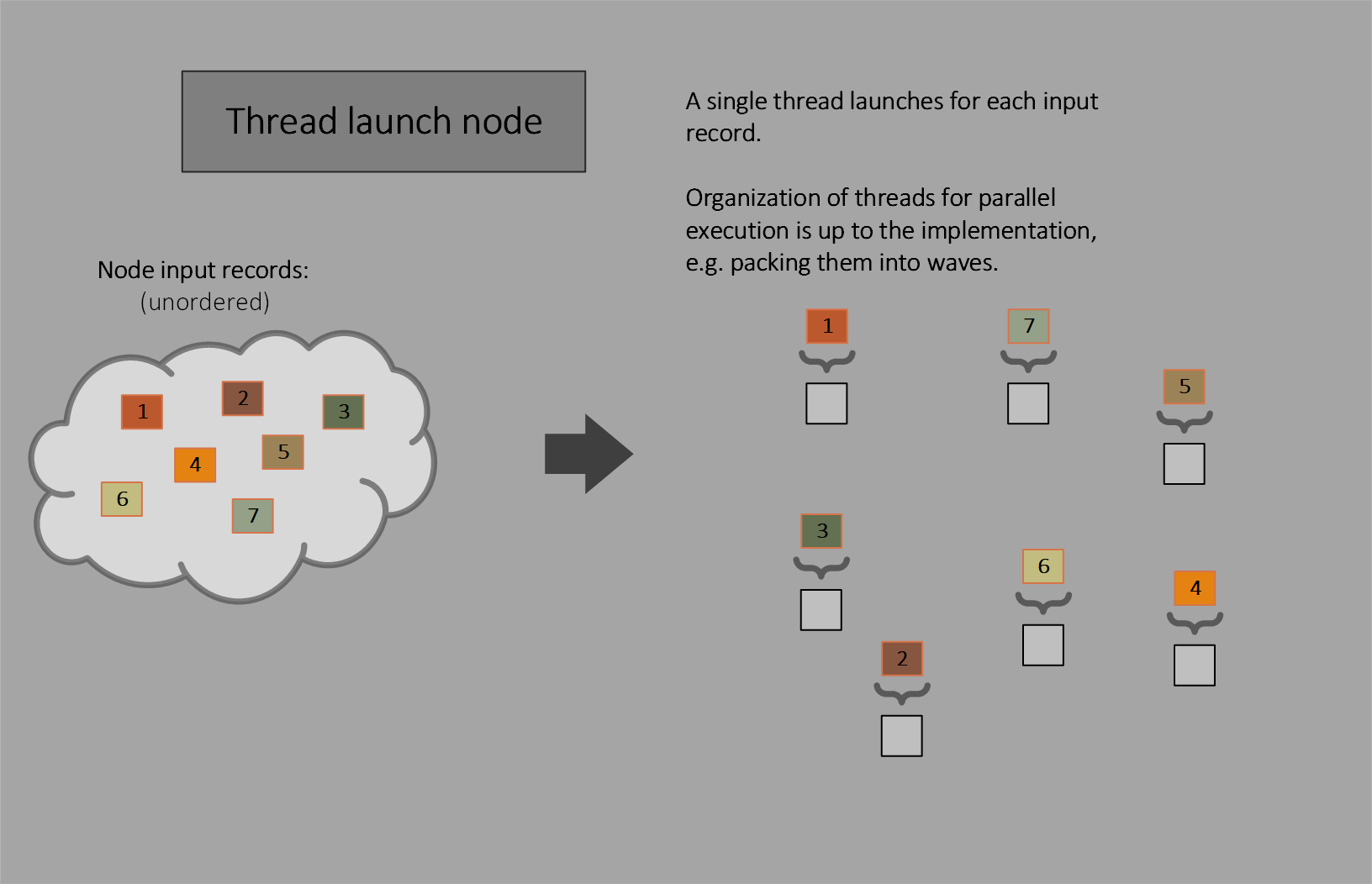

Thread launch nodes

Thread launch nodes invoke one thread for each input.

Thread launch nodes are conceptually a subset of coalescing launch nodes. They use a thread group size of (1,1,1), so the thread group size need not be declared, and limit the number of input records per thread group launch to 1.

While a coalescing launch node can express what a thread launch node can do, it is worth calling out the single thread case with a dedicated type. In terms of implementation, thread launch nodes can pack work fundamentally differently than coalescing or broadcasting launch nodes: thread launch nodes allow multiple threads from different launches to be packed into a wave (see Thread visibility in wave operations). By contrast if a coalescing launch node declared a thread group size of 1, other thread group launches wouldn’t be able to be packed with it (at least they cannot appear to be, and in reality they likely will not be packed).

For wave packing see Thread visibility in wave operations.

Thread launch nodes can be thought of somewhat like the callable shaders that are in DXR, except they do not return back to the caller. And instead of appearing as a function call from a shader, which would be a different path to invoking threads of execution than the work graph itself, thread launch nodes are by definition part of the work graph structure. It may still prove interesting to support DXR-style callable shaders in the future, but for now at least, thread launch nodes serve as alternative that embraces a unified model for launching work - nodes in a work graph, while still allowing applications to indicate situations when their workload does involve independent threads of work.

A restriction that was considered but not enacted: Nodes that output to thread launch nodes (call them producers) may output no more records to thread launch nodes than there are threads in the producer’s thread group. This would allow some implementations to choose to invoke thread launch node threads as a continuation after the producer’s threads exit. As it is, implementations that care about this can observe what a shader is doing and not merge nodes if the stars don’t align.

Mesh nodes

This section is proposed as part of graphics nodes, which aren’t supported yet.

Mesh launch nodes can only appear at a leaf of a work graph. They can appear in the graph as standalone entrypoints as well (which is a form of leaf).

The equivalent of a graphics DispatchMesh() is generated when an input is present at a mesh node - a set of mesh shader threadgroups. The program at the node must begin with a shader with [NodeLaunch("mesh")] (as opposed to mesh shader). This is basically a hybrid of a broadcasting launch node and a mesh shader. These shaders can only be used in work graphs, not from DispatchMesh() on the command list, which is for plain mesh shaders.

Amplification shaders are not supported since they aren’t needed in a work graph. Nodes in the graph that feed into the mesh launch node can do work amplification with more flexibility than an amplification shader alone.

Consistent with the mesh shader spec, each of the thead group’s three dimensions must be less than 64k, and the total number of thread groups launched must not exceed 2^22. Work is launched for the program at the node the same way it would if the equivalent was used on a command list with a DispatchMesh() call.

Node input and dispatch grid behavior follow he same semantics as broadcasting launch nodes.

Accordingly, grid size can be dynamic or fixed. An input record is therefore only required for the dynamic grid case, where SV_DispatchGrid can reside along with any other input data the shader needs, illustrated below. This works the same way as described for broadcasting launch nodes in: SV_DispatchGrid. A [NodeDispatchGrid()] shader function attribute indicates a fixed grid, and [NodeMaxDispatchGrid()] indicates a dynamic grid - one must be present. Whichever one is specified, that one can also be overridden at the API as well.

All of the normal system-generated values for mesh shaders, such as SV_DispatchThreadID, SV_GroupThreadID, SV_GroupIndex, SV_GroupID, etc. work as expected. The snippet below illustrates an example input record to a mesh launch node:

// Example input record with dynamic grid

struct MyMeshNodeInput

{

uint3 dispatchGrid : SV_DispatchGrid; // can appear anywhere in struct

// Other fields omitted for brevity.

};

Related topics:

Draw nodes

[CUT]This section was proposed as part of graphics nodes, but has been cut in favor of starting experimentation with mesh nodes only.

Draw launch nodes can only appear at a leaf of a work graph. They can appear in the graph as standalone entrypoints as well (which is a form of leaf).

The equivalent of a graphics DrawInstanced() is generated when an input is present - a set of instanced draws launched per input. Work is launched for the program at the node the same way it would if the equivalent was used on the command list with a DrawInstanced() call.

Per-draw arguments must be present in the input record. This is specified using SV_DrawArgs. All of the normal system generated values for graphics shaders, such as SV_VertexID, SV_InstanceID, SV_PrimitiveID, etc. work as expected for the shaders at the node. The snippet below illustrates an example input payload to a draw launch node:

// This structure is a fixed part of the API.

struct D3D12_DRAW_ARGUMENTS

{

uint VertexCountPerInstance;

uint InstanceCount;

uint StartVertexLocation;

uint StartInstanceLocation;

};

// This structure is defined by the application's shader.

//

// The presence of SV_DrawArgs is essential here because it defines this input record type as being associated

// with a draw node. It also specifies where in the input record the parameters need to be read

// from by the implementation.

struct MyDrawNodeInput

{

D3D12_DRAW_ARGUMENTS drawArgs : SV_DrawArgs; // can appear anywhere in struct

// Other fields omitted for brevity.

};

There is also a system values that goes in the struct to define optional vertex buffer bindings, desribed in Graphics node resource binding and root arguments.

The entire input record for a draw launch node is accessible from the first shader stage in the node’s associated program, a vertex shader.

For further details see Graphics nodes and Graphics nodes example.

DrawIndexed nodes

[CUT]This section was proposed as part of graphics nodes, but has been cut in favor of starting experimentation with mesh nodes only.

DrawIndexed launch nodes can only appear at a leaf of a work graph. They can appear in the graph as standalone entrypoints as well (which is a form of leaf).

The equivalent of a graphics DrawIndexedInstanced() is generated when an input is present - a set of indexed, instanced draws launched per input. Work is launched for the program at the node the same way it would if the equivalent was used on a command list with a DrawIndexedInstanced() call.

Per-draw arguments must be present in the input record. This is specified by using SV_DrawIndexedArgs. All of the normal system generated values for graphics shaders, such as SV_VertexID, SV_InstanceID, SV_PrimitiveID, etc. work as expected for the shaders at the node. The snippet below illustrates an example input payload to a draw-indexed launch node:

// This structure is a fixed part of the API.

struct D3D12_DRAW_INDEXED_ARGUMENTS

{

uint IndexCountPerInstance;

uint InstanceCount;

uint StartIndexLocation;

int BaseVertexLocation;

uint StartInstanceLocation;

};

// This structure is defined by the application's shader.

//

// The presence of SV_DrawIndexedArgs is essential here because it defines this input record type as being

// associated with a draw-indexed node. It also specifies where in the input record the parameters of the

// draw should be read from by the implementation.

struct MyDrawIndexedNodeInput

{

D3D12_DRAW_INDEXED_ARGUMENTS drawIndexedArgs : SV_DrawIndexedArgs; // can appear anywhere in struct

// Other fields omitted for brevity.

};

There are also system values that go in the struct to define index buffer and optional vertex buffer bindings, desribed in Graphics node resource binding and root arguments.

The entire input record for a draw-indexed launch node is accessible from the first shader stage in the node’s associated program, a vertex shader.

For further details see Graphics nodes and Graphics nodes example.

Shader invocation at a node

A node can run a shader when the shader’s declared requirements are met:

- input is present

- the system can reserve space for the shader’s worst case output to destination nodes (if any)

If the shader at a node is configured to produce a grid of compute thread groups as part of one logical invocation, the system can execute individual thread groups as their output capacity is reserved, as opposed to waiting for output capacity for the entire set of thread groups atomically.

Implementations cannot perform redundant invocations of the requested set of thread groups / dispatch grid of a node’s shader based on a given input if there could be any side effects (such as via UAV accessses and node outputs) that vary over redundant invocations. Certainly the inputs to a node can only produce the one set of output records that the node’s shader invocations are intended to produce.

Why would an implementation want to spend effort processing the same input multiple times? There might be a temptation for an implementation to use a scheduling strategy where it runs a “dummy” invocation of a node which doesn’t actually output anything, just to observe how much it would actually output. Then it can reserve the optimal amount of output storage for a real invocation, avoiding reserving the worst case declared output storage for the node. This could only work if no matter how many times a node runs with a given input, results are identical (including anything written to UAVs), and the outputs to other nodes are only produced once. Of course it would be difficult for an implementation to know this is safe to do. If the need for this type of optimization proves valuable, shaders could be given the option to opt-in redundant node invocations via annotation on a shader, something like:

[InvariantNodeBehavior]. The implementation would be allowed to run such a node multiple times for a given input, with appropriate masking done by the implementation such that the set of output records produced matches what one invocation would have produced.

Node ID

A Node ID is in the form: {string name, array index}. The array index part means all nodes are technically in a node array, where the name of the array is the string name portion of the Node ID. Standalone nodes that have a unique string name just happen to be in an array of size 1.

In terms of HLSL shader function attributes, a node ID can be explicitly defined via [NodeID("name",arrayIndex)], or just [NodeID("name")], which implies array index 0.

In the absence of these attributes for explicitly indicating node IDs, node ID defaults to whatever name is in context (with array index 0) for convenience.

For instance if a node shader definition doesn’t specify a [NodeID()] attribute, the node ID defaults to {shader name in HLSL, 0} in the compiled shader. The NodeID and shader name are separate entities in the shader. From the runtime point of view, it doesn’t know whether the NodeID was specified explicitly or was a default assignment. The significance is if the shader export is renamed when importing into a state object, the NodeID doesn’t also get renamed. To rename NodeIDs at state object creation, use node overrides such as D3D12_COMMON_COMPUTE_NODE_OVERRIDES at the API.

If a node output declaration doesn’t specify a [NodeID()] attribute the node ID defaults to {localVariableName for the output node, 0}.

Given a variable declared in a shader that represents an array of output nodes, say myOutputArray having a Node ID {name,arrayIndex}, the arrayIndex part of the ID serves as the base for array indexing. So the shader body can index the node array via myOutputArray[i], which resolves to Node ID {name,arrayIndex+i}.

Node IDs can be seen in the various examples in the following sections.

Some APIs refer to NodeIDs as well (such as methods for overriding the IDs specified in shaders): see D3D12_NODE_ID.

Various constraints on the construction of node arrays are described in Node array constraints.

Node input

Below are some examples illustrating input (outputs shown later in node outputs).

Documentation of all of the syntax is in the HLSL section.

Here is an example of a compute shader whose input record includes a dispatch grid size:

(There are no outputs shown in these examples - outputs are introduced in a later topic)

struct MY_RECORD

{

// shader arguments

uint3 DispatchGrid : SV_DispatchGrid;

uint foo;

float bar;

};

// A note about node naming:

// By default, the name of a node that uses this shader is the

// name of the shader, "myCullingNode" in this case below.

// The name can be overridden outside the shader,

// as well as by using the function attribute 'NodeID':

// [NodeID("myRename")]

//

// If the node is part of an array of nodes, the location in the array

// can be indicated (defaults to 0 if not specified):

// [NodeID("nodeThatIsPartOfAnArrayOfNodes",5)]

// [NodeLaunch("broadcasting")] indicates this is a broadcasting node,

// so a given input is broadcast to all thread groups launched in the

// dispatch grid.

[Shader("node")]

[NodeLaunch("broadcasting")]

[NumThreads(4,5,6)]

[NodeMaxDispatchGrid(10,1,1)] // when grid size is an input parameter

// either the shader must declare a maximum grid

// size (as shown here), and/or any node that uses the

// shader must do so (if the node declares it overrides)

void myCullingNode(uint3 DTid : SV_DispatchThreadID, ...

// myRecord is the input record for this node

DispatchNodeInputRecord<MY_RECORD> myRecord

)

{

...

// See note about future '->' alternative to .Get(). below.

if(myRecord.Get().foo)

{

...

}

}

Note: see future

->operator support for use in place ofGet().

Here is an example of a shader that uses a coalescing launch, so the input is an array:

struct MY_OTHER_RECORD

{

uint textureIndex;

};

[Shader("node")]

[NodeLaunch("coalescing")]

[NodeID("myRenamedNode")] // optional rename of node.

// Export name is still myCoalescingNode, but

// when used in a node, it's name defaults to "myRenamedNode"

[NumThreads(1024,1,1)]

void myCoalescingNode(...,

// The system will launch a thread group when anywhere from 1 to 256 records

// are available.

[MaxRecords(256)] GroupNodeInputRecords<MY_OTHER_RECORD> myRecords,

uint3 threadInGroup : SV_GroupThreadID

)

{

uint numRecords = myRecords.Count(); // find out how many inputs

// this thread group has

// ([1...256])

// In this shader, every 4 threads processes a given input

if(threadInGroup.x / 4 >= numRecords)

{

return;

}

...

float4 color = DoLookup(texcoords, myRecords[i].textureIndex);

...

}

Here is an example of a compute shader whose input only has shader arguments, as the dispatch grid size is fixed at the node:

struct MY_OTHER_RECORD // this record goes to a node with a fixed dispatch

// grid size so nothing other than shader arguments

// are here

{

uint textureIndex;

};

[Shader("node")]

[NodeLaunch("broadcasting")]

[NumThreads(1024,1,1)]

void myPhysicsNode(uint3 DTid : SV_DispatchThreadID, ...

DispatchNodeInputRecord<MY_OTHER_RECORD> myRecord

)

{

...

}

Here is an example of a shader that gets invoked when the input has a count of zero-sized records available. This can indicate progress for scenarios that may not need any other payload data:

[Shader("node")]

[NodeLaunch("coalescing")]

[NumThreads(1024,1,1)]

void myPostprocessingNode(...,

// Launch a thread group whenever the input has a count from 1 to 64.

// After completion, the input's overlallcount is decremented by the

// launched count.

// The app somehow knows what this represents, so no other payload

// is needed.

EmptyNodeInput<64> myProgressCounter

)

)

{

// Shader knows a batch of prior work is done (from 1 to 64)

uint finishedWork = myProgressCounter.Count();

}

Here is a variation of zero-sized records using broadcast semantics, whereby a dispatch grid is launched per zero-sized record.

[Shader("node")]

[NodeLaunch("broadcasting")]

[NumThreads(1024,1,1)]

[NodeMaxDispatchGrid(256,1,1)]

void myPostprocessingNode(...,

// Since there's no node input declared, it's assumed to be a zero-sized record

)

{

}

For more see Examples of input and output use in shaders.

Node output

Shaders can freely access UAVs of course.

In addition, shaders can declare nodes they want to write to. Outputs are generated per-thread group - threads in the thread group must work together to generate the set of output for the group.

Each such output declaration specifies various attributes like max output records. The record layout declaration is late-bound in the shader body when the shader code is actually writing to it.

Below are some examples illustrating node output variations.

Documentation of all of the syntax is in the HLSL section.

Here is a shader that outputs to 66 different nodes. They are declared as 3 individual output nodes and one array of 63 output nodes, the latter being dynamically indexable.

struct MY_INPUT_RECORD

{

float value;

UINT data;

};

struct MY_RECORD

{

uint3 dispatchGrid : SV_DispatchGrid;

// shader arguments:

UINT foo;

float bar;

};

struct MY_MATERIAL_RECORD

{

uint textureIndex;

float3 normal;

};

[Shader("node")]

[NodeLaunch("broadcasting")]

[NumThreads(4,5,6)]

void myFancyNode(...,

DispatchNodeInputRecord<MY_INPUT_RECORD> myInput,

// Output node named myFascinatingNode of type MY_RECORD

[MaxRecords(4)] NodeOutput<MY_RECORD> myFascinatingNode,

// Output node that is externally named "myNiftyNode". The local

// name in the shader is myRecords. The external name also includes

// an optional number, 3 in this case. This indicates that

// "myNiftyNode" is actually an array of nodes, and this shader is

// choosing to write to just array index [3] in that array of nodes.

// It is also possible to write to multiple array entries in a

// node array, shown by myMaterials further below.

// The name ("myNiftyNode",3) can also be overridden externally.

[NodeID("myNiftyNode",3)] [MaxRecords(4)] NodeOutput<MY_RECORD> myRecords,

// myMaterials is an array of 63 nodes.

// This is declaring that it is sharing it's max output records with myRecords above.

// So across both outputs (including myMaterials itself being an array of nodes),

// the shader will output no more than 4 records (not 4 per node, but 4 across all of the nodes).

// All nodes in the array must accept the same record type (MY_MATERIAL_RECORD in this case).

// If nodes with different input record types need to be targeted, they can't be in the same array.

[MaxRecordsSharedWith(myRecords)]

[AllowSparseNodes] // opt-in: not all nodes in graph need to be populated

[NodeArraySize(63)] NodeOutputArray<MY_MATERIAL_RECORD> myMaterials,

// an output that has empty record size

[MaxRecords(20)] EmptyNodeOutput myProgressCounter

)

{

// examples of how to write to output shown below

}

When a thread group is executing, its threads must request output records from nodes. This can be done with the thread group sharing output records and/or by each thread independently requesting its own output records to fill in. Either way, the calls to retrieve records must be made thread group uniform, so outside any flow control that may vary within the thread group (varying includes threads exiting).

The group shared way accomplish this is by requesting 0 or more records via GetGroupNodeOutputRecords which must be thread group uniform, not in flow control that could vary in the thread group (varying includes threads exiting). The threads in the group can cooperate to fill in the returned output records (if any) and then must call OutputComplete() when finished. The numRecords argument allows storage for multiple output records to be available appearing as an array. Storage for output records can be retrieved in separate chunks via multiple calls, albeit with likely more overhead than batching into fewer larger requests. The sum of records retrieved in the shader must not exceed the maximums declared at the top of the shader, else behavior is undefined.

The per-thread way to request output records from nodes returns records to be filled out to the individual thread that requested them. Different threads in the group making the same call can request record(s) from different nodes in a node array (or the same node) if desired, or request no record at all. The option for a given thread to request no record at all might seem odd (why make the call in the first place?), but it is useful given calls to these methods must be thread group uniform, meaning not within any varying flow control (varying includes threads exiting). The method for this is GetThreadNodeOutputRecords.

Once output records are retrieved, they are read/writeable. For group shared output records, if there is any cooperative read+writing going on that requires synchronization (e.g. threads cooperatively OR’ing in bits into a shared record member), a Barrier() can be used. In particular there is an option to barrier on the record memory itself to ensure data visibility across the thread group.

Some output examples are shown here:

// Observe in these examples that all calls to Get*NodeOutputRecords() are thread group uniform,

// meaning outside of any flow control that could vary within the thread group (varying includes threads exiting):

// This first example retrieves an array of 3 records for the thread group to collectively fill out:

GroupNodeOutputRecords<MY_RECORD> myOutRecords =

myFascinatingNode.GetGroupNodeOutputRecords(3); // no more than 4 per declaration above

myOutRecords[someIndex].foo = 5;

etc.

myOutRecords.OutputComplete(); // indicate that all output has been finished.

...

// Here is an example of binning into an array of nodes. In this example,

// each thread either writes a record to an arbitrary output node in a node array, or writes nothing.

int chosenMaterialForThisThread = ComputeMyMaterial(); // suppose my function returns -1 if the current thread

// has nothing to output

bool allocateRecordForThisThread = chosenMaterialForThisThread >= 0 ? true : false;

ThreadNodeOutputRecords<MY_MATERIAL> myMaterial =

myMaterials[chosenMaterialForThisThread].GetThreadNodeOutputRecords(allocateRecordForThisThread ? 1 : 0);

// Above: if thread local variable "allocateRecordForThisThread" is false, the call ignores

// the specified node, myMaterials[chosenMaterialForThisThread]. Further, the variable

// used to index the node output array, [chosenMaterialForThisThread] need not be initialized

// for that thread. And the returned object, myMaterial, is invalid to access.

if(allocateRecordForThisThread)

{

// See note about future '->' alternative to .Get(). below.

myMaterial.Get().color = red;

// Note that myMaterials is an array of output nodes that have to share

// the same struct for their output.

// It would be handy if HLSL supported unions some day,

// so that this struct could at least be easier to repurpose even if it has a fixed size.

// Suppose one thread went down this branch.

// That means one record from the reservation of 4 is used.

...

// Can't call OutputComplete here, since it must be group uniform:

// myMaterial.OutputComplete(); <- Invalid!

}

myMaterial.OutputComplete();

...

// Note it would likely be more efficient to batch up output requests (merging this with above),

// via GetThreadNodeOutputRecords. But this example is simply showing what is possible:

myMaterial =

myMaterials[anotherMaterial].GetThreadNodeOutputRecords(thisThreadNeedsToOutputSomethingElse);

if(thisThreadNeedsToOutputSomethingElse)

{

// Suppose one thread went down this branch.

// So a second record from the reservation of 4 is used.

// See note about future '->' alternative to .Get(). below.

myMaterial.Get().color = green;

}

myMaterial.OutputComplete();

...

// From the declaration above, myRecords shares its output reservation of 4 records with myMaterials.

// There are 2 records left, and this uses them up:

GroupNodeOutputRecords<MY_RECORD> outRec =

myRecords.GetGroupNodeOutputRecords(2);

outRec[0].textureIndex = 3;

outRec[1].textureIndex = 4;

outRec.OutputComplete();

Note: see future

->operator support for use in place ofGet().

A call to records.OutputComplete() must be reached for every call to Get*NodeOutputRecords() - see OutputComplete() for more detail, including exceptions to this requirement that might be supported time permitting in the future.

Once outputs have been declared, any threads in the thread group can collectively generate any of the output.

For a EmptyNodeOutput (like myProgressCounter above), the shader needs to call {Group|Thread}IncrementOutputCount(n) once during execution to select the logical output count, otherwise 0 outputs are assumed when the shader finishes execution.

myProgressCounter.GroupIncrementOutputCount(12); // see myProgressCounter declared

// above

For more see Examples of input and output use in shaders.

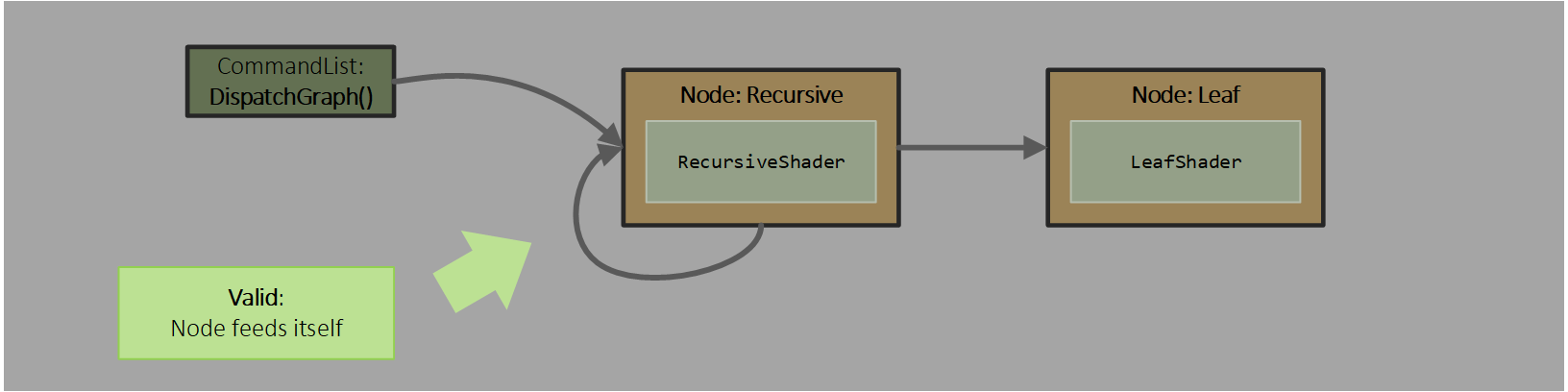

Recursion

To limit system complexity, recursion is not allowed, with the exception of a node targeting itself. There can be no other cycles in the graph of nodes.

If a node targets itself, it must declare a max recursion level - see NodeMaxRecursionDepth in the example shader below, corresponding to node Recursive in the above diagram. Exceeding this declared maximum during execution produces undefined results. GetRemainingRecursionLevels() or the IsValid() node output method can be used to notice recursion status in a shader if needed (not shown in the examples below).

struct MY_BOX

{

uint boxDataBufferDescriptorHeapIndex;

uint boxDataOffset;

float foo;

};

struct MY_LEAF

{

float goo;

int moo;

};

[Shader("node")]

[NodeLaunch("broadcasting")]

[NodeMaxRecursionDepth(16)] // Declaration required when the shader is used in

// such a way that the NodeID for one of its outputs is

// the same ID as the node itself. If, via renaming of

// output NodeID or NodeID that the shader is used at,

// recursion is no longer happening, that's fine, this

// NodeMaxRecursionDepth declaration doesn't apply.

[NodeMaxDispatchGrid(1,1,1)]

[NumThreads(8,1,1)]

void recursiveNode(...,

// The node input can be Broadcast or Coalescing launch,

// but must use the same struct type as the output for recursion

// to make sense:

DispatchNodeInputRecord<MY_BOX> inputBox,

// Output up to 8 records back to `recursive`

// which is the name of this node:

[MaxRecords(8)] NodeOutput<MY_BOX> recursiveNode,

// Share recursiveNode's output budget of 8 with leafNode.

// So up to 8 records can be arbitrarily distributed across `leaf`

// and `recursive`:

[MaxRecordsSharedWith(recursiveNode)] NodeOutput<MY_LEAF> leafNode,

uint3 threadInGroup : SV_GroupThreadID

)

{

// In this example, each thread examines inputBox

// which might contain 8 children.

// Per thread: when a child is found that isn't a leaf write it to

// `recursiveNode` to be further explored, else write the leaf to

// `leafNode`.

ThreadNodeOutputRecords<MY_BOX> box =

recursiveNode.GetThreadNodeOutputRecords(bCurrentThreadIsABox);

if(bCurrentThreadIsABox)

{

box[0].foo = aNumber;

...

}

box.OutputComplete();

ThreadNodeOutputRecords<MY_LEAF> leaf =

leafNode.GetThreadNodeOutputRecords(bCurrentThreadIsALeaf);

if(bCurrentThreadIsALeaf)

{

leaf[0].foo = aNumber;

...

} // else no output for this thread

leaf.OutputComplete();

// Tree explorations could be done many ways. In this example,

// individual threads could instead loop over the children of a box,

// such that each thread is configured to process a box. This would be

// a shader set up to run in coalescing mode, distributing boxes across

// threads, instead of each thread group processing an individual box.

}

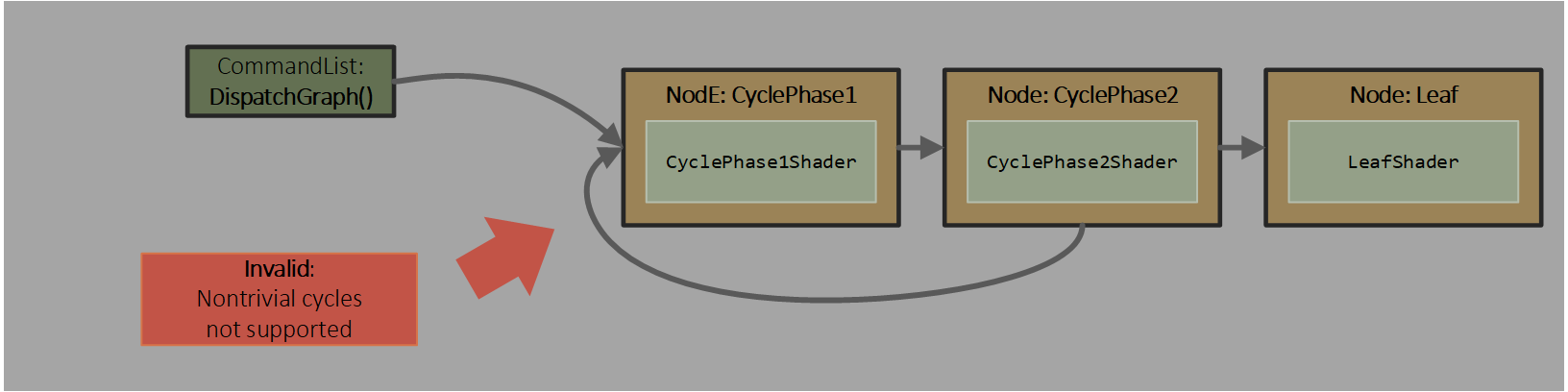

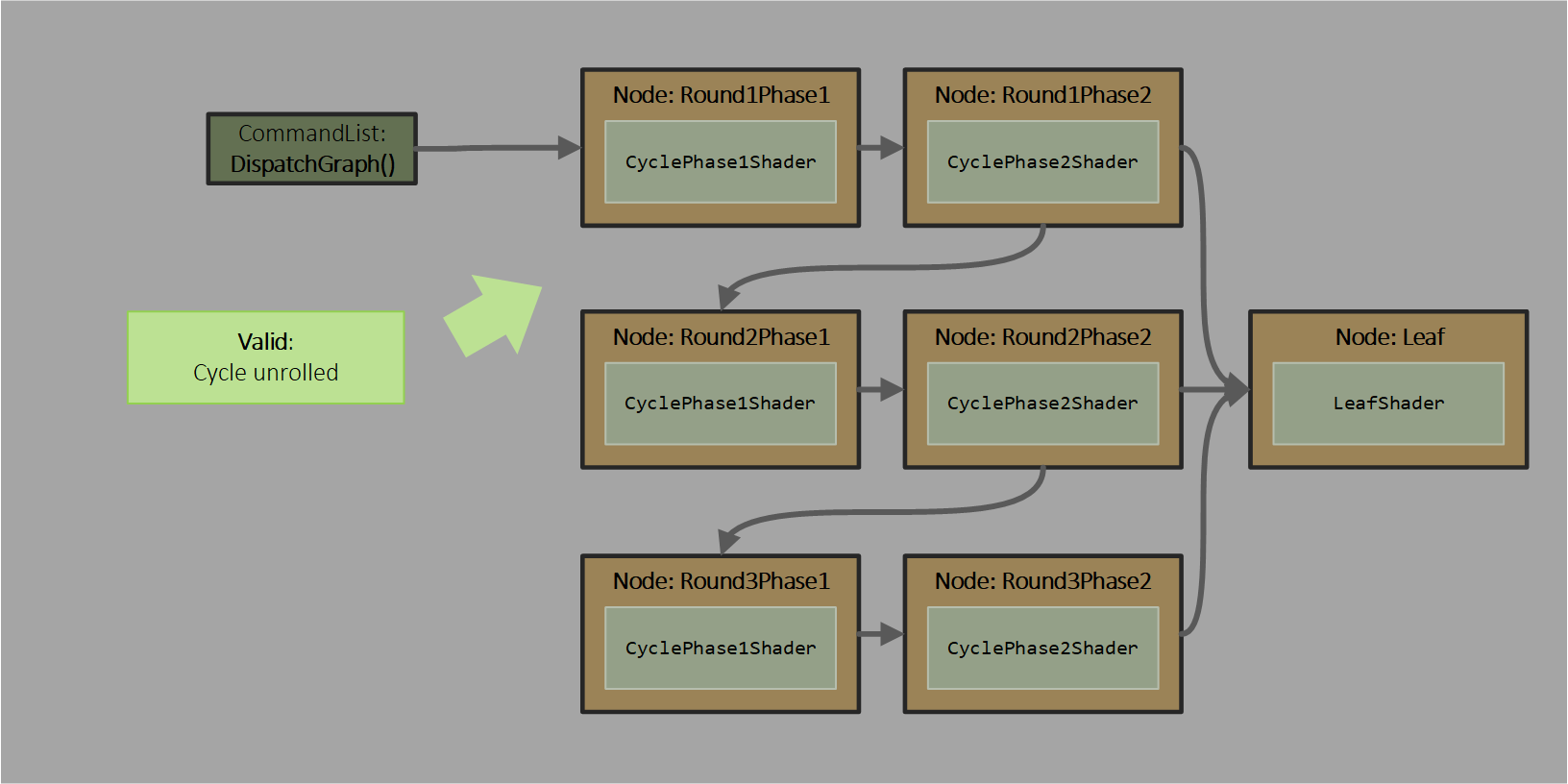

If an app needs a more complex cycle than just a node feeding itself, it can construct a graph that has the worst case recursion expanded into nodes.

As a simple example, consider a graph with nodes CyclePhase1, CyclePhase2, Leaf:

Node CyclePhase2 wants to send work to CyclePhase1 or Leaf. The nontrivial cycle back to CyclePhase1 is not allowed.

If the app knows that 3 is the worst case recursion depth, it can manually specify the following graph, using the same shader CyclePhase1Shader at nodes Round1Phase1, Round2Phase1 and Round3Phase1 and the same shader CyclePhase2Shader at nodes Round1Phase2, Round2Phase2 and Round3Phase2:

To enable a single shader to be reused in multiple places in a graph as above, the input and output node IDs declared in the shader can be renamed when the shader is assigned to a node.

In addition, an output declared by a shader can be left unassigned when it is used at a given node (opt-in with the [AllowSparseNodes] or [UnboundedSparseNodes] output attribute), such as at Round3Phase2 in the example graph above, which doesn’t need to recurse to CyclePhase1Shader any more. This tells the system that it doesn’t need to reserve storage for that output when deciding to launch the shader at that node. The app promises that when the shader executes it will not write to that output - any code in the shader that writes to it will get skipped due to flow control. If the shader violates this promise and tries to write to an unreserved output, behavior is undefined.

Shaders can use input record lifetime for scoped scratch storage

The system keeps an input record alive for all invocations of a shader consuming that input from a node. For a coalescing launch node this would be all the threads in a thread group. For a broadcasting launch node, this would be all threads in all thread groups across the dispatch grid of thread groups.

Only when the system knows all invocations of these shaders have finished reading the input can it’s storage be released. Basic ways the system could track inputs are finished with is tracking that all spawned shaders that use the input have completed execution, or implementing reference counting on the allocation.

The application can take advantage of this input lifetime to use the input as scratch storage that it wants shared across the dispatched threads. The scope is all threads in the dispatch grid if applicable, not just a given thread group. Shaders are allowed read + write access (including atomics) to their input record. If the shader writes to its input record, the guarantee is the system can’t duplicate that record for any reason for different shader launches.

Memory synchronization for read+write input records is supported by the Barrier() intrinsic. In particular there is an option to barrier on the record memory itself to ensure visibility of its data at the appropriate scope.

Consider an example: A node launches a shader with an input record that sets the dispatch group size to be big enough for a parallel bounding box calculation of a set of vertices. The spawned thread groups may locally cooperate to compute local bounding boxes, but the results need to be merged across the entire set of dispatched thread groups.

Certainly the app could be forced to allocate (or preallocate) some memory location to hold this scratch calculation. More convenient, though, is to simply make the bounding box scratch storage a member of the input record. Whoever creates the record initializes the box field appropriately. Then each thread group can merge its local bounding box calculation into the scratch. The last thread group to contribute knows the final bounding box result. But who is last?

Write access to input records is accomplished by using RW{Dispatch|Thread}NodeInputRecord or RWGroupNodeInputRecords to declare the input (as opposed to {Dispatch|Thread}NodeInputRecord or GroupNodeInputRecords).

For broadcasting launch nodes, where multiple thread groups can be launched for a given input record, FinishedCrossGroupSharing() can be optionally called on node input. The shader can make this call when a given thread group is finished reads and writes to it for the purpose of coordination across thread groups. Either every thread group has to call this uniformly within each thread group, or none of them can call it. The system returns false for thread groups that are not the last to finish, and these thread groups are no longer allowed to read or write to the input. The system returns true if the current thread group is the last to access the input. This thread group can continue reading/writing to the input as much as it wants, likely to take some action on the final contents of the input.

bool RWDispatchNodeInputRecord<recordType>::FinishedCrossGroupSharing();

e.g.:

struct [NodeTrackRWInputSharing] rec1

{

Box boundingBox;

//...

};

[NodeLaunch("broadcasting")]

[NumThreads(64, 1, 1)]

[NodeDispatchGrid(4, 1, 1)]

void myNode(

globallycoherent RWDispatchNodeInputRecord<rec1> myInput,

[MaxRecords(4)] NodeOutput<rec0> myOutput)

{

...

// If the local thread group needs to finish work first,

// a barrier with group sync must be used before calling

// FinishedCrossGroupSharing(). e.g.:

Barrier(NODE_INPUT_MEMORY, DEVICE_SCOPE|GROUP_SYNC);

if(myInput.FinishedCrossGroupSharing())

{

// This thread group is the last to finish coordinating

// with other thread groups, so it can take further action

// on the contents of myInput

// See note about future '->' alternative to .Get(). below.

outputRec.boundingBox = myInput.Get().boundingBox;

}

}

Note: see future

->operator support for use in place ofGet().

In the bounding box calculation example, the code that sees FinishedCrossGroupSharing() return

truecan do something like frustum culling on the box and/or copying the box into a record to be sent to some other node for further processing.

For coalescing or thread launch nodes, input records aren’t shared across thread groups, instead a given input is only visible to a single thread group. As such, FinishedCrossGroupSharing() doesn’t apply here. The utility in these nodes for RWThreadNodeInputRecords or RWGroupNodeInputRecords (the latter for coalescing launch with multiple inputs available) is simply allowing the shader to use the input records as scratch space if desired (e.g. when the input data is finished with or transforming the input data in place), instead of having to allocate additional local variables for such storage.

Of course the app could figure this out on its own by using an atomic on a scratch variable in its input record too. And in fact that might be worth doing for more complex situations, such as coordinating multiple stages of execution across all dispatched threads. For the simple FinishedCrossGroupSharing() case though, the system might be able to do better, or it might just use an additional atomic counter behind the scenes - no worse than an app doing this manually.

Unfortunately FinishedCrossGroupSharing() can’t be called in graph entrypoint nodes, reason discussed in the spec for the FinishedCrossGroupSharing() intrinsic.

If a node might call FinishedCrossGroupSharing() it must specify the [NodeTrackRWInputSharing] attribute on the record struct. Matching this, the upstream node that produces the input record must specify the [NodeTrackRWInputSharing] on the corresponding record struct as well. The easiest way to do this is to use the same structure declaration with the attribute for both the producer and consumer nodes.

If it could be interesting to optionally constrain a node such that only one record that has arrived at the node can be launching a shader at a time, scratch memory wouldn’t need to be duplicated in every input record and could instead be associated with the node itself.

Sharing input records across nodes

Multiple nodes can share the same input. This is broadcasting one input to multiple shaders.

This is configured in the the declaration of a node by stating that it shares the same input as another node ID.

In HLSL, this means specifiying [NodeShareInputOf("nodeIDWhoseInputToShare",optionalArrayIndex)] in the configuration block preceding the shader definition.

This name can be overridden in the work graph declaration as well, including specifying NULL for the node ID to disable sharing that might have been declared in the shader.

Shaders used at each of these nodes must be able to be invoked with the same input record.

The system has to finish launching the shaders for all nodes sharing the input before the input record can be released. These don’t need to be launched atomically, so output storage reservations only need to be done for the portions (e.g. thread groups) being launched at a time.

Input records declared as RW{Dispatch|Thread}NodeInputRecord or RWGroupNodeInputRecords (see Shaders can use input record lifetime for scoped scratch storage) cannot be shared across nodes.

If an app wants to keep allocations alive for complicated scopes (such as across multiple nodes), it can implement its own referenced counted heap allocator. If there appears to be value in this being a system service, such that it looks like IHVs could do a significantly better job at this than every application having to roll their own allocators, it could be added in the future. A system provided allocator could perhaps participating in node launch scheduling as well. For instance nodes could declare the maximum amount of memory they may need to allocate, which the scheduler then ensures is avaialble before node launch. Any allocations an app makes within this budget can then be freed anywhere the app wants including nodes arbitrarily downstream in the graph.

The following are some other limitations that exist to reduce driver complexity.

A node that recurses, is directly targeted by another node, or is an entrypoint cannot share the input of another node. And a node can’t share the input of a node that recurses.

A node whose input is shared to other node(s) can’t get its input both from other nodes and from graph entry.

No more than 256 nodes can share an input. This number happens to be related for a possible implementation to the maximum number of outputs from a node, same number.

If nodes share an input, this incurs an extra cost on the producing node’s node output limits.

Wave semantics

Thread visibility in wave operations

Wave operations in shaders exhibit the following rules for thread visibility:

-

For standalone compute shaders, and in work graphs: broadcasting and coalescing launch nodes, only threads from a single thread group can appear to the app to be packed into a wave, visible to wave ops.

-

For thread launch nodes in work graphs, multiple threads from the same node (and only that node) can appear to the app to be packed into a wave, visible to wave ops. Thread launch nodes don’t support any group scope features like group shared memory or group scope barriers.

Support for WaveSize shader function attribute

For HLSL shaders in general there is an WaveSize(N) shader function attribute for forcing a wave size for a shader out of the options a given device supports. This is also supported for node shaders of all launch types.

Quad and derivative operation semantics

Quad and derivative operations are invalid in thread launch nodes since there is not a sufficiently sized thread group available. Other launch modes support these operations as long as the thread group size meets the requirements per spec for the operations.

NonUniformResourceIndex semantics

To restate the rules for shaders in general, irrespective of work graphs: The value indexing into a resource array or a {Resource|Sampler}DescriptorHeap must be uniform across all active threads in a wave, unless NonUniformResourceIndex is called directly on the index within the indexing operation, such as:

myBufferArray[NonUniformResourceIndex(index)].Load(...)

From the perspective of work graphs, the following specific considerations apply:

- Input record members: For thread launch nodes, since threads at a node may be packed into a wave, input record members are likely to be nonuniform unless the shader author knows there are fields in the input that are repeated across all records. For other launch modes, input records are uniform by construction.

- Local root arguments: Constant per node, meaning uniform.

Should an implementation pack threads from multiple thread groups (not from thread launch nodes) into a wave, or pack threads/groups from different nodes into a wave, this must not have any functionally visible side effects for shader code. In the case of deciding when to specify NURI, applications don’t need to worry about this possibility.

Producer - consumer dataflow through UAVs

Suppose a producer node wants to invoke a consumer and pass data to it through UAVs, separate from system-managed records. Perhaps the data doesn’t line up with record flow exactly or record size limits require the app to manually spill to UAVs.

To ensure the data is visible to the consumer, this is the supported path:

| Producer UAV writes | Consumer UAV reads |

|---|---|

atomics or globallycoherent stores |

atomics or globallycoherent loads |

Notice that the stores and loads above are globallycoherent, which means fastest GPU memory caches are bypassed for those paths to work. Atomics have this property as well; globallycoherent is implied, so specifying it has no effect on an atomic operation.

The consumer doesn’t need a barrier before accessing the data. In the producer though, a barrier is required between UAV writes and the node invocation request. A node invocation request is when a producer node’s shader executes an OutputComplete() or {Group|Thread}IncrementOutputCount() call. Resulting consumer node invocations can occur any time after this. The minimum barrier between relevant UAV writes and a node invocation request is Barrier(UAV_MEMORY or object,DEVICE_SCOPE), where object is the UAV. This keeps the UAV accesses and node invocation request in order.

An app might get away without this barrier - things seem to work fine. Maybe the driver internally did a barrier to enable its implementation of OutputComplete(), and unbenownst to the driver that barrier was what the app needed for its UAV data passing to work. And the driver’s compiler didn’t happen to move UAV writing code past

OutputComplete(). Then along comes a different device or driver without a hidden barrier, or the compiler validly moves UAV writing code pastOutputComplete()and the app falls apart.

In contrast to the listing above, here are the unsupported options for passing data from producer to consumer through UAVs, for the purpose of illustration:

| Producer UAV writes | Consumer UAV reads |

|---|---|

atomics or globallycoherent stores |

non-globallycoherent loads |

non-globallycoherent stores |

atomics or non-globallycoherent loads |

In other words, there isn’t a path enabled for both the producer and consumer to benefit from the fastest GPU memory caches during their individual accesses to the UAV data while making producer data visible to the consumer.

Notes on failed explorations:

A solid attempt was made to support producer writes staging in local/fastest caches (non-

globallycoherentmemory) with a path for the data to be visible in the consumer’s local/fastest caches (non-globallycoherentmemory). A complication is that the consumer could end up running on a distant core from the producer, exactly which core unpredictable.

One approach was allowing the Barrier() intrinsic to request release/acquire semantics generally understood in memory model lexicon, so that an appropriate release barrier after a consumer data write paired with acquire barrier before consumer read could complete the data-path from producer to consumer. This might have worked semantically but IHV feedback was that the expense of making these broadly scoped memory operations available at thread/group frequency would result in excessively redundant cache flushing/invalidation.

Another approach considered was a

NodeBarrier()intrinsic, which would allow producers to effectively queue up a request for heavyweight barrier before consumer node invocations that results in necessary producer cache flush and consumer cache invalidation roughly between producer finishing write and consumer invoking. This would not only maintain memory caching at both producer and consumer, but also enable scenarios like transitioning resources between UAV and SRV state between node invocations to allow texture filtering (with cache) of data generated within in a graph. Unfortunately not all drivers/hardware could implement thisNodeBarrier()concept cleanly in a reasonable timeframe. Further, the expectation is that longer term a more first-class mechanism for bulk synchronization will be introduced to work graphs that might help with this scenario and others. It would have been nice to have an interim solution given a full solution may take a long time, but it didn’t work out. Hopefully the learnings from the false starts taken so far will help inform a future design. In the meantime there may be more cases than might have been necessary where apps have to live with the drawbacks ofgloballycoherentmemory, or else break graphs apart over a command list. See Joins - synchronizing within the graph for related discussion about current fundamental graph limitations.

Examples of producer - consumer dataflow through UAVs

Dispatch grid writing to UAV for consumer node to read

globallycoherent RWByteAddressBuffer myUAV : register(u0);

groupshared uint g_TotalCompletedGroups;

[Shader("node")]

[NodeLaunch("broadcasting")]

[NumThreads(32,1,1)]

#define GRID_SIZE 16

[NodeDispatchGrid(GRID_SIZE,1,1)]

void myProducer(

[MaxRecords(1)] EmptyNodeOutput myConsumer,

uint groupID : SV_GroupID,

uint threadInGroup : SV_GroupIndex

)

{

g_TotalCompletedGroups = 0;

Barrier(g_TotalCompletedGroups, GROUP_SCOPE|GROUP_SYNC);

MyHelper_WritePerGroupUAVRegionUsingAtomicsOrStores(myUAV, groupID, threadInGroup);

// This barrier prevents the compiler from reordering UAV writes or node invocation requests across barrier

// The DEVICE_SCOPE portion ensures that the UAV writes are also seen globally before the atomic after the barrier.

// Note the above UAV writes still need to be in globallycoherent memory, or else written by atomics.

Barrier(myUAV, DEVICE_SCOPE|GROUP_SYNC);

if(threadInGroup == 0)

{

// Assume this location was initialized to 0:

myUAV.InterlockedAdd(progressAddress, 1, g_TotalCompletedGroups);

}

Barrier(g_TotalCompletedGroups,GROUP_SCOPE|GROUP_SYNC);

if(g_TotalCompletedGroups == GRID_SIZE-1) // all the other groups are done

{

myConsumer.GroupIncrementOutputCount(1); // Note: this call needs to be group uniform

}

}

[Shader("node")]

[NodeLaunch("broadcasting")]

[NumThreads(32,1,1)]

[NodeDispatchGrid(32,1,1)]

void myConsumer(

uint groupID : SV_GroupID,

uint threadInGroup : SV_GroupIndex

)

{

MyHelper_ConsumeDataUsingAtomicsOrLoads(myUAV, groupID, threadInGroup);

}

Every access to the UAV skips fastest caches. Which may be fine if the access pattern woudn’t benefit from the cache anyhow.

Single thread writing to UAV for consumer node to read

In this example an individual producer thread wants to make its UAV writes visible to a consumer.

globallycoherent RWByteAddressBuffer myUAV : register(u0);

[Shader("node")]

[NodeLaunch("thread")]

void myProducer(

[MaxRecords(1)] EmptyNodeOutput myConsumer

)

{

MyHelper_WriteUAVRegionUsingAtomicsOrStores(myUAV);

// This barrier prevents the compiler from moving UAV writes or node invocation requests across barrier.

// The DEVICE_SCOPE portion ensures that the UAV writes are also seen globally before the node

// invocation request after the barrier.

// Note the above UAV writes still need to be in globallycoherent memory, or else written by atomics.

Barrier(UAV_MEMORY, DEVICE_SCOPE);

myConsumer.ThreadIncrementOutputCount(1);

}

[Shader("node")]

[NodeLaunch("broadcasting")]

[NumThreads(32,1,1)]

[NodeDispatchGrid(32,1,1)]

void myConsumer(

uint groupID : SV_GroupID,

uint threadInGroup : SV_GroupIndex

)

{

MyHelper_ConsumeDataUsingAtomicsOrStores(myUAV, groupID, threadInGroup);

}

Work Graph

An app can define a set of nodes to be active together by defining a program of type WORK_GRAPH in a state object. This is constructed using the same state object infrastructure used for raytracing state objects, via the CreateStateObject API. So the work graph definition can be authored in HLSL or programatically.

All properties of individual nodes can be authored directly into a shader function definition. See HLSL.

The work graph definition can then optionally override any attributes of shaders being assigned to nodes in the graph. See the node description for a work graph.

Connectivity between nodes could manifest in many forms. There could be a set of nodes each with inputs and no outputs to other nodes - they may all simply do work that writes to resources as output. There could be a sets of nodes that generate disconnected paths of execution. The constraint is that the graph cannot have cycles other than any given node being allowed to target itself.

Node limits

Node count limits

The number of nodes in a graph is limited only to simplify the system’s tracking of nodes. From the programming model, nodes have Node IDs, which have two components: name and array index. The limit is defined such that implementations can map each node IDs in a graph into a 24 bit value. Thus there can be at most 0xffffff nodes in a graph.

Furthermore, the array index value that a Node’s ID uses counts against this limit. It is valid to have sparsely populated arrays of nodes, and so gaps count against the limit. Finally, for any node that is recursive, it’s NodeMaxRecursionDepth() also counts against the limit, as implementations may internally unroll recursive node.

Putting that all together, the limit on nodes in the graph is enforced with the following calculation:

- For all nodes that share a given Node ID string name, determine the highest array index value used with that string name:

MaxArrayIndexPerNodeStringName. Since the array index means0based counting, the number of slots used is:NumSlotsPerNodeStringName = MaxArrayIndexPerNodeStringName + 1. - Tally the sum of

NumSlotsPerNodeStringNamefor each unique string name:NumSlotsForAllNodeStringNames - For any node that declares

[NodeMaxRecursionDepth()], tally the sum of all these declarations asNumSlotsForRecursiveNodes NumSlotsForAllNodeStringNames + NumSlotsForRecursiveNodes <= 0xffffff

Regardless of this arbitrarily high limit, the more nodes that are active, the more the scheduler has to track, obviously. It is possible that a scheduler implementation does not have to degrade its performance significantly as node count grows. That is an aspiration though, and in practice there may be hardware dependent performance drops as node counts grow.