MLOS

MLOS is a project to enable autotuning for systems.


Overview

MLOS currently focuses on an offline tuning approach, though we intend to add online tuning in the future.

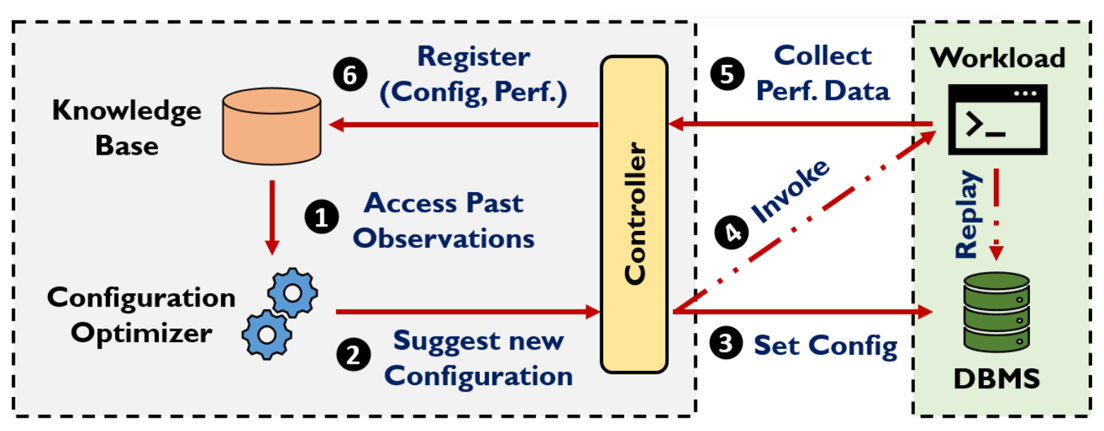

To accomplish this, the general flow involves:

1. Running a workload (i.e., a benchmark) against a system (e.g., a database, web server, or key-value store).

2. Retrieving the results of that benchmark, and perhaps some other metrics from the system.

3. Feeding that data to an optimizer (e.g., using Bayesian Optimization or other techniques).

4. Obtaining a new suggested config to try from the optimizer.

5. Applying that configuration to the target system.

6. Repeating until either the exploration budget is consumed or the configurations’ performance appears to have converged.

Source: LlamaTune: VLDB 2022
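The loop above can be sketched in a few lines of Python. This is a minimal, self-contained illustration, not MLOS code: tune, toy_benchmark, and random_suggest are hypothetical names, the "system" is a toy function whose best setting is x = 3, plain random search stands in for Bayesian Optimization, and convergence is approximated as a fixed number of trials (patience) without meaningful improvement.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Config = Dict[str, float]
History = List[Tuple[Config, float]]


def tune(
    run_benchmark: Callable[[Config], float],
    suggest: Callable[[History], Config],
    budget: int,
    patience: int = 5,
    tol: float = 1e-3,
) -> Tuple[Optional[Config], float]:
    """Run an offline tuning loop that minimizes the benchmark score."""
    history: History = []
    best_config: Optional[Config] = None
    best_score = float("inf")
    stale = 0  # consecutive trials without a meaningful improvement
    for _ in range(budget):  # stop when the exploration budget is consumed
        # Obtain a new suggested config and apply it to the (simulated) system.
        config = suggest(history)
        # Run the workload and retrieve its result.
        score = run_benchmark(config)
        # Record the observation so the optimizer can use it on the next call.
        history.append((config, score))
        if score < best_score - tol:
            best_config, best_score, stale = config, score, 0
        else:
            stale += 1
        # Treat `patience` trials without improvement as convergence.
        if stale >= patience:
            break
    return best_config, best_score


# Hypothetical stand-ins: a benchmark whose optimum is x = 3,
# and a random-search "optimizer" that ignores the history.
rng = random.Random(0)


def toy_benchmark(config: Config) -> float:
    return (config["x"] - 3.0) ** 2


def random_suggest(history: History) -> Config:
    return {"x": rng.uniform(0.0, 10.0)}


best_config, best_score = tune(toy_benchmark, random_suggest, budget=50, patience=50)
print(best_config, best_score)
```

A real optimizer would use the history passed to suggest() to pick more promising configs; keeping that call signature separate from the loop is what lets the search strategy change independently.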

For a brief overview of some of the features and capabilities of MLOS, please see the following video:

Organization

To do this, this repo provides three Python modules, which can be used independently or in combination:

- mlos-bench provides a framework to help automate running benchmarks as described above.

- mlos-viz provides some simple APIs to help automate visualizing the results of benchmark experiments and their trials.

  It provides a simple plot(experiment_data) API, where experiment_data is obtained from the mlos_bench.storage module.

- mlos-core provides an abstraction around existing optimization frameworks (e.g., FLAML, SMAC, etc.).

  It is intended to provide a simple, easy-to-consume (e.g., via pip), low-dependency abstraction to:

  - describe a space of context, parameters, their ranges, constraints, etc., and result objectives;
  - provide an “optimizer” service abstraction (e.g., register() and suggest()) so we can easily swap out different implementations or methods of searching (e.g., random, BO, LLM, etc.);
  - provide some helpers for automating optimization experiment runner loops and data collection.
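To illustrate the register()/suggest() idea, here is a minimal sketch in which two interchangeable search strategies share one interface, so the experiment loop does not change when the strategy does. All names (FloatParam, BaseOptimizer, RandomSearch, LocalSearch, optimize) and the two-parameter space are hypothetical; this is not the mlos_core API, which wraps real frameworks such as SMAC and FLAML.

```python
import random
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Config = Dict[str, float]


@dataclass(frozen=True)
class FloatParam:
    """A tunable parameter with an inclusive [low, high] range."""
    name: str
    low: float
    high: float


class BaseOptimizer(ABC):
    """Hypothetical register()/suggest() interface (not the mlos_core API)."""

    def __init__(self, space: List[FloatParam], seed: int = 0) -> None:
        self.space = space
        self.observations: List[Tuple[Config, float]] = []
        self._rng = random.Random(seed)

    def register(self, config: Config, score: float) -> None:
        """Record the observed score (lower is better) for a config."""
        self.observations.append((config, score))

    @abstractmethod
    def suggest(self) -> Config:
        """Return the next config to try."""


class RandomSearch(BaseOptimizer):
    def suggest(self) -> Config:
        return {p.name: self._rng.uniform(p.low, p.high) for p in self.space}


class LocalSearch(BaseOptimizer):
    """Perturbs the best config registered so far by up to 10% of each range."""

    def suggest(self) -> Config:
        if not self.observations:
            # Start from the middle of each range.
            return {p.name: (p.low + p.high) / 2 for p in self.space}
        best, _ = min(self.observations, key=lambda obs: obs[1])
        config: Config = {}
        for p in self.space:
            width = 0.1 * (p.high - p.low)
            value = best[p.name] + self._rng.uniform(-width, width)
            config[p.name] = min(max(value, p.low), p.high)  # clamp to the range
        return config


def optimize(opt: BaseOptimizer, benchmark: Callable[[Config], float],
             trials: int) -> Tuple[Config, float]:
    """The same loop works with any BaseOptimizer implementation."""
    for _ in range(trials):
        config = opt.suggest()
        opt.register(config, benchmark(config))
    return min(opt.observations, key=lambda obs: obs[1])


# Hypothetical two-parameter space and toy benchmark for illustration.
space = [FloatParam("cache_mb", 16.0, 1024.0), FloatParam("flush_interval_s", 0.1, 10.0)]


def toy_benchmark(config: Config) -> float:
    return (abs(config["cache_mb"] - 512.0) / 1008.0
            + abs(config["flush_interval_s"] - 2.0) / 9.9)


for opt in (RandomSearch(space), LocalSearch(space)):
    best_config, best_score = optimize(opt, toy_benchmark, trials=30)
    print(type(opt).__name__, best_config, round(best_score, 3))
```

Adding another strategy (for example, one backed by a Bayesian optimization library) would only require a new suggest() implementation; optimize() stays unchanged.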

For these design requirements, we intend to reuse as much as possible from existing OSS libraries and layer policies and optimizations specifically geared toward autotuning systems on top.

By providing wrappers, we also aim to make it easier to experiment with replacing underlying optimizer components as new techniques become available or prove to be a better match for certain systems.

Contributing

See CONTRIBUTING.md for details on development environment and contributing.

Getting Started

The development environment for MLOS uses conda and devcontainers to ease dependency management, but not all these libraries are required for deployment.

For instructions on setting up the development environment, please try one of the following options:

- see CONTRIBUTING.md for details on setting up a local development environment,

- launch this repository (or your fork) in a codespace, or

- have a look at one of the autotuning example repositories like sqlite-autotuning to kick the tires in a codespace in your browser immediately :)

conda activation

Create the mlos Conda environment:

conda env create -f conda-envs/mlos.yml

See the conda-envs/ directory for additional conda environment files, including those used for Windows (e.g., mlos-windows.yml).

or

# This will also ensure the environment is up to date using "conda env update -f conda-envs/mlos.yml"
make conda-env

Note: the latter expects a *nix environment.

Initialize the shell environment.

conda activate mlos
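If you script against this environment, a quick check that the activation step took effect can save confusion. The sketch below assumes conda's usual behavior of exporting the active environment's name in the CONDA_DEFAULT_ENV variable (for an environment activated by path it typically holds the path instead); active_conda_env and require_env are hypothetical helpers.

```python
import os
from typing import Mapping, Optional


def active_conda_env(environ: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the active conda environment name, or None if none is active."""
    # `conda activate <name>` exports CONDA_DEFAULT_ENV for the activated env.
    return environ.get("CONDA_DEFAULT_ENV")


def require_env(expected: str = "mlos", environ: Mapping[str, str] = os.environ) -> None:
    """Raise if the expected conda environment is not the active one."""
    env = active_conda_env(environ)
    if env != expected:
        raise RuntimeError(
            f"expected conda env {expected!r} to be active, found {env!r}; "
            f"run: conda activate {expected}"
        )


print("active conda env:", active_conda_env())
```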

Usage Examples

mlos-core

For an example of using the mlos_core optimizer APIs, run the BayesianOptimization.ipynb notebook.

mlos-bench

For an example of using the mlos_bench tool to run an experiment, see the mlos_bench Quickstart README.

Here’s a quick summary:

./scripts/generate-azure-credentials-config > global_config_azure.jsonc

# run a simple experiment

mlos_bench --config ./mlos_bench/mlos_bench/config/cli/azure-redis-1shot.jsonc
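The same two steps can also be driven from Python, for example from a larger test harness. This is a sketch that assumes it runs from the root of an MLOS checkout with mlos_bench on the PATH; run_quickstart and bench_command are hypothetical helpers, and only the script path, output file name, and --config flag come from the commands above. With dry_run=True (the default) it just returns the command lines without executing anything.

```python
import subprocess
from pathlib import Path
from typing import List

CREDS_SCRIPT = "./scripts/generate-azure-credentials-config"
CLI_CONFIG = "./mlos_bench/mlos_bench/config/cli/azure-redis-1shot.jsonc"


def bench_command(cli_config: str = CLI_CONFIG) -> List[str]:
    """Build the mlos_bench command line for a given CLI config file."""
    return ["mlos_bench", "--config", cli_config]


def run_quickstart(out_file: Path = Path("global_config_azure.jsonc"),
                   dry_run: bool = True) -> List[List[str]]:
    commands = [[CREDS_SCRIPT], bench_command()]
    if dry_run:
        return commands
    # Equivalent of redirecting the script's stdout into global_config_azure.jsonc.
    with out_file.open("w") as f:
        subprocess.run(commands[0], stdout=f, check=True)
    # Run the experiment; check=True raises CalledProcessError on a non-zero exit.
    subprocess.run(commands[1], check=True)
    return commands


for cmd in run_quickstart():
    print(" ".join(cmd))
```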

See Also:

mlos_bench/README.md for more details on this example.

mlos_bench/config for additional configuration details.

sqlite-autotuning for a complete external example of using MLOS to tune sqlite.

mlos-viz

For a simple example of using the mlos_viz module to visualize the results of an experiment, see the sqlite-autotuning repository, especially the mlos_demo_sqlite_teachers.ipynb notebook.

Installation

The MLOS modules are published to PyPI when new releases are tagged.

To install the latest release, simply run:

# this will install just the optimizer component with SMAC support:

pip install -U "mlos-core[smac]"

# this will install just the optimizer component with flaml support:

pip install -U "mlos-core[flaml]"

# this will install just the optimizer component with smac and flaml support:

pip install -U "mlos-core[smac,flaml]"

# this will install both the flaml optimizer and the experiment runner with azure support:

pip install -U "mlos-bench[flaml,azure]"

# this will install both the smac optimizer and the experiment runner with ssh support:

pip install -U "mlos-bench[smac,ssh]"

# this will install the postgres storage backend for mlos-bench

# and mlos-viz for visualizing results:

pip install -U "mlos-bench[postgres]" mlos-viz
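After installing, you can check which MLOS distributions are present using only the standard library's importlib.metadata. This is a small sketch: installed_versions is a hypothetical helper, and it reports only the base package version, not which extras (e.g., [smac] or [azure]) were requested.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

MLOS_PACKAGES = ("mlos-core", "mlos-bench", "mlos-viz")


def installed_versions(packages: Iterable[str] = MLOS_PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for name, ver in installed_versions().items():
    print(f"{name}: {ver or 'not installed'}")
```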

Details on using a local version from git are available in CONTRIBUTING.md.

See Also

API and Examples Documentation: https://microsoft.github.io/MLOS

Source Code Repository: https://github.com/microsoft/MLOS

Examples

- Working example of tuning sqlite with MLOS.

These can be used as starting points for new autotuning projects outside of the main MLOS repository if you want to keep your tuning experiment configs separate from the MLOS codebase.

Alternatively, we accept PRs to add new examples to the main MLOS repository! See mlos_bench/config and CONTRIBUTING.md for more details.

Publications

TUNA: Tuning Unstable and Noisy Cloud Applications at EuroSys 2025

MLOS in Action: Bridging the Gap Between Experimentation and Auto-Tuning in the Cloud at VLDB 2024

Towards Building Autonomous Data Services on Azure in SIGMOD Companion 2023

LlamaTune: Sample-efficient DBMS configuration tuning at VLDB 2022

MLOS: An infrastructure for automated software performance engineering at DEEM 2020