How To Configure Auto Optimizer

Auto Optimizer is a tool that automatically searches for a combination of Olive passes based on:

- input model
- target device
- target precision: fp32, fp16, int8, int4, etc.
- target evaluation metric: accuracy, latency, etc.

All of the above information (called “optimization factors” in this doc) is currently provided by the user through a configuration file, which is then run with:

```shell
olive run --config <config_file>.json
```
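If you drive Olive from a Python script, the same command can be launched with the standard library. This is a minimal sketch: `olive_run_argv` and `run_olive` are hypothetical helper names, and it assumes the `olive` CLI shown above is installed and on `PATH`. The file name `bert_gpu.json` is only a placeholder.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List


def olive_run_argv(config_path: str) -> List[str]:
    """Build the argument list for `olive run --config <config_file>.json`."""
    return ["olive", "run", "--config", str(Path(config_path))]


def run_olive(config_path: str) -> int:
    """Launch the Olive CLI on a config file and return its exit code.

    Assumes the `olive` executable is installed and on PATH.
    """
    if shutil.which("olive") is None:
        raise FileNotFoundError("the 'olive' CLI was not found on PATH")
    completed = subprocess.run(olive_run_argv(config_path), check=False)
    return completed.returncode
```

For example, `run_olive("bert_gpu.json")` runs the workflow and returns 0 on success.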

With the help of Auto Optimizer:

- Users DO NOT need to provide the passes combination manually.
- Users DO NOT need to write redundant pass configs.

At the same time, users still have the flexibility to specify the accelerator, execution providers, and expected precision of output models.

Currently, Auto Optimizer supports only the ONNX optimization stack. For OpenVINO (IR model) and SNPE, users still need to provide the passes combination manually. Auto Optimizer is under active development, and we will keep improving it to make it more powerful and easier to use.

Auto Optimizer Configuration

Config Fields:

- opt_level[int]: default 0. Used to look up the built-in passes template.

- disable_auto_optimizer[bool]: default False.

  If set to True, Auto Optimizer is disabled and users need to provide the passes combination manually.

- precision[optional[str]]: default None.

  The precision of the output model. If the user does not set it, it is determined by the optimization factors above. Olive currently supports “fp32”, “fp16” and “int8” output precision.
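The defaults above amount to a small merge-and-check step. The sketch below is a hypothetical helper (`resolve_auto_optimizer_config` is not an Olive API, and Olive's own validation may accept more fields or values); it only encodes the three documented fields, their defaults, and the output precisions this page lists as supported.

```python
from typing import Any, Dict, Optional

# Documented defaults for auto_optimizer_config.
AUTO_OPTIMIZER_DEFAULTS: Dict[str, Any] = {
    "opt_level": 0,
    "disable_auto_optimizer": False,
    "precision": None,  # None: precision is derived from the optimization factors
}

# Output precisions this page lists as currently supported.
SUPPORTED_PRECISIONS = {"fp32", "fp16", "int8"}


def resolve_auto_optimizer_config(user_cfg: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Hypothetical helper: overlay user values on the documented defaults and sanity-check them."""
    cfg = {**AUTO_OPTIMIZER_DEFAULTS, **(user_cfg or {})}
    opt_level = cfg["opt_level"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(opt_level, bool) or not isinstance(opt_level, int):
        raise TypeError("opt_level must be an int")
    if not isinstance(cfg["disable_auto_optimizer"], bool):
        raise TypeError("disable_auto_optimizer must be a bool")
    precision = cfg["precision"]
    if precision is not None and precision not in SUPPORTED_PRECISIONS:
        raise ValueError(
            f"unsupported precision {precision!r}; expected None or one of {sorted(SUPPORTED_PRECISIONS)}"
        )
    return cfg
```

For example, `resolve_auto_optimizer_config({"precision": "fp16"})` keeps `opt_level` at 0 and `disable_auto_optimizer` at False.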

Here is a simple example of an Auto Optimizer configuration; any item that is not provided uses its default value:

```json
{
    "engine": {
        "search_strategy": {
            "execution_order": "joint",
            "search_algorithm": "tpe",
            "search_algorithm_config": {
                "num_samples": 1,
                "seed": 0
            }
        },
        "evaluator": "common_evaluator",
        "cache_dir": "cache",
        "output_dir": "models/bert_gpu"
    },
    "auto_optimizer_config": {
        "opt_level": 0,
        "disable_auto_optimizer": false,
        "precision": "fp16"
    }
}
```

Note

In this example, Auto Optimizer will search for the best passes combination for each execution provider, e.g. CUDAExecutionProvider and TensorrtExecutionProvider. For CUDAExecutionProvider, it will try `float16` in `OrtTransformersOptimization`. For TensorrtExecutionProvider, it will try `trt_fp16` in `OrtSessionParamsTuning`.
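Because the configuration is plain JSON, it can also be generated from Python. This sketch rebuilds the simple Auto Optimizer example as a dictionary and writes it with the standard `json` module; `write_config` and any output file name are illustrative assumptions, not part of Olive.

```python
import json
from pathlib import Path

# Same content as the simple Auto Optimizer example; omitted items use defaults.
config = {
    "engine": {
        "search_strategy": {
            "execution_order": "joint",
            "search_algorithm": "tpe",
            "search_algorithm_config": {"num_samples": 1, "seed": 0},
        },
        "evaluator": "common_evaluator",
        "cache_dir": "cache",
        "output_dir": "models/bert_gpu",
    },
    "auto_optimizer_config": {
        "opt_level": 0,
        "disable_auto_optimizer": False,  # serialized as JSON false
        "precision": "fp16",
    },
}


def write_config(cfg: dict, path: str) -> Path:
    """Serialize a config dict as JSON, creating parent folders if needed."""
    out = Path(path)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text(json.dumps(cfg, indent=4))
    return out
```

For example, `write_config(config, "auto_opt_config.json")` produces a file that can be passed to `olive run --config auto_opt_config.json`.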

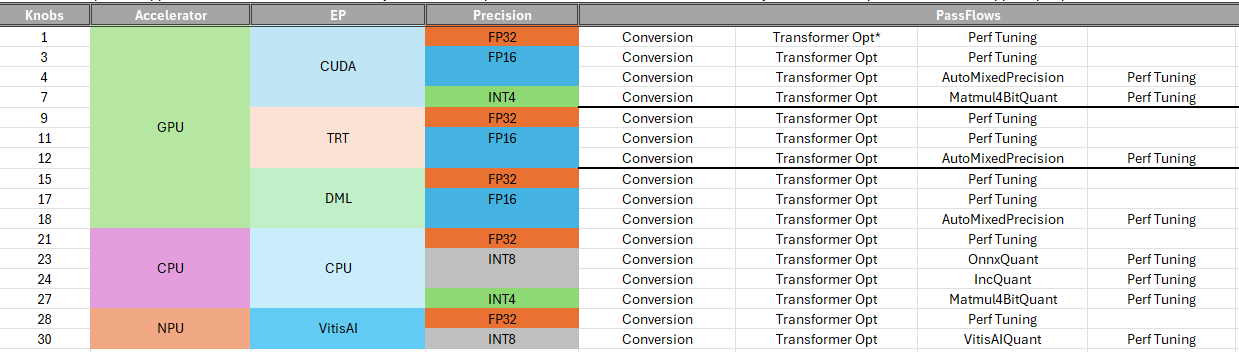

Here the available pass flows for given accelerator, execution providers and precision:

In most cases, Auto Optimizer can match the results of manual settings, and it is more convenient to use.

Here is a quick comparison between Auto Optimizer and manual settings.

Auto Optimizer:

```json
{
    "input_model": {
        "type": "HfModel",
        "model_path": "Intel/bert-base-uncased-mrpc",
        "task": "text-classification"
    },
    "systems": {
        "local_system": {
            "type": "LocalSystem",
            "accelerators": [
                {
                    "device": "gpu",
                    "execution_providers": [
                        "CUDAExecutionProvider",
                        "TensorrtExecutionProvider"
                    ]
                }
            ]
        }
    },
    "data_configs": [{
        "name": "glue",
        "type": "HuggingfaceContainer",
        "load_dataset_config": {
            "data_name": "glue",
            "split": "validation",
            "subset": "mrpc"
        },
        "pre_process_data_config": {
            "input_cols": ["sentence1", "sentence2"],
            "label_col": "label"
        },
        "dataloader_config": {
            "batch_size": 1
        }
    }],
    "evaluators": {
        "common_evaluator": {
            "metrics": [
                {
                    "name": "accuracy",
                    "type": "accuracy",
                    "backend": "huggingface_metrics",
                    "data_config": "glue",
                    "sub_types": [
                        {"name": "accuracy", "priority": 1, "goal": {"type": "max-degradation", "value": 0.01}},
                        {"name": "f1"}
                    ]
                },
                {
                    "name": "latency",
                    "type": "latency",
                    "data_config": "glue",
                    "sub_types": [
                        {"name": "avg", "priority": 2, "goal": {"type": "percent-min-improvement", "value": 20}},
                        {"name": "max"},
                        {"name": "min"}
                    ]
                }
            ]
        }
    },
    "search_strategy": {
        "execution_order": "joint",
        "search_algorithm": "tpe",
        "num_samples": 1,
        "seed": 0
    },
    "evaluator": "common_evaluator",
    "host": "local_system",
    "target": "local_system",
    "cache_dir": "cache",
    "output_dir": "models/bert_gpu"
}
```

Manual settings:

```json
{
    "input_model": {
        "type": "HfModel",
        "model_path": "Intel/bert-base-uncased-mrpc",
        "task": "text-classification"
    },
    "systems": {
        "local_system": {
            "type": "LocalSystem",
            "accelerators": [
                {
                    "device": "gpu",
                    "execution_providers": [
                        "CUDAExecutionProvider",
                        "TensorrtExecutionProvider"
                    ]
                }
            ]
        }
    },
    "data_configs": [{
        "name": "glue",
        "type": "HuggingfaceContainer",
        "load_dataset_config": {
            "data_name": "glue",
            "split": "validation",
            "subset": "mrpc"
        },
        "pre_process_data_config": {
            "max_samples": 100,
            "input_cols": ["sentence1", "sentence2"],
            "label_col": "label"
        },
        "dataloader_config": {
            "batch_size": 1
        }
    }],
    "evaluators": {
        "common_evaluator": {
            "metrics": [
                {
                    "name": "accuracy",
                    "type": "accuracy",
                    "backend": "huggingface_metrics",
                    "data_config": "glue",
                    "sub_types": [
                        {"name": "accuracy", "priority": 1, "goal": {"type": "max-degradation", "value": 0.01}},
                        {"name": "f1"}
                    ]
                },
                {
                    "name": "latency",
                    "type": "latency",
                    "data_config": "glue",
                    "sub_types": [
                        {"name": "avg", "priority": 2, "goal": {"type": "percent-min-improvement", "value": 20}},
                        {"name": "max"},
                        {"name": "min"}
                    ]
                }
            ]
        }
    },
    "passes": {
        "conversion": {
            "type": "OnnxConversion"
        },
        "cuda_transformers_optimization": {
            "type": "OrtTransformersOptimization",
            "float16": true
        },
        "trt_transformers_optimization": {
            "type": "OrtTransformersOptimization",
            "float16": false
        },
        "cuda_session_params_tuning": {
            "type": "OrtSessionParamsTuning",
            "enable_cuda_graph": true,
            "io_bind": true,
            "data_config": "glue"
        },
        "trt_session_params_tuning": {
            "type": "OrtSessionParamsTuning",
            "enable_cuda_graph": false,
            "enable_trt_fp16": true,
            "io_bind": true,
            "data_config": "glue"
        }
    },
    "pass_flows": [
        ["conversion", "cuda_transformers_optimization", "cuda_session_params_tuning"],
        ["conversion", "trt_transformers_optimization", "trt_session_params_tuning"]
    ],
    "search_strategy": {
        "execution_order": "joint",
        "search_algorithm": "tpe",
        "num_samples": 1,
        "seed": 0
    },
    "evaluator": "common_evaluator",
    "host": "local_system",
    "target": "local_system",
    "cache_dir": "cache",
    "output_dir": "models/bert_gpu"
}
```

Note

In this example, Auto Optimizer can match the manual settings using only its default settings. Auto Optimizer is aware of the following rules, which require expert knowledge when the passes are configured manually:

- For CUDAExecutionProvider: it is better to disable `enable_trt_fp16` and enable `enable_cuda_graph` in the `OrtSessionParamsTuning` pass, and enable `float16` in the `OrtTransformersOptimization` pass.

- For TensorrtExecutionProvider: it is better to enable `enable_trt_fp16` and disable `enable_cuda_graph` in the `OrtSessionParamsTuning` pass, and disable `float16` in the `OrtTransformersOptimization` pass.

- For both CUDAExecutionProvider and TensorrtExecutionProvider: it is better to enable `io_bind` in the `OrtSessionParamsTuning` pass.
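To make these rules concrete, here is a sketch of the bookkeeping a user would otherwise do by hand. `build_manual_passes` and `EP_RULES` are hypothetical names used only for illustration; this is not how Olive implements Auto Optimizer. Given the two execution providers from the comparison and the `glue` data config, it reproduces the `passes` and `pass_flows` of the manual configuration.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical encoding of the per-EP rules above (illustration only, not Olive's code).
EP_RULES: Dict[str, Dict[str, Any]] = {
    "CUDAExecutionProvider": {
        "prefix": "cuda",
        "float16": True,            # OrtTransformersOptimization
        "enable_cuda_graph": True,  # OrtSessionParamsTuning
        "enable_trt_fp16": False,   # OrtSessionParamsTuning
    },
    "TensorrtExecutionProvider": {
        "prefix": "trt",
        "float16": False,
        "enable_cuda_graph": False,
        "enable_trt_fp16": True,
    },
}


def build_manual_passes(
    execution_providers: List[str], data_config: str
) -> Tuple[Dict[str, Dict[str, Any]], List[List[str]]]:
    """Return (passes, pass_flows) that apply the CUDA/TensorRT rules by hand."""
    passes: Dict[str, Dict[str, Any]] = {"conversion": {"type": "OnnxConversion"}}
    pass_flows: List[List[str]] = []
    for ep in execution_providers:
        rule = EP_RULES[ep]  # raises KeyError for EPs these rules do not cover
        opt_name = f"{rule['prefix']}_transformers_optimization"
        tune_name = f"{rule['prefix']}_session_params_tuning"
        passes[opt_name] = {"type": "OrtTransformersOptimization", "float16": rule["float16"]}
        tuning: Dict[str, Any] = {
            "type": "OrtSessionParamsTuning",
            "enable_cuda_graph": rule["enable_cuda_graph"],
        }
        if rule["enable_trt_fp16"]:
            # Only set when enabled; the manual config omits it for CUDA.
            tuning["enable_trt_fp16"] = True
        tuning["io_bind"] = True  # recommended for both execution providers
        tuning["data_config"] = data_config
        passes[tune_name] = tuning
        pass_flows.append(["conversion", opt_name, tune_name])
    return passes, pass_flows
```

Every additional execution provider or precision would need another hand-written entry in a table like `EP_RULES`; this is the kind of expert bookkeeping Auto Optimizer is meant to take off the user's hands.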