Scalers

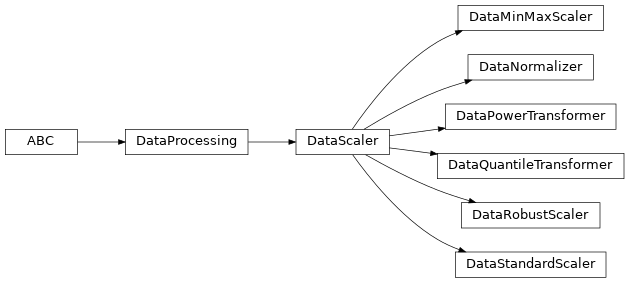

This sub-module of the dataprocessing package collects all scaler transformers implemented here. Scalers are responsible scaling numeric features in order to make these features comparable and have similar value ranges. There are different scaling approaches that can be applied, each with their own advantages. All the scaling methods from the dataprocessing package are based on the abstract class presented below, called DataScaler.

- class raimitigations.dataprocessing.DataScaler(df: Optional[Union[DataFrame, ndarray]] = None, exclude_cols: Optional[list] = None, include_cols: Optional[list] = None, transform_pipe: Optional[list] = None, verbose: bool = True)

Bases:

DataProcessingBase class used by all data scaling or data transformation classes. Implements basic functionalities that can be used by different scaling or transformation class.

- Parameters

df – pandas data frame that contains the columns to be scaled and/or transformed;

exclude_cols – a list of the column names or indexes that shouldn’t be transformed, that is, a list of columns to be ignored. This way, it is possible to transform only certain columns and leave other columns unmodified. This is useful if the dataset contains a set of binary columns that should be left as is, or a set of categorical columns (which can’t be scaled or transformed). If the categorical columns are not added in this list (

exclude_cols), the categorical columns will be automatically identified and added into theexclude_colslist. If None, this parameter will be set automatically as being a list of all categorical variables in the dataset;include_cols – list of the column names or indexes that should be transformed, that is, a list of columns to be included in the dataset being transformed. This parameter uses an inverse logic from the

exclude_cols, and thus these two parameters shouldn’t be used at the same time. The user must used either theinclude_cols, or theexclude_cols, or neither of them;transform_pipe – a list of transformations to be used as a pre-processing pipeline. Each transformation in this list must be a valid subclass of the current library (

EncoderOrdinal,BasicImputer, etc.). Some feature selection methods require a dataset with no categorical features or with no missing values (depending on the approach). If no transformations are provided, a set of default transformations will be used, which depends on the feature selection approach (subclass dependent). This parameter also accepts other scalers in the list. When this happens and theinverse_transform()method of self is called, theinverse_transform()method of all scaler objects that appear in thetransform_pipelist after the last non-scaler object are called in a reversed order. For example, ifis instantiated with transform_pipe=[BasicImputer(), DataQuantileTransformer(), EncoderOHE(), DataPowerTransformer()], then, when calling :meth:`fiton theDataMinMaxScalerobject, first the dataset will be fitted and transformed using BasicImputer, followed by DataQuantileTransformer, EncoderOHE, and DataPowerTransformer, and only then it will be fitted and transformed using the current DataMinMaxScaler. Thetransform()method works in a similar way, the difference being that it doesn’t callfit()for the data scaler in thetransform_pipe. For theinverse_transform()method, the inverse transforms are applied in reverse order, but only the scaler objects that appear after the last non-scaler object in thetransform_pipe: first, we inverse theDataMinMaxScaler, followed by the inversion of theDataPowerTransformer. TheDataQuantileTransformerisn’t reversed because it appears between two non-scaler objects:BasicImputerandEncoderOHE;verbose – indicates whether internal messages should be printed or not.

- fit(df: Optional[Union[DataFrame, ndarray]] = None, y: Optional[Union[Series, ndarray]] = None)

Fit method used by all concrete scalers that inherit from the current abstract DataScaler class. This method executes the following steps: (i) set the dataframe (if not already set in the constructor), (ii) check for errors or inconsistencies in some in the exclude_cols parameter and in the provided dataset, (iii) set the tranforms list, (iv) call the fit and transform methods of other transformations passed through the transform_pipe parameter (such as imputation, feature selection, other scalers, etc.), (v) remove the columns that should be ignored according the exclude_cols or include_cols, (vi) call the _fit() method to effectively fit the current scaler on the preprocessed dataset.

- Parameters

df – the full dataset containing the columns that should be scaled;

y – ignored. This exists for compatibility with the

sklearn’s Pipeline class.

- transform(df: Union[DataFrame, ndarray])

Transform method used by all concrete scalers that inherit from the current abstract DataScaler class. First, check if all columns that should be ignored are present in the dataset, which is useful to check the consistency of the dataset with the one used during fit and transform. In the sequence, apply all the transforms in the transform_pipe parameter. Finally, call the transform() method of the current scaler over the preprocessed dataset and return a new dataset.

- Parameters

df – the full dataset containing the columns that should be scaled.

- Returns

the transformed dataset.

- Return type

pd.DataFrame or np.ndarray

The following is a list of all scalers implemented in this module. All of the classes below inherit from the DataScaler class, and thus, have access to all of the methods previously shown.

Child Classes

Class Diagram