CatBoostSelection

- class raimitigations.dataprocessing.CatBoostSelection(df: Optional[Union[DataFrame, ndarray]] = None, label_col: Optional[str] = None, X: Optional[Union[DataFrame, ndarray]] = None, y: Optional[Union[DataFrame, ndarray]] = None, transform_pipe: Optional[list] = None, regression: Optional[bool] = None, estimator: Optional[Union[CatBoostClassifier, CatBoostRegressor]] = None, catboost_log: bool = True, catboost_plot: bool = False, test_size: float = 0.2, cat_col: Optional[list] = None, n_feat: Optional[int] = None, fixed_cols: Optional[list] = None, algorithm: str = 'loss', steps: int = 1, save_json: bool = False, json_summary: str = 'cb_feat_summary.json', verbose: bool = True)

Bases: FeatureSelection

Concrete class that uses the CatBoost model and its feature importance values to select the most important features. CatBoost is a tree boosting method capable of handling categorical features. It internally computes an importance score for each feature, and these scores can be used to perform feature selection. The CatBoost implementation (from the catboost lib) already provides this functionality. The CatBoostSelection subclass encapsulates the complexities associated with this functionality and makes it easier for the user to perform feature selection over a dataset.

- Parameters

df – the data frame to be used during the fit method. This data frame must contain all the features, including the label column (specified in the label_col parameter). This parameter is mandatory if label_col is also provided. The user can also provide this dataset (along with label_col) when calling the fit() method. If df is provided during the class instantiation, it is not necessary to provide it again when calling fit(). It is also possible to use X and y instead of df and label_col. However, either the pair (X, y) or the pair (df, label_col) must be passed, either during the class instantiation or when calling fit();

label_col – the name or index of the label column. This parameter is mandatory if df is provided;

X – contains only the features of the original dataset, that is, it does not contain the label column. This is useful if the user has already separated the features from the label column before calling this class. This parameter is mandatory if y is provided;

y – contains only the label column of the original dataset. This parameter is mandatory if X is provided;

transform_pipe – a list of transformations to be used as a pre-processing pipeline. Each transformation in this list must be a valid subclass of the current library (EncoderOrdinal, BasicImputer, etc.). Some feature selection methods require a dataset with no categorical features or with no missing values (depending on the approach). If no transformations are provided, a set of default transformations is used, which depends on the feature selection approach (subclass dependent);

regression – if True and no estimator is provided, a default CatBoostRegressor is created. If False, a CatBoostClassifier is created instead. This parameter is ignored if an estimator is provided using the estimator parameter;

estimator – a CatBoostClassifier or CatBoostRegressor object that will be used for filtering the most important features. If no estimator is provided, a default CatBoostClassifier or CatBoostRegressor is used. The latter is used if regression is set to True or if the type of the label column is float, while the former is used otherwise;

catboost_log – if True, the default estimator prints logging messages during its training phase. If False, no log is printed. This parameter is only used when estimator is None, because it only affects the creation of the default estimator, which is not created when the user specifies the estimator to be used. This parameter is automatically set to False if verbose is False;

catboost_plot – if True, uses CatBoost's plot feature. In a Python notebook environment, this creates an interactive plot showing the loss function for both the training and test sets, as well as the error obtained when removing an increasing number of features. In a script environment, it opens a web interface showing this plot. If False, no plot is generated. This parameter is only used when estimator is None, for the same reason as catboost_log;

test_size – the fraction of the data used as the test set when training the CatBoost model that produces the importance score of each feature;

cat_col – a list with the name or index of all categorical columns. These columns do not need to be encoded, since CatBoost does this encoding internally. If None, this list is automatically set to all categorical features found in the dataset;

n_feat – the number of features to be selected. If None, the following procedure is used. First, CatBoost's feature selection is configured to select half of the existing features. Second, after it runs, CatBoost generates a 'loss_graph' that gives the value of the loss function for each number of removed features: the loss when 0 features were removed, the loss when 1 feature was removed, and so on, up to the point where half of the features were removed. From this loss graph, we take the number of removed features that produced the best loss. The selected features are then the ones that remained at that point;

fixed_cols – a list of column names or indices that should always be included in the set of selected features. The number of columns included here must be smaller than n_feat (that is, len(fixed_cols) < n_feat). Otherwise, there is nothing for the class to do;

algorithm – the algorithm used to perform feature selection. CatBoost uses a Recursive Feature Selection approach, where one feature is removed at a time. The feature chosen for removal is the one with the lowest importance. The algorithms differ only in how this importance is computed. This parameter can be one of the following string values: ['predict', 'loss', 'shap']. Each allowed algorithm is described below according to CatBoost's own documentation (text in double quotation marks was extracted from CatBoost's official documentation):

'predict': uses the catboost.EFeaturesSelectionAlgorithm.RecursiveByPredictionValuesChange algorithm. According to CatBoost's documentation: “the fastest algorithm and the least accurate method (not recommended for ranking losses)” - “For each feature, PredictionValuesChange shows how much on average the prediction changes if the feature value changes. The bigger the value of the importance the bigger on average is the change to the prediction value, if this feature is changed.”;

'loss': uses the catboost.EFeaturesSelectionAlgorithm.RecursiveByLossFunctionChange algorithm. According to CatBoost's documentation: “the optimal option according to accuracy/speed balance” - “For each feature the value represents the difference between the loss value of the model with this feature and without it. The model without this feature is equivalent to the one that would have been trained if this feature was excluded from the dataset. Since it is computationally expensive to retrain the model without one of the features, this model is built approximately using the original model with this feature removed from all the trees in the ensemble. The calculation of this feature importance requires a dataset and, therefore, the calculated value is dataset-dependent.”;

'shap': uses the catboost.EFeaturesSelectionAlgorithm.RecursiveByShapValues algorithm. According to CatBoost's documentation: “the most accurate method.”. For this algorithm, CatBoost uses SHAP values to determine the importance of each feature;

steps – the number of times the model is trained. The greater the number of steps, the more accurate the importance score of each feature;

save_json – if True, saves the summary generated by the CatBoost model to a JSON file. For more information on the data contained in this summary, please check CatBoost's official documentation;

json_summary – the path and name of the JSON file created when saving the summary. This file is only saved when save_json is set to True;

verbose – indicates whether internal messages should be printed or not.
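The parameters algorithm, steps, n_feat and fixed_cols work together during recursive elimination. The pure-Python sketch below illustrates that interplay. It is not the actual implementation used by CatBoostSelection or CatBoost. The function recursive_elimination and the importance_fn callback are hypothetical. importance_fn stands in for the scores CatBoost computes with 'predict', 'loss' or 'shap'. Spreading the removals evenly across steps is also an assumption.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical callback type: maps the currently selected features to an
# importance score per feature (a stand-in for CatBoost's importance values).
ImportanceFn = Callable[[List[str]], Dict[str, float]]


def recursive_elimination(
    features: Sequence[str],
    importance_fn: ImportanceFn,
    n_feat: int,
    steps: int = 1,
    fixed_cols: Sequence[str] = (),
) -> List[str]:
    """Remove the least important non-fixed features until n_feat remain.

    Importance is recomputed once per step (mimicking one model training per
    step); spreading the removals evenly over the steps is an assumption.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if len(fixed_cols) >= n_feat:
        raise ValueError("len(fixed_cols) must be smaller than n_feat")
    selected = list(features)
    to_remove = max(len(selected) - n_feat, 0)
    for step in range(steps):
        # Number of features dropped in this step (earlier steps take the remainder).
        n_this_step = to_remove // steps + (1 if step < to_remove % steps else 0)
        if n_this_step == 0:
            continue
        scores = importance_fn(selected)  # one "training" per step
        # Columns listed in fixed_cols are never candidates for removal.
        candidates = sorted(
            (f for f in selected if f not in fixed_cols), key=lambda f: scores[f]
        )
        for f in candidates[:n_this_step]:
            selected.remove(f)
    return selected


# Toy, made-up importance scores that do not change between steps.
toy_scores = {"a": 0.9, "b": 0.1, "c": 0.5, "d": 0.05, "e": 0.7}
print(recursive_elimination(list(toy_scores), lambda sel: toy_scores, n_feat=2))
# ['a', 'e']
print(recursive_elimination(list(toy_scores), lambda sel: toy_scores,
                            n_feat=2, steps=2, fixed_cols=["d"]))
# ['a', 'd']
```

With a real model, the scores would change after each retraining. This is why a larger steps value tends to give more accurate importance scores, at the cost of more training runs.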

- get_summary()

Public method that returns the summary generated by the CatBoost model. For more information on the data contained in this summary, please check CatBoost's official documentation: https://catboost.ai/en/docs/concepts/output-data_features-selection

- Returns

a dictionary with the results obtained by the feature selection method.

- Return type

dict
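The dictionary returned by get_summary() comes from CatBoost's feature selection. The sketch below assumes it has the following layout:

- a 'loss_graph' entry holding 'removed_features_count' and 'loss_values' lists;
- an 'eliminated_features_names' list in removal order.

Check these key names against the installed catboost version. Under that assumption, the hypothetical helpers below show how to read the best point of the loss graph, which is the same criterion n_feat=None relies on. The summary used here is made-up toy data.

```python
from typing import Any, Dict, List, Sequence, Tuple


def best_point_in_loss_graph(summary: Dict[str, Any]) -> Tuple[int, float]:
    """Return (removed_features_count, loss) for the lowest loss in the graph."""
    graph = summary["loss_graph"]  # assumed key layout, see lead-in
    counts: List[int] = graph["removed_features_count"]
    losses: List[float] = graph["loss_values"]
    # min() keeps the first minimum, i.e. the fewest removed features on ties.
    best_idx = min(range(len(losses)), key=losses.__getitem__)
    return counts[best_idx], losses[best_idx]


def features_at_best_loss(all_features: Sequence[str], summary: Dict[str, Any]) -> List[str]:
    """Keep every feature not among the first k eliminated ones (k = best count)."""
    k, _ = best_point_in_loss_graph(summary)
    dropped = set(summary["eliminated_features_names"][:k])
    return [f for f in all_features if f not in dropped]


# Toy summary with made-up numbers, shaped like the assumed layout.
toy_summary = {
    "eliminated_features_names": ["x3", "x1", "x4"],
    "loss_graph": {
        "removed_features_count": [0, 1, 2, 3],
        "loss_values": [0.52, 0.49, 0.47, 0.50],
    },
}
print(best_point_in_loss_graph(toy_summary))  # (2, 0.47)
print(features_at_best_loss(["x0", "x1", "x2", "x3", "x4", "x5"], toy_summary))
# ['x0', 'x2', 'x4', 'x5']
```

The helpers treat a lower loss value as better, which holds for a loss function that is minimized.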

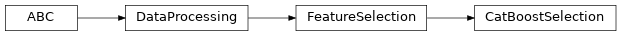

Class Diagram