Overview

Most customers have accumulated a large collection of genomic data that needs to be moved to the cloud, and it's not uncommon for a customer to have 1 PB or more on premises. In addition to this initial snapshot, they produce new datasets daily, which also need to be moved to the cloud on a recurring basis.

For the initial snapshot, there are two commonly used ways to migrate the data. We can ship it on a storage device such as Azure Data Box, or we can move it over the wire using the customer's existing connectivity to Azure, whether that is Azure ExpressRoute, a site-to-site VPN, or even the secure internet endpoints of the Azure Storage services.

Azure Data Box is not always the right or fastest solution. It takes time to acquire the physical Data Box, copy the data onto it, ship it back, and wait for the data to be loaded into your storage account. Depending on the amount of data and the available bandwidth, it may be faster to move the data over the wire. Review both the online and offline options to see which one works best for your scenario.
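A quick way to compare the two options is to estimate how long the over-the-wire transfer would take: divide the data size by the throughput you can actually sustain. The function below is a minimal sketch; it assumes decimal units (1 TB = 10^12 bytes, 1 Gbps = 10^9 bits per second), and the utilization fraction is an assumption you should replace with a measured value.

```shell
#!/usr/bin/env bash
# Rough over-the-wire transfer time, in days.
# Arguments: data size in TB, link speed in Gbps, and the fraction of the
# link you expect to sustain (an assumption; defaults to 0.8 here).
transfer_days() {
  local size_tb=$1 link_gbps=$2 utilization=${3:-0.8}
  awk -v tb="$size_tb" -v gbps="$link_gbps" -v u="$utilization" 'BEGIN {
    bits = tb * 1e12 * 8        # decimal terabytes to bits
    bps  = gbps * 1e9 * u       # sustained bits per second
    printf "%.1f\n", bits / bps / 86400
  }'
}

# 1 PB (1000 TB) over a 10 Gbps link at 80% utilization
transfer_days 1000 10 0.8   # prints 11.6
```

At full line rate the same 1 PB takes about 9.3 days, while over a 1 Gbps link at 80% utilization it takes roughly 116 days; the slower the link, the more likely an offline Data Box comes out ahead.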

The choice for the periodic datasets is more clear-cut. Given how often new genomic datasets are produced and how large each day's data is, moving the data over the wire will likely be the best option.

For this quickstart, we will focus on moving the data over the wire and demonstrate how to set up the required infrastructure. For the initial snapshot we'll use AzCopy, and for the periodic (differential) data we'll use [Azure Data Box Gateway](https://docs.microsoft.com/en-us/azure/databox-gateway/data-box-gateway-overview).

Using AzCopy to move snapshot data

AzCopy is a command-line utility that you can use to copy blobs or files to or from a storage account. It is cross-platform and available on Windows, Linux, and macOS. Please review the Getting started with AzCopy documentation to set up and start using the tool.

To access your storage account, you need to choose which type of security principal to use. AzCopy supports a user identity, a managed identity, and a service principal. Because we are running the load process from outside of Azure and will be automating it, we'll use a service principal. Make sure the service principal has a role that lets it read and write data in the storage account; in this case we'll use the Storage Blob Data Contributor role.
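If your service principal already exists, the role can be granted with the Azure CLI. The sketch below scopes the assignment to a single storage account rather than a whole subscription, which keeps the principal's access as narrow as possible. The function names and the CLIENT_ID, SUBSCRIPTION_ID, RESOURCE_GROUP, and STORAGE_ACCOUNT variables are placeholders chosen for this example.

```shell
#!/usr/bin/env bash
# Build the Azure Resource Manager ID of a storage account.
# Arguments: subscription ID, resource group, storage account name.
storage_account_scope() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Storage/storageAccounts/%s\n' \
    "$1" "$2" "$3"
}

# Grant an existing service principal (CLIENT_ID, a placeholder variable)
# the Storage Blob Data Contributor role on one storage account only.
grant_blob_contributor() {
  az role assignment create \
    --assignee "$CLIENT_ID" \
    --role "Storage Blob Data Contributor" \
    --scope "$(storage_account_scope "$SUBSCRIPTION_ID" "$RESOURCE_GROUP" "$STORAGE_ACCOUNT")"
}
```

Role assignments can take several minutes to take effect, so an AzCopy command run immediately afterwards may still be denied.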

Service Principal configuration

If you don’t have a Service Principal, follow this guide to learn more and set one up in your environment.
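If you are creating a new service principal, the Azure CLI can create it and grant the role in one step. This is a minimal sketch; the function name and its arguments are hypothetical, and it looks up the storage account's resource ID so the role is scoped to that account only.

```shell
#!/usr/bin/env bash
# Create a service principal for the transfer host and grant it
# Storage Blob Data Contributor on one storage account.
# Arguments (placeholders): SP name, resource group, storage account name.
create_transfer_sp() {
  local sp_name=$1 resource_group=$2 account=$3 scope
  # Look up the storage account's full resource ID to use as the role scope.
  scope=$(az storage account show \
    --name "$account" --resource-group "$resource_group" \
    --query id --output tsv) || return 1
  # The output includes appId (client ID), password (client secret) and
  # tenant (tenant ID). Store the secret securely; it cannot be retrieved later.
  az ad sp create-for-rbac \
    --name "$sp_name" \
    --role "Storage Blob Data Contributor" \
    --scopes "$scope"
}
```

For example: `create_transfer_sp genomics-transfer-sp my-resource-group mystorageaccount`.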

Once you have one, there are a few minor configuration steps to complete on the machine you will use for copying data. We'll walk through the setup on an Ubuntu Linux VM. If you are using Windows or macOS, please refer to the Getting started with AzCopy documentation.
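If AzCopy is not yet installed on the VM, the sketch below downloads the current AzCopy v10 release for 64-bit Linux from the link published in the AzCopy documentation. The function name and the /usr/local/bin install location are choices made for this example.

```shell
#!/usr/bin/env bash
# Install the latest AzCopy v10 binary on an x86_64 Linux host.
install_azcopy() {
  local tmp
  tmp=$(mktemp -d) || return 1
  wget -q -O "$tmp/azcopy.tar.gz" https://aka.ms/downloadazcopy-v10-linux || return 1
  # The archive holds a versioned folder; strip it so the binary lands in $tmp.
  tar -xzf "$tmp/azcopy.tar.gz" -C "$tmp" --strip-components=1 || return 1
  sudo install -m 0755 "$tmp/azcopy" /usr/local/bin/azcopy || return 1
  rm -rf "$tmp"
  # Confirm the installed version.
  azcopy --version
}
```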

You'll need two values from the service principal: the application (client) ID and the corresponding client secret. The login command also needs the ID of your Azure AD tenant.

Create an environment variable named AZCOPY_SPA_CLIENT_SECRET and set its value to your client secret. Export it so that the azcopy process can read it.

```shell
export AZCOPY_SPA_CLIENT_SECRET='<your-client-secret>'
```

Once the secret is set, you can use azcopy to log in as the service principal.

```shell
azcopy login --service-principal --application-id <your-client-id> --tenant-id <your-tenant-id>
```

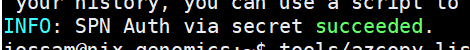

If you were successful, you’ll see a message similar to this:
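Because the load process will run unattended, it helps to fail fast with a clear message when the secret or IDs are missing, rather than letting azcopy fail partway through a scheduled job. The wrapper below is a hypothetical sketch; CLIENT_ID and TENANT_ID are placeholder variable names, while AZCOPY_SPA_CLIENT_SECRET is the variable AzCopy itself reads.

```shell
#!/usr/bin/env bash
# Non-interactive AzCopy login for scheduled jobs.
azcopy_spn_login() {
  if [ -z "${AZCOPY_SPA_CLIENT_SECRET:-}" ]; then
    echo "AZCOPY_SPA_CLIENT_SECRET is not set" >&2
    return 1
  fi
  if [ -z "${CLIENT_ID:-}" ] || [ -z "${TENANT_ID:-}" ]; then
    echo "CLIENT_ID and TENANT_ID must both be set" >&2
    return 1
  fi
  azcopy login --service-principal \
    --application-id "$CLIENT_ID" --tenant-id "$TENANT_ID"
}
```

When setting the secret by hand in bash, `read -rs AZCOPY_SPA_CLIENT_SECRET && export AZCOPY_SPA_CLIENT_SECRET` reads it without echoing it or leaving it in your shell history.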

Now that you can log in, try running a simple command to test connectivity to your storage account. For example, this command will list the contents of the given container.

```shell
azcopy list https://<account>.blob.core.windows.net/<container>
```

Great, you now have access to your Azure Storage account from your private network. You can now move files from your private network to Azure with the azcopy copy command. Here's an example that copies the contents of an entire directory.

```shell
azcopy copy '/datadrive/1000-genomes' 'https://mystorageaccount.blob.core.windows.net/1000-genomes' --recursive
```

Refer to the documentation for proper syntax and additional examples.
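For large genomic uploads a few additional azcopy copy options are often worth adding. The sketch below is one possible combination, not a recommended default; the function name and its arguments are hypothetical.

```shell
#!/usr/bin/env bash
# Upload a directory with options that help on long-running transfers.
# Arguments (placeholders): local source directory, destination container URL.
upload_dataset() {
  local src_dir=$1 dest_url=$2
  # --put-md5: compute each file's MD5 hash and store it as the blob's
  #            Content-MD5 property, so the upload can be verified later.
  # --overwrite=ifSourceNewer: only replace a blob when the local file is newer.
  # --log-level=WARNING: keep log files small on long-running jobs.
  azcopy copy "$src_dir" "$dest_url" \
    --recursive \
    --put-md5 \
    --overwrite=ifSourceNewer \
    --log-level=WARNING
}
```

For example: `upload_dataset '/datadrive/1000-genomes' 'https://mystorageaccount.blob.core.windows.net/1000-genomes'`. Computing MD5 hashes adds some CPU work on the source machine, which is the trade-off for being able to verify data integrity afterwards.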

Moving large files over the wire has some complexities which AzCopy is designed to address. Here are a few things that we’ve found to be helpful.

- Run the AzCopy benchmark command. It tests connectivity between the source and destination by uploading generated test files. The output will help you understand the expected throughput and right-size your VM.

- Orchestrate and monitor your jobs with a scheduler or orchestrator. For example, Azure Data Factory could be used to trigger and monitor jobs.

- Check for failed jobs and resume them if needed. File uploads can fail for various reasons, so monitor your jobs and resume any that fail. AzCopy's resume feature uses the job plan to determine which files need to be restarted.
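The benchmark and resume steps above correspond to AzCopy's benchmark and jobs commands, sketched below as small shell functions. The function names are hypothetical, and the file count and file size passed to the benchmark are illustrative values only; if you are logged in as the service principal, the destination URL should not need a SAS token.

```shell
#!/usr/bin/env bash
# Upload auto-generated test files to a container and report throughput.
# By default AzCopy deletes the test data when the benchmark finishes.
# Arguments (placeholders): storage account name, container name.
run_benchmark() {
  local account=$1 container=$2
  azcopy benchmark "https://${account}.blob.core.windows.net/${container}" \
    --file-count 100 --size-per-file 1G
}

# Show only the transfers in a job that failed.
# Argument: job ID, as printed by "azcopy jobs list".
show_failed_transfers() {
  azcopy jobs show "$1" --with-status=Failed
}

# Retry the failed and pending transfers recorded in a job's plan file.
resume_job() {
  azcopy jobs resume "$1"
}
```

A typical sequence is to run azcopy jobs list to find the job ID, inspect it with show_failed_transfers, and then call resume_job with the same ID.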

Using Azure Storage Explorer

Microsoft Azure Storage Explorer is a standalone app that makes it easy to work with Azure Storage data on Windows, macOS, and Linux. You can use it to upload, download, and manage Azure Storage blobs, files, queues, and tables, as well as Azure Data Lake Storage entities and Azure managed disks, and to configure storage permissions, access controls, tiers, and rules. Please review the Get started with Storage Explorer documentation to set up and start using the tool.

Using Azure Data Box Gateway to move periodic data

Azure Data Box Gateway is a managed file transfer solution: you copy data onto its local SMB or NFS share, and it moves that data into Azure. We are going to use Azure Data Box Gateway to continuously move data from your private network to Azure. For a detailed overview and setup instructions, please review the documentation.

Azure Data Box Gateway runs as a VM on your private network, built from an image that you download from the Azure portal after creating the Azure Data Box Gateway resource. This tutorial walks you through the configuration process step by step. The VM sizing requirements are discussed in the article; you will need a minimum of 8 GB of RAM and 2 TB of available storage for the data volume.

Once the gateway device is set up, you create a local network share onto which you copy data. Once the data is loaded onto the share, the gateway moves it to the configured storage account in Azure.
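On a Linux machine, the daily copy onto an NFS share of the gateway can be scripted as below. This is a sketch under several assumptions: the gateway IP, share name, and mount point are placeholders, the source data is assumed to be laid out as one folder per run date, and the machine needs an NFS client (the nfs-common package on Ubuntu). Copying each day into its own folder keeps runs separate; folders on the share typically show up as virtual directories in the target container.

```shell
#!/usr/bin/env bash
# Mount the gateway's NFS share if it is not mounted yet.
# Arguments (placeholders): gateway IP, share name, local mount point.
mount_gateway_share() {
  local gateway_ip=$1 share=$2 mount_point=$3
  sudo mkdir -p "$mount_point"
  if ! mountpoint -q "$mount_point"; then
    sudo mount -t nfs "${gateway_ip}:/${share}" "$mount_point"
  fi
}

# Copy <source_root>/<run_date> to <mount_point>/<run_date>.
# The one-folder-per-date layout is an assumption for this example.
copy_daily_dataset() {
  local source_root=$1 run_date=$2 mount_point=$3
  local src="${source_root}/${run_date}"
  [ -d "$src" ] || { echo "no dataset at $src" >&2; return 1; }
  mkdir -p "${mount_point}/${run_date}"
  # "src/." copies the directory's contents, including hidden files.
  cp -R "${src}/." "${mount_point}/${run_date}/"
}
```

For example: `mount_gateway_share 10.0.0.50 genomics-daily /mnt/dbg && copy_daily_dataset /datadrive/daily "$(date +%F)" /mnt/dbg`.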

Azure Data Box Gateway has a few limitations, highlighted here; most of these shouldn't be an issue for a typical genomics process.

For security best practices, please review this document. Each component in the solution has its own security requirements. For example, Data Box Gateway uses an activation key to connect the local VM to the Azure resources; follow your own best practices for managing that key.