Run prompt flow in Azure AI

This guide assumes you have already learned how to create and run a flow by following Quick start. It walks you through the main steps for submitting a prompt flow run to Azure AI.

Benefits of using Azure AI compared with running only locally:

Designed for team collaboration: the portal UI is better suited to sharing and presenting your flows and runs, and the workspace helps organize team-shared resources such as connections.

Enterprise Readiness Solutions: prompt flow leverages Azure AI’s robust enterprise readiness solutions, providing a secure, scalable, and reliable foundation for the development, experimentation, and deployment of flows.

Prerequisites

An Azure account with an active subscription. Create an account for free.

An Azure AI ML workspace. See Create workspace resources you need to get started with Azure AI.

A Python environment: Python 3.9 or higher (3.10 is recommended).

Install promptflow with extra dependencies and promptflow-tools:

```sh
# Quoting the extra keeps shells such as zsh from treating [azure] as a glob pattern.
pip install "promptflow[azure]" promptflow-tools
```
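To confirm the environment matches these prerequisites before going further, a small standard-library check can help. This is a minimal sketch: the module names `promptflow.azure` and `promptflow.tools` are assumptions about how the two pip packages expose their import paths, and `check_environment` is a hypothetical helper, not part of prompt flow.

```python
import importlib.util
import sys

# Minimum Python version from the prerequisites above.
MIN_PYTHON = (3, 9)

# Assumed import names for promptflow[azure] and promptflow-tools.
DEFAULT_MODULES = ("promptflow", "promptflow.azure", "promptflow.tools")


def is_importable(name):
    """Return True if a module spec for `name` can be found.

    For dotted names, find_spec imports the parent packages first.
    """
    try:
        return importlib.util.find_spec(name) is not None
    except ModuleNotFoundError:
        # Raised for a dotted name whose parent package is missing.
        return False


def check_environment(modules=DEFAULT_MODULES):
    """Hypothetical helper: return a list of problems (empty means all checks passed)."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        found = ".".join(map(str, sys.version_info[:2]))
        problems.append(f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ required, found {found}")
    for name in modules:
        if not is_importable(name):
            problems.append(f"module not importable: {name}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```

An empty result means the interpreter version and the assumed modules were all found.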

Clone the sample repo and check the flows in the examples/flows folder:

```sh
git clone https://github.com/microsoft/promptflow.git
```

Create necessary connections

A connection securely stores and manages secret keys or other sensitive credentials required for interacting with LLMs and other external tools, for example Azure Content Safety.

This guide uses the web-classification flow, which relies on a connection named open_ai_connection. If you haven't added this connection before, you need to set it up.

In the workspace portal, select Prompt flow -> Connections -> Create, then follow the instructions to create your own connections. Learn more about connections.
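To see which connections a flow expects before creating them, you can scan its flow definition for `connection:` entries. This is a rough sketch under stated assumptions: the definition file is named `flow.dag.yaml`, each `connection:` value sits on a single line, and no YAML anchors are involved. `referenced_connections` and `connections_for_flow` are hypothetical helpers; a real YAML parser would be more robust.

```python
import re
from pathlib import Path

# Matches lines such as "  connection: open_ai_connection" (assumed layout),
# optionally quoted and optionally followed by a trailing comment.
_CONNECTION = re.compile(
    r"^[ \t]*connection:[ \t]*['\"]?([\w.-]+)['\"]?[ \t]*(?:#.*)?$",
    re.MULTILINE,
)


def referenced_connections(flow_yaml_text):
    """Hypothetical helper: return sorted, de-duplicated connection names in the text."""
    return sorted(set(_CONNECTION.findall(flow_yaml_text)))


def connections_for_flow(flow_dir):
    """Read the (assumed) flow.dag.yaml in flow_dir and list its connection names."""
    text = (Path(flow_dir) / "flow.dag.yaml").read_text(encoding="utf-8")
    return referenced_connections(text)
```

For example, `connections_for_flow("web-classification")` run from the standard flows folder would list the names you need to create in the portal.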

Submit a run to workspace

The following steps assume your working directory is <path-to-the-sample-repo>/examples/flows/standard/.

Run az login to sign in so that prompt flow can obtain your credential:

```sh
az login
```

Submit a run to the workspace:

```sh
pfazure run create --subscription <my_sub> -g <my_resource_group> -w <my_workspace> --flow web-classification --data web-classification/data.jsonl --stream
```
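The `--data` argument points at a JSON Lines file: one JSON object per line. Checking that file locally before uploading can catch malformed rows early. This is a minimal standard-library sketch; `validate_jsonl` is a hypothetical helper, and the default required key `url` is an assumption about the web-classification flow's input name, so pass the input names your flow actually uses.

```python
import json
from pathlib import Path


def validate_jsonl(path, required_keys=("url",)):
    """Hypothetical helper: parse a JSON Lines file and return (object_rows, errors).

    required_keys is an assumption; use the input names your flow expects.
    """
    rows, errors = 0, []
    with Path(path).open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines, e.g. a trailing newline
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                errors.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            if not isinstance(record, dict):
                errors.append(f"line {lineno}: expected a JSON object")
                continue
            rows += 1  # counts every line that parsed to a JSON object
            missing = [k for k in required_keys if k not in record]
            if missing:
                errors.append(f"line {lineno}: missing keys {missing}")
    return rows, errors
```

For example, `validate_jsonl("web-classification/data.jsonl")` returns the number of object rows and a list of problems, which should be empty before you submit.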

Default subscription/resource-group/workspace

Note that --subscription, -g, and -w can be omitted if you have installed the Azure CLI and set the default configurations:

```sh
az account set --subscription <my-sub>
az configure --defaults group=<my_resource_group> workspace=<my_workspace>
```
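If you drive the CLI from a script, you can add the workspace flags only when you supply them, so the same script works whether or not Azure CLI defaults are configured. This is a sketch that uses only the flags shown in this guide; `build_run_create_args` is a hypothetical helper, not part of prompt flow.

```python
import shlex


def build_run_create_args(flow, data, subscription=None, resource_group=None,
                          workspace=None, name=None, stream=True):
    """Hypothetical helper: build the argument list for `pfazure run create`.

    Workspace flags are omitted when None, so defaults configured via
    `az account set` and `az configure --defaults` apply instead.
    """
    args = ["pfazure", "run", "create", "--flow", flow, "--data", data]
    if subscription:
        args += ["--subscription", subscription]
    if resource_group:
        args += ["-g", resource_group]
    if workspace:
        args += ["-w", workspace]
    if name:
        args += ["--name", name]
    if stream:
        args.append("--stream")
    return args


if __name__ == "__main__":
    cmd = build_run_create_args("web-classification", "web-classification/data.jsonl")
    # Print a shell-quoted version for inspection; nothing is executed here.
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would execute it; the list form avoids shell quoting issues with paths that contain spaces.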

Specify a run name and view a run

You can also name the run by specifying --name my_first_cloud_run in the run create command; otherwise, the run name is generated automatically following a pattern that includes a timestamp.
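If you prefer choosing names yourself, appending your own timestamp keeps them readable while avoiding clashes with earlier runs. The exact pattern prompt flow uses for generated names is not described here, so `make_run_name` is a hypothetical helper, not a reproduction of it; the allowed character set is also a conservative assumption.

```python
import re
from datetime import datetime, timezone


def make_run_name(prefix, now=None):
    """Hypothetical helper: return '<prefix>_<YYYYmmdd_HHMMSS>' using UTC.

    Characters other than letters, digits, '_' and '-' are replaced by '_'
    (an assumed safe set, not a documented rule).
    """
    now = now or datetime.now(timezone.utc)
    safe_prefix = re.sub(r"[^A-Za-z0-9_-]", "_", prefix)
    return f"{safe_prefix}_{now.strftime('%Y%m%d_%H%M%S')}"
```

Pass the result to `--name` in `pfazure run create` and reuse it with the commands that follow.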

With a run name, you can easily stream the run or view its details using the commands below:

```sh
pfazure run stream -n my_first_cloud_run # same as "--stream" in command "run create"
pfazure run show-details -n my_first_cloud_run
pfazure run visualize -n my_first_cloud_run
```
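The three commands differ only in the subcommand, so a script can generate them from the run name. This sketch uses only the subcommands shown above; `follow_up_commands` and `run_follow_ups` are hypothetical helpers, and commands are executed only when you opt in and `pfazure` is found on PATH.

```python
import shutil
import subprocess

# Subcommands taken from the CLI examples above.
FOLLOW_UPS = ("stream", "show-details", "visualize")


def follow_up_commands(run_name):
    """Hypothetical helper: one `pfazure run <subcommand> -n <run_name>` list per step."""
    return [["pfazure", "run", sub, "-n", run_name] for sub in FOLLOW_UPS]


def run_follow_ups(run_name, dry_run=True):
    """Print each command; execute it only if dry_run is False and pfazure is on PATH."""
    for cmd in follow_up_commands(run_name):
        print(" ".join(cmd))
        if not dry_run and shutil.which("pfazure"):
            # `stream` blocks until the run finishes, so the later steps see a completed run.
            subprocess.run(cmd, check=True)
```

Calling `run_follow_ups("my_first_cloud_run")` with the default `dry_run=True` only prints the commands.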

More details can be found in the CLI reference: pfazure.

To submit the run with the Python SDK instead of the CLI, first import the required libraries:

```python
from azure.identity import DefaultAzureCredential, InteractiveBrowserCredential

# azure version promptflow apis
from promptflow.azure import PFClient
```

Get credential

```python
try:
    credential = DefaultAzureCredential()
    # Check if given credential can get token successfully.
    credential.get_token("https://management.azure.com/.default")
except Exception:
    # Fall back to InteractiveBrowserCredential in case DefaultAzureCredential does not work
    credential = InteractiveBrowserCredential()
```

Get a handle to the workspace

```python
# Get a handle to the workspace
pf = PFClient(
    credential=credential,
    subscription_id="<SUBSCRIPTION_ID>",  # this will look like xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
    resource_group_name="<RESOURCE_GROUP>",
    workspace_name="<AML_WORKSPACE_NAME>",
)
```
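Hard-coding these IDs makes a notebook harder to share. One option is to read them from environment variables and check that the subscription ID parses as a GUID, matching the `xxxxxxxx-xxxx-...` shape in the comment above. The environment variable names below are hypothetical; `workspace_kwargs` is an illustrative helper whose keys match the `PFClient` keyword arguments used above.

```python
import os
import uuid

# Hypothetical environment variable names; rename them to match your setup.
ENV_VARS = {
    "subscription_id": "AZURE_SUBSCRIPTION_ID",
    "resource_group_name": "AZURE_RESOURCE_GROUP",
    "workspace_name": "AZUREML_WORKSPACE_NAME",
}


def workspace_kwargs(environ=None):
    """Hypothetical helper: collect PFClient workspace arguments from environment variables.

    Raises KeyError listing any missing variables, and ValueError if the
    subscription ID cannot be parsed as a GUID.
    """
    environ = os.environ if environ is None else environ
    missing = [var for var in ENV_VARS.values() if not environ.get(var)]
    if missing:
        raise KeyError(f"missing environment variables: {missing}")
    kwargs = {param: environ[var] for param, var in ENV_VARS.items()}
    # uuid.UUID raises ValueError for strings that are not valid GUIDs.
    uuid.UUID(kwargs["subscription_id"])
    return kwargs
```

With the variables set, the handle becomes `pf = PFClient(credential=credential, **workspace_kwargs())`.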

Submit the flow run

```python
# Path to the flow folder and its input data (relative to the working directory)
flow = "web-classification"
data = "web-classification/data.jsonl"

# Create a run
base_run = pf.run(
    flow=flow,
    data=data,
)
pf.stream(base_run)
```

View the run info

```python
details = pf.get_details(base_run)
details.head(10)

pf.visualize(base_run)
```

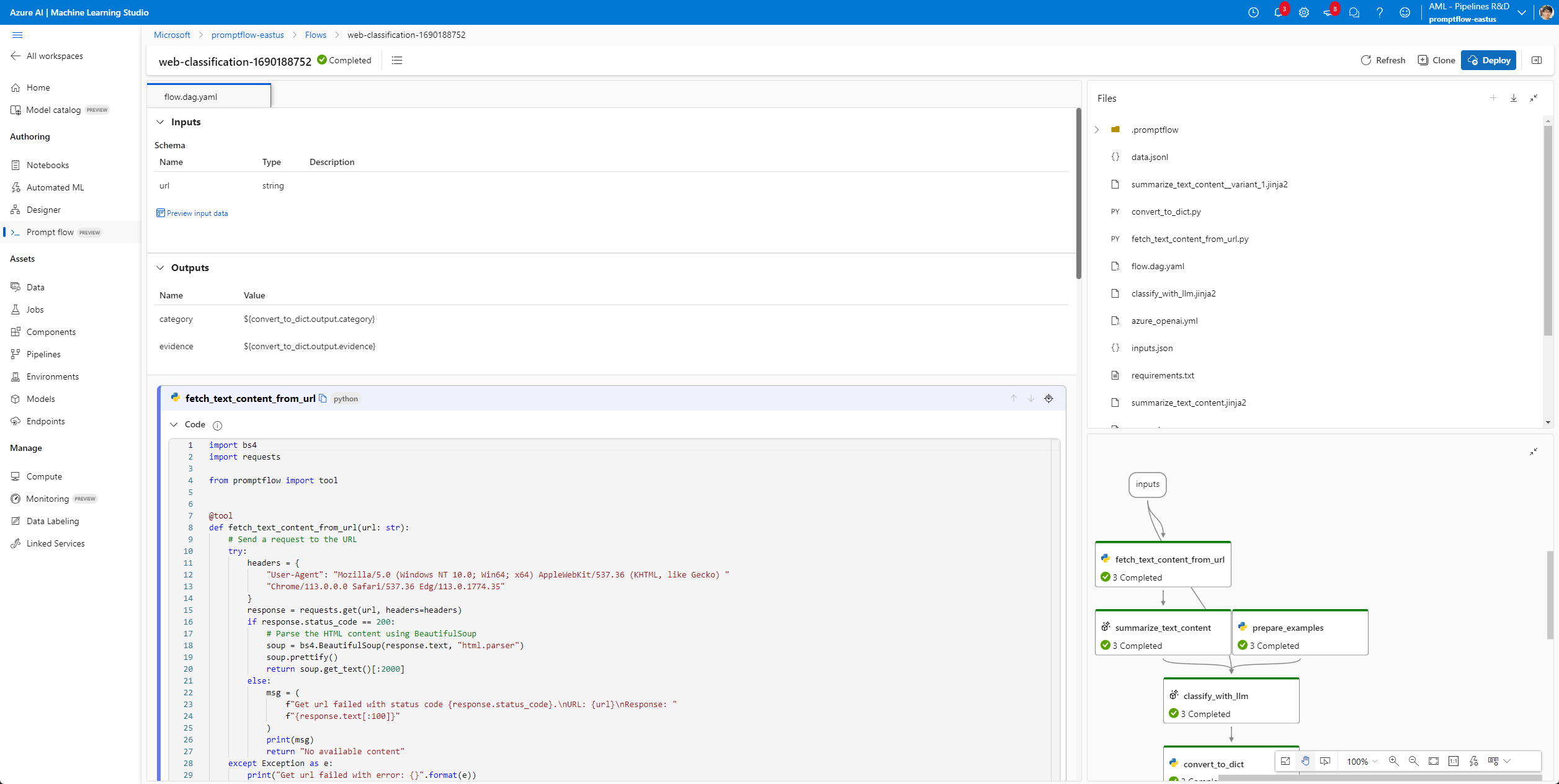
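Since `details.head(10)` is a pandas DataFrame method, `pf.get_details` appears to return a DataFrame with one row per input line. The sketch below assumes its column names are prefixed `inputs.` and `outputs.` (for example `inputs.url`); treat that naming as an assumption and confirm it with `details.columns`. `split_columns` is a hypothetical standard-library helper that groups column names by prefix.

```python
from collections import defaultdict


def split_columns(columns):
    """Hypothetical helper: group names like 'inputs.url' by the part before the first '.'.

    Names without a '.' are grouped under ''. The 'inputs.'/'outputs.'
    naming is an assumption; inspect details.columns to confirm it.
    """
    groups = defaultdict(list)
    for col in columns:
        prefix, sep, _ = str(col).partition(".")
        groups[prefix if sep else ""].append(col)
    return dict(groups)
```

If the assumption holds, `details[split_columns(details.columns)["outputs"]]` selects only the flow outputs.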

View the run in workspace

At the end of the stream logs, you can find the portal_url of the submitted run. Click it to view the run in the workspace.
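When a script captures the stream output (for example, the stdout of `pfazure run stream`), you may want to extract that link programmatically. The exact log wording is not documented in this guide, so this sketch makes a loose assumption: the link is the first `https://` URL on a line that mentions "portal". `find_portal_url` is a hypothetical helper; check it against your actual logs.

```python
import re

# Assumption: the portal link appears on a line containing "portal" (any case).
_URL = re.compile(r"https://\S+")


def find_portal_url(log_text):
    """Hypothetical helper: return the first https URL on a line mentioning 'portal', or None."""
    for line in log_text.splitlines():
        if "portal" in line.lower():
            match = _URL.search(line)
            if match:
                # Drop trailing punctuation that is unlikely to belong to the URL.
                return match.group(0).rstrip(".,;'\")")
    return None
```

A `None` result means no line matched the assumed format, which is a hint to adjust the pattern rather than proof that no link was printed.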

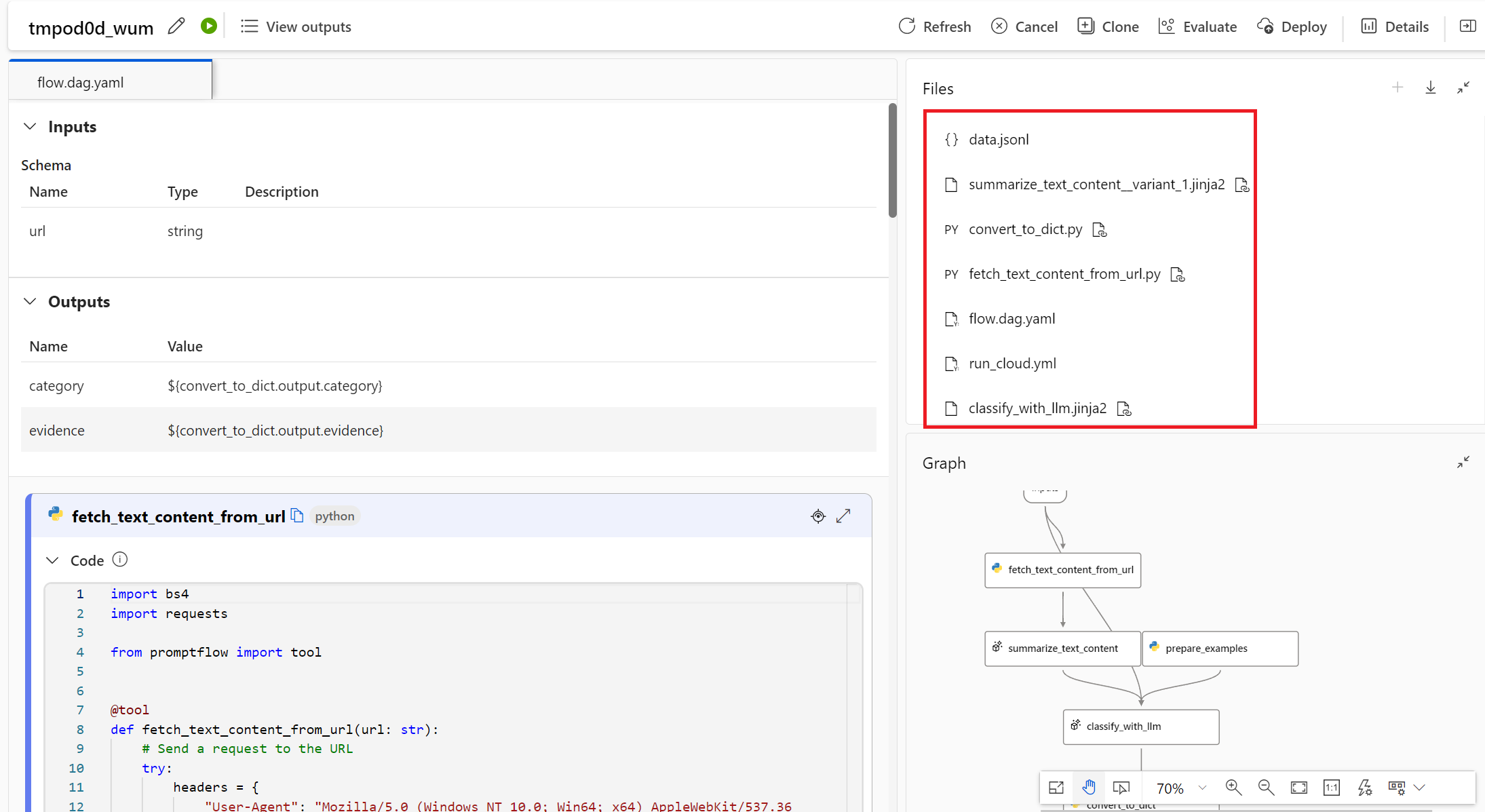

Run snapshot of the flow with additional includes

Flows that use additional include files can also be submitted for execution in the workspace. Note that the specified additional include files or folders are uploaded and organized within the Files folder of the run snapshot in the cloud.
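Because these files are collected at submission time, an include path that does not resolve is easy to miss until then. A minimal pre-check sketch, assuming the entries (listed, for example, under `additional_includes` in `flow.dag.yaml`) are paths relative to the flow folder; `missing_includes` is a hypothetical helper, and reading the list out of the YAML file is left to you.

```python
from pathlib import Path


def missing_includes(flow_dir, include_paths):
    """Hypothetical helper: return the include entries that do not exist on disk.

    Each entry is resolved relative to flow_dir (an assumed convention);
    absolute entries are used as-is because Path joining keeps them.
    """
    base = Path(flow_dir)
    return [p for p in include_paths if not (base / p).exists()]
```

An empty list means every listed file or folder was found, so the snapshot should contain all of them.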

Next steps

Learn more about: