For the Data Scientist - Develop with R

- Step 0: Intermediate Directories Creation

- Step 1: Merge with Account Information

- Step 2: Tagging

- Step 3: Splitting

- Step 4: Preprocessing

- Step 4: Preprocessing

- Step 5: Create Risk Tables

- Step 6: Feature Engineering

- Step 7: Training

- Step 8: Prediction

- Step 9: Evaluation

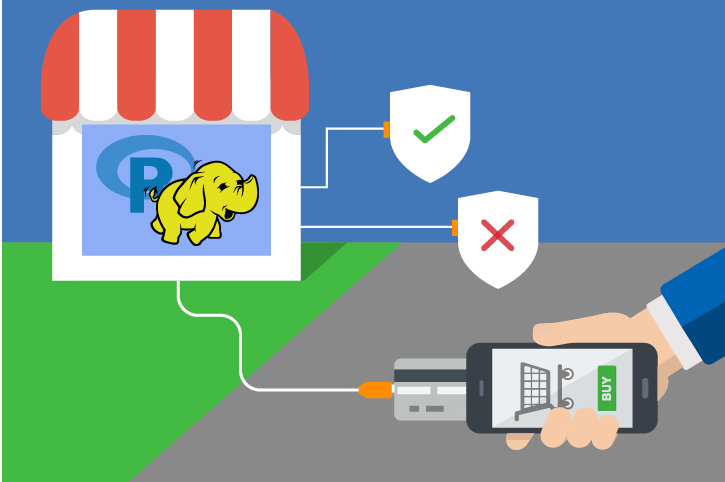

Fraud Detection

Fraud detection is one of the earliest industrial applications of data mining and machine learning. This solution shows how to build and deploy a machine learning model for online retailers to detect fraudulent purchase transactions.

View more information about the data.

Predict_Scores is created in SQL Server. This data is then visualized in PowerBI.

To try this out yourself, visit the Quick Start page.

The file modeling_main.R development_main.R enables the user to define the input and call all the steps. Inputs are: paths to the raw data files, database name, server name, username and password.

The database is created if it does not not already exist, and the connection string as well as the SQL compute context are defined.

- Opens the Spark connection.

- Lets the user specify the paths to the working directories on the edge node and HDFS. We assume they already exist.

- Creates a directory, LocalModelsDir, that will store the model and other tables for use in the Production or Web Scoring stages (inside the fraud_dev main function).

- Updates the tables of the Production stage directory, ProdModelDir, with the contents of LocalModelsDir (inside the fraud_dev main function).

System Requirements

The following are required to run the scripts in this solution:

- SQL Server (2016 or higher) with Microsoft ML Server (version 9.1.0 or later) installed and configured.

- The SQL user name and password, and the user configured properly to execute R scripts in-memory.

- SQL Database which the user has write permission and execute stored procedures.

- For more information about SQL server 2017 and ML Services, please visit: https://docs.microsoft.com/en-us/sql/advanced-analytics/what-s-new-in-sql-server-machine-learning-services

Step 1: Tagging

In this step, the raw data is loaded into SQL in three tables called Untagged_Transactions, Account_Info, and Fraud_Transactions. The date time variable transactionDateTime is created during this upload.

After sorting the table Account_Info into Account_Info_Sort in decreasing order of recordDateTime for each accountID, we merge the two tables Untagged_Transactions and Account_Info_Sort into Untagged_Transactions_Account. (SQL queries are used here instead of the rxMerge function of RevoScaleR because it is not yet available for SQL data sources.) We then remove duplicate observations with another SQL query executed through rxExecuteSQLddl.

Finally, we create labels for the untagged transactions by using the Fraud table. This is done by:

-

Aggregating the

Fraudtable on the account level, creating a start and end datetime. -

Joining this data with the

Untagged_Transactions_Accountdata with a left join. Start and end time are the NULL for non fraud. -

Tagging the data:

0for non fraud,1for fraud,2for pre-fraud.

The tagging logic is the following: the Fraud data is grouped by account ID and sorted by time, thus, we have the fraud time period for each fraud account. For each transaction in the untagged data:

- if the account ID is not in the fraud data, this transaction is labeled as non fraud (

label = 0). - if the account ID is in the fraud data and the transaction time is within the fraud time period of this account, this transaction is labeled as fraud (

label = 1). - if the account ID is in the fraud data and the transaction time is out of the fraud time period of this account, this transaction is labeled as pre-fraud or unknown (

label = 2) which will be removed later.

Input:

- Raw data: Untagged_Transactions.csv, Account_Info.csv, and Fraud_Transactions.csv.

Output:

TaggedSQL table.

Related files:

- step1_tagging.R

Step 2: Splitting and Preprocessing

Because feature engineering will require us to compute risk values, and in order to avoid label leakage, the risk values should be computed on a training set. This is why splitting the Tagged data into a training and a testing set is performed before the feature engineering step.

This is done by selecting randomly 70% of accountID to be part of the training set. In order to ensure repeatability and to make sure that the same accountID ends up in the same data set, accountID values are mapped to integers through a hash function, with the mapping and accountID written to the Hash_Id SQL table. We create the Hash_Id table though a SQL query in order to use the same hash function as the SQL Stored Procedures for coherence.

We then create a pointer to the training set, which, at the same time, removes the pre-fraud labels (label = 2), variables not used in the next steps, observations with ID variables missing, and observations where the transaction amount in USD is negative.

After creating this pointer, we apply the clean_preprocess function on it. After detecting the variables with missing values by using the rxSummary function, it wraps the function preprocessing into rxDataStep, and acts on the data as following:

- It replaces the missing observations with 0 (or -99 for the variable localHour since 0 already represents a valid value for this variable).

- It fixes some data entries.

- It converts a few variables to numeric to ensure correct computations in the following steps.

This function will later be applied to the testing set as well.

Input:

TaggedSQL table.

Output:

Tagged_Training_ProcessedSQL table containing the cleaned and preprocessed training set.Hash_IdSQL table containing theaccountIDand the mapping through the hash function.

Related files:

- step2_splitting_preprocessing.R

Step 3: Feature Engineering

For feature engineering, we want to design new features:

- Risk values for various numeric variables.

- Flags for mismatches between addresses, and flags for high amount transactions.

- Aggregates corresponding to the number and amount of transactions in the past day and 30 days for every transaction per accountID.

The risk table stores risk (log of smoothed odds ratio) for each level of one categorical variable. For example, variable X has two levels: A and B. For level A, we compute the following:

- Total number of good transactions,

n_good(A), - Total number of bad transactions,

n_bad(A). - The smoothed odds,

odds(A) = (n_bad(A)+10)/(n_bad(A)+n_good(A)+100). - The the risk of level

A,Risk(A) = log(odds(A)/(1-odds(A)).

Similarly, we can compute the risk value for level B. Thus, the risk table of variable X is constructed as the following:

| X | Risk |

|---|---|

| A | Risk(A) |

| B | Risk(B) |

With the risk table, we can assign the risk value to each level. This is how we transform the categorical variable into numerical variable.

We first compute the risk values, using the training set, with the function create_risk_table. For a given variable of interest, it uses rxSummary to get the proportion of fraudulent and non-fraudulent transactions for every level of that variable.

The risk value for a level of the variable will be the log of a smoothed odd fraud rate. The risks are written to SQL tables to be used in feature engineering on the training and testing sets or for batch scoring.

Then, the function assign_risk_and_flags will apply the function assign_risk, wrapped into rxDataStep on the training set. This assigns the risk values for every variable of interest, using the previously created Risk tables. At the same time, rxDataStep creates the address mismatch flags and a flag for high amounts. The output is written to SQL Server in the table Tagged_Training_Processed_Features1. This function will later be applied to the testing set as well.

Finally, we create the aggregates with the function compute_aggregates. They correspond to the number and USD amount of transactions in the past day and 30 days for every transaction per accountID. Although a SQL query can be used, we used R code here for illustrative purposes. The computation follows a standard split-apply-combine process.

- Load the intermediate data set Tagged_Training_Processed_Features1 in memory.

- Split the data set into a list of data frames for each accountID.

- Compute aggregates for each accountID with the function

aggregates_account_level. - Combine the results, use zero values when no aggregates, and write the result back to SQL Server.

On an accountID level, aggregates_account_level works as follows on a given data frame of transactions corresponding to an accountID:

- Perform a cross-apply of the data frame on itself, while only keeping for each

transactionID, all the other transactions that occurred in the past 30 days. - Split the table in 2:

-

z1daywill conatain the transactions and their corresponding transactions that happened in the past 1 day. -

z30daywill conatain the transactions and their corresponding transactions that happened in the past 30 days. - For each transaction in each of

z1dayandz30day, we compute the number and total USD amount of the previous transactions. - The aggregated result is returned as a data frame and is the output of the

aggregates_account_levelfunction.

The function compute_aggregates will later be used on the testing set as well.

The final data is written to the SQL table Tagged_Training_Processed_Features. Using stringsAsFactors = TRUE, we convert the character variables to factors and get their levels information in the column_info list, to be used as well for the testing set.

Input:

Tagged_Training_ProcessedSQL table.

Output:

Tagged_Training_Processed_FeaturesSQL table containing new features.- Various Risk SQL tables containing the risk values for each level of the variables.

column_infolist to be used on the training and testing sets to specify the types of variables and levels of the factors in particular.

Related files:

- step3_feature_engineering.R

Step 4: Training, Testing and Evaluating

After pointing to the training set with the correct variable types (using column_info), we write the formula to be used for the classification.

We build a gradient boosted trees (GBT) model with the rxFastTrees algorithm from the MicrosoftML library. The argument unbalancedSets = TRUE helps deal with the class imbalance that is observed in this data set.

The trained model is serialized and uploaded to a SQL table Models if needed later, through an Odbc connection.

We then point with a query to the raw testing set, and using the previously defined functions clean_preprocess, assign_risk_and_flags, and compute_aggregates, we get the testing set Tagged_Testing_Processed_Features ready for scoring.

Finally, we compute predictions on the testing set, written to the SQL table Predict_Scores. It is uploaded in memory, and various performance metrics are computed.

-

AUC (Area Under the Curve) for the ROC. This represents how well the model can differenciate between the non-fraudulent transactions and the fraudulent ones given a good decision threshold in the testing set. We draw the ROC, representing the true positive rate in function of the false positive rate for various possible cutoffs.

-

Various account level metrics and graphs.

- ADR – Fraud Account Detection Rate. The percentage of detected fraud accounts in all fraud accounts.

- VDR - Value Detection Rate. The percentage of monetary savings, assuming the current fraud transaction triggered a blocking action on subsequent transactions, over all fraud losses.

- AFPR - Account False Positive Ratio. The ratio of detected false positive accounts over detected fraud accounts.

Input:

Tagged_Training_Processed_FeaturesSQL table containing new features and preprocessed training set.Hash_IdSQL table containing theaccountIDand the mapping through the hash function.- Various Risk SQL tables containing the risk values for each level of the variables, to be used for feature engineering on the testing set.

column_infolist to be used on the training and testing sets to specify the types of variables and levels of the factors in particular.

Output:

ModelsSQL table containing the serialized GBT model.Tagged_Testing_Processed_FeaturesSQL table containing new features and preprocessed testing set.Predict_ScoreSQL table containing the predictions made on the testing set.- Performance metrics and graphs.

Related files:

- step4_training_evaluation.R

Updating the Production Stage Directory (“Copy Dev to Prod”)

At the end of the main function of the script development_main.R, the copy_dev_to_prod.R script is invoked in order to copy (overwrite if it already exists) the model, statistics and other data from the Development Stage to a directory of the Production or Web Scoring stage.

If you do not wish to overwrite the model currently in use in a Production stage, you can either save them to a different directory, or set update_prod_flag to 0 inside the main function.

Production Stage

Visualize Results

Predict_Score of the Fraud database. The test data itself is in the Tagged_Testing table. The next step of this solution is to visualize both tables in PowerBI.

Predict_Score. The test data itself is in the Tagged_Testing table. The next step of this solution is to visualize both tables in PowerBI.

- See For the Business Manager for details of the PowerBI dashboard.

Step 0: Intermediate Directories Creation

In this step, we create or clean intermediate directories both on the edge node and HDFS. These directories will hold all the intermediate processed data sets in subfolders.

Related Files

- step0_directories_creation.R

Step 1: Merge with Account Information

In this step, we convert the untagged transaction data and account info data into hive table and merge them by the account ID using hive command. Then every transaction will get corresponding account information. The duplicated rows will be removed as well. If this step is used in “production scoring” or “web scoring” stage, we will add a fake label column to the data for rxPredict function work properly later.

Input

- Two data files: Untagged_Transactions and Account_Info.

- The working directory on HDFS.

Stage: “Dev” for development, “Prod” for batch scoring, “Web” for web scoring.

Output

- Hive table:

UntaggedTransactionsAccountUnique(Stage = “Dev”) orTaggedProd(Stage = “Prod” or “Web”)

Related Files

- step1_merge_account_info.R

Step 2: Tagging

In this step, we tag the untagged data on account level based on the fraud data. The tagging logic is the following. In fraud data, we group it by account ID and sort by time, thus, we have the fraud time period for each fraud account. For each transaction in untagged data, if the account ID is not in fraud data, this transaction is labeled as non fraud (label = 0); if the account ID is in fraud data and the transaction time is within the fraud time period of this account, this transaction is labeled as fraud (label = 1); if the account ID is in fraud data and the transaction time is out of the fraud time period of this account, this transaction is labeled as pre-fraud or unknown (label = 2) which will be removed later.

Input

Input_Hive_Table: name of the hive table from the merging step with the untagged transactions and account info.- Path to csv Fraud files with the raw data Fraud_Transactions.csv

HDFSWorkDir: Working directory on HDFS.

Output

- Tagged data.

Related Files

- step2_tagging.R

Step 3: Splitting

In this step, we will hash accountID into 100 different hash code and split the whole data into training(70%) and testing(30%) based on the hash code, e.g., training = hash code <=70 and testing = hash code >70. In the same time, transactions with label = 2 will be removed.

Input

- Tagged data set.

Output

- Training and Testing sets.

Related Files

- step3_splitting.R

Step 4: Preprocessing

In this step, We use rxSummary function to get the missing information. Missing values of localHour will be filled with -99. Missing values for the rest columns will be filled with 0. We also fix some data entries and convert a few variables to numeric.

Input

HDFSWorkDir: Working directory on HDFS.HiveTable: Input data name of Hive table to be preprocessed.

Output

- Hive table with preprocessed data.

Related Files

- step4_preprocessing.R

Step 5: Create Risk Tables

In this step, we create risk tables for categorical variables, such as location related variables.

The risk table stores risk (log of smoothed odds ratio) for each level of one categorical variable. For example, variable X has two levels: A and B. For level A, we compute the following:

- Total number of good transactions,

n_good(A), - Total number of bad transactions,

n_bad(A). - The smoothed odds,

odds(A) = (n_bad(A)+10)/(n_bad(A)+n_good(A)+100). - The the risk of level

A,Risk(A) = log(odds(A)/(1-odds(A)).

Similarly, we can compute the risk value for level B. Thus, the risk table of variable X is constructed as the following:

| X | Risk |

|---|---|

| A | Risk(A) |

| B | Risk(B) |

With the risk table, we can assign the risk value to each level. This is how we transform the categorical variable into numerical variable.

rxSummary function is used to get the count of fraud and non-fraud for variables need to be converted. Then, for each variable, we combine the count for fraud and non-fraud to calculate risk table. All risk tables will be put into one list and saved to model directory on edge node.

Input

LocalWorkDirandHDFSWorkDir: working directories on HDFS and local edge node.HiveTable: name of the Hive table containing the preprocessed training set to be used to create risk tables.smooth1andsmooth2: smoothing parameters used to compute the risk values.

Output

- Risk tables embedded in a list

Risk_list, saved on the edge node.

Related Files

- step5_create_risk_tables.R

Step 6: Feature Engineering

This step does feature engineering for an input data set. We will generate three groups of new features:

- Numerical risk variables transformed from categorical variables based on the risk tables generated in step 5.

- Binary variables. For example, high amount flag and address mismatch flags.

- Aggregates. For example, completed transactions of a customer in past 1 day and 30 days.

To calculate the aggregates, since there is no existing rx-function we can use, we have to use regular R functions. In order to make it scalable to big data, we hash the account ID and split the data into small chunks by hash code. Chunks are account ID exclusive so that we can safely apply aggregates calculation to each chunk in parallel. Finally, the output chunks will be combined to one xdf file.

Input

LocalWorkDirandHDFSWorkDir: working directories on HDFS and local edge node.HiveTable: name of the Hive table containing the preprocessed data set to which new features will be added.Stage: “Dev” for development, “Prod” for batch scoring, “Web” for web scoring.

Output

- Preprocessed xdf file with new features and correct variable types.

Related Files

- step6_feature_engineering.R

Step 7: Training

In this step, we will train a GBT model with the training data. Note that, the label is imbalanced (much more non-fraud than fraud), and this can be handled by “unbalancedSets” argument in “rxFastTrees” function. The trained model will be saved to the model directory on local edge node.

Input

LocalWorkDirandHDFSWorkDir: working directories on HDFS and local edge node.Input_Data_Xdf: training data.

Output

- Trained GBT model object.

Related Files

- step7_training.R

Step 8: Prediction

In this step, we do prediction (scoring) on the model created in step 7. If Stage is “Dev” or “Prod”, the model object is loaded from model directory on edge node. If Stage is “Web", the model object is directly passed. In “Dev” Stage, we will also create a hive table for the scored data set. The hive table will be ingested by PowerBI for visualization.

Input

LocalWorkDirandHDFSWorkDir: working directories on HDFS and local edge node.Input_Data_Xdf: input data name of xdf file to be scored.Stage: “Dev” for development, “Prod” for batch scoring, “Web” for web scoring.

Output

- Scored data set.

Related Files

- step8_prediction.R

Step 9: Evaluation

In this step, we will evaluate the scored data set if the ground truth label exists (thus, only performed in development stage). We create both transaction and account level metrics. For transaction level metrics, rxRoc function is used to get the ROC curve and rxAuc function is used to calculate the AUC. For account level metrics, we import the data in memory and use customized function to get the result.

Input

HDFSWorkDir: working directories on HDFS and local edge nodeScored_Data_Xdf: scored data set

Output

- AUC.

- Plotted ROC curve.

- Account level metrics and plots

Related Files

- step9_evaluation.R

Updating the Production Stage Directory (“Copy Dev to Prod”)

At the end of the main function of the script development_main.R, the copy_dev_to_prod.R script is invoked in order to copy (overwrite if it already exists) the model, statistics and other data from the Development Stage to a directory of the Production or Web Scoring stage.

If you do not wish to overwrite the model currently in use in a Production stage, you can either save them to a different directory, or set update_prod_flag to 0. If you are running the solution at the very first time, make sure to set the flag to 1.

Production Stage

In the Production stage, the goal is to perform a batch scoring.

The script production_main.R will complete this task by invoking the scripts described above. The batch scoring can be done either:

- In-memory : The input should be provided as data frames. All the preprocessing and scoring steps are done in-memory on the edge node (local compute context). In this case, the main batch scoring function calls the R script in_memory_scoring.R.

- Using data stored on HDFS: The input should be provided as paths to the Production data sets. All the preprocessing and scoring steps are one on HDFS in Spark Compute Context.

When the data set to be scored is relatively small and can fit in memory on the edge node, it is recommended to perform an in-memory scoring because of the overhead of using Spark which would make the scoring much slower.

The script:

- Lets the user specify the paths to the Production working directories on the edge node and HDFS (only used for Spark compute context).

- Lets the user specify the paths to the Production data sets Untagged_Transactions and Account_Info (Spark Compute Context) or point to them if they are data frames loaded in memory on the edge node (In-memory scoring).

The computations described in the Development stage are performed, with the following differences:

- No tagging step. A fake label column will be given instead.

- No splitting into a training and testing set, since the whole data is used for scoring.

- No creating risk tables. The risk tables generated in development stage will be loaded for use.

- No training. The GBT model created in the Development Stage is loaded and used for predictions

- No model evaluation since usually we don’t have ground truth label for new coming data.

Warning: in case you get the following error: “Error: file.exists(inData1) is not TRUE”, you should reset your R session with Ctrl + Shift + F10 (or Session -> Restart R) and try running it again.

Deploy as a Web Service

In the script web_scoring_main.R, we define a scoring function and deploy it as a web service so that customers can score their own data sets locally/remotely through the API. Again, the scoring can be done either:

- In-memory : The input should be provided as data frames. All the preprocessing and scoring steps are done in-memory on the edge node (local compute context). In this case, the main batch scoring function calls the R script in_memory_scoring.R.

- Using data stored on HDFS: The input should be provided as paths to the Production data sets. All the preprocessing and scoring steps are one on HDFS in Spark Compute Context.

When the data set to be scored is relatively small and can fit in memory on the edge node, it is recommended to perform an in-memory scoring because of the overhead of using Spark which would make the scoring much slower.

This is done in the following way:

- Log into the ML server that hosts the web services as admin. Note that even if you are already on the edge node, you still need to perform this step for authentication purpose.

- Specify the paths to the working directories on the edge node and HDFS.

- Specify the paths to the input data sets Untagged_Transactions and Account_Info or point to them if they are data frames loaded in memory on the edge node.

-

Load the static .rds files needed for scoring and created in the Development Stage. They are wrapped into a list called

model_objectswhich will be published along with the scoring function. - Define the web scoring function which calls the steps like for the Production stage.

-

Publish as a web service using the

publishServicefunction. Two web services are published: one for the string input (Spark Compute Context) and one for a data frame input (In-memory scoring in local compute context). In order to update an existing web service, use theupdateServicefunction to do so. Note that you cannot publish a new web service with the same name and version twice, so you might have to change the version number. -

Verification:

- Verify the API locally: call the API from the edge node.

- Verify the API remotely: call the API from your local machine. You still need to remote login as admin from your local machine in the beginning. It is not allowed to connect to the edge node which hosts the service directly from other machines. The workaround is to open an ssh session with port 12800 and leave this session on. Then, you can remote login. Use getService function to get the published API and call the API on your local R console.

Using Your Own Data Set

A new data set can be used for either or both the Modeling and the Production pipeline. However, for this template to work as is, it should follow these requirements:

- The data schema should be the same for files Untagged_Transactions, Account_Info and Fraud_Transactions.

Template Contents

To try this out yourself:

- View the Quick Start.