Run ONNX models with OpenVINO™ on Intel®-powered hardware


Intel®-powered developer kits for ONNX and Azure IoT


Get good, better, or best: Intel®-powered developer kits come with multiple CPU choices (Atom™, Core™, and Xeon™), so you can select the one that best fits your use case.


The UP Squared* AI Vision X Developer Kit, which comes with an Intel® Atom™ processor, is a high-performance kit that provides a clear path to production, simple setup and configuration with a preinstalled Ubuntu OS, expanded I/O for rapid prototyping, and an intuitive way to incorporate complex, advanced libraries. This kit supports computer vision and deep learning from prototype to production.


The IEI* Tank AIoT Developer Kit ships with either an Intel® Core™ (i5/i7) or Xeon™ (E3) processor, plus an optional vision accelerator that enables analysis of more than 16 video streams. The kit supports deep neural network (DNN) inferencing for AI at the edge and comes with consistent APIs and runtimes across cameras, edge appliances, and cloud solutions. It provides commercial, production-ready development with deep learning, computer vision, and AI.


The FLEX-BX200 ships with an Intel® Core™ i7/i5/i3 or Pentium® processor. It is an AI-hardware-ready system, ideal for deep learning and inference computing, that helps you get faster, deeper insights into your customers and your business. IEI's FLEX-BX200 supports graphics cards, Intel® FPGA acceleration cards, and Intel® VPU acceleration cards, which provide additional computational power and an end-to-end solution for running your tasks more efficiently. This kit provides enormous computational power to perform accurate inference and prediction in near-real time, especially in harsh environments.


The AAEON BOXER-6841M enables turnkey development on the AAEON IoT platform, which is based on Azure services and lets developers and system integrators quickly evaluate their solutions. You can order it with a 6th or 7th Generation Intel® Core™ processor.


Solution example


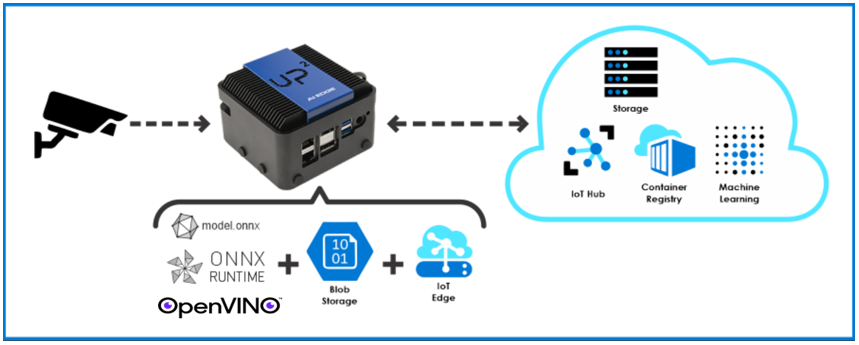

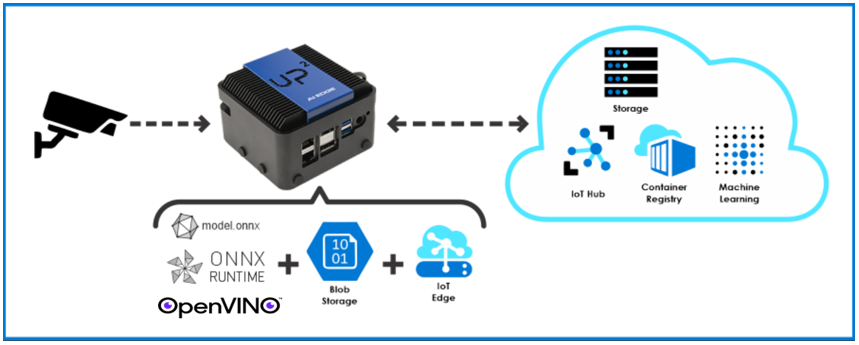

Intel and Microsoft joined forces to create development tools that make it easier for you to use the cloud, the edge, or both, depending on your needs. The latest is an execution provider (EP) plugin that integrates two valuable tools: the Intel® Distribution of OpenVINO™ toolkit and the Open Neural Network Exchange (ONNX) Runtime. The goal is to let you write once and deploy everywhere: in the cloud or at the edge.
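In ONNX Runtime, an execution provider is chosen when an inference session is created. The following Python sketch assumes an OpenVINO-enabled ONNX Runtime build (for example, the `onnxruntime-openvino` package) is installed. It prefers the OpenVINO EP and falls back to the default CPU provider. The helper names `choose_providers` and `create_session` are hypothetical. `get_available_providers`, `InferenceSession(..., providers=...)`, and the provider name strings are part of ONNX Runtime's Python API.

```python
"""Sketch: prefer the OpenVINO execution provider, fall back to the CPU provider."""

from typing import List, Sequence

# Provider names as registered by ONNX Runtime. The OpenVINO EP is only
# present in OpenVINO-enabled builds (assumption: e.g. onnxruntime-openvino).
OPENVINO_EP = "OpenVINOExecutionProvider"
CPU_EP = "CPUExecutionProvider"


def choose_providers(available: Sequence[str]) -> List[str]:
    """Return providers in priority order: OpenVINO first if available, then CPU."""
    preferred = [p for p in (OPENVINO_EP, CPU_EP) if p in available]
    # The CPU EP ships with every ONNX Runtime build; keep it as a last resort.
    if CPU_EP not in preferred:
        preferred.append(CPU_EP)
    return preferred


def create_session(model_path: str):
    """Create an InferenceSession for model_path using the preferred providers."""
    # Imported lazily so choose_providers can be used without onnxruntime installed.
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

With an OpenVINO-enabled build, `create_session("model.onnx")` (a hypothetical model path) returns a session whose `get_providers()` lists `OpenVINOExecutionProvider` first. On a stock build, the same code still runs on the CPU provider. That fallback is what lets one script be deployed unchanged in the cloud or at the edge.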

Read Intel's blog on advancing edge-to-cloud inferencing for AI.


This solution example provides step-by-step instructions for enabling ONNX with OpenVINO™ on Intel®-powered devices. ONNX is an open format for representing deep learning models. With ONNX, AI developers can more easily move models between state-of-the-art tools and choose the combination that works best for them. ONNX is developed and supported by a community of partners.


Tutorial for deploying ONNX Runtime with OpenVINO™


Deploying ONNX Runtime with Azure IoT Edge


Edge Analytics FaaS with OpenVINO™ and ONNX in Docker containers


ONNX with UP Squared solution diagram
