TIP

💡 Learn more: Kubernetes Event-driven Autoscaling (KEDA) Quickstart.

📺 Watch the video: Azure Functions on Kubernetes with KEDA.

# Azure Functions on Kubernetes with KEDA Part 2 of 2

# Azure Functions Running in Kubernetes

This post is part of a series of posts:

- Azure Functions on Kubernetes with KEDA Part 1 of 2 - Creating and building a containerized Azure Function

- Azure Functions on Kubernetes with KEDA Part 2 of 2 (this post) - Running the Azure Function in AKS with KEDA

In the previous post, we created a containerized Azure Function that is triggered by an Azure Storage Queue. In this post, we'll deploy that Function to Azure Kubernetes Service (AKS) and install KEDA to scale it.

# Prerequisites

If you want to follow along, you'll need the following:

- An Azure subscription (if you don't have one, create a free account before you begin)

- The latest version of Visual Studio with the Azure workload installed. Alternatively, you can use Visual Studio Code with the Azure Functions extension installed

- Docker on your PC to develop container-based applications

- The Azure CLI, which is available for Windows, Linux and Mac

- Node.js, which includes npm. We need npm to install the Azure Functions Core Tools

- The Azure Functions Core Tools

# Running a containerized Azure Function in AKS at scale with KEDA

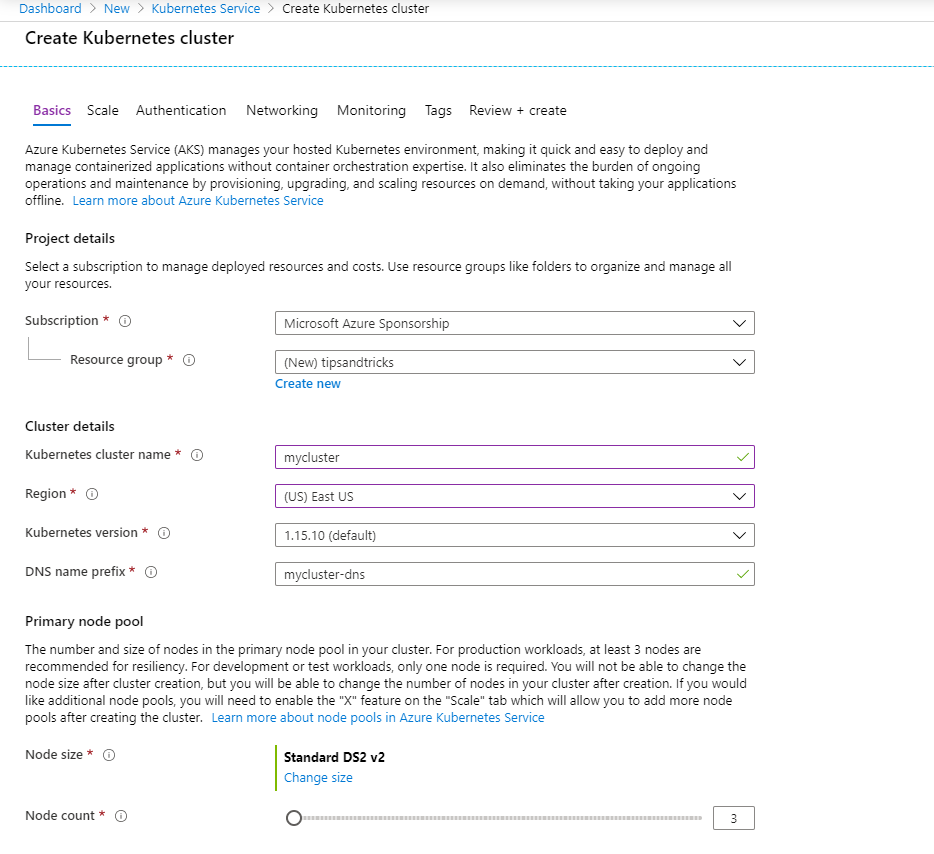

We have a container that contains an Azure Function with a queue-based trigger on our local machine. Let's deploy that to Azure Kubernetes Service (AKS) and run it at scale with KEDA. We'll start by creating an Azure Kubernetes Service using the Azure portal:

- Go to the Azure portal

- Click the Create a resource button (the plus-sign in the top left corner)

- Search for Kubernetes and click on the result to start creating an AKS cluster

  a. This brings you to the create blade of Azure Kubernetes Service

  b. Select a Resource Group or create a new one

  c. Fill in a name for Kubernetes cluster name

  d. Select a Region to deploy the cluster to

  e. Select a Kubernetes version. You should select the latest default version

  f. Leave the rest as it is and click Review + create and Create after that. The AKS cluster will now be deployed, which can take a few minutes

(Create Azure Kubernetes Service in the Azure portal)
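
If you prefer the command line over the portal, roughly the same cluster can be created with the Azure CLI. The sketch below is only an illustration: the resource group `my-rg`, the cluster name `my-aks`, the region `westeurope` and the node count of 2 are assumptions, not values from the steps above. The helper only prints the commands, so you can review them before running anything.

```shell
# Sketch: print the Azure CLI commands that create a resource group and an AKS cluster.
# Arguments: <resource-group> <cluster-name> <region> [node-count]
# All values passed in are illustrative; pick your own names and region.
aks_create_commands() {
  echo "az group create --name $1 --location $3"
  echo "az aks create --resource-group $1 --name $2 --node-count ${4:-2} --generate-ssh-keys"
}

# Usage: review the output first, then pipe it to a shell to execute it:
#   aks_create_commands my-rg my-aks westeurope | bash
```

Creating the cluster this way takes a few minutes, just like the portal route.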

In Azure, we now have an AKS cluster and an Azure Storage Queue. And locally, we have a container with a queue-based Azure Function in it. Let's install KEDA on AKS and deploy the container to AKS.

- Open the Azure CLI on your local machine

- Next, we need to connect to the AKS cluster in Azure. You can do that with the following command, where you insert your resource group name and AKS cluster name:

az aks get-credentials -g <yourresourcegroup> -n <youraksclustername> --overwrite-existing

- Let's check if we are connected and if AKS is up and running with the command below. This will show the AKS nodes and should say that their status is Ready

kubectl get nodes

- Alright. Now we install KEDA on AKS. This will enable the container to behave like an Azure Function running in consumption mode: it can scale to 0 instances when there is nothing to do and automatically scales up when the Function needs to run more. You can install KEDA using the Helm charts provided by KEDA, which requires Helm to be installed. You can install KEDA on AKS with the following commands:

helm repo add kedacore https://kedacore.github.io/charts

helm repo update

kubectl create namespace keda

helm install keda kedacore/keda --namespace keda
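
Before deploying the Function, it can help to confirm that KEDA actually came up. A small sketch, assuming the chart created a deployment named `keda-operator` in the `keda` namespace (the chart's usual default; run `kubectl get deploy --namespace keda` if yours is named differently):

```shell
# Sketch: wait for the KEDA operator to become ready, then list KEDA's pods.
# Assumes a deployment called "keda-operator" exists in the given namespace.
check_keda() {
  namespace="${1:-keda}"
  kubectl rollout status deployment/keda-operator --namespace "$namespace" --timeout=120s &&
    kubectl get pods --namespace "$namespace"
}

# Usage:
#   check_keda
```

If the rollout does not finish within the timeout, the function returns a non-zero exit code and skips the pod listing.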

- When KEDA is installed, we can deploy the container to AKS and start running it. The command below builds the container with the Function in it and deploys it to AKS. It uses the Docker CLI to build and push the image, so make sure Docker is connected to your account with docker login. Replace <name-of-function-deployment> with the name of your Function app and <container-registry-username> with your username for the Docker container registry

func kubernetes deploy --name <name-of-function-deployment> --registry <container-registry-username>
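
Optionally, you can look at what this command would create before applying it. The Core Tools accept a `--dry-run` flag that prints the Kubernetes manifests (typically a Secret, a Deployment and a KEDA ScaledObject) instead of applying them. A minimal sketch, where the helper name and the output file `deploy.yaml` are arbitrary choices for this example:

```shell
# Sketch: write the generated manifests to a file instead of deploying them.
# Arguments: <name-of-function-deployment> <container-registry-username> [output-file]
preview_function_manifests() {
  func kubernetes deploy --name "$1" --registry "$2" --dry-run > "${3:-deploy.yaml}"
}

# Usage:
#   preview_function_manifests <name-of-function-deployment> <container-registry-username>
#   kubectl apply -f deploy.yaml   # apply after reviewing the file
```

Reading the ScaledObject in that file is a quick way to see which queue and connection setting KEDA will watch.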

That's it. The container with the Function is now deployed and running in AKS. The Function will run whenever a new message is put on the Azure Storage Queue. Let's test if KEDA actually scales the container when there are multiple messages on the queue.

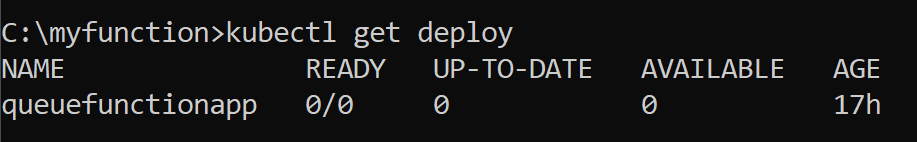

- In the Azure CLI, run the following command to see what is running in AKS:

kubectl get deploy

This will show that nothing is running: 0 instances of the queuefunctionapp are running, which is what we want. There are no queue messages to process, so we don't want any containers running.

(No Azure Functions containers running in AKS)
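
To see what keeps the deployment at zero, you can list the KEDA objects next to it. This is a sketch that assumes the Function was deployed to the `default` namespace, which is what `func kubernetes deploy` uses when no namespace is given:

```shell
# Sketch: show the deployment alongside the KEDA objects that control its scale.
# Argument: [namespace], assumed to be "default" when omitted.
show_scaling_state() {
  namespace="${1:-default}"
  kubectl get deploy --namespace "$namespace"
  kubectl get scaledobject --namespace "$namespace"
  kubectl get hpa --namespace "$namespace"
}

# Usage:
#   show_scaling_state
```

The ScaledObject is the KEDA resource that ties the deployment to the queue; the HorizontalPodAutoscaler is the object KEDA manages to do the actual scaling.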

- We can use a simple console application to put many messages on the queue in a short time:

  a. Open Visual Studio

  b. Click File > New Project

  c. Select Console App (.NET Core) and click Next

  d. Pick a location for the app and give it a name

  e. Click Create to create the console application

  f. Once it is created, right-click the project file and click Manage NuGet Packages

  g. Search for and install the NuGet package WindowsAzure.Storage

  h. Now paste the following code into the Program.cs file. Insert the connection string of your Azure Storage account (you can find it under Access keys in the Azure portal) where it says "Your Cloud Storage Key", and insert the Azure Storage Queue name where it says "myqueue":

using System;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Queue;

namespace QueueFiller
{
    class Program
    {
        // async Main (C# 7.1+) lets us await the storage calls so they finish before the app exits.
        static async Task Main(string[] args)
        {
            // Parse the storage account connection string.
            CloudStorageAccount storageAccount = CloudStorageAccount.Parse("Your Cloud Storage Key");

            // Create the CloudQueueClient object for the storage account.
            CloudQueueClient queueClient = storageAccount.CreateCloudQueueClient();

            // Get a reference to the CloudQueue named "myqueue".
            CloudQueue newQueue = queueClient.GetQueueReference("myqueue");

            // Create the CloudQueue if it does not exist.
            await newQueue.CreateIfNotExistsAsync();

            for (int i = 0; i < 10000; i++)
            {
                // Create a message and add it to the queue.
                CloudQueueMessage message = new CloudQueueMessage("My new message");
                await newQueue.AddMessageAsync(message);
                Console.WriteLine("Message added to the queue");
            }
        }
    }
}

- Run the console application. This will insert 10000 messages into the queue, which the Azure Function in AKS has to handle

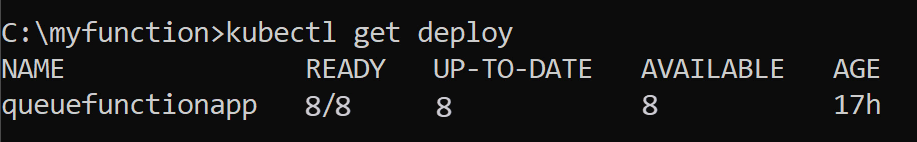

- Wait a minute or so for the queue to fill and for KEDA to scale up the Azure Function container in AKS. In the Azure CLI, run the following command:

kubectl get deploy

You'll see that there are now more instances of the queuefunctionapp running to handle the messages on the queue. And when the messages are processed, the number of instances will go down after a while.

(Azure Functions containers running in AKS)
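
As a rough way to reason about how many instances to expect: KEDA's Azure Storage Queue scaler aims for a target number of messages per replica (its `queueLength` setting, documented with a default of 5) and the ScaledObject caps the result at `maxReplicaCount` (documented default 100). The sketch below works through that arithmetic. Whether your deployment uses these defaults is an assumption, so check the generated ScaledObject for the real values.

```shell
# Sketch: estimate the replica count KEDA would ask for, given a queue depth.
# desired = ceil(messages / target_per_replica), capped at max_replicas.
desired_replicas() {
  messages="$1"
  target="${2:-5}"    # assumed queueLength target per replica
  max="${3:-100}"     # assumed maxReplicaCount
  desired=$(( (messages + target - 1) / target ))
  if [ "$desired" -gt "$max" ]; then desired="$max"; fi
  echo "$desired"
}

desired_replicas 10000   # prints 100 (2000 wanted, capped at 100)
desired_replicas 12      # prints 3
desired_replicas 0       # prints 0 (scale to zero)
```

The number you actually see in `kubectl get deploy` can be lower than this estimate, because the cluster's nodes also limit how many pods can be scheduled.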

# Conclusion

Azure Functions are really powerful, because they can run whatever logic you want and scale automatically. They can even scale automatically when you run them in a container in Azure Kubernetes Service (AKS) with KEDA (Kubernetes Event-driven Autoscaling). Go and check it out!