Deploy app with Puppet on Azure

In this hands-on lab you will deploy the Parts Unlimited MRP App (PU MRP App), a Java application, using Puppet from Puppet Labs.

Puppet is a configuration management system that allows you to automate the provisioning and configuring of machines, by describing the state of your Infrastructure as Code. Implementing Infrastructure as Code is an important pillar of good DevOps practice.

DevOps MPP Course Source:

This lab is used in the following courses.

- DevOps200.2x: Infrastructure as Code - Module 4 Azure IaaS and PaaS, Environment Configuration and Deployment, and Optimization.

- AZ-400T05: Implementing Application infrastructure - Module 4: Third Party and Open Source Tool integration with Azure. The lab complements the AZ-400 series of courses to help you prepare for the AZ-400 Microsoft Azure DevOps Solutions certification exam.

Pre-requisites:

- An SSH client, such as PuTTY.

- An active Azure subscription.

Lab Tasks:

- Task 1: Provision a Puppet Master and Node in Azure using Azure Resource Manager templates

- Task 2: Install Puppet Agent on the node

- Task 3: Configure the Puppet Production Environment

- Task 4: Test the Production Environment Configuration

- Task 5: Create a Puppet Program to describe the prerequisites for the PU MRP app

- Task 6: Run the Puppet Configuration on the Node

Estimated Lab Time:

- Approx. 90 minutes

Lab environment

In this lab you will use the following two machines.

- A machine known as a Node, which will host the PU MRP app. The only task you will perform on the Node is to install the Puppet Agent. The Puppet Agent can run on Linux or Windows. For this lab, we will configure the Node in a Linux Ubuntu Virtual Machine (VM).

- A Puppet Master machine. The rest of the configuration will be applied by instructing Puppet how to configure the Node through Puppet Programs, on the Puppet Master. The Puppet Master must be a Linux machine. For this lab, we will configure the Puppet Master in a Linux Ubuntu VM.

Instead of manually creating the VMs in Azure, we will use an Azure Resource Manager (ARM) template.

Task 1: Provision a Puppet Master and Node in Azure using Azure Resource Manager templates (both inside Linux Ubuntu Virtual Machines)

- To provision the required VMs in Azure using an ARM template, select the Deploy to Azure button, and follow the wizard. You will need to log in to the Azure Portal.

The VMs will be deployed to a Resource Group along with a Virtual Network (VNET), and some other required resources.

Note: You can review the JSON template and script files used to provision the VMs in the Puppet lab files folder on GitHub.

-

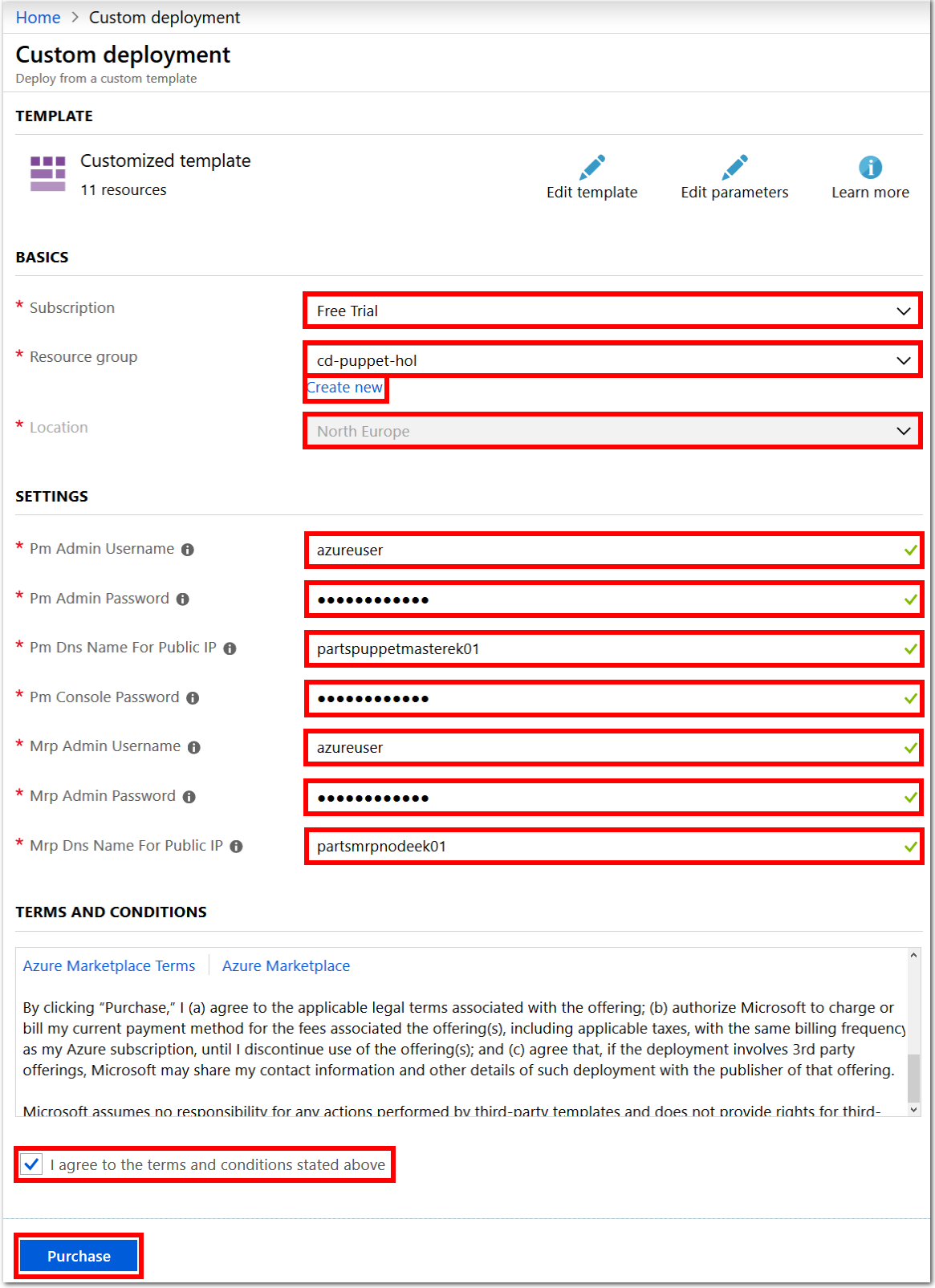

When prompted, specify a Subscription, Location, and Resource Group for deploying your VM resources. Provide admin usernames and passwords, as well as unique Public DNS Names for both machines.

Consider the following guidelines.

- Subscription. < your Azure subscription >.

- Resource group. < a unique resource group name >. For example, puprgek01. Create a new Resource Group during the deployment process, to demarcate the resources that you set up in this lab. Remove the resources created in this lab by deleting the Resource Group.

- Location. Select a region to deploy the VMs to. For example, West Europe or East US.

- Pm Admin Username. The Pm refers to the Puppet Master VM, and Mrp refers to the Node VM. Choose the same Admin Username for both Pm and Mrp VMs. For example, azureuser.

- Pm Admin Password. Set the same Admin Password for both VMs, and for the Pm Console. For example, Passw0rd0134.

- Pm Dns Name For Public IP. Include the word master in the Puppet Master DNS name, to distinguish it from the Node VM. You could include the word node in the Node DNS name, if you wish, for example partsmrpnode. Create unique DNS names for both VMs by adding your initials as a suffix. For example, partspuppetmasterek01 and partsmrpnodeek01.

Make a note of the location region, as well as any usernames and passwords you set for the VMs.

Use the checkbox to agree to the Azure Marketplace terms and conditions. Select the Purchase button. Allow about 10 minutes for each deployment to complete.


-

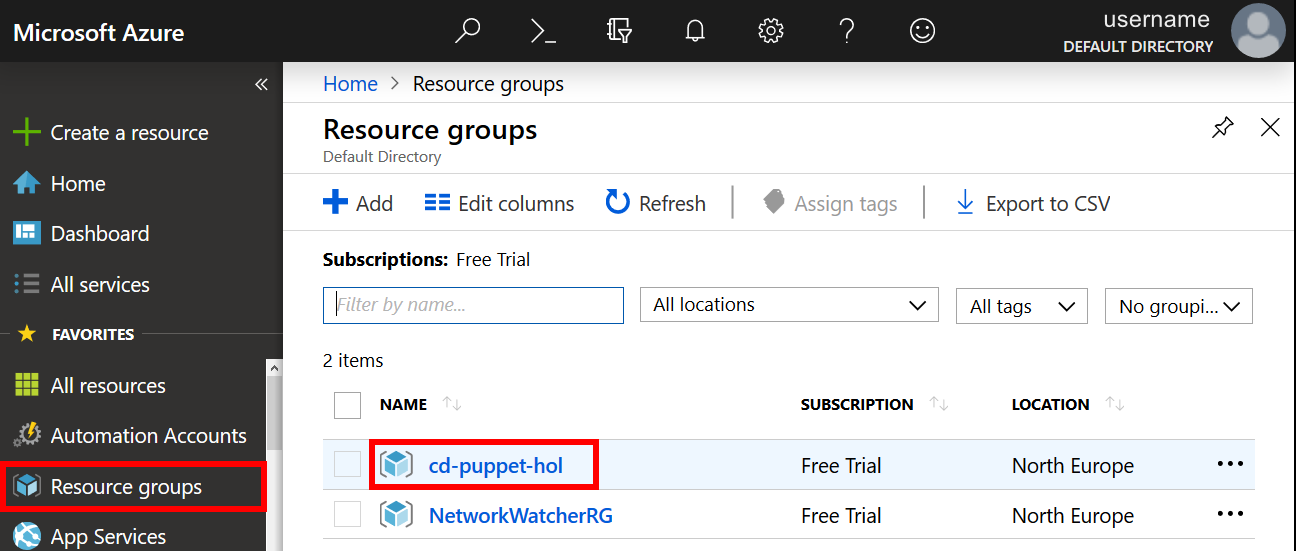

When the deployment completes, select the Resource Groups blade from the Azure Portal menu. Choose the name of the Resource Group that you created, for example cd-puppet-hol.

-

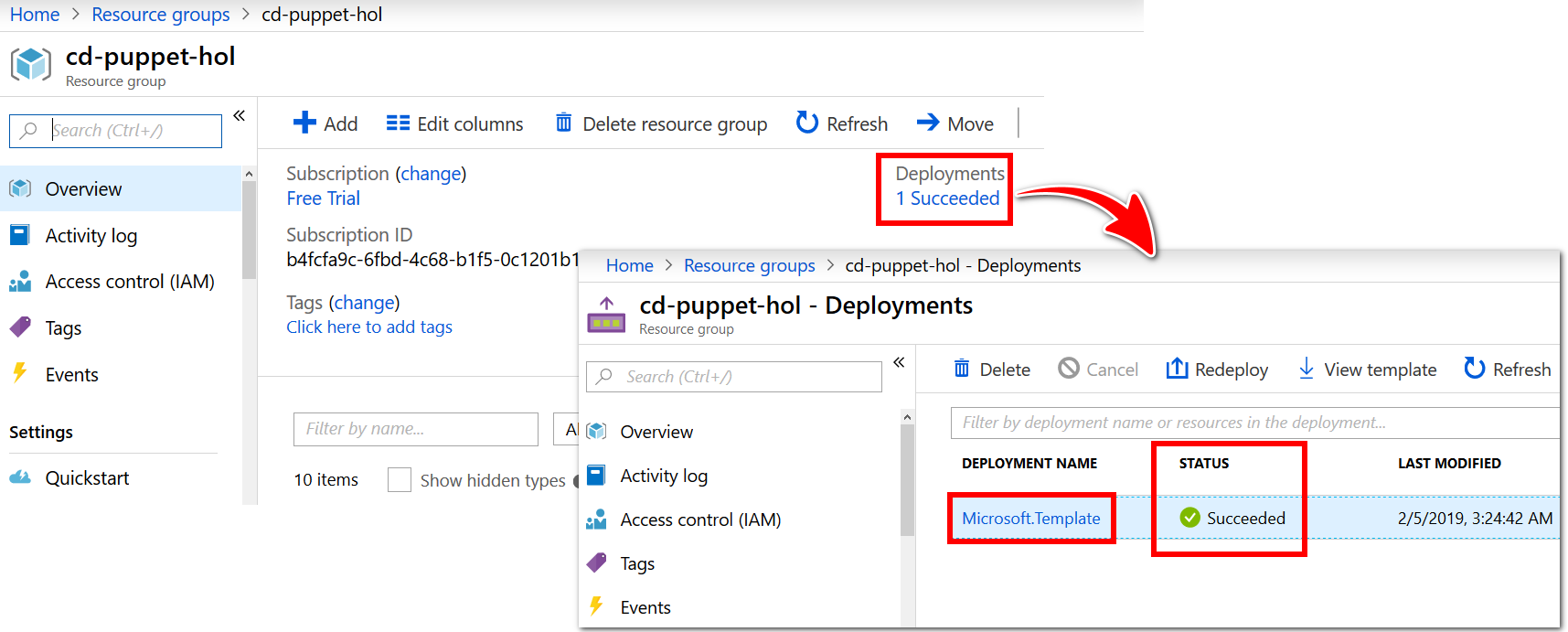

Within the Resource Group Overview pane, beside Deployments, select the 1 succeeded message to view the deployment details. The status of the Microsoft.Template resource should indicate that the deployment has succeeded.

Choose the Microsoft.Template resource, listed in the Deployment Name column.

-

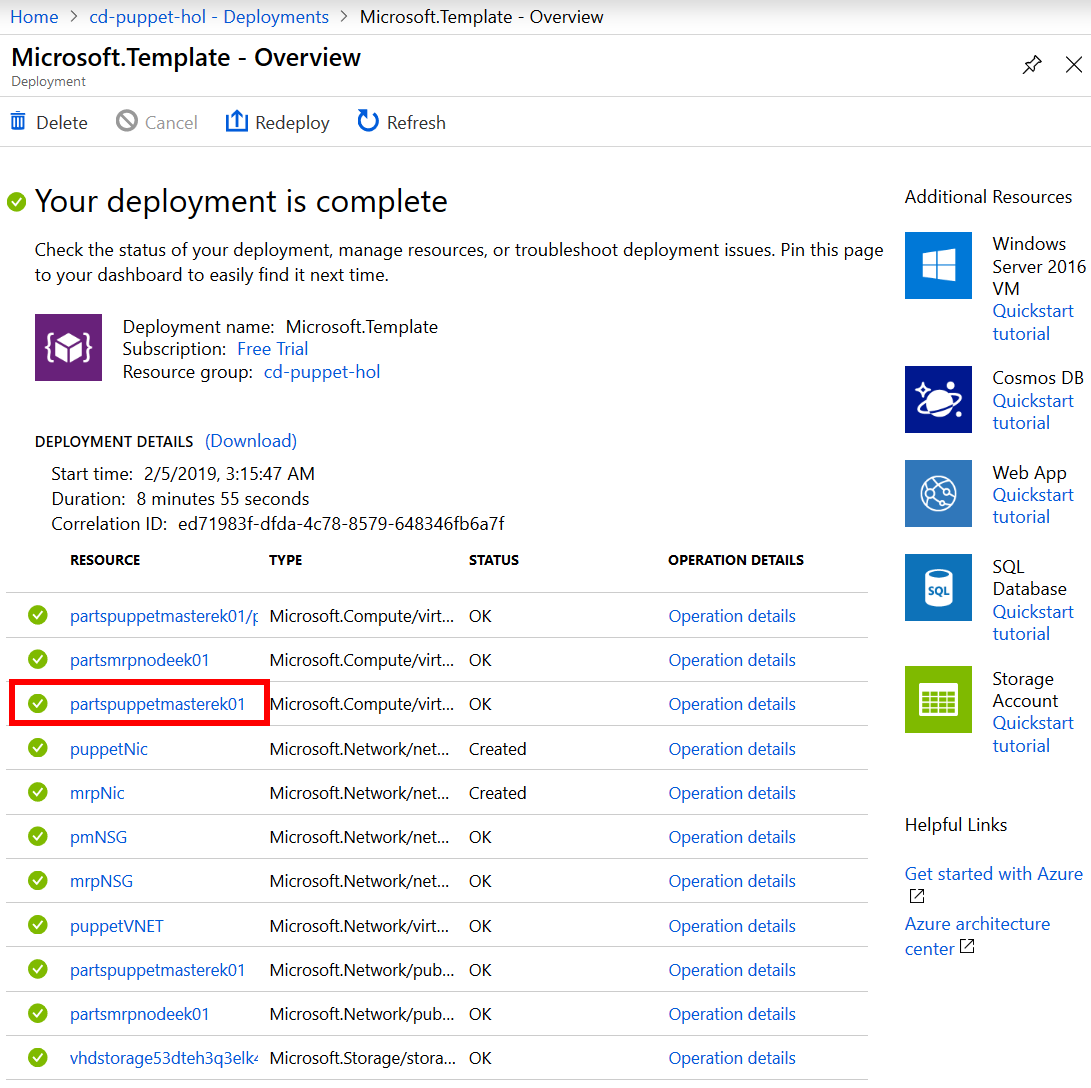

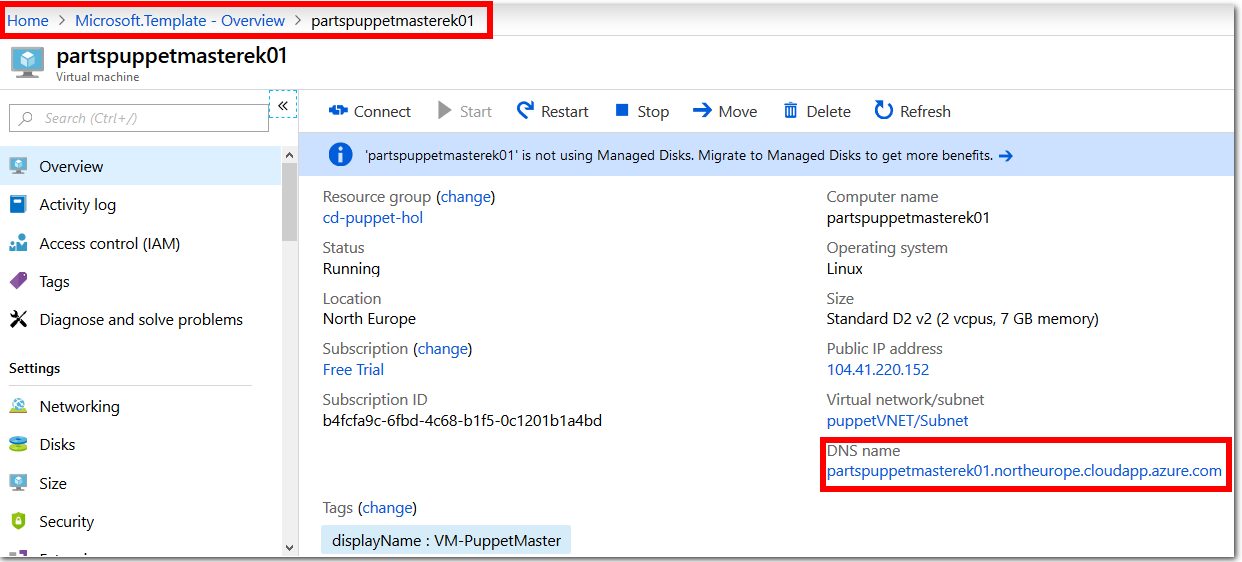

There is a list of the deployed resources in the Microsoft.Template - Overview pane, below the Your deployment is complete message. Select the Puppet Master VM from the list, for example partspuppetmasterek01.

- Make a note of the DNS name value, listed inside the Overview pane for the Puppet Master Virtual Machine. The DNS name value is in the format machinename.region.cloudapp.azure.com. For example, partspuppetmasterek01.northeurope.cloudapp.azure.com.

-

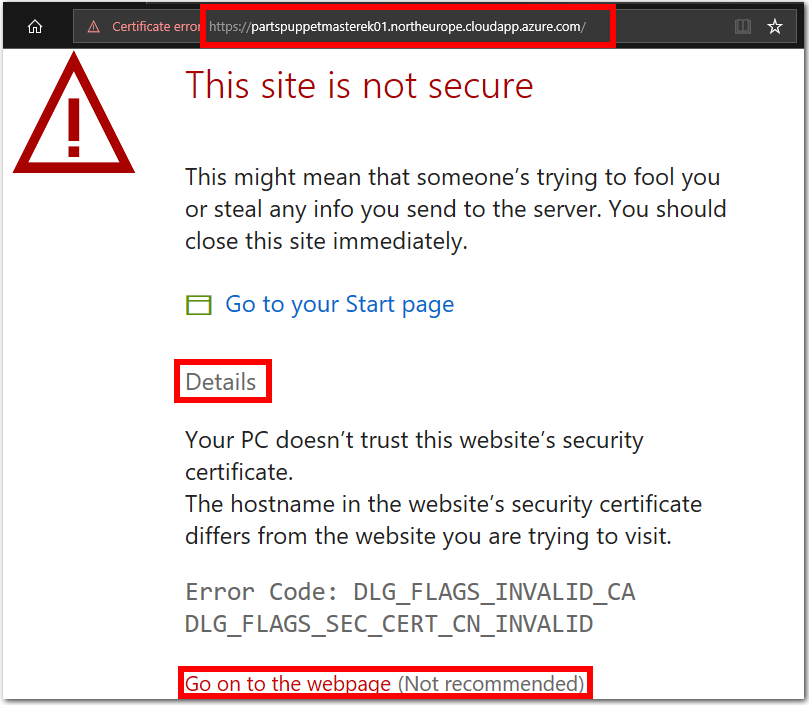

Prepend https:// to the DNS name to create a URL for the Puppet Master’s public DNS Address. The resulting URL should be in the format https://machinename.region.cloudapp.azure.com. For example, https://partspuppetmasterek01.northeurope.cloudapp.azure.com.

Using https, not http, visit the URL in a web browser.

Override the certificate error warning messages, and visit the webpage. It is safe to ignore these error messages for the purposes of this lab. The prompts that you see, and the steps required to access the URL, may depend on the web browser you use.
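The URL construction described above can be sketched in shell. This is a minimal illustration and not part of the lab's scripts: the function name build_console_url is hypothetical, and the DNS name is the example value used in this lab.

```shell
#!/bin/sh
# Hypothetical helper: prefix a VM DNS name with https:// to form the console URL.
build_console_url() {
    dns_name="$1"
    # Strip any scheme that was pasted by mistake, then force https.
    dns_name="${dns_name#http://}"
    dns_name="${dns_name#https://}"
    printf 'https://%s\n' "$dns_name"
}

# Example DNS name from this lab; replace it with your own value.
build_console_url "partspuppetmasterek01.northeurope.cloudapp.azure.com"
```

To check the address from a terminal instead of a browser, you could pass the resulting URL to curl with the -k switch, which skips certificate verification in the same way as overriding the browser warning.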

-

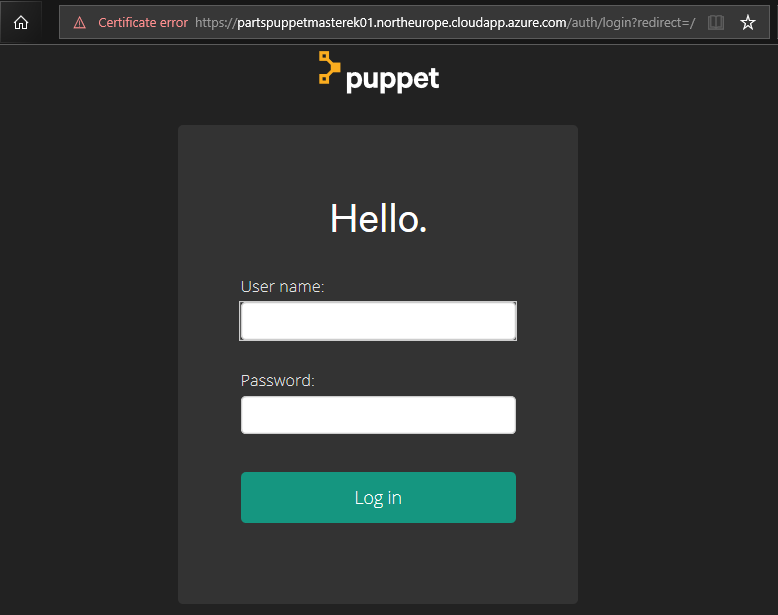

If the Puppet configuration has succeeded, you should see the Puppet Master Console sign in webpage.

Note: The lab requires several ports to be open, such as the Puppet Server port, the Puppet Console port, SSH ports, and the PU MRP app port on the partsmrp VM. The ARM template opens these ports on the VMs for you. You can look through the deployment JSON template to view the port configuration details in the PuppetPartsUnlimitedMRP.json file on GitHub.

- Log in to the Puppet Master Console with the following credentials.

- User name = admin

- Password = the Pm Console Password you specified earlier in this lab. For example, Passw0rd0134

Note: You cannot log in to the Puppet Master Console with the VM admin username (for example, azureuser) that you specified earlier in this lab. You must log in using the built-in admin account instead, as shown.

-

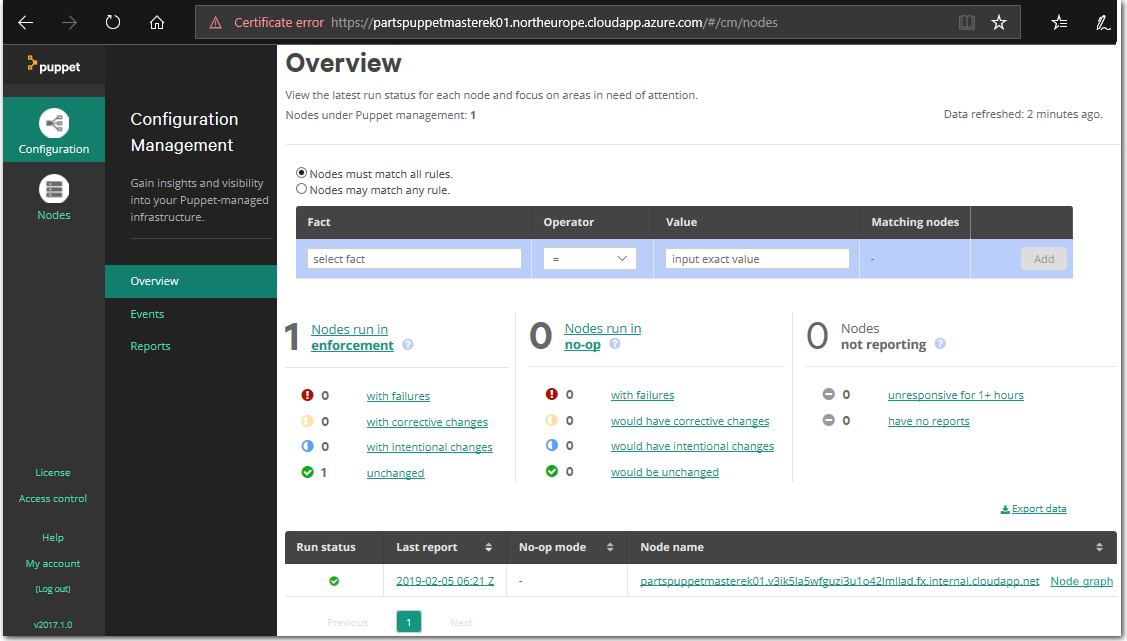

If your login is successful, you will be redirected to the Puppet Configuration Management Console webpage, which is similar in appearance to the accompanying screenshot.

Task 2: Install Puppet Agent on the node

You are now ready to add the Node to the Puppet Master. Once the Node is added, the Puppet Master will be able to configure the Node.

-

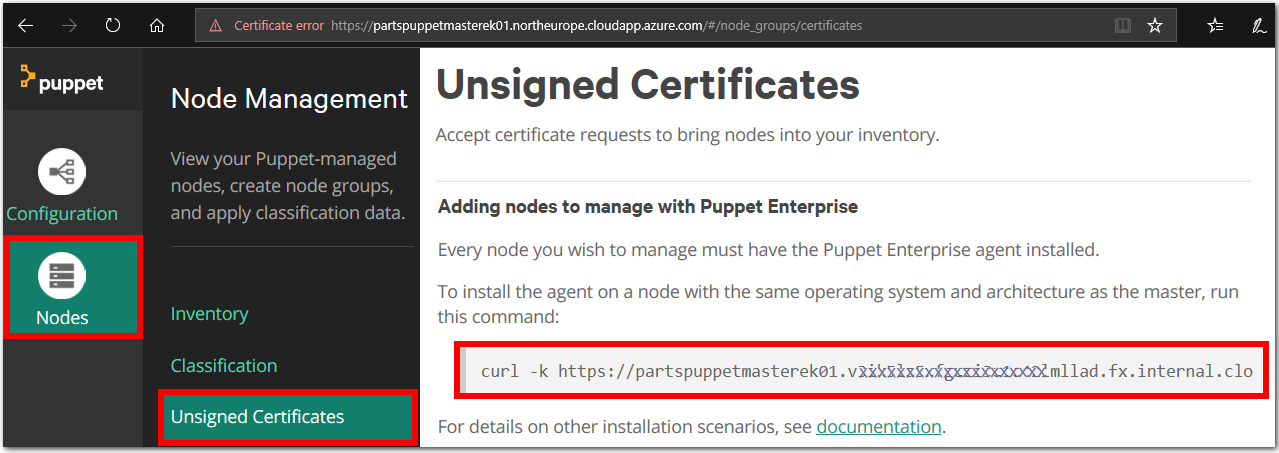

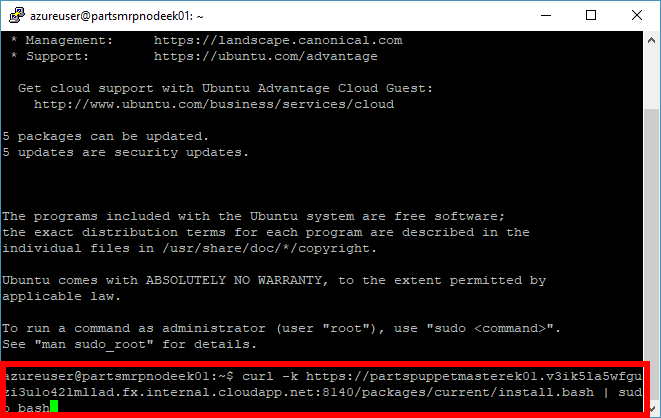

Get the Puppet Master internal DNS name. On the Puppet Configuration Management Console webpage, go to Nodes > Unsigned Certificates. The page that loads will show an Add node command. Make a note of the command; we will run it in Step 4 of this lab task.

For example, if the Puppet Master machine name is partspuppetmasterek01.northeurope.cloudapp.azure.com, the command we need to run will be similar to the following command.

curl -k https://partspuppetmasterek01.irblmudbrloe5hz001blu2g34f.ax.internal.cloudapp.net:8140/packages/current/install.bash | sudo bash

-

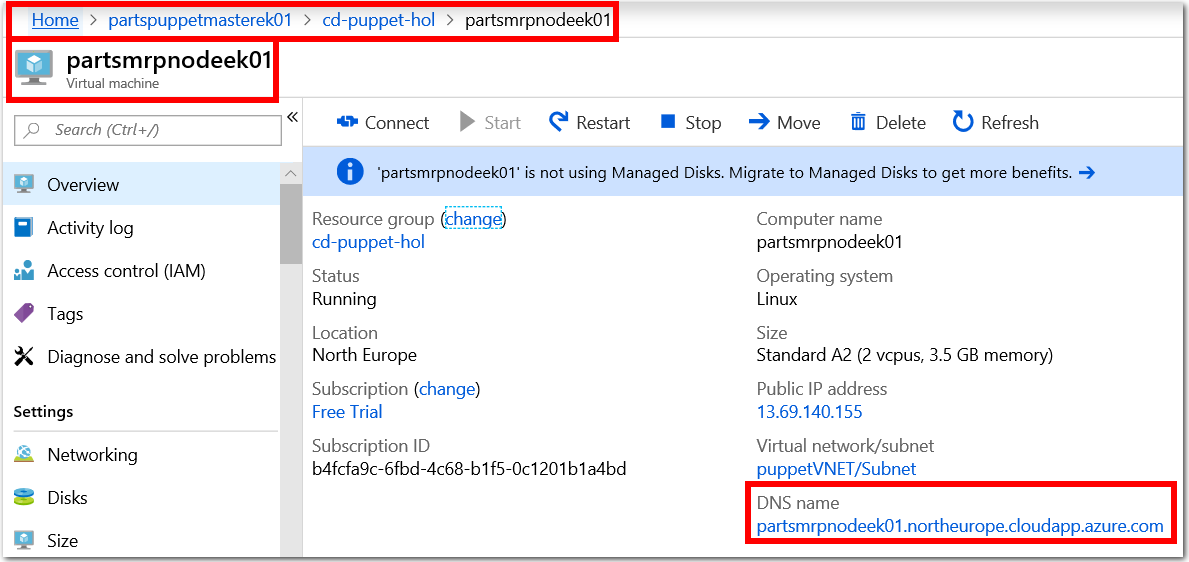

In Azure Portal, from the left menu, choose All Resources > partsmrpnodeek01 (or whatever name you specified for the Node / partsmrp VM). Make a note of the DNS name value for the Node VM.

-

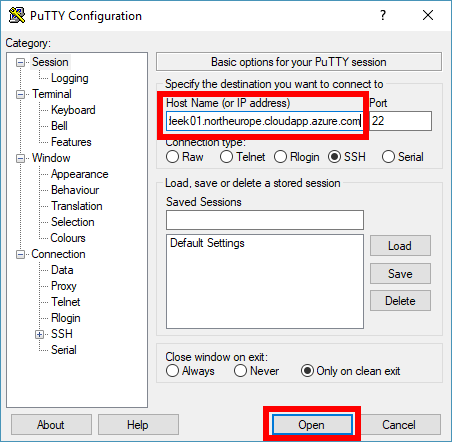

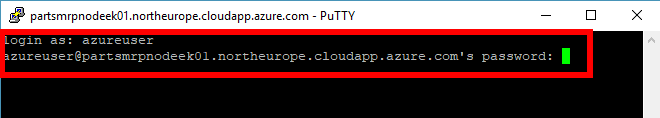

Establish an SSH connection to the Node VM. In the following example, we will connect to the Node VM using the PuTTY SSH client.

Specify the Node DNS name as the destination Host Name.

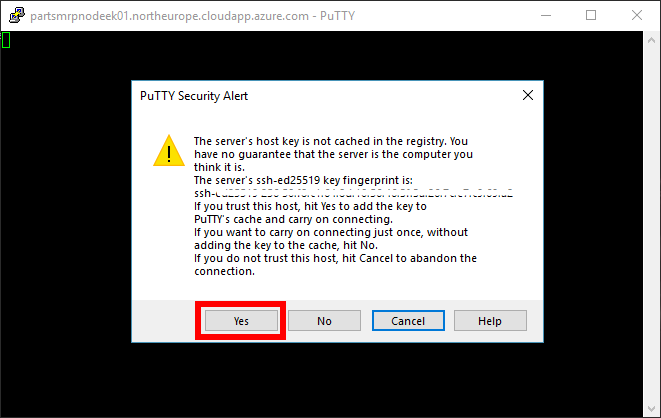

If prompted, choose Yes to add the SSH key to PuTTY’s cache.

Log in with the username and password credentials that you specified in Task 1. For example, azureuser and Passw0rd0134.

-

Run the

Add Nodecommand on the node.Enter the

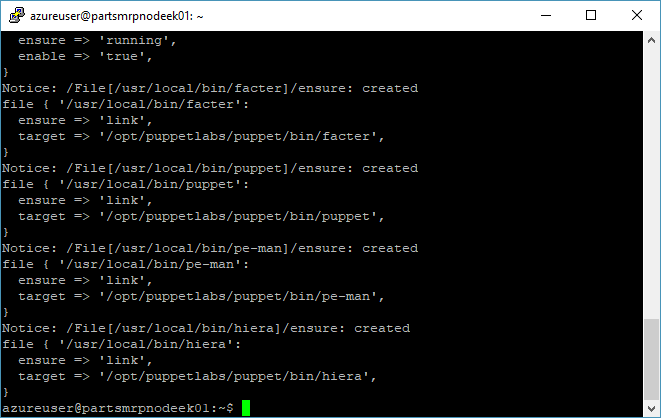

Add Nodecommand into the SSH terminal, which you noted earlier in Step 1. The command begins withcurl.... Run the command.

Wait for the command to install the Puppet Agent and any dependencies on the Node. The command takes two or three minutes to complete.

From here onwards, you will configure the Node from the Puppet Master only.

-

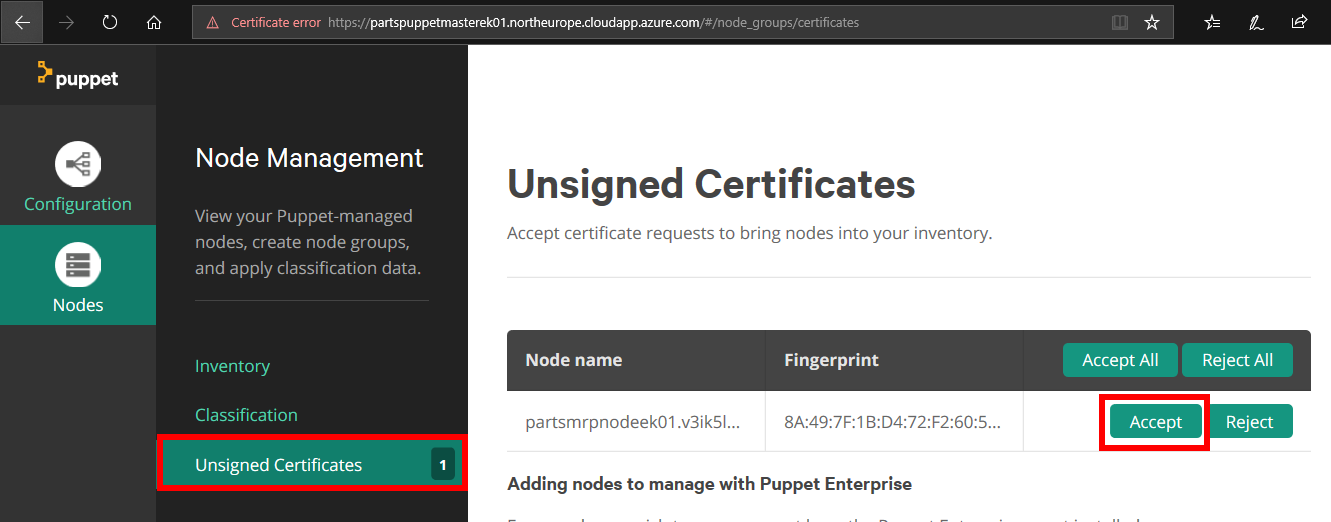

Accept the pending Node request.

Return to the Puppet Configuration Management Console. Refresh the Unsigned Certificates webpage (where you previously got the Node install command). You should see a pending unsigned certificate request. Choose Accept to approve the node.

This is a request to authorize the certificate between the Puppet Master and the Node, so that they can communicate securely.

-

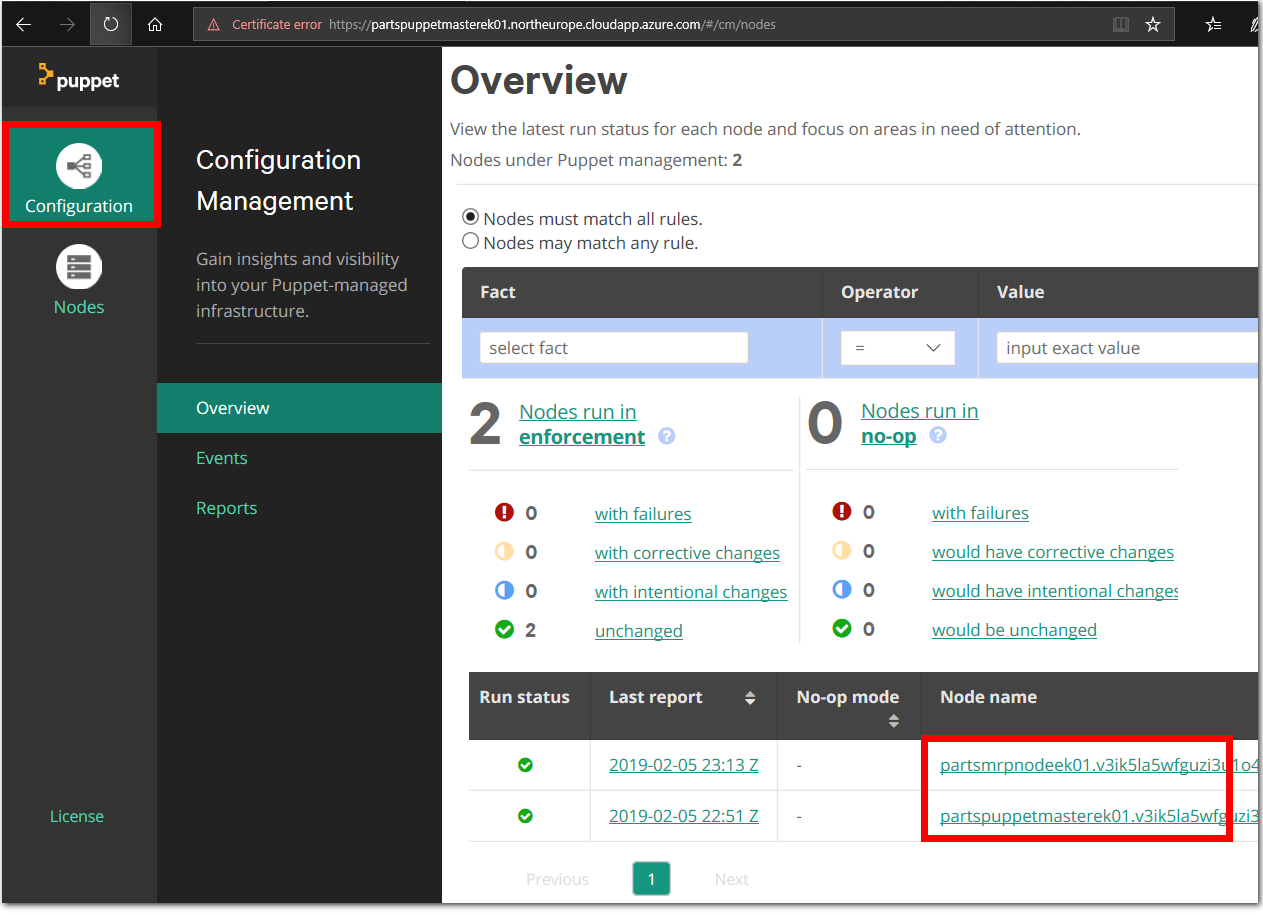

Go to the Nodes tab in the Puppet Configuration Management Console. It may take a few minutes to configure the Node / partsmrp VM, before it is visible in the Puppet Configuration Management Console. When the Node is ready, you should see the following nodes listed in the Puppet Configuration Management Console.

- Puppet Master. For example, partspuppetmasterek01

- Node / partsmrp. For example, partsmrpnodeek01

The nodes are also listed in the Puppet Configuration Management Console under Configuration > Overview.

Note: You can automate the Puppet Agent installation and configuration process on an Azure VM using the Puppet Agent extension from the Azure Marketplace.

There is also a series of PowerShell cmdlets for provisioning, enabling, and disabling the Puppet Extension Handler on Windows VMs. This provides a command-line interface for deploying Puppet Enterprise Agents to Windows VMs in Azure. For details, see the Puppet PowerShell Cmdlets for Azure Guide.


Puppet modules and programs

The Parts Unlimited MRP application (PU MRP App) is a Java application. The PU MRP App requires you to install and configure MongoDB and Apache Tomcat on the Node / partsmrp VM. Instead of installing and configuring MongoDB and Tomcat manually, we will write a Puppet Program that will instruct the Node to configure itself.

Puppet Programs are stored in a particular directory on the Puppet Master. Puppet Programs are made up of manifests that describe the desired state of the Node(s). The manifests can consume modules, which are pre-packaged Puppet Programs. Users can create their own modules or consume modules from a marketplace that is maintained by Puppet Labs, known as The Forge.

Some modules on The Forge are supported officially, others are open-source modules uploaded from the community. Puppet Programs are organized by environment, which allows you to manage Puppet Programs for different environments such as Dev, Test and Production.

For this lab, we will treat the Node as if it were in the Production environment. We will also download modules from The Forge, which we will consume in order to configure the Node.

Task 3: Configure the Puppet Production Environment

The template we used to deploy Puppet to Azure also configured a directory on the Puppet Master for managing the Production environment. The Production Directory is in /etc/puppetlabs/code/environments/production.

-

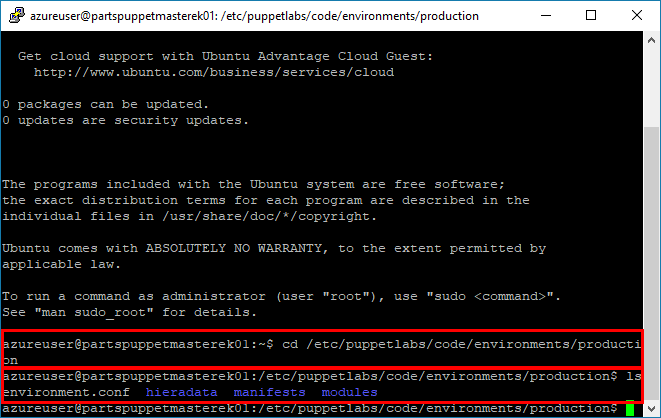

Inspect the Production modules.

Connect to the Puppet Master via SSH, with the PuTTY client for example. Use the Change Directory command cd to change into the Production Directory /etc/puppetlabs/code/environments/production.

cd /etc/puppetlabs/code/environments/production

Use the list command ls to list the contents of the Production Directory. You will see directories named manifests and modules.

- The manifests directory contains descriptions of machines that we will apply to Nodes later in this lab.

- The modules directory contains any modules that are referenced by the manifests.

-

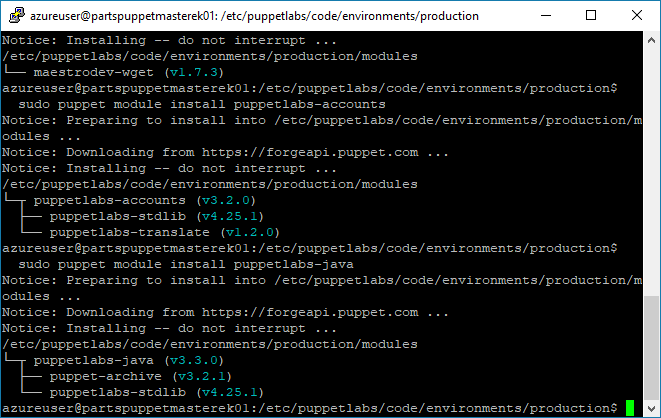

Install additional Puppet Modules from The Forge.

We will install modules from The Forge that are needed to configure the Node / partsmrp. Run the following commands in a terminal with an SSH connection to the Puppet Master.

sudo puppet module install puppetlabs-mongodb

sudo puppet module install puppetlabs-tomcat

sudo puppet module install maestrodev-wget

sudo puppet module install puppetlabs-accounts

sudo puppet module install puppetlabs-java

Note: The mongodb and tomcat modules from The Forge are supported officially. The wget module is a user module, and is not supported officially. The accounts module provides Puppet with Classes for managing and creating users and groups in our Linux VMs. Finally, the java module provides Puppet with additional Java functionality.

-

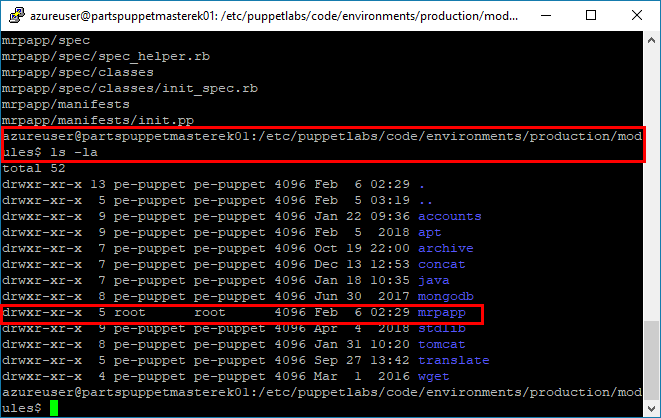

Create a custom module.

Create a custom module named mrpapp in the Production / Modules Directory on the Puppet Master. The custom module will configure the PU MRP app. Run the following commands in a terminal with an SSH connection to the Puppet Master.

Use the Change Directory command cd to change into the Production / Modules Directory /etc/puppetlabs/code/environments/production/modules.

cd /etc/puppetlabs/code/environments/production/modules

Run the module generate command to create the mrpapp module.

sudo puppet module generate partsunlimited-mrpapp

This will start a wizard that will ask a series of questions as it scaffolds the module. Simply press enter for each question (accepting blank or default) until the wizard completes.

Running the list command ls -la should show a list of the modules in the directory ~/production/modules, including the new mrpapp module.

Note: The ls -la command lists the contents of a directory (ls), using a long list format (-l), with hidden files shown (-a).

-

The

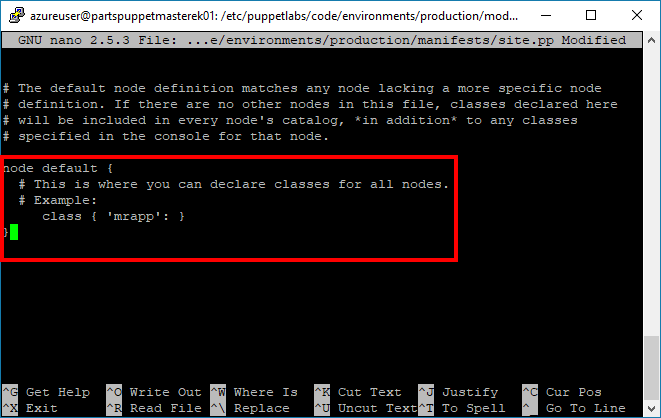

mrpappmodule will define our Node’s configuration.The configuration of Nodes in the Production environment is defined in a

site.ppfile. Thesite.ppfile is located in the Production \ Manifests directory. The.ppfilename extension is short for Puppet Program.We will edit the

site.ppfile by adding a configuration for our Node.Run the following command in an SSH terminal to the Puppet Master, which will open the

site.ppin the Nano Text Editor.sudo nano /etc/puppetlabs/code/environments/production/manifests/site.ppScroll to the bottom of the file and edit the

node defaultsection. Edit it to look as follows:node default { class { 'mrpapp': } }Note: Make sure that you remove the comment tag

#before the Class definition, as instructed.

- Press ctrl + o, then enter, and then ctrl + x, to write the changes you made to the site.pp file, save the changes, and close the file.

The edits that you made to the site.pp file instruct Puppet to configure all Nodes with the mrpapp module, by default. The mrpapp module (though currently empty) is in the Modules directory of the Production environment, so Puppet will know where to find the module.

Task 4: Test the Production Environment Configuration

Before we describe the PU MRP app for the Node fully, test that everything is hooked up correctly by configuring a dummy file in the mrpapp module. If Puppet executes and creates the dummy file successfully, then everything is configured and working correctly. We can then set up the mrpapp module properly.

-

Edit the

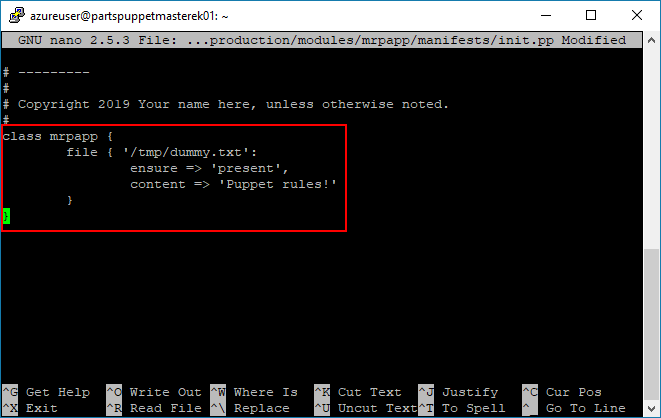

init.ppfile.The entry point for the

mrpappmodule is theinit.ppfile, in themrpapp/manifestsdirectory, on the Puppet Master. Run the following command in an SSH terminal to the Puppet Master, to open theinit.ppfile for editing with Nano.sudo nano /etc/puppetlabs/code/environments/production/modules/mrpapp/manifests/init.ppYou can either delete all of the boiler-plate comments or ignore them. Scroll down to the

Class: mrpappdeclaration. Make the following edits.class mrpapp { file { '/tmp/dummy.txt': ensure => 'present', content => 'Puppet rules!' } }Note: Make sure that you remove the comment tag

#before the Class definition, as instructed.

Press

ctrl + x, theny, and thenenterto save your changes to the file.Note: Classes in Puppet Programs are not like Classes in Object Oriented Programming. Classes in Puppet Programs define a resource that is configured on a Node. By adding the

mrpappClass (or resource) to the init.pp file, we have instructed Puppet to ensure that a file exists on the path/tmp/dummy.txt, and that the file has the content “Puppet rules!”. We will define more advanced resources within themrpappClass as we progress through this lab. -

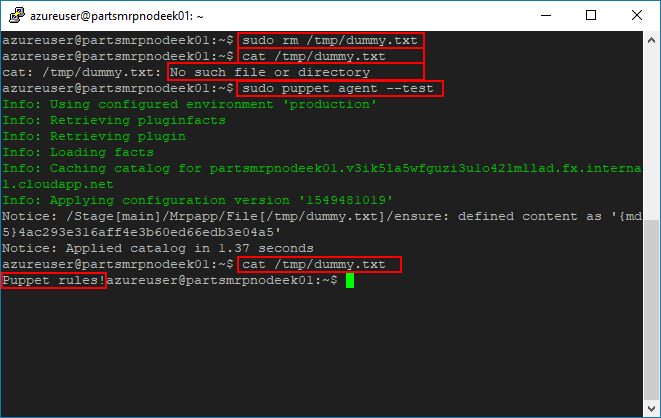

Test the dummy file.

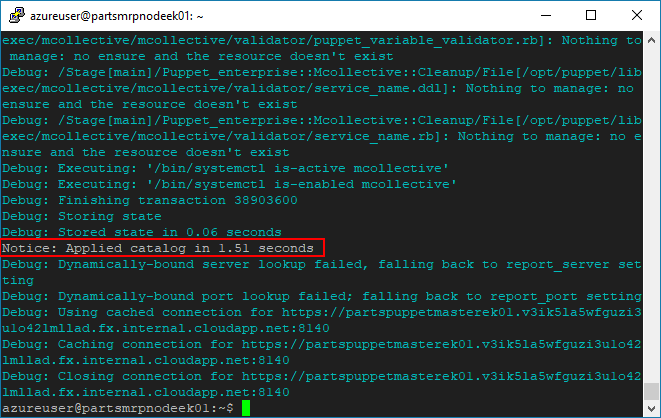

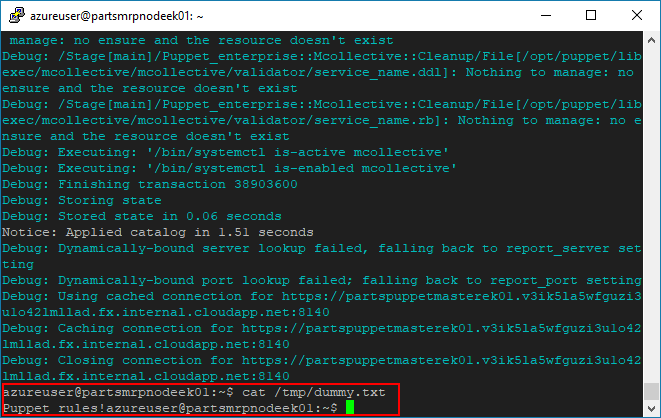

To test our setup, establish an SSH connection to the Node / partsmrp VM (using the PuTTY client, for example). Run the following command in an SSH terminal to the Node.

sudo puppet agent --test --debug

By default, Puppet Agents query the Puppet Master for their configuration every 30 minutes. The Puppet Agent then tests its current configuration against the configuration specified by the Puppet Master. If necessary, the Puppet Agent modifies its configuration to match the configuration specified by the Puppet Master.

The command you entered forces the Puppet Agent to query the Puppet Master for its configuration immediately. In this case, the configuration requires the /tmp/dummy.txt file, so the Node creates the file accordingly.

You may see more output in your terminal than is shown in the screenshot. We used the --debug switch for learning purposes, to display more information as the command executes. You can remove the --debug switch to receive less text output in the terminal if you wish.

You can also use the cat command on the Node, to verify the presence of the file /tmp/dummy.txt on the Node, and to inspect the file’s contents. The “Puppet rules!” message should be displayed in the terminal.

cat /tmp/dummy.txt

- Correct configuration drift.

By default, Puppet Agent runs every 30 minutes on the Nodes. Each time the Agent runs, Puppet determines if the environment is in the correct state. If it is not in the correct state, Puppet reapplies Classes as necessary. This process allows Puppet to detect Configuration Drift, and fix it.

Simulate Configuration Drift by deleting the dummy file dummy.txt from the Node. Run the following command in a terminal connected to the Node to delete the file.

sudo rm /tmp/dummy.txt

Confirm that the file was deleted from the Node successfully by running the following command on the Node. The command should produce a No such file or directory error message.

cat /tmp/dummy.txt

Re-run the Puppet Agent on the Node with the following command.

sudo puppet agent --test

The re-run should complete successfully, and the file should now exist on the Node again. Verify that the file is present on the Node by running the following command on the Node. Confirm that the “Puppet rules!” message is displayed in the terminal.

cat /tmp/dummy.txt

You can also edit the contents of the file dummy.txt on the Node. Re-run the sudo puppet agent --test command, and verify that the contents of the file dummy.txt have been reverted to match the configuration specified on the Puppet Master.
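The drift correction described above can be illustrated with a small shell sketch. It is a conceptual stand-in only and does not show how Puppet is implemented: ensure_file is a hypothetical helper that compares a file with its desired content and rewrites the file only when they differ. The sketch works in a scratch directory, so it does not touch /tmp/dummy.txt.

```shell
#!/bin/sh
# Conceptual sketch only: mimics "ensure the file exists with this content".
# ensure_file is a hypothetical helper, not a Puppet command.
ensure_file() {
    target="$1"
    desired="$2"
    # Compare the current content (if any) with the desired content.
    if [ -f "$target" ] && [ "$(cat "$target")" = "$desired" ]; then
        echo "in sync: $target"
    else
        printf '%s' "$desired" > "$target"
        echo "corrected: $target"
    fi
}

# Demonstrate against a scratch directory rather than the real file.
workdir="$(mktemp -d)"
ensure_file "$workdir/dummy.txt" 'Puppet rules!'   # first run creates the file
rm "$workdir/dummy.txt"                            # simulate configuration drift
ensure_file "$workdir/dummy.txt" 'Puppet rules!'   # next run restores the file
ensure_file "$workdir/dummy.txt" 'Puppet rules!'   # nothing to change this time
rm -r "$workdir"
```

The point of the sketch is that the same call is safe to repeat: it changes the system only when the actual state differs from the desired state.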

Task 5: Create a Puppet Program to describe the prerequisites for the PU MRP app

We have hooked the Node (partsmrp) up to the Puppet Master. Now we can write the Puppet Program to describe the prerequisites for the PU MRP app.

In practice, the different parts of a large configuration solution are typically split into multiple manifests or modules. Splitting the configuration across multiple files is a form of Modularization, and promotes better organization and reuse of code.

For simplicity, in this lab, we will describe our entire configuration in a single Puppet Program file init.pp, from inside the mrpapp module that we created earlier. In Task 5, we will build up our init.pp step-by-step.

Note: You can access a completed init.pp file on Microsoft’s PU MRP GitHub repository. Copy the init.pp file contents from GitHub, or from the Task 5 sub-sections that follow, and paste them into your local init.pp file.

Tomcat requires the presence of a Linux User and a Group on the Node, to access and run Tomcat services. The default name that Tomcat uses for both the User and Group is Tomcat. There are a number of ways to create this User and Group, but we will add the User and Group to the Node VM by adding a separate Class to the init.pp file.

In addition to editing the init.pp file, we will make the following modifications.

- Edit the file war.pp. Editing the war.pp file allows us to deploy the PU MRP app more easily.

- Edit the permissions on the directory ~/tomcat7/webapps for extracting .war files, at the end of Task 5. Editing these permissions will allow the .war file to be extracted automatically, when the Tomcat service is restarted as the .pp file runs.

Note: All of the actions required to create our Puppet Program will be explained clearly, as we step through the Task 5 sub-sections that follow. If you encounter problems, see the Potential issues and troubleshooting section at the end of this lab.

Task 5.1 Configure MongoDB

Once MongoDB is configured, we want Puppet to download a Mongo script that contains data for our PU MRP application’s database. We will include this as part of our MongoDB setup. To implement these requirements, add a Class to configure MongoDB.

On the Puppet Master, use the following command to open the init.pp file, inside the mrpapp module, for editing.

sudo nano /etc/puppetlabs/code/environments/production/modules/mrpapp/manifests/init.pp

Add the following configuremongodb Class to the bottom of the file.

class configuremongodb {

include wget

class { 'mongodb': }->

wget::fetch { 'mongorecords':

source => 'https://raw.githubusercontent.com/Microsoft/PartsUnlimitedMRP/master/deploy/MongoRecords.js',

destination => '/tmp/MongoRecords.js',

timeout => 0,

}->

exec { 'insertrecords':

command => 'mongo ordering /tmp/MongoRecords.js',

path => '/usr/bin:/usr/sbin',

unless => 'test -f /tmp/initcomplete'

}->

file { '/tmp/initcomplete':

ensure => 'present',

}

}

Press ctrl + o, then enter to save the changes to the file without exiting.

Let’s examine the configuremongodb Class.

- Line 1. We create a Class (resource) called configuremongodb.

- Line 2. We include the wget module so that we can download files via wget.

- Line 3. We invoke the mongodb resource (from the mongodb module we downloaded earlier). This installs MongoDB using defaults defined in the Puppet MongoDB module. That is all we have to do to install MongoDB!

- Line 5. We invoke the fetch resource from the wget module, and call the resource mongorecords.

- Line 6. We set the source of the file we need to download from PU MRP GitHub.

- Line 7. We set the destination where the file must be downloaded to.

- Line 10. We use the built-in Puppet resource exec to execute a command.

- Line 11. We specify the command to execute.

- Line 12. We set the path for the command invocation.

- Line 13. We specify a condition using the keyword unless. We only want this command to execute once, so we create a tmp file once we have inserted the records (Line 15). If this file exists, we do not execute the command again.

Note: The -> notation on Lines 3, 9 and 14 is an Ordering Arrow. The ordering arrow tells Puppet that it must apply the left resource before invoking the right resource. This allows us to specify order, when necessary.
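The unless guard and the marker file described above follow a common "run once" pattern. The following shell sketch illustrates the idea under stated assumptions: run_once is a hypothetical helper, the command passed to it stands in for the mongo import, and the marker is created in a scratch directory rather than at /tmp/initcomplete.

```shell
#!/bin/sh
# Conceptual sketch of the guard used by the exec resource: run a command
# only if a marker file is absent, then create the marker.
# run_once and its arguments are hypothetical names for illustration.
run_once() {
    marker="$1"
    shift
    if test -f "$marker"; then
        echo "skipped"
        return 0
    fi
    # Run the command; create the marker only if the command succeeded.
    "$@" && touch "$marker" && echo "ran"
}

workdir="$(mktemp -d)"
run_once "$workdir/initcomplete" true   # runs the command, creates the marker
run_once "$workdir/initcomplete" true   # marker exists, so the command is skipped
rm -r "$workdir"
```

Creating the marker only after a successful command mirrors the ordering arrow between the exec and file resources: the step on the right runs only after the step on the left has been applied.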

Task 5.2 Configure Java

Add the following configurejava Class, below the configuremongodb Class, to set up our Java requirements.

class configurejava {

include apt

$packages = ['openjdk-8-jdk', 'openjdk-8-jre']

apt::ppa { 'ppa:openjdk-r/ppa': }->

package { $packages:

ensure => 'installed',

}

}

Press ctrl + o, then enter to save the changes to the file without exiting.

Let’s examine the configurejava Class.

- Line 2. We include the apt module, which will allow us to configure new Personal Package Archives (PPAs).

- Line 3. We create an array of packages that we need to install.

- Line 5. We add a PPA.

- Lines 6 to 8. We tell Puppet to ensure that the packages are installed. Puppet expands the array and installs each package in the array.

Note: We cannot rely on the Puppet package resource alone to install Java, because this will only install Java 7. We add the PPA, using the apt module, to get Java 8.

Task 5.3 Create User and Group

Add the following createuserandgroup Class, to specify the Linux Users and Groups to be used for configuring, deploying, starting and stopping services.

class createuserandgroup {

group { 'tomcat':

ensure => 'present',

gid => '10003',

}

user { 'tomcat':

ensure => 'present',

gid => '10003',

home => '/tomcat',

password => '!',

password_max_age => '99999',

password_min_age => '0',

uid => '1003',

}

}

Press ctrl + o, then enter to save the changes to the file without exiting.

Let’s examine the createuserandgroup Class.

- Line 1. We define the createuserandgroup Class. This Class uses the group and user resources.

- Lines 3 to 6. We use the group resource to create the Group tomcat. We use the parameter value ensure => present to check if the tomcat Group exists, and if not we create it. We then assign a gid value to the Group. This assignment is not mandatory, but it helps manage Groups across multiple machines.

- Lines 8 to 17. We use the user resource to create the User tomcat. We use the ensure parameter to check if the tomcat User exists, and if not we create it. We assign gid and uid values to help manage Users. Then we set the tomcat user’s home directory path. We also designate the User password values. The password value '!' means that no usable password is set for the account, which keeps the lab simple. In practice you should implement strict password policies.

Note: The resource types user and group are built into Puppet. The puppetlabs-accounts module, which we installed on the Puppet Master earlier, provides additional Classes for managing and creating users and groups.

Task 5.4 Configure Tomcat

Add the following configuretomcat Class to configure tomcat.

class configuretomcat {

class { 'tomcat': }

require createuserandgroup

tomcat::instance { 'default':

catalina_home => '/var/lib/tomcat7',

install_from_source => false,

package_name => ['tomcat7','tomcat7-admin'],

}->

tomcat::config::server::tomcat_users {

'tomcat':

catalina_base => '/var/lib/tomcat7',

element => 'user',

password => 'password',

roles => ['manager-gui','manager-jmx','manager-script','manager-status'];

'tomcat7':

catalina_base => '/var/lib/tomcat7',

element => 'user',

password => 'password',

roles => ['manager-gui','manager-jmx','manager-script','manager-status'];

}->

tomcat::config::server::connector { 'tomcat7-http':

catalina_base => '/var/lib/tomcat7',

port => '9080',

protocol => 'HTTP/1.1',

connector_ensure => 'present',

server_config => '/etc/tomcat7/server.xml',

}->

tomcat::service { 'default':

use_jsvc => false,

use_init => true,

service_name => 'tomcat7',

}

}

Press ctrl + o, then enter to save the changes to the file without exiting.

Let’s examine the configuretomcat Class.

- Line 1. We create a Class (resource) called configuretomcat.

- Line 2. We invoke the tomcat resource from the Tomcat module we downloaded earlier.

- Line 3. We require the tomcat User and Group to be available, before we can configure Tomcat, so we mark the Class createuserandgroup as required.

- Lines 6 to 9. We install the Tomcat package from the Tomcat module we downloaded earlier. The package name is tomcat7, so we call it here. We designate the home directory where we will place our default installation. Then, we specify that it is not from source; this is required when installing from a downloaded package. We could install directly from a web source, if we wished. We also install the tomcat7-admin package. We will not use the tomcat7-admin package in this lab, but it can be used to provide the Tomcat Manager Web User Interface.

- Lines 13 to 23. We create two usernames for Tomcat to use. These are created within the Tomcat application for managing and configuring Tomcat. They are created within, and called from, the file /var/lib/tomcat7/conf/tomcat-users.xml. You can open the file, and view its contents, if you wish.

- Lines 26 to 31. We configure the Tomcat connector, define the protocol to use and the port number. We also ensure that the connector is present, to allow it to serve requests on the ports that we set. We also specify the connector properties for Puppet to write to the Tomcat server.xml file. Again, you can open the file, and view its contents, on the Node at /var/lib/tomcat7/conf/server.xml.

- Lines 35 to 38. We configure the Tomcat service, which is our default installation. As there is only one installation of Tomcat, we specify the default value that we are applying the configuration to. We ensure the service is running, and then start the service using the use_init value. Then, we specify the service name as tomcat7 for the PU MRP app.

Task 5.5 Deploy a WAR File

The PU MRP app is compiled into a .war file that Tomcat uses to serve pages. We need to specify a resource to deploy a .war file for the PU MRP app site.

Return to the Puppet SSH session, and edit the init.pp file by adding the following deploywar Class to the bottom of the file.

class deploywar {

require configuretomcat

tomcat::war { 'mrp.war':

catalina_base => '/var/lib/tomcat7',

war_source => 'https://raw.githubusercontent.com/Microsoft/PartsUnlimitedMRP/master/builds/mrp.war',

}

file { '/var/lib/tomcat7/webapps/':

path => '/var/lib/tomcat7/webapps/',

ensure => 'directory',

recurse => 'true',

mode => '777',

}

}

Press ctrl + o, then enter to save the changes to the file without exiting.

Let’s examine the deploywar Class.

- Line 1. We create a Class (resource) called deploywar.

- Line 2. We tell Puppet to ensure that configuretomcat has completed, before invoking the deploywar Class.

- Line 5. We set the catalina_base directory so that Puppet deploys the .war to our Tomcat service.

- Line 6. We use the Tomcat module’s war resource to deploy our .war from the war_source.

- Line 9. We declare a file resource, which is a built-in Puppet resource type. The file resource allows us to configure files on our system.

- Line 10. We specify the path to the web directory for our PU MRP app.

- Line 11. We ensure a directory exists on the path we specified, before applying our configuration.

- Line 12. We specify that the configuration should be applied recursively, so it will affect all files and sub-directories within the ~/tomcat7/webapps/ directory.

- Line 13. We designate a 777 permission on all the files and sub-directories within the ~/tomcat7/webapps/ directory. It is very important to set these permissions to allow read, write and execute permissions on all content within the ~/tomcat7/webapps/ directory. Setting 777 permissions is acceptable in this lab. You should always set more restrictive permissions according to each user, group and access level in a Production environment.
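As a hedged illustration of what more restrictive permissions could look like, the following shell sketch gives directories mode 750 and regular files mode 640 instead of 777 everywhere. The helper name restrict_tree and the two modes are example assumptions, not values from this lab. The right owner, group, and modes depend on which account runs Tomcat on your system, so verify that before applying anything similar to the real webapps directory.

```shell
#!/bin/sh
# Sketch: directories get 750 and regular files get 640, instead of 777 everywhere.
# restrict_tree is a hypothetical helper; the modes are example values only.
restrict_tree() {
    root="$1"
    find "$root" -type d -exec chmod 750 {} +
    find "$root" -type f -exec chmod 640 {} +
}

# Demonstrate on a scratch directory, not on the real webapps directory.
workdir="$(mktemp -d)"
mkdir -p "$workdir/webapps/mrp"
touch "$workdir/webapps/mrp/index.html"
chmod -R 777 "$workdir/webapps"
restrict_tree "$workdir/webapps"
ls -lR "$workdir/webapps"
rm -r "$workdir"
```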

Note: Typically, the following actions are needed to deploy a .war file.

- Copy the .war file to the Tomcat /var/lib/tomcat7/webapps directory.

- Set the correct permissions on the webapps directory.

- Specify values for unpackWARs and autoDeploy in the Tomcat server config file at /var/lib/tomcat7/conf/server.xml.

- List a valid and available user in the Tomcat file /var/lib/tomcat7/conf/tomcat-users.xml.

Tomcat will extract and then deploy the .war when the service starts. This is why we restart the service in the init.pp file. When you complete this lab, you can open and view the contents of the Tomcat files server.xml and tomcat-users.xml.

Task 5.6 Start the Ordering Service

The PU MRP app calls an Ordering Service, which is a REST API that manages orders in the Mongo DB. The Ordering Service is compiled to a .jar file. We need to copy the .jar file to our Node. The Node then runs the Ordering Service in the background, so that it can listen for REST API requests.

To ensure that the Ordering Service runs, add the following orderingservice Class to the bottom of the init.pp file.

class orderingservice {
  package { 'openjdk-7-jre':
    ensure => 'installed',
  }

  file { '/opt/mrp':
    ensure => 'directory'
  }->
  wget::fetch { 'orderingsvc':
    source      => 'https://raw.githubusercontent.com/Microsoft/PartsUnlimitedMRP/master/builds/ordering-service-0.1.0.jar',
    destination => '/opt/mrp/ordering-service.jar',
    cache_dir   => '/var/cache/wget',
    timeout     => 0,
  }->
  exec { 'stoporderingservice':
    command => "pkill -f ordering-service",
    path    => '/bin:/usr/bin:/usr/sbin',
    onlyif  => "pgrep -f ordering-service"
  }->
  exec { 'stoptomcat':
    command => 'service tomcat7 stop',
    path    => '/bin:/usr/bin:/usr/sbin',
    onlyif  => "test -f /etc/init.d/tomcat7",
  }->
  exec { 'orderservice':
    command => 'java -jar /opt/mrp/ordering-service.jar &',
    path    => '/usr/bin:/usr/sbin:/usr/lib/jvm/java-8-openjdk-amd64/bin',
  }->
  exec { 'wait':
    command => 'sleep 20',
    path    => '/bin',
    notify  => Tomcat::Service['default']
  }
}

Press ctrl + o, then enter to save the changes to the file without exiting.

Let’s examine the orderingservice Class.

- Line 1. We create a Class (resource) called orderingservice.

- Lines 2 to 4. We install the Java Runtime Environment (JRE) required to run the application using Puppet’s package resource.

- Lines 6 to 8. We ensure that the directory /opt/mrp exists (Puppet creates it if it does not exist).

- Lines 9 to 14. We wget the Ordering Service binary, and place it in /opt/mrp.

- Line 12. We specify a cache directory, to ensure that the file is only downloaded once.

- Lines 15 to 19. We stop the Ordering Service, but only if it is running already.

- Lines 20 to 24. We stop the tomcat7 service, but only if its init script /etc/init.d/tomcat7 exists (that is, only if Tomcat is installed).

- Lines 25 to 28. We start the Ordering Service.

- Lines 29 to 33. We sleep for 20 seconds, to give the Ordering Service time to start up, before notifying the tomcat service. The notification triggers a refresh on the service. Puppet will re-apply the state that we defined for the service (i.e. start it, if it is not running already).

Note: We need to wait after running the java command, since this service needs to be running before we can start Tomcat. Otherwise, Tomcat will occupy the port that the Ordering Service needs to listen on for incoming API requests.
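The fixed 20 second sleep is simple, but it guesses at the start-up time. As an alternative, here is a minimal sketch that polls until the Ordering Service accepts connections, using the exec resource's tries and try_sleep attributes. The port number 8080 and the use of nc (netcat) are assumptions, not part of the lab's init.pp; check which port your Ordering Service listens on, and that nc is installed on the Node, before trying it.

```puppet
# Sketch only: wait for the Ordering Service instead of sleeping a fixed time.
# Port 8080 and the availability of nc on the Node are assumptions.
exec { 'wait':
  command   => 'nc -z localhost 8080',  # exits 0 once the port accepts connections
  path      => '/bin:/usr/bin',
  tries     => 10,                      # run the check up to 10 times
  try_sleep => 2,                       # pause 2 seconds between attempts
  notify    => Tomcat::Service['default'],
}
```

If the port never opens, the exec resource fails and Puppet reports the error, which is easier to diagnose than a Tomcat service that starts before the Ordering Service is ready.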

Task 5.7 Complete the mrpapp Resource

Go to the top of the file init.pp. Modify the mrpapp Class by deleting the content that relates to the dummy.txt file which we tested earlier in the lab. Add the following.

class mrpapp {
  class { 'configuremongodb': }
  class { 'configurejava': }
  class { 'createuserandgroup': }
  class { 'configuretomcat': }
  class { 'deploywar': }
  class { 'orderingservice': }
}

Press ctrl + x, then y, and then enter to save your changes to the file.

The modifications to the mrpapp Class specify which Classes are needed by the init.pp file, in order to run our resources.

Note: After you have made all of the necessary modifications to the init.pp file, it should be identical to the completed init.pp file on Microsoft’s PU MRP GitHub repository. If you prefer, you can copy the init.pp file contents from GitHub and paste them into your local init.pp file.

Task 5.8 Configure .war file extraction permissions

Part of the configuration we specified in our init.pp file involves copying the .war file for our PU MRP app to the directory /var/lib/tomcat7/webapps.

Tomcat automatically extracts .war files from the webapps directory. However, in our lab we will set full read, write and execute permissions in the file war.pp, to ensure that the file extracts successfully.

Note: More details about configuration editing are available on the page Resource Type: file.

On the Puppet Master, run the following command to open the war.pp file for the Tomcat module in the Nano editor.

sudo nano /etc/puppetlabs/code/environments/production/modules/tomcat/manifests/war.pp

Modify the permissions at the bottom of the war.pp file, by changing the mode from mode => '0640' to mode => '0777'.

When you have finished editing, it should look like the following.

    file { "tomcat::war ${name}":
      ensure    => file,
      path      => "${_deployment_path}/${_war_name}",
      owner     => $user,
      group     => $group,
      mode      => '0777',
      subscribe => Archive["tomcat::war ${name}"],
    }
  }
}

Press ctrl + x, then y, and then enter to save your changes and close the file.

Let’s examine this section of the war.pp file.

- Line 1. Specifies the file name, which comes with the Tomcat module tomcat::war.

- Line 2. Ensures that the target exists as a regular file.

- Line 3. Defines the path to the web directory, which we previously set to /var/lib/tomcat7/webapps. This is where the .war file for our PU MRP app will be copied to, as we specified in the init.pp file.

- Lines 4 to 5. Set the User and Group owners of the file.

- Line 6. Sets the file permissions mode to 0777, which allows read, write and execute permissions to everyone. You should not use 777 permissions in a Production environment, because any user or process on the machine could then modify or replace the deployed files, which exposes your environment to malicious code.

Task 6: Run the Puppet Configuration on the Node

-

Re-run the Puppet Agent.

Return to, or re-establish, your SSH session on the Node/partsmrp VM. Force Puppet to update the Node’s configuration with the following command.

sudo puppet agent --test

Note: This first run may take a few moments, as there is a lot to download and install. The next time that you run the Puppet Agent, it will verify that the existing environment is configured correctly. This verification process will take less time than the first run, because the services will be installed and configured already.

-

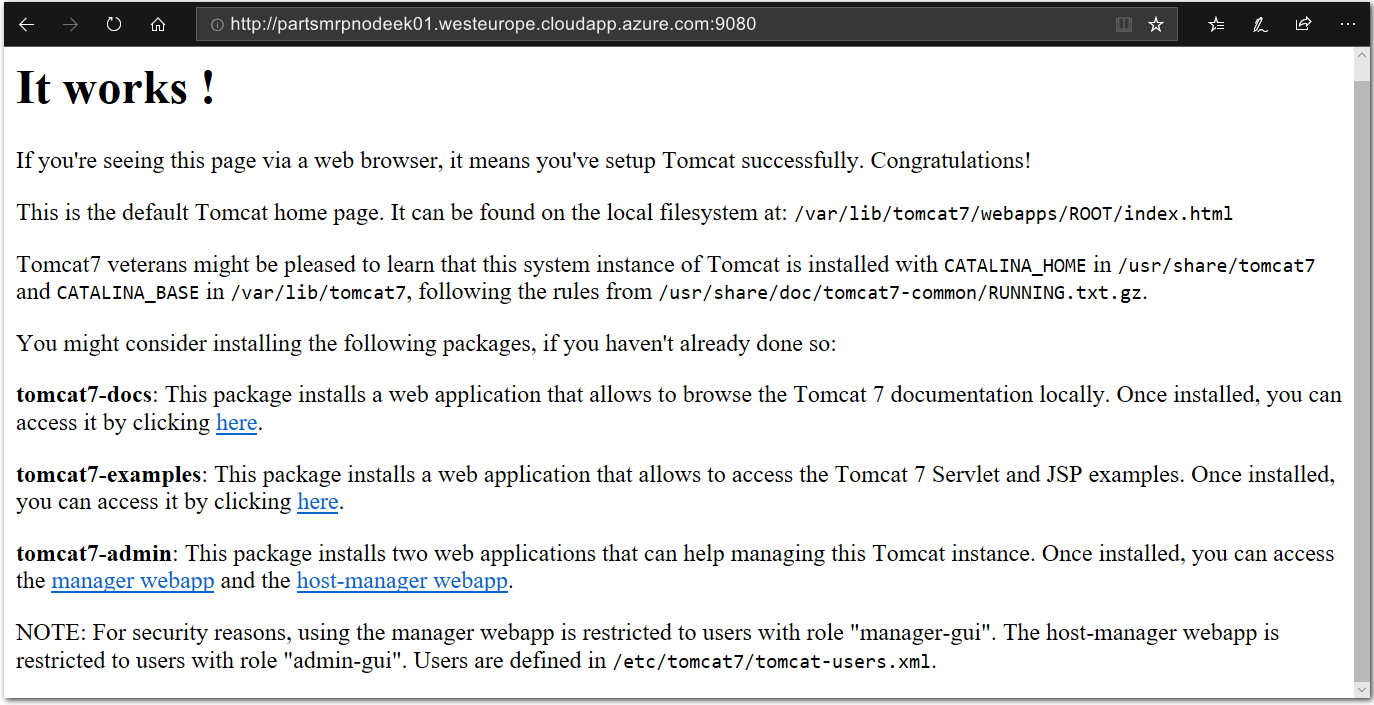

Verify that Tomcat is running correctly.

Append the port number 9080 to the DNS address URL for the Node/partsmrp VM, for example http://partsmrpnodeek01.westeurope.cloudapp.azure.com:9080

You can get the DNS address URL from the Public IP resource for the Node, in Azure Portal (just as you did when you got the URL of the Puppet Master earlier).

Open a web browser and browse to port 9080 on the Node/partsmrp VM. Once open in the web browser, you should see the Tomcat Confirmation webpage.

Note: Use the http protocol, and not https.

-

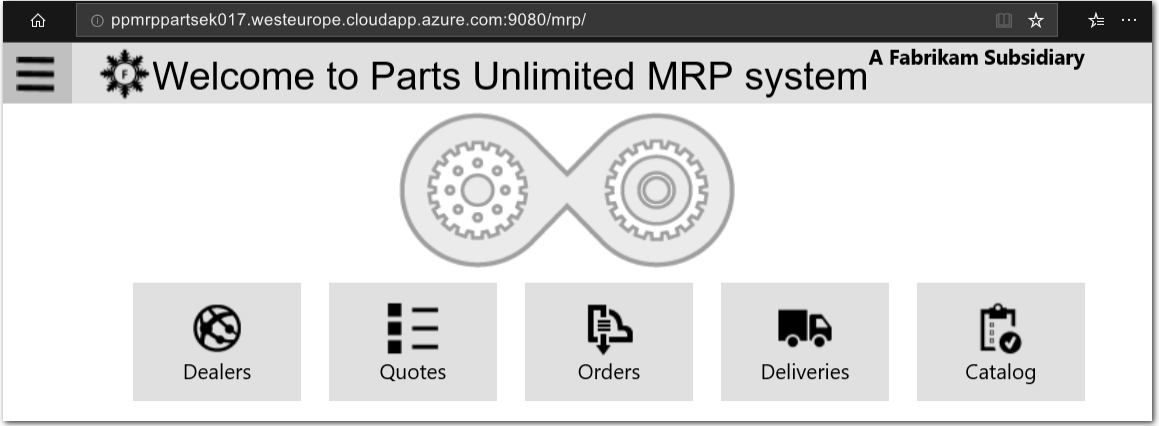

Verify that the PU MRP app is running correctly.

Check that the configuration is correct by opening a web browser to the PU MRP app. In your web browser, append /mrp to the end of the DNS address URL you used in Step 2. For example, http://partsmrpnodeek01.westeurope.cloudapp.azure.com:9080/mrp

You can also get the DNS name for the Node/partsmrp VM in Azure Portal.

The PU MRP app Welcome webpage should be displayed in your web browser.

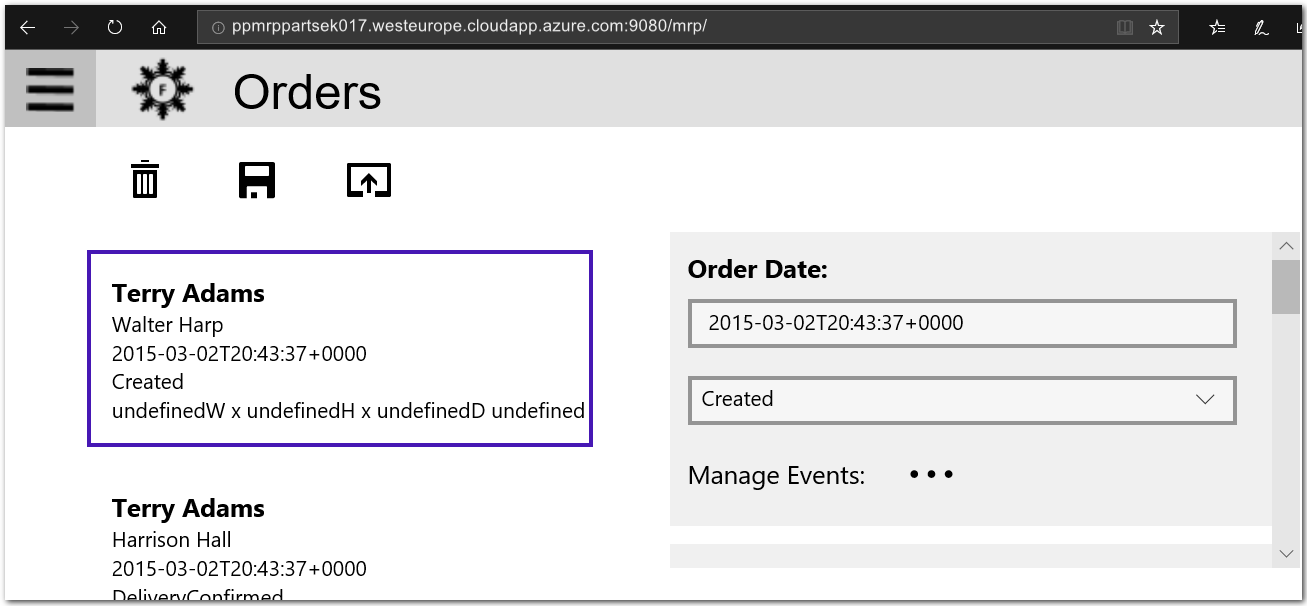

Explore the PU MRP app to confirm that it functions as intended. For example, select the Orders button, in your web browser, to view the Orders page.

Potential issues and troubleshooting

-

You may encounter errors on the first run of the configuration, related to a Java package used in the Mongo DB installation, as shown below. Re-run the configuration on the Node with the command sudo puppet agent --test --debug. The second run should complete without error. If more errors occur, the --debug switch will help you locate the problem(s). Work through the problems, and fix them one-by-one, until the configuration completes without error.

Error: Execution of '/usr/bin/apt-get -q -y -o DPkg::Options::=--force-confold install openjdk-7-jre' returned 100: Reading package lists... Building dependency tree... Reading state information... Package openjdk-7-jre is not available, but is referred to by another package. This may mean that the package is missing, has been obsoleted, or is only available from another source

-

Manually typing code or copying and pasting into a terminal from the code on this page may induce errors. After you have made all of the necessary modifications to the init.pp file, it should be identical to the completed init.pp file on Microsoft’s PU MRP GitHub repository. If you have not already done so, copy the init.pp file contents from GitHub and paste them into your local init.pp file. Then, try re-running the configuration on the Node with the command sudo puppet agent --test to resolve errors.

Summary

In this lab you created the Puppet infrastructure needed to deploy the PU MRP app, deployed the application to a Node and managed Configuration Drift. To do so you completed the following tasks:

- Provisioned a Puppet Master and Node in Azure using Azure Resource Manager (ARM) templates (both inside Linux Ubuntu Virtual Machines)

- Installed Puppet Agent on the Node

- Configured the Puppet Production Environment

- Tested the Production Environment Configuration

- Created a Puppet Program to describe the environment for the PU MRP app

- Ran the Puppet Configuration on the Node