This page was generated from

docs/examples/DataSet/Working-With-Pandas-and-XArray.ipynb.


Working with Pandas and XArray¶

This notebook demonstrates how Pandas and XArray can be used to work with the QCoDeS DataSet. It is not meant as a general introduction to Pandas and XArray. We refer to the official documentation for Pandas and XArray for this. This notebook requires that both Pandas and XArray are installed.

Setup¶

First we borrow an example from the measurement notebook to have some data to work with. We split the measurement in two so we can try merging it with Pandas.

[1]:

%matplotlib inline

from pathlib import Path

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import qcodes as qc

from qcodes.dataset import (

Measurement,

initialise_or_create_database_at,

load_or_create_experiment,

)

from qcodes.instrument_drivers.mock_instruments import (

DummyInstrument,

DummyInstrumentWithMeasurement,

)

[2]:

# preparatory mocking of physical setup

dac = DummyInstrument("dac", gates=["ch1", "ch2"])

dmm = DummyInstrumentWithMeasurement("dmm", setter_instr=dac)

station = qc.Station(dmm, dac)

[3]:

initialise_or_create_database_at(

Path.cwd().parent / "example_output" / "working_with_pandas.db"

)

exp = load_or_create_experiment(

experiment_name="working_with_pandas", sample_name="no sample"

)

[4]:

meas = Measurement(exp)

meas.register_parameter(dac.ch1) # register the first independent parameter

meas.register_parameter(dac.ch2) # register the second independent parameter

meas.register_parameter(

dmm.v2, setpoints=(dac.ch1, dac.ch2)

) # register the dependent one

[4]:

<qcodes.dataset.measurements.Measurement at 0x7fecac858a90>

We then perform a very basic experiment. To be able to demonstrate merging of datasets in Pandas we will perform the measurement in two parts.

[5]:

# run the first half of the 2D sweep: dac.ch1 from -1 up to (not including) 0
with meas.run() as datasaver:
    for v1 in np.linspace(-1, 0, 200, endpoint=False):
        for v2 in np.linspace(-1, 1, 201):
            dac.ch1(v1)
            dac.ch2(v2)
            val = dmm.v2.get()
            datasaver.add_result((dac.ch1, v1), (dac.ch2, v2), (dmm.v2, val))

dataset1 = datasaver.dataset

Starting experimental run with id: 1.

[6]:

# run the second half of the 2D sweep: dac.ch1 from 0 to 1 (inclusive)
with meas.run() as datasaver:
    for v1 in np.linspace(0, 1, 201):
        for v2 in np.linspace(-1, 1, 201):
            dac.ch1(v1)
            dac.ch2(v2)
            val = dmm.v2.get()
            datasaver.add_result((dac.ch1, v1), (dac.ch2, v2), (dmm.v2, val))

dataset2 = datasaver.dataset

Starting experimental run with id: 2.

Two methods exist for extracting data to Pandas DataFrames. to_pandas_dataframe exports all the data from the dataset into a single DataFrame. to_pandas_dataframe_dict returns the data as a dict mapping each measured (dependent) parameter to a DataFrame.

Please note that to_pandas_dataframe is only intended to be used when all dependent parameters have the same setpoints. If this is not the case for the DataSet, then to_pandas_dataframe_dict should be used.

[7]:

df1 = dataset1.to_pandas_dataframe()

df2 = dataset2.to_pandas_dataframe()
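
To illustrate the difference between the two export formats, the sketch below builds a dictionary shaped like the one to_pandas_dataframe_dict is described as returning (one DataFrame per dependent parameter) and joins it into a single DataFrame. The data is synthetic and the variable names are hypothetical. It does not call QCoDeS, and it assumes that every frame shares the same setpoint index.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for a dict of per-parameter DataFrames
# (made-up data, not produced by QCoDeS).
index = pd.MultiIndex.from_product(
    [np.linspace(-1, 0, 3), np.linspace(-1, 1, 5)],
    names=["dac_ch1", "dac_ch2"],
)
rng = np.random.default_rng(seed=0)
df_dict = {
    "dmm_v1": pd.DataFrame({"dmm_v1": rng.normal(size=len(index))}, index=index),
    "dmm_v2": pd.DataFrame({"dmm_v2": rng.normal(size=len(index))}, index=index),
}

# When every parameter shares the same setpoints, the frames line up on the
# index and can be joined column-wise into one DataFrame.
combined = pd.concat(list(df_dict.values()), axis=1)
print(combined.shape)
```

If the parameters had different setpoints, the column-wise join would introduce missing values, which is one reason to keep the frames separate in that case.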

Working with Pandas¶

Let’s first inspect the Pandas DataFrame. Note how both independent variables (the setpoints dac_ch1 and dac_ch2) are used for the index. Pandas refers to this as a MultiIndex. For visual clarity, we just look at the first N points of the dataset.

[8]:

N = 10

[9]:

df1[:N]

[9]:

| dac_ch1 | dac_ch2 | dmm_v2 |
|---|---|---|
| -1.0 | -1.00 | -0.000231 |
| | -0.99 | -0.001167 |
| | -0.98 | -0.000155 |
| | -0.97 | -0.000009 |
| | -0.96 | 0.000017 |
| | -0.95 | 0.000491 |
| | -0.94 | -0.000723 |
| | -0.93 | 0.000077 |
| | -0.92 | 0.000400 |
| | -0.91 | 0.000162 |
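
The MultiIndex structure can also be inspected programmatically. The following is a minimal sketch on a small synthetic frame (df_demo is a hypothetical stand-in for df1, with made-up values) showing how to read the level names and the values of one level.

```python
import numpy as np
import pandas as pd

# Small synthetic grid standing in for df1 (values are made up).
ch1 = np.array([-1.0, -0.5])
ch2 = np.array([-1.0, 0.0, 1.0])
index = pd.MultiIndex.from_product([ch1, ch2], names=["dac_ch1", "dac_ch2"])
df_demo = pd.DataFrame({"dmm_v2": np.arange(6, dtype=float)}, index=index)

print(df_demo.index.names)    # names of the index levels
print(df_demo.index.nlevels)  # number of levels in the MultiIndex
# The values of a single level can be read back as an ordinary array.
ch2_values = df_demo.index.get_level_values("dac_ch2").to_numpy()
print(ch2_values)
```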

We can also reset the index to return a simpler view where all data points are simply indexed by a running counter. As we shall see below this can be needed in some situations. Note that calling reset_index leaves the original dataframe untouched.

[10]:

df1.reset_index()[0:N]

[10]:

| | dac_ch1 | dac_ch2 | dmm_v2 |

|---|---|---|---|

| 0 | -1.0 | -1.00 | -0.000231 |

| 1 | -1.0 | -0.99 | -0.001167 |

| 2 | -1.0 | -0.98 | -0.000155 |

| 3 | -1.0 | -0.97 | -0.000009 |

| 4 | -1.0 | -0.96 | 0.000017 |

| 5 | -1.0 | -0.95 | 0.000491 |

| 6 | -1.0 | -0.94 | -0.000723 |

| 7 | -1.0 | -0.93 | 0.000077 |

| 8 | -1.0 | -0.92 | 0.000400 |

| 9 | -1.0 | -0.91 | 0.000162 |
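
The claim that reset_index leaves the original untouched can be checked directly. This sketch uses a small synthetic frame (df_demo is hypothetical, not the measured data).

```python
import pandas as pd

index = pd.MultiIndex.from_tuples(
    [(-1.0, -1.0), (-1.0, 0.0), (0.0, -1.0), (0.0, 0.0)],
    names=["dac_ch1", "dac_ch2"],
)
df_demo = pd.DataFrame({"dmm_v2": [0.1, 0.2, 0.3, 0.4]}, index=index)

flat = df_demo.reset_index()  # returns a new DataFrame

# The original keeps its MultiIndex; the new frame has a running integer
# index and the former index levels as ordinary columns.
print(type(df_demo.index).__name__)
print(list(flat.columns))
```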

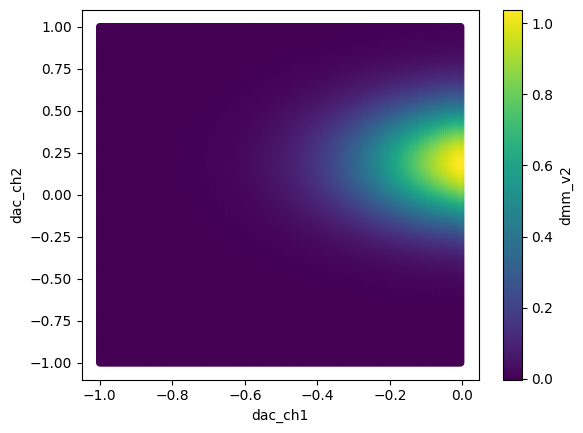

Pandas has built-in support for various forms of plotting. This does not, however, support MultiIndex at the moment so we use reset_index to make the data available for plotting.

[11]:

df1.reset_index().plot.scatter("dac_ch1", "dac_ch2", c="dmm_v2")

[11]:

<Axes: xlabel='dac_ch1', ylabel='dac_ch2'>

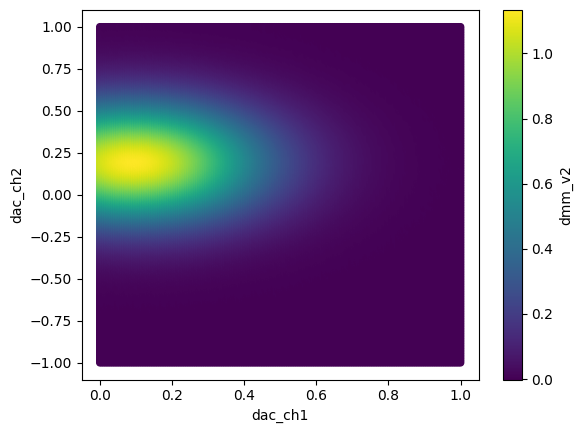

Similarly, for the other dataframe:

[12]:

df2.reset_index().plot.scatter("dac_ch1", "dac_ch2", c="dmm_v2")

[12]:

<Axes: xlabel='dac_ch1', ylabel='dac_ch2'>

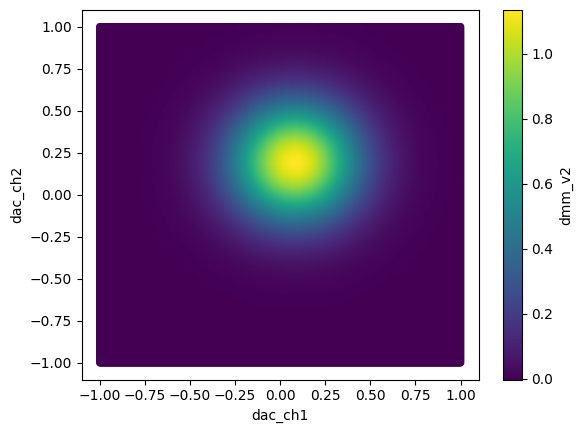
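
As an alternative to reset_index, data on a regular grid can be reshaped into a 2D table with unstack, which moves one index level into the columns. This is a sketch on synthetic data (df_demo is hypothetical) and is not part of the original workflow.

```python
import numpy as np
import pandas as pd

ch1 = np.array([-1.0, 0.0, 1.0])
ch2 = np.array([-1.0, 1.0])
index = pd.MultiIndex.from_product([ch1, ch2], names=["dac_ch1", "dac_ch2"])
df_demo = pd.DataFrame({"dmm_v2": np.arange(6, dtype=float)}, index=index)

# Move the inner index level into the columns: rows are dac_ch1 values,
# columns are dac_ch2 values, and the cells hold dmm_v2.
grid = df_demo["dmm_v2"].unstack("dac_ch2")
print(grid)
```

A table in this form can be handed to 2D plotting routines that expect a matrix of values, for example via grid.to_numpy().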

Merging two dataframes with the same labels is fairly simple.

[13]:

df = pd.concat([df1, df2], sort=True)
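
When merging sweeps like this, it can be useful to confirm that the two halves do not share any setpoints. The sketch below uses small synthetic frames (make_frame is a hypothetical helper) and the verify_integrity option of pd.concat, which raises a ValueError on duplicate index entries.

```python
import numpy as np
import pandas as pd


def make_frame(ch1_values):
    """Build a small synthetic frame indexed by (dac_ch1, dac_ch2)."""
    index = pd.MultiIndex.from_product(
        [ch1_values, [-1.0, 1.0]], names=["dac_ch1", "dac_ch2"]
    )
    return pd.DataFrame({"dmm_v2": np.zeros(len(index))}, index=index)


left = make_frame([-1.0, -0.5])  # covers dac_ch1 < 0
right = make_frame([0.0, 0.5])   # covers dac_ch1 >= 0

# verify_integrity=True raises a ValueError if the two halves share any
# (dac_ch1, dac_ch2) setpoint, which would indicate overlapping sweeps.
merged = pd.concat([left, right], verify_integrity=True).sort_index()
print(len(merged), merged.index.is_monotonic_increasing)
```

In the measurement above, the first sweep uses endpoint=False so that dac_ch1 = 0 appears only in the second dataset.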

[14]:

df.reset_index().plot.scatter("dac_ch1", "dac_ch2", c="dmm_v2")

[14]:

<Axes: xlabel='dac_ch1', ylabel='dac_ch2'>

It is also possible to select a subset of data from the dataframe based on the x and y values.

[15]:

df.loc[(slice(-1, -0.95), slice(-1, -0.97)), :]

[15]:

| dac_ch1 | dac_ch2 | dmm_v2 |
|---|---|---|
| -1.000 | -1.00 | -0.000231 |
| | -0.99 | -0.001167 |
| | -0.98 | -0.000155 |
| | -0.97 | -0.000009 |
| -0.995 | -1.00 | 0.000187 |
| | -0.99 | 0.000167 |
| | -0.98 | 0.000014 |
| | -0.97 | 0.000808 |
| -0.990 | -1.00 | 0.000225 |
| | -0.99 | -0.000146 |
| | -0.98 | 0.000413 |
| | -0.97 | -0.000377 |
| -0.985 | -1.00 | 0.000143 |
| | -0.99 | 0.000566 |
| | -0.98 | -0.000341 |
| | -0.97 | 0.000267 |
| -0.980 | -1.00 | 0.000197 |
| | -0.99 | -0.000249 |
| | -0.98 | 0.000323 |
| | -0.97 | -0.000137 |
| -0.975 | -1.00 | 0.000347 |
| | -0.99 | -0.000503 |
| | -0.98 | -0.000992 |
| | -0.97 | -0.000328 |
| -0.970 | -1.00 | 0.000288 |
| | -0.99 | -0.000111 |
| | -0.98 | -0.000799 |
| | -0.97 | -0.000024 |
| -0.965 | -1.00 | -0.000133 |
| | -0.99 | -0.001359 |
| | -0.98 | -0.000094 |
| | -0.97 | -0.000777 |
| -0.960 | -1.00 | 0.000506 |
| | -0.99 | -0.000457 |
| | -0.98 | -0.000201 |
| | -0.97 | 0.000555 |
| -0.955 | -1.00 | 0.000030 |
| | -0.99 | -0.000222 |
| | -0.98 | -0.000334 |
| | -0.97 | 0.000537 |
| -0.950 | -1.00 | 0.000243 |
| | -0.99 | 0.000699 |
| | -0.98 | 0.000315 |
| | -0.97 | -0.000333 |
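
The same kind of selection can be written with pd.IndexSlice, which some find easier to read than nested slice objects. This is a sketch on synthetic data (df_demo is hypothetical). Label-based range slicing on a MultiIndex generally requires a sorted index, which is why the frame is sorted first.

```python
import numpy as np
import pandas as pd

ch1 = np.array([-1.0, -0.5, 0.0, 0.5])
ch2 = np.array([-1.0, 0.0, 1.0])
index = pd.MultiIndex.from_product([ch1, ch2], names=["dac_ch1", "dac_ch2"])
df_demo = pd.DataFrame({"dmm_v2": np.arange(12, dtype=float)}, index=index)

# Range slicing by label on a MultiIndex needs a lexsorted index.
df_demo = df_demo.sort_index()

idx = pd.IndexSlice
# Equivalent to (slice(-1.0, -0.5), slice(-1.0, 0.0)); both bounds are inclusive.
subset = df_demo.loc[idx[-1.0:-0.5, -1.0:0.0], :]
print(subset)
```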

Working with XArray¶

In many cases when working with data on rectangular grids it may be more convenient to export the data to an XArray Dataset or DataArray. This is especially true when working in a multi-dimensional parameter space.

Let’s set up and rerun the above measurement with the added dependent parameter dmm.v1.

[16]:

meas.register_parameter(

dmm.v1, setpoints=(dac.ch1, dac.ch2)

) # register the 2nd dependent parameter

[16]:

<qcodes.dataset.measurements.Measurement at 0x7fecac858a90>

[17]:

# run a 2D sweep
with meas.run() as datasaver:
    for v1 in np.linspace(-1, 1, 200):
        for v2 in np.linspace(-1, 1, 201):
            dac.ch1(v1)
            dac.ch2(v2)
            val1 = dmm.v1.get()
            val2 = dmm.v2.get()
            datasaver.add_result(
                (dac.ch1, v1), (dac.ch2, v2), (dmm.v1, val1), (dmm.v2, val2)
            )

dataset3 = datasaver.dataset

Starting experimental run with id: 3.

The QCoDeS DataSet can be converted directly to an XArray Dataset using the to_xarray_dataset method. This method exports the data from the measured (dependent) parameters to an XArray Dataset. If you are only interested in individual parameters, the to_xarray_dataarray_dict method instead returns a dictionary of XArray DataArrays. For convenience we will access the DataArrays from XArray’s Dataset directly.

Please note that to_xarray_dataset is only intended to be used when all dependent parameters have the same setpoints. If this is not the case for the DataSet, then to_xarray_dataarray_dict should be used.

[18]:

xaDataSet = dataset3.to_xarray_dataset()

[19]:

xaDataSet

[19]:

<xarray.Dataset> Size: 646kB

Dimensions: (dac_ch1: 200, dac_ch2: 201)

Coordinates:

* dac_ch1 (dac_ch1) float64 2kB -1.0 -0.9899 -0.9799 ... 0.9799 0.9899 1.0

* dac_ch2 (dac_ch2) float64 2kB -1.0 -0.99 -0.98 -0.97 ... 0.97 0.98 0.99 1.0

Data variables:

dmm_v1 (dac_ch1, dac_ch2) float64 322kB 6.041 6.094 6.26 ... 4.091 3.939

dmm_v2 (dac_ch1, dac_ch2) float64 322kB -0.0004148 ... 0.0003498

Attributes: (12/14)

ds_name: results

sample_name: no sample

exp_name: working_with_pandas

snapshot: {"station": {"instruments": {"dmm": {"functions...

guid: 272fad7c-0000-0000-0000-019cc29c503d

run_timestamp: 2026-03-06 10:05:50

... ...

captured_counter: 3

run_id: 3

run_description: {"version": 3, "interdependencies": {"paramspec...

parent_dataset_links: []

run_timestamp_raw: 1772791550.020074

completed_timestamp_raw: 1772791555.7310505

As mentioned above, it’s also possible to work with an XArray DataArray directly. A DataArray can only contain a single dependent variable and can be obtained from the Dataset by indexing with the parameter name.

[20]:

xaDataArray = xaDataSet["dmm_v2"] # or xaDataSet.dmm_v2

[21]:

xaDataArray

[21]:

<xarray.DataArray 'dmm_v2' (dac_ch1: 200, dac_ch2: 201)> Size: 322kB

array([[-4.14770130e-04, -4.70163863e-04, 9.09245476e-05, ...,

-1.03562077e-04, -1.28038324e-04, -3.28774027e-04],

[-4.99982161e-04, -2.05751504e-04, -7.88074273e-04, ...,

4.58749495e-04, 2.51684413e-05, -3.22510200e-04],

[-1.17758310e-03, 8.06600627e-04, -1.75453714e-04, ...,

4.30869248e-04, 9.83160027e-04, -6.53817618e-04],

...,

[-4.18065981e-04, -7.72577742e-06, 2.39995085e-05, ...,

-2.84537128e-05, -1.26181906e-04, -3.86071179e-04],

[-1.70514168e-04, 2.14080469e-04, 6.11640796e-04, ...,

-6.97273572e-04, 3.63848029e-04, 5.75025153e-05],

[-6.32275291e-04, -4.30547822e-04, -3.40180413e-05, ...,

-3.59981064e-04, -3.54526309e-04, 3.49793045e-04]],

shape=(200, 201))

Coordinates:

* dac_ch1 (dac_ch1) float64 2kB -1.0 -0.9899 -0.9799 ... 0.9799 0.9899 1.0

* dac_ch2 (dac_ch2) float64 2kB -1.0 -0.99 -0.98 -0.97 ... 0.97 0.98 0.99 1.0

Attributes:

name: dmm_v2

paramtype: numeric

label: Gate v2

unit: V

inferred_from: []

depends_on: ['dac_ch1', 'dac_ch2']

units: V

long_name: Gate v2

[22]:

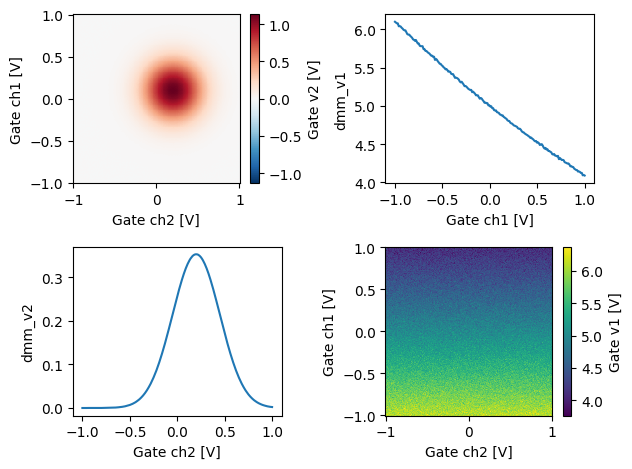

fig, ax = plt.subplots(2, 2)

xaDataSet.dmm_v2.plot(ax=ax[0, 0])

xaDataSet.dmm_v1.plot(ax=ax[1, 1])

xaDataSet.dmm_v2.mean(dim="dac_ch1").plot(ax=ax[1, 0])

xaDataSet.dmm_v1.mean(dim="dac_ch2").plot(ax=ax[0, 1])

fig.tight_layout()

Above we demonstrated a few ways to work with the data in a DataArray. For instance, the DataArray can be plotted directly, and the mean along a dimension, or a specific row or column, can also be extracted and plotted.
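
Selecting a row or column and averaging along a dimension can be sketched on a small synthetic DataArray. The array da below is hypothetical (its values are simply dac_ch1 + dac_ch2) and stands in for xaDataSet.dmm_v2.

```python
import numpy as np
import xarray as xr

ch1 = np.linspace(-1, 1, 5)
ch2 = np.linspace(-1, 1, 3)
# Synthetic 2D data: each value is simply dac_ch1 + dac_ch2.
values = ch1[:, np.newaxis] + ch2[np.newaxis, :]
da = xr.DataArray(
    values,
    coords={"dac_ch1": ch1, "dac_ch2": ch2},
    dims=("dac_ch1", "dac_ch2"),
    name="dmm_v2",
)

row = da.sel(dac_ch1=0.0)                        # one row, selected by coordinate value
column = da.isel(dac_ch2=0)                      # one column, selected by position
nearest = da.sel(dac_ch1=0.4, method="nearest")  # closest grid point to 0.4
averaged = da.mean(dim="dac_ch1")                # average over dac_ch1

print(row.values, column.values, float(nearest.dac_ch1), averaged.values)
```

Each of these results is itself a DataArray, so calling .plot() on it works in the same way as in the cell above.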

Working with XArray on non-gridded data¶

Sometimes your data does not fit well on a regular grid. Perhaps you are sweeping two parameters at the same time, or you are measuring at random points.

[23]:

# sweep both parameters at the same time, so the points do not form a grid
with meas.run() as datasaver:
    for v1, v2 in zip(np.linspace(-1, 1, 200), np.linspace(-1, 1, 201)):
        dac.ch1(v1)
        dac.ch2(v2)
        val1 = dmm.v1.get()
        val2 = dmm.v2.get()
        datasaver.add_result(
            (dac.ch1, v1), (dac.ch2, v2), (dmm.v1, val1), (dmm.v2, val2)
        )

dataset4 = datasaver.dataset

Starting experimental run with id: 4.

[24]:

xaDataSet = dataset4.to_xarray_dataset()

When the data does not lie on a grid, as here, QCoDeS exports it using an XArray MultiIndex.

[25]:

xaDataSet

[25]:

<xarray.Dataset> Size: 8kB

Dimensions: (multi_index: 200)

Coordinates:

* multi_index (multi_index) object 2kB MultiIndex

* dac_ch1 (multi_index) float64 2kB -1.0 -0.9899 -0.9799 ... 0.9899 1.0

* dac_ch2 (multi_index) float64 2kB -1.0 -0.99 -0.98 ... 0.97 0.98 0.99

Data variables:

dmm_v1 (multi_index) float64 2kB 6.006 6.089 6.067 ... 4.178 4.111

dmm_v2 (multi_index) float64 2kB -3.422e-05 -0.0003267 ... -0.0001367

Attributes: (12/14)

ds_name: results

sample_name: no sample

exp_name: working_with_pandas

snapshot: {"station": {"instruments": {"dmm": {"functions...

guid: 254c3ef6-0000-0000-0000-019cc29c6a05

run_timestamp: 2026-03-06 10:05:56

... ...

captured_counter: 4

run_id: 4

run_description: {"version": 3, "interdependencies": {"paramspec...

parent_dataset_links: []

run_timestamp_raw: 1772791556.6185071

completed_timestamp_raw: 1772791556.6416075

Note how the expected coordinates can be seen above along with a coordinate called multi_index.

QCoDeS has built-in support for exporting such datasets to NetCDF files, using cf_xarray to compress and decompress the data. Note, however, that if you manually export or import such XArray datasets to or from NetCDF, you are responsible for compressing and decompressing as needed.
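
To make the MultiIndex layout more concrete, the sketch below builds a comparable structure from synthetic data and then flattens it again. It does not use QCoDeS or cf_xarray. The reset_index call is swapped in as a simpler, plainly different way to obtain a Dataset without a MultiIndex, and all variable names are hypothetical.

```python
import numpy as np
import pandas as pd
import xarray as xr

# Synthetic "diagonal" sweep: both setpoints change together, so the points
# do not form a rectangular grid.
n = 5
ch1 = np.linspace(-1, 1, n)
ch2 = np.linspace(0, 2, n)
ds = xr.Dataset(
    {"dmm_v1": ("multi_index", np.arange(n, dtype=float))},
    coords={
        "dac_ch1": ("multi_index", ch1),
        "dac_ch2": ("multi_index", ch2),
    },
)

# Combine the two coordinates into a MultiIndex along the single dimension.
ds_multi = ds.set_index(multi_index=["dac_ch1", "dac_ch2"])
print(isinstance(ds_multi.indexes["multi_index"], pd.MultiIndex))

# Turn the index levels back into plain coordinates. The flat form holds the
# same values without a MultiIndex object.
ds_flat = ds_multi.reset_index("multi_index")
print(ds_flat.sizes["multi_index"])
```

A Dataset flattened this way has no MultiIndex left to serialize, but the index has to be rebuilt with set_index after loading if MultiIndex-based selection is needed again.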